Magic 1-For-1: An Efficient Open-Source Video Generation Project That Claims to Generate a Minute of Video in One Minute

General Introduction

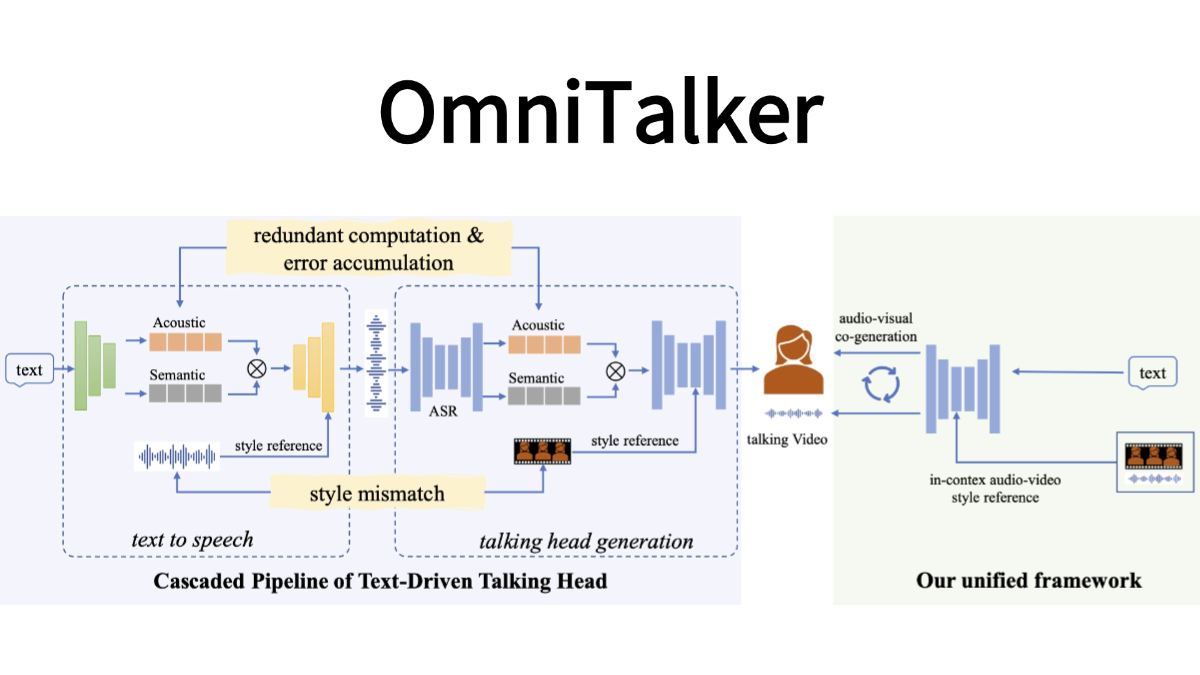

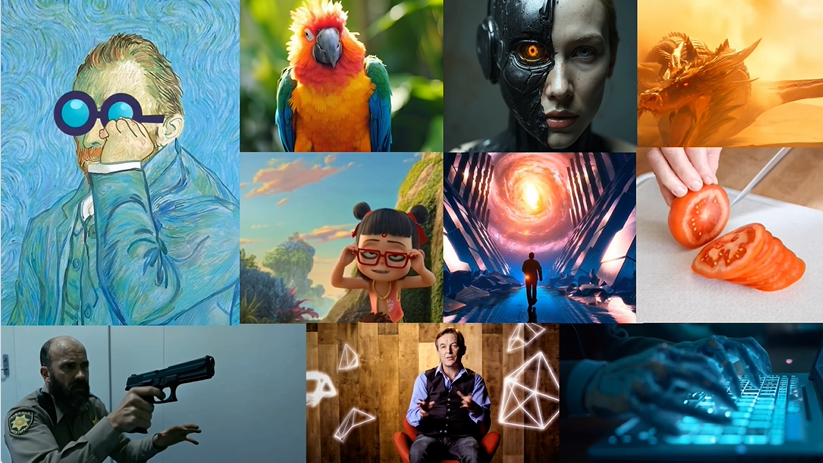

Magic 1-For-1 is an efficient video generation model designed to optimize memory usage and reduce inference latency. It decomposes the text-to-video generation task into two subtasks, text-to-image generation and image-to-video generation, which makes training and distillation more efficient. The authors report that Magic 1-For-1 can generate a high-quality one-minute video clip in less than a minute, making it suitable for scenarios where short videos must be produced quickly. The project was developed by researchers at Peking University, Hedra Inc. and NVIDIA, and its code and model weights are publicly available on GitHub.

Feature List

- Text-to-Image Generation: Converts the input text description into an image.

- Image-to-Video Generation: Converts the generated image into a video clip.

- Efficient Memory Usage: Optimizes memory usage for single-GPU environments.

- Fast Inference: Reduces inference latency for fast video generation.

- Model Weights Download: Provides links to download pre-trained model weights.

- Environment Setup: Provides a detailed guide to environment setup and dependency installation.

Usage Guide

Environment Setup

- Install git-lfs:

```shell
sudo apt-get install git-lfs
```

- Create and activate a Conda environment:

```shell
conda create -n video_infer python=3.9
conda activate video_infer
```

- Install project dependencies:

```shell
pip install -r requirements.txt
```
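The setup steps above can be collected into one reusable script. This is a minimal sketch, assuming a Debian/Ubuntu host (for `apt-get`), an already installed Conda, and the repository root (where `requirements.txt` lives) as the working directory. The function names `require_cmds` and `setup_video_infer_env` are hypothetical, and the `git lfs install` line is an extra step not in the original instructions: it is the standard Git LFS command that enables LFS for the current user.

```shell
#!/usr/bin/env bash
# Minimal sketch: the article's setup steps as reusable functions.
# Assumes Debian/Ubuntu (apt-get), an installed Conda, and the repository root as the
# working directory. Function names are hypothetical, not part of Magic 1-For-1.

# Print any commands missing from PATH and return non-zero if there are some.
require_cmds() {
  local missing=() cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || missing+=("$cmd")
  done
  if (( ${#missing[@]} > 0 )); then
    echo "missing commands: ${missing[*]}" >&2
    return 1
  fi
  return 0
}

# Run the documented steps in order, stopping at the first failure.
# Source this file and call the function so the activated environment persists.
setup_video_infer_env() {
  require_cmds sudo apt-get conda || return 1
  sudo apt-get install -y git-lfs || return 1
  git lfs install || return 1                  # enable Git LFS for this user (extra step)
  conda create -y -n video_infer python=3.9 || return 1
  eval "$(conda shell.bash hook)" || return 1  # lets `conda activate` work inside scripts
  conda activate video_infer || return 1
  pip install -r requirements.txt
}
```

For example, save it as `setup_env.sh` (the file name is arbitrary) and run `source setup_env.sh && setup_video_infer_env`; sourcing rather than executing the file keeps `video_infer` active in your shell afterwards.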

Download Model Weights

- Create a directory to store the pre-trained weights:

```shell
mkdir pretrained_weights
```

- Download Magic 1-For-1 weights:

```shell
wget -O pretrained_weights/magic_1_for_1_weights.pth <model_weights_url>
```

- Download the required components from Hugging Face:

```shell
huggingface-cli download tencent/HunyuanVideo --local_dir pretrained_weights --local_dir_use_symlinks False
huggingface-cli download xtuner/llava-llama-3-8b-v1_1-transformers --local_dir pretrained_weights/text_encoder --local_dir_use_symlinks False
huggingface-cli download openai/clip-vit-large-patch14 --local_dir pretrained_weights/text_encoder_2 --local_dir_use_symlinks False
```
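The download steps can likewise be scripted, together with a quick check that the expected paths exist afterwards. This is a minimal sketch: `download_all_weights` and `check_weights_layout` are hypothetical names, and the Magic 1-For-1 URL is taken as an argument because the article leaves it as `<model_weights_url>`. The check covers only the paths the commands above create; the files inside the HunyuanVideo download are not checked, since the article does not list them.

```shell
#!/usr/bin/env bash
# Minimal sketch: the article's download steps plus a layout check.
# The Magic 1-For-1 URL is passed in because the article leaves it as <model_weights_url>.
# Function names are hypothetical.

download_all_weights() {
  local weights_url="$1"
  if [[ -z "$weights_url" ]]; then
    echo "usage: download_all_weights <model_weights_url>" >&2
    return 2
  fi
  mkdir -p pretrained_weights || return 1
  wget -O pretrained_weights/magic_1_for_1_weights.pth "$weights_url" || return 1
  huggingface-cli download tencent/HunyuanVideo \
    --local_dir pretrained_weights --local_dir_use_symlinks False || return 1
  huggingface-cli download xtuner/llava-llama-3-8b-v1_1-transformers \
    --local_dir pretrained_weights/text_encoder --local_dir_use_symlinks False || return 1
  huggingface-cli download openai/clip-vit-large-patch14 \
    --local_dir pretrained_weights/text_encoder_2 --local_dir_use_symlinks False
}

# Check only the paths created by the commands above.
check_weights_layout() {
  local root="${1:-pretrained_weights}" status=0 d
  if [[ ! -s "$root/magic_1_for_1_weights.pth" ]]; then
    echo "missing or empty file: $root/magic_1_for_1_weights.pth" >&2
    status=1
  fi
  for d in text_encoder text_encoder_2; do
    if [[ ! -d "$root/$d" ]] || [[ -z "$(ls -A "$root/$d")" ]]; then
      echo "missing or empty directory: $root/$d" >&2
      status=1
    fi
  done
  return "$status"
}
```

After downloading, `check_weights_layout` (or `check_weights_layout path/to/weights`) returns zero only if the weights file is non-empty and both text-encoder directories contain files.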

Generate Video

- Run the following command for text- and image-to-video generation:

```shell
python test_ti2v.py --config configs/test/text_to_video/4_step_ti2v.yaml --quantization False
```

- Or use the provided script:

```shell
bash scripts/generate_video.sh
```
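To make the documented command easier to reuse, it can be wrapped in a function that checks for the config file first and can print the command instead of running it. This is a minimal sketch: `run_ti2v` and the `DRY_RUN` variable are hypothetical, and it assumes you run it from the repository root. The second argument is passed straight to `--quantization`; the article only shows `False`, so whether other values such as `True` are accepted is an assumption to verify against the project's code.

```shell
#!/usr/bin/env bash
# Minimal sketch wrapping the documented command:
#   python test_ti2v.py --config configs/test/text_to_video/4_step_ti2v.yaml --quantization False
# run_ti2v and DRY_RUN are hypothetical names, not part of the project.

run_ti2v() {
  local config="${1:-configs/test/text_to_video/4_step_ti2v.yaml}"
  local quantization="${2:-False}"
  if [[ ! -f "$config" ]]; then
    echo "config not found: $config (run from the repository root)" >&2
    return 1
  fi
  local cmd=(python test_ti2v.py --config "$config" --quantization "$quantization")
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "${cmd[*]}"  # print the command instead of running it
    return 0
  fi
  "${cmd[@]}"
}
```

`DRY_RUN=1 run_ti2v` prints the exact command that would run with the default config, and `run_ti2v path/to/other.yaml` runs the script with a different config file.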

Detailed Workflow

- Text-to-Image Generation: Enter a text description, and the model generates a corresponding image.

- Image-to-Video Generation: The generated image is passed to the video generation module, which turns it into a short video clip.

- Efficient Memory Usage: Optimized memory usage lets the model run efficiently even on a single GPU.

- Fast Inference: Reduced inference latency enables fast video generation, which suits scenarios where short videos must be produced quickly.
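Since the memory optimizations target single-GPU setups, a quick pre-flight check of available GPU memory can help before starting a run. This minimal sketch uses the CSV query output of `nvidia-smi`; `largest_mib` and `gpu_memory_ok` are hypothetical helpers, and because the article states no memory requirement, the threshold you pass is your own assumption.

```shell
#!/usr/bin/env bash
# Minimal sketch: compare the largest GPU's total memory (MiB) with a user-chosen threshold.
# The article gives no memory requirement; the threshold is an assumption you supply.

# Read one integer (MiB) per line on stdin and print the largest.
largest_mib() {
  sort -n | tail -n 1
}

# Print the largest GPU memory and return non-zero if it is below $1 MiB.
gpu_memory_ok() {
  local min_mib="$1" best
  command -v nvidia-smi >/dev/null 2>&1 || { echo "nvidia-smi not found" >&2; return 1; }
  best=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | largest_mib)
  [[ -n "$best" ]] || return 1
  echo "largest GPU memory: ${best} MiB"
  (( best >= min_mib ))
}
```

For example, `gpu_memory_ok 24000` reports the largest GPU's memory and fails if it is under 24000 MiB; the number here is only an illustration.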

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
