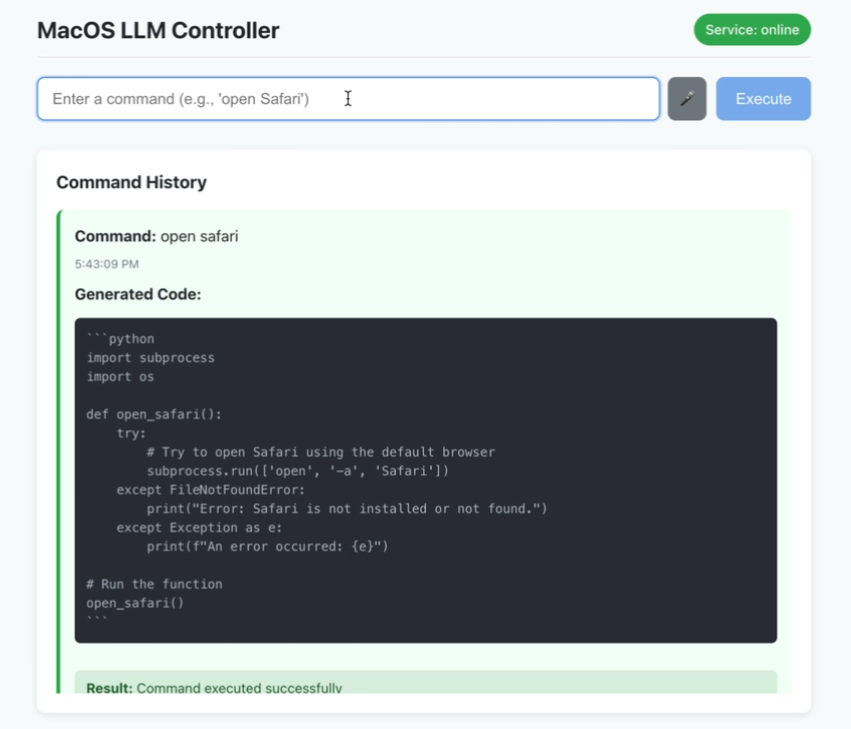

Open source tool to control macOS operations with voice and text

General Introduction

MacOS LLM Controller is an open-source desktop application, hosted on GitHub, that lets users run macOS system operations by entering natural-language commands via voice or text. It is based on the Llama-3.2-3B-Instruct model and uses LlamaStack to generate Python code that calls macOS system APIs to accomplish tasks. Users can say "open terminal" or type "create folder", and the tool parses and executes the operation automatically. The project uses a React front end and a Flask back end, and supports real-time status feedback and command history. It is aimed at macOS users who want to work more efficiently, especially developers and people with accessibility needs. The code is publicly available, and the community can contribute improvements.

Feature List

- Voice command recognition: Speak into the microphone and have your speech translated into macOS commands in real time.

- Text command input: Type natural-language instructions to perform system operations.

- Command history: Displays the success or failure status of executed commands.

- Real-time status feedback: The interface dynamically updates the status of service connections and command execution.

- Python code generation: Uses LlamaStack to turn instructions into executable code that calls macOS APIs.

- Local operation: All processing is done locally to protect user privacy.

- Security check: Performs basic security validation of the generated Python code.

Usage Guide

Installation process

MacOS LLM Controller requires a configured environment on macOS, including the front end, the back end, and the LlamaStack model. The detailed installation steps are as follows:

1. Environment preparation

Ensure that the system meets the following requirements:

- Operating system: macOS (the project is designed for macOS and does not explicitly support other systems).

- Node.js: Version 16 or higher, including npm. It can be downloaded from the Node.js website.

- Python: Version 3.8 or higher, including pip. It can be downloaded from the Python website.

- Ollama: For running the Llama model. Install it from the official Ollama website.

- Docker: For running LlamaStack. Install Docker Desktop.

- Hardware: 16GB or more of RAM and a multi-core CPU are recommended to support model inference.
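
The requirements above can be checked before installation with a short script. This is a minimal sketch, not part of the project: the function names are hypothetical, it only tests whether each tool is on the PATH, and it does not verify the Node.js version.

```python
import shutil
import sys

# Command-line tools named in the requirements list above.
REQUIRED_TOOLS = ["node", "npm", "ollama", "docker"]


def find_missing_tools(tools, which=shutil.which):
    """Return the tools from `tools` that are not found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_is_supported(version_info=sys.version_info, minimum=(3, 8)):
    """Check an interpreter version against the minimum Python version."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    missing = find_missing_tools(REQUIRED_TOOLS)
    print("Missing tools:", ", ".join(missing) if missing else "none")
    print("Python version OK:", python_is_supported())
```

Passing `which` as a parameter keeps the lookup replaceable, so the check can be exercised without the tools installed.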

2. Clone the project code

Open a terminal and run the following command to clone the code:

git clone https://github.com/duduyiq2001/llama-desktop-controller.git

cd llama-desktop-controller

3. Configuring LlamaStack

LlamaStack is the project's core dependency for generating Python code. The configuration steps are as follows:

Setting environment variables:

Run the following command in the terminal to specify the inference model:

export INFERENCE_MODEL="meta-llama/Llama-3.2-3B-Instruct"

export OLLAMA_INFERENCE_MODEL="llama3.2:3b-instruct-fp16"

Starting the Ollama inference server:

Run the following command to start the model and set it to remain active for 60 minutes:

ollama run $OLLAMA_INFERENCE_MODEL --keepalive 60m

Running LlamaStack with Docker:

Set the port and start the container:

export LLAMA_STACK_PORT=5001

docker run \
  -it \
  -p $LLAMA_STACK_PORT:$LLAMA_STACK_PORT \
  -v ~/.llama:/root/.llama \
  llamastack/distribution-ollama \
  --port $LLAMA_STACK_PORT \
  --env INFERENCE_MODEL=$INFERENCE_MODEL \
  --env OLLAMA_URL=http://host.docker.internal:11434

Confirm that LlamaStack is running at http://localhost:5001.
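
One way to confirm that a service is listening is to attempt a TCP connection to its port. The sketch below is an illustration rather than project code; `is_port_open` is a hypothetical helper, and the ports are the ones used in the commands above (5001 for LlamaStack, 11434 for Ollama).

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False


if __name__ == "__main__":
    print("LlamaStack reachable:", is_port_open("localhost", 5001))
    print("Ollama reachable:", is_port_open("localhost", 11434))
```

An open port only shows that something is listening; it does not prove that the model has finished loading.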

4. Back-end setup

The backend is based on Flask and is responsible for handling API requests and code generation. The steps are as follows:

- Go to the back-end directory:

cd backend

- Install the Python dependencies:

pip install -r ../requirements.txt

Dependencies include flask, flask-cors, requests, pyobjc, and llama-stack-client. If the installation fails, try a PyPI mirror, for example the Tsinghua mirror:

pip install -r ../requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

- Start the Flask server:

python server.py

- Confirm that the backend is running on http://localhost:5066.

5. Front-end setup

The front end is based on React and provides the user interface. The steps are as follows:

- Go to the front-end directory:

cd .. # return to the project root directory

- Install the Node.js dependencies:

npm install

- Start the development server:

npm run dev

- Verify that the front end is running on http://localhost:5173.

6. Access the application

Open your browser and visit http://localhost:5173. Ensure that the backend and LlamaStack are functioning properly, otherwise functionality may be limited.

Feature Guide

1. Use of voice commands

Voice input is the core function of the program and is suitable for quick operation. The operation steps are as follows:

- Activate voice mode: Click the "Voice Input" button in the main interface (a keyboard shortcut may also be available; check the project documentation to confirm).

- Record the instruction: Say a command into the microphone, such as "Open Finder" or "Close Safari". Keep the environment quiet to improve recognition accuracy.

- How it works: The tool converts speech to text using the SpeechRecognition API; LlamaStack then turns the text into Python code that calls macOS APIs to perform the task.

- Examples of common commands:

  - "Open Terminal": Launches Terminal.app.

  - "New Folder": Creates a folder in the current directory.

  - "Screenshot": Triggers the macOS screenshot feature.

- Notes:

  - Microphone permission must be granted on first use.

  - If recognition fails, check the microphone settings or switch to text input.
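
To give a sense of what the example commands above might translate to, here is a hedged sketch that maps each phrase to a macOS command line. The real tool generates code through LlamaStack rather than using a fixed table, so the mapping, the function names, and the file names ("untitled folder", "screenshot.png") are all assumptions made for illustration. `open -a` and `screencapture` are standard macOS command-line utilities.

```python
import subprocess
from pathlib import Path


def build_action(command, base_dir="."):
    """Map a recognized phrase to an argv list (hypothetical mapping)."""
    normalized = command.strip().lower()
    if normalized == "open terminal":
        # `open -a` launches an application by name.
        return ["open", "-a", "Terminal"]
    if normalized == "new folder":
        return ["mkdir", "-p", str(Path(base_dir) / "untitled folder")]
    if normalized == "screenshot":
        # `-i` starts an interactive selection capture.
        return ["screencapture", "-i", str(Path(base_dir) / "screenshot.png")]
    raise ValueError(f"unsupported command: {command!r}")


def run_action(argv):
    """Execute the command; raises CalledProcessError on a non-zero exit."""
    subprocess.run(argv, check=True)
```

On a Mac, `run_action(build_action("Open Terminal"))` would launch Terminal.app. Keeping the command construction separate from execution makes it possible to inspect a command before running it.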

2. Use of textual instructions

Text input is suitable for precise control. The operation steps are as follows:

- Open the input box: Find the text input area in the interface.

- Enter a command: Type in natural language, such as "open calendar" or "turn volume to 50%".

- Submit the instruction: Click the Execute button or press Enter, and the tool generates and runs the Python code.

- Advanced usage: LlamaStack breaks a task down into steps and executes them sequentially.

- Tip: Clear instructions improve the success rate, e.g. "Open Chrome" works better than "Open Browser".
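
As an illustration of the "turn volume to 50%" example, the sketch below extracts the percentage from the text and builds an AppleScript call through `osascript`. It is an assumption about what generated code could look like, not the project's actual output; both function names are hypothetical.

```python
import re


def parse_volume_percent(instruction):
    """Extract a 0-100 volume level from text such as 'turn volume to 50%'."""
    match = re.search(r"(\d{1,3})\s*%", instruction)
    if match is None:
        return None
    level = int(match.group(1))
    return level if 0 <= level <= 100 else None


def build_volume_command(level):
    """Build the osascript argv that sets the system output volume."""
    return ["osascript", "-e", f"set volume output volume {level}"]
```

For example, `build_volume_command(parse_volume_percent("turn volume to 50%"))` yields an argv list that could be passed to `subprocess.run` on macOS.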

3. View command history

The interface provides a command history area that shows the execution status of each command:

- Success: A green mark indicates that the command was executed correctly.

- Error: A red mark is shown with an error message (e.g. "insufficient permissions").

- Actions: Click a history entry to rerun the command or to view the generated Python code.
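
A command history like the one described above can be modeled with a small data structure. The following is a sketch under assumptions: the class and field names are hypothetical and are not taken from the project's source.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class HistoryEntry:
    instruction: str          # the natural-language command
    generated_code: str       # the Python code produced for it
    success: bool             # True = green mark, False = red mark
    error: Optional[str] = None


@dataclass
class CommandHistory:
    entries: List[HistoryEntry] = field(default_factory=list)

    def record(self, instruction, generated_code, success, error=None):
        """Append one executed command and return the stored entry."""
        entry = HistoryEntry(instruction, generated_code, success, error)
        self.entries.append(entry)
        return entry

    def failures(self):
        """Return the entries that would be shown with a red mark."""
        return [e for e in self.entries if not e.success]
```

Storing the generated code alongside each instruction is what would allow an entry to be inspected or rerun later.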

4. Real-time status monitoring

The service status is displayed in the upper right corner of the interface:

- Green: The backend and LlamaStack are connected properly.

- Red: A service is disconnected; check whether Flask and LlamaStack are running.

- Action: Click the status icon to manually refresh the connection.
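
The green/red rule above amounts to "green only if every service is reachable". A minimal sketch of that rule, with a hypothetical function name and service labels, could look like this:

```python
def indicator_color(service_status):
    """Return (color, down_services) for a {service_name: is_reachable} mapping."""
    down = sorted(name for name, ok in service_status.items() if not ok)
    if down:
        return "red", down
    return "green", []
```

Returning the list of unreachable services alongside the color indicates which process to restart.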

5. Security checks

The tool performs basic security validation of the generated Python code:

- Filter high-risk commands (e.g., deleting system files).

- Check for syntax errors to make sure the code is executable.

- Note: Caution is still advised; avoid running commands from unknown sources.
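
The two checks listed above (syntax validation and filtering of high-risk calls) can be sketched with Python's standard `ast` module. This is only an illustration of the idea: the project's actual rules are not documented here, and the deny-list and function names below are assumptions.

```python
import ast

# Hypothetical deny-list; the project's real rules may differ.
BLOCKED_CALLS = {"os.remove", "os.unlink", "os.rmdir", "os.system", "shutil.rmtree"}


def dotted_name(node):
    """Return 'a.b.c' for Name/Attribute chains, else None."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return None


def validate_generated_code(source):
    """Return a list of problems; an empty list means both checks passed."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg}"]
    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            name = dotted_name(node.func)
            if name in BLOCKED_CALLS:
                problems.append(f"blocked call: {name}")
    return problems
```

A name-based filter like this is easy to bypass (for example through import aliases), which is consistent with the advice above to stay cautious.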

Notes

- Model dependency: The project is fixed to Llama-3.2-3B-Instruct, and other models cannot be swapped in directly.

- Performance requirements: Running LlamaStack is computationally demanding; closing unrelated programs is recommended.

- Debugging: If startup fails, check the terminal logs, or visit http://localhost:5066/status to check the status of the backend.

- Permissions: Some macOS commands require authorization (such as accessing files or controlling volume), and a permission prompt appears the first time you run them.

- Community support: Issues can be submitted via GitHub Issues, with error logs attached for developers to troubleshoot.
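
The backend status URL mentioned above can also be queried from a script. The sketch below uses only the standard library; the response format of the endpoint is not documented here, so the (hypothetical) helper only checks for a successful HTTP status.

```python
import urllib.error
import urllib.request


def backend_is_up(url="http://localhost:5066/status", timeout=2.0):
    """Return True if the URL answers with an HTTP 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # URLError also covers HTTP error responses (4xx/5xx).
        return False


if __name__ == "__main__":
    print("Backend up:", backend_is_up())
```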

Application scenarios

- Accessibility assistance

Visually impaired or mobility-impaired users can use voice commands to operate macOS, such as "Open Mail" to quickly launch Mail.app, for greater ease of use.

- Developer efficiency

Developers can quickly execute commands such as "Open Xcode and create a new project," saving time on manual tasks and allowing them to focus on development.

- Routine automation

Users can complete repetitive tasks with text commands, such as "Organize desktop files into archive folders every day", which suits efficient office work.

- Education and experimentation

Programming enthusiasts can study how LlamaStack turns natural language into code and learn how AI integrates with operating systems.
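
For the routine automation scenario, code generated for "organize desktop files into archive folders" might resemble the sketch below. This is a guess at the shape of such code rather than actual tool output: the function name and the date-named subfolder layout are assumptions, and running it daily would require separate scheduling.

```python
import shutil
from datetime import date
from pathlib import Path


def archive_files(source_dir, archive_root, today=None):
    """Move regular files from source_dir into archive_root/YYYY-MM-DD/."""
    today = today or date.today()
    target = Path(archive_root) / today.isoformat()
    target.mkdir(parents=True, exist_ok=True)
    moved = []
    for item in sorted(Path(source_dir).iterdir()):
        # Skip folders and hidden files such as .DS_Store.
        if item.is_file() and not item.name.startswith("."):
            shutil.move(str(item), str(target / item.name))
            moved.append(item.name)
    return moved
```

A call such as `archive_files(Path.home() / "Desktop", Path.home() / "Desktop" / "Archive")` would move today's loose files into a dated subfolder and leave existing folders untouched.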

FAQ

- What if voice recognition is inaccurate?

Make sure the microphone is working and the environment is free of noise. If it still fails, switch to text input or check the SpeechRecognition API configuration.

- What if the backend fails to start?

Verify that the Python dependencies are fully installed and that LlamaStack is running at http://localhost:5001. Check the server.py logs to locate the error.

- Does it support non-macOS systems?

Currently only macOS is supported, because the code relies on macOS APIs. Support for other platforms may be added by the community in the future.

- How can performance be optimized?

Increase memory or use a higher-performance CPU/GPU, and close other resource-hungry programs. You can also try a more efficient LlamaStack configuration.

- How do I contribute code?

Fork the repository and submit a Pull Request. Reading the project documentation and following the contribution guidelines is recommended.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
