LLManager: an approval management tool that combines AI-automated approvals with human review

General Introduction

LLManager is an open source approval management tool built on LangChain's LangGraph framework. It focuses on automating the processing of approval requests while using human review to improve decisions. It learns from past approvals and improves its accuracy through semantic search, few-shot learning, and a reflection mechanism. Users view and respond to requests in Agent Inbox, can define custom approval and rejection criteria, and can choose among multiple language models (e.g. OpenAI, Anthropic). LLManager suits scenarios such as enterprise budget approval, project management, and compliance review; its code is hosted on GitHub, so developers are free to extend it. The tool emphasizes collaboration between AI and humans, balancing efficiency with reliability.

Feature List

- Automated approvals: Automatically generate approval recommendations based on user-defined approval and rejection criteria.

- Human review: Reviewers can accept, modify, or reject AI-generated approvals, keeping final decisions accurate.

- Semantic search: Retrieves the 10 most semantically similar past requests so that each approval draws on relevant context.

- Few-shot learning: Uses historical approval data as examples to improve the model's future decisions.

- Reflection mechanism: Analyzes approvals that reviewers modified and generates reflections that improve the model's performance.

- Model customization: Supports models from OpenAI, Anthropic, and others, provided they support tool calling.

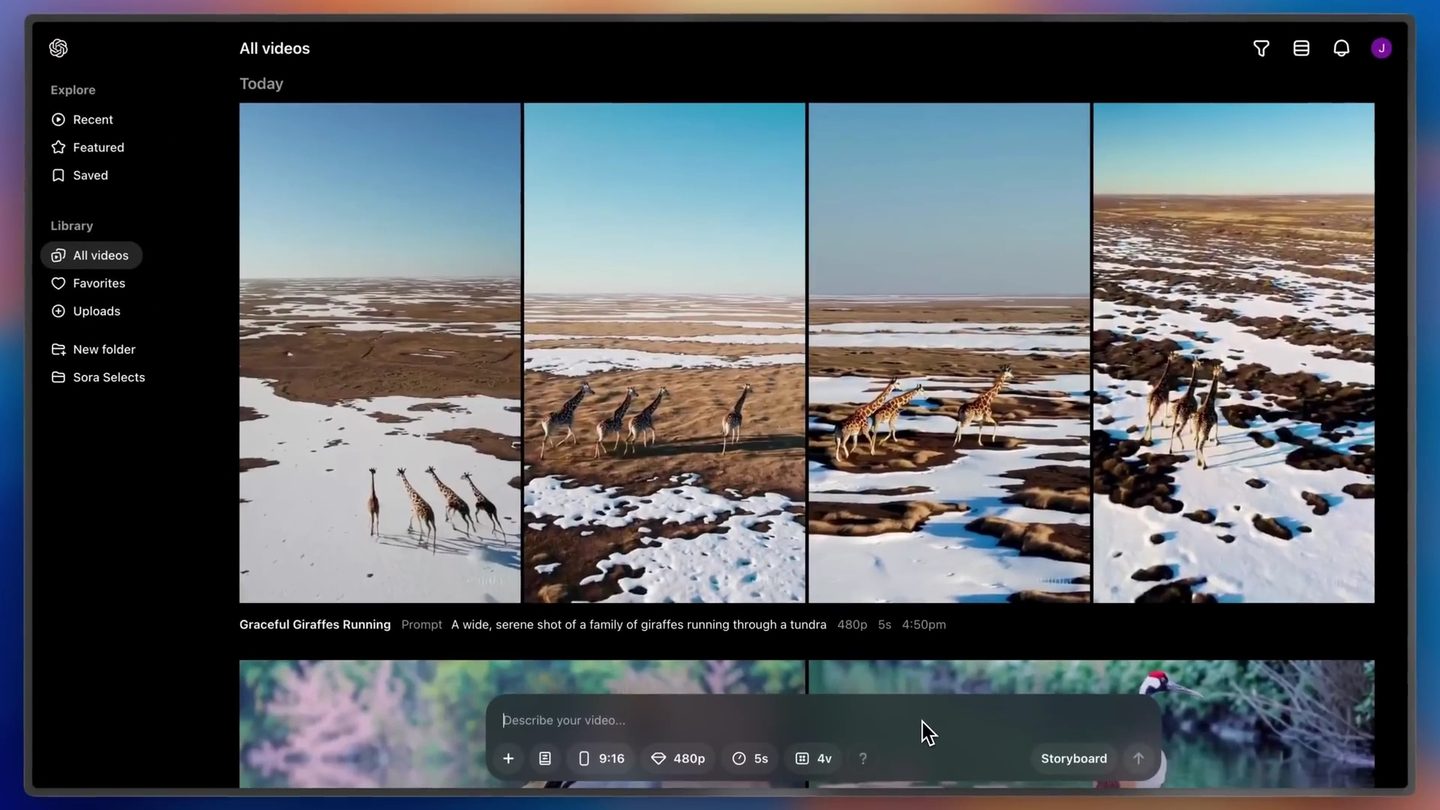

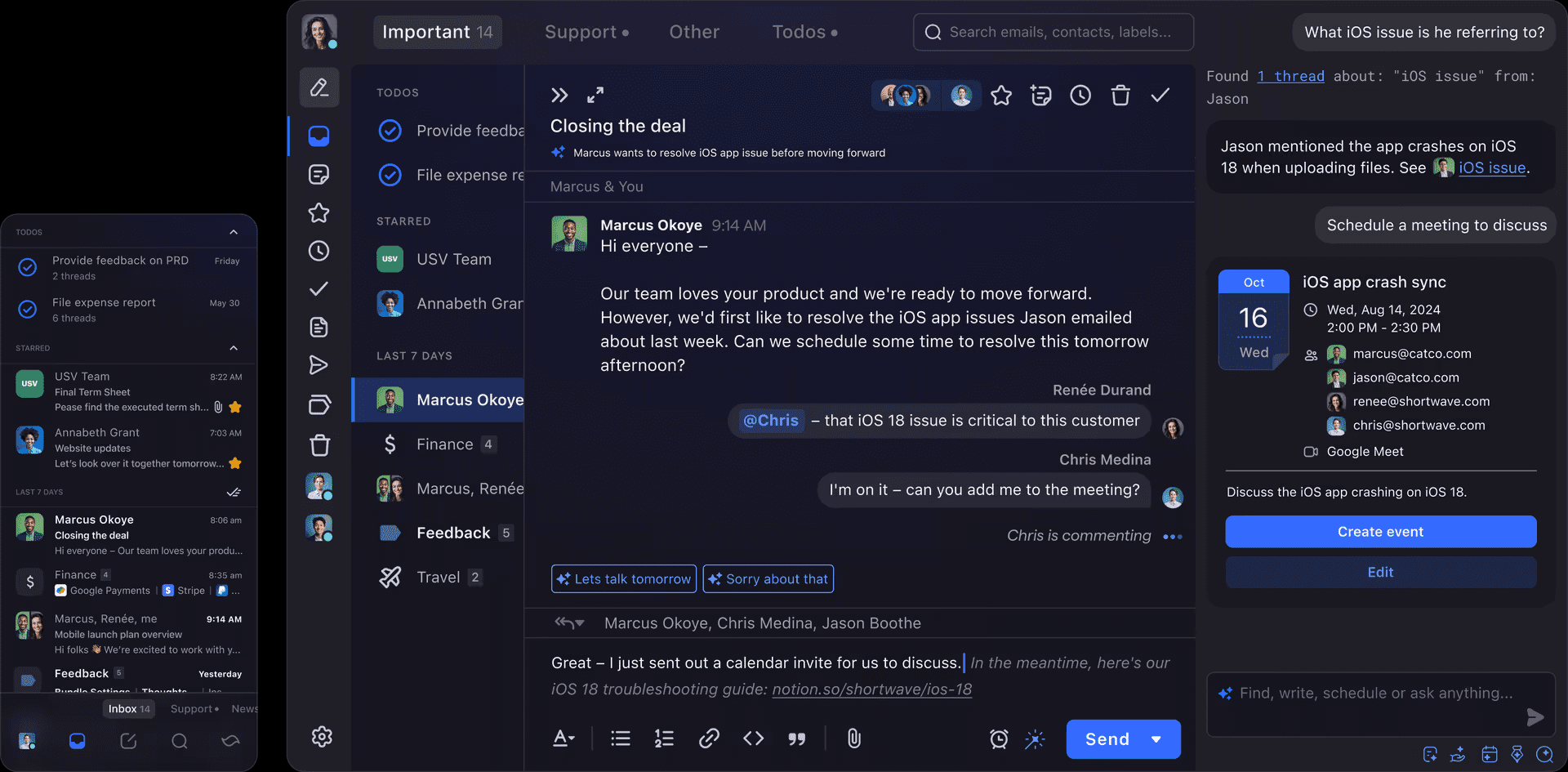

- Agent Inbox integration: Provides an intuitive interface for viewing, responding to, and managing approval requests.

- Dynamic prompt building: Adjusts prompts to each request, adapting to a variety of approval scenarios.

- End-to-end evaluation: Runs test cases to verify the reliability and accuracy of the workflow.

Usage Guide

Installation process

LLManager is an open source project built on LangChain and LangGraph and must be deployed locally. The installation steps are as follows:

- Clone the repository:

Run the following commands in a terminal to fetch the LLManager code:

    git clone https://github.com/langchain-ai/llmanager.git
    cd llmanager

- Install dependencies:

Make sure Python 3.11 or later and Node.js (for Yarn) are installed on your system. Create a virtual environment and install the dependencies:

    python3 -m venv venv
    source venv/bin/activate
    yarn install

The default dependencies include LangChain and the Anthropic and OpenAI integration packages. Other models (e.g. Google GenAI) require installing their integration package separately:

    yarn add @langchain/google-genai

- Configure environment variables:

Copy the example environment file and fill in the required values:

    cp .env.example .env

Edit the .env file to add your LangSmith API key and, if you use an Anthropic model, your Anthropic API key:

    LANGCHAIN_PROJECT="default"
    LANGCHAIN_API_KEY="lsv2_..."
    LANGCHAIN_TRACING_V2="true"
    LANGSMITH_TEST_TRACKING="true"
    ANTHROPIC_API_KEY="your_anthropic_key"

LangSmith is used for tracing and debugging; enabling it is recommended.

- Start the development server:

Run the following command to start the LangGraph server:

    yarn dev

By default the server runs at http://localhost:2024. For production, deploy it to a cloud server and update the URL.
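Before running yarn dev, it can help to confirm that the keys listed above are actually present in .env. The following is a hypothetical helper, not part of LLManager: parseEnv, missingKeys, and requiredKeys are illustrative names, and the parser assumes the simple KEY="value", one-per-line format shown above (a real project would more likely rely on a full dotenv parser). It treats LANGCHAIN_API_KEY as always required and ANTHROPIC_API_KEY as required only when modelId selects an Anthropic model, which is an assumption drawn from the text above.

```typescript
// Hypothetical helpers (not part of LLManager): parse a simple .env file
// and report required keys that are missing or empty.

// Parse KEY=value and KEY="value" lines; skip blank lines and # comments.
function parseEnv(text: string): Record<string, string> {
  const env: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=value line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, if present.
    const first = value[0];
    if (value.length >= 2 && (first === '"' || first === "'") && value.endsWith(first)) {
      value = value.slice(1, -1);
    }
    env[key] = value;
  }
  return env;
}

// Return the required keys that are absent or set to an empty string.
function missingKeys(env: Record<string, string>, required: string[]): string[] {
  return required.filter((key) => !env[key]);
}

// Assumption: LangSmith is always used; the Anthropic key only matters
// when modelId selects an Anthropic model.
function requiredKeys(modelId: string): string[] {
  const keys = ["LANGCHAIN_API_KEY"];
  if (modelId.startsWith("anthropic/")) keys.push("ANTHROPIC_API_KEY");
  return keys;
}

// Usage: the Anthropic key is commented out, so it is reported as missing.
const sampleEnv = parseEnv(`
LANGCHAIN_PROJECT="default"
LANGCHAIN_API_KEY="lsv2_..."
LANGCHAIN_TRACING_V2="true"
# ANTHROPIC_API_KEY="your_anthropic_key"
`);
console.log(JSON.stringify(missingKeys(sampleEnv, requiredKeys("anthropic/claude-3-7-sonnet-latest"))));
// prints ["ANTHROPIC_API_KEY"]
```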

Usage

At its core, LLManager lets you manage and respond to approval requests through Agent Inbox. The detailed workflow is as follows:

- Run the end-to-end evaluation:

- Run the following command to create a new approval assistant and run 25 test cases:

    yarn test:single evals/e2e.int.test.ts

- The terminal prints the UUID of the new assistant. Record this ID; you need it to configure Agent Inbox.

- To reuse the same assistant, modify evals/e2e.int.test.ts to use a fixed assistant ID.
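Reusing an assistant amounts to looking it up before creating a new one. The sketch below shows that get-or-create pattern under stated assumptions: the Assistant record, the AssistantStore interface, the "approval_graph" graph ID, and the in-memory store are all hypothetical stand-ins, not the LangGraph SDK or LLManager code. The store is synchronous for simplicity, whereas a real client talking to the LangGraph server would be asynchronous.

```typescript
// Hypothetical assistant record and store interface. These are illustrative
// stand-ins, not the LangGraph SDK's API; a real client would be async.
interface Assistant {
  assistantId: string;
  graphId: string;
  name: string;
}

interface AssistantStore {
  search(graphId: string): Assistant[];
  create(graphId: string, name: string): Assistant;
}

// Reuse an assistant with this name for the graph if one exists; otherwise create it.
function getOrCreateAssistant(store: AssistantStore, graphId: string, name: string): Assistant {
  const existing = store.search(graphId).find((a) => a.name === name);
  return existing ?? store.create(graphId, name);
}

// In-memory store used only to make the sketch runnable.
class InMemoryAssistantStore implements AssistantStore {
  private assistants: Assistant[] = [];
  private nextId = 1;

  search(graphId: string): Assistant[] {
    return this.assistants.filter((a) => a.graphId === graphId);
  }

  create(graphId: string, name: string): Assistant {
    const assistant: Assistant = { assistantId: `assistant-${this.nextId++}`, graphId, name };
    this.assistants.push(assistant);
    return assistant;
  }
}

// Usage: the second call finds and reuses the assistant created by the first.
const demoStore = new InMemoryAssistantStore();
const firstRun = getOrCreateAssistant(demoStore, "approval_graph", "LLManager");
const secondRun = getOrCreateAssistant(demoStore, "approval_graph", "LLManager");
console.log(firstRun.assistantId === secondRun.assistantId); // prints true
```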

- Configure approval rules:

- Set the approval and rejection criteria in config.json, for example:

    {
      "approvalCriteria": "The request must include a detailed budget and timeline",
      "rejectionCriteria": "Required documents are missing or the budget is exceeded",
      "modelId": "anthropic/claude-3-7-sonnet-latest"
    }

- If no rules are set, LLManager learns from historical data alone, but setting rules helps the model adapt faster.

- modelId uses the provider/model_name format, e.g. openai/gpt-4o or anthropic/claude-3-5-sonnet-latest.
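A config file in this shape can be validated before use. This is a minimal sketch assuming the three config.json fields shown above; ApprovalConfig, parseModelId, and loadConfig are hypothetical names, not LLManager's code. It treats both criteria as optional, matching the note that rules are not required, and splits modelId at the first slash into provider and model name.

```typescript
// Hypothetical shape of the config.json example above.
interface ApprovalConfig {
  approvalCriteria?: string;
  rejectionCriteria?: string;
  modelId: string;
}

interface ParsedModelId {
  provider: string;
  modelName: string;
}

// Split "provider/model_name" at the first slash; reject anything else.
function parseModelId(modelId: string): ParsedModelId {
  const slash = modelId.indexOf("/");
  if (slash <= 0 || slash === modelId.length - 1) {
    throw new Error(`modelId must use the provider/model_name format, got "${modelId}"`);
  }
  return { provider: modelId.slice(0, slash), modelName: modelId.slice(slash + 1) };
}

// Parse config text and check field types and the modelId format.
function loadConfig(json: string): ApprovalConfig {
  const raw: unknown = JSON.parse(json);
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) {
    throw new Error("config must be a JSON object");
  }
  const obj = raw as Record<string, unknown>;
  const modelId = obj["modelId"];
  if (typeof modelId !== "string") throw new Error("modelId is required");
  parseModelId(modelId); // throws on a malformed modelId
  // Criteria are optional, but must be strings when present.
  for (const key of ["approvalCriteria", "rejectionCriteria"]) {
    const value = obj[key];
    if (value !== undefined && typeof value !== "string") throw new Error(`${key} must be a string`);
  }
  return {
    approvalCriteria: obj["approvalCriteria"] as string | undefined,
    rejectionCriteria: obj["rejectionCriteria"] as string | undefined,
    modelId,
  };
}

// Usage
const loaded = loadConfig('{"approvalCriteria": "Include a budget", "modelId": "openai/gpt-4o"}');
console.log(JSON.stringify(parseModelId(loaded.modelId))); // prints {"provider":"openai","modelName":"gpt-4o"}
```

For example, a config whose modelId is just "gpt-4o" is rejected, because the provider prefix is missing.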

- Use Agent Inbox:

- Go to dev.agentinbox.ai and click "Add Inbox".

- Enter the following information:

- Assistant/Graph ID: the UUID printed by the end-to-end evaluation.

- Deployment URL: http://localhost:2024 (development environment).

- Name: any name you choose, such as LLManager.

- After submitting, refresh the inbox to see pending requests.

- For each request, the Agent Inbox displays:

- AI-generated approval recommendations and reasoning reports.

- Action options: Accept, Modify (edit suggestions or instructions), or Reject (provide reasons).

- Accepted or modified requests are automatically stored in the few-shot example library and used to improve later approvals.
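The three reviewer actions can be modeled as a small discriminated union. In this hypothetical sketch (ReviewAction, applyReview, and the in-memory library array are illustrative, not Agent Inbox's actual response format), accepted and modified results are stored as few-shot examples, and only a modification that actually changes the answer or explanation is flagged for reflection. What LLManager does with a rejection reason is not described above, so the sketch leaves the library unchanged in that case.

```typescript
// Hypothetical types for the three reviewer actions described above.
// These are not Agent Inbox's actual response format.
type Decision = "approve" | "reject";

interface Proposal {
  request: string;
  answer: Decision;
  explanation: string;
}

type ReviewAction =
  | { kind: "accept" }
  | { kind: "modify"; answer: Decision; explanation: string }
  | { kind: "reject"; reason: string };

interface FewShotExample extends Proposal {
  edited: boolean; // true when the reviewer changed the AI's answer or explanation
}

interface ReviewOutcome {
  stored: boolean; // saved to the few-shot example library
  needsReflection: boolean; // whether the reflection step should run
}

function applyReview(proposal: Proposal, action: ReviewAction, library: FewShotExample[]): ReviewOutcome {
  if (action.kind === "accept") {
    library.push({ ...proposal, edited: false });
    return { stored: true, needsReflection: false };
  }
  if (action.kind === "modify") {
    const edited = action.answer !== proposal.answer || action.explanation !== proposal.explanation;
    library.push({ request: proposal.request, answer: action.answer, explanation: action.explanation, edited });
    return { stored: true, needsReflection: edited };
  }
  // Rejection: handling of the reason is not specified, so nothing is stored here.
  return { stored: false, needsReflection: false };
}

// Usage: the reviewer flips the answer, so the result is stored and flagged for reflection.
const exampleLibrary: FewShotExample[] = [];
const outcome = applyReview(
  { request: "Team offsite, $4,000", answer: "approve", explanation: "Within the budget cap" },
  { kind: "modify", answer: "reject", explanation: "No timeline attached" },
  exampleLibrary,
);
console.log(outcome, exampleLibrary.length);
```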

- Review and refine the approval history:

- In Agent Inbox, click Processed Requests to view approval details, the AI's reasoning reports, and the log of human modifications.

- Through semantic search, the system retrieves similar past requests and passes them to the model as context.

- A modified request triggers the reflection mechanism, which generates a reflection that is saved to the reflection store and used to improve later approvals.
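One way to picture how retrieved requests reach the model is as prompt assembly. The function below is a hypothetical sketch of dynamic prompt building, not LLManager's actual prompt: buildPrompt, the section headings, and the wording are assumptions. Each section is included only when there is content for it, so the prompt changes with the criteria, reflections, and similar requests available for a given request.

```typescript
// Hypothetical prompt assembly; section names and wording are illustrative.
interface Example {
  request: string;
  answer: "approve" | "reject";
  explanation: string;
}

interface PromptInputs {
  request: string;
  approvalCriteria?: string;
  rejectionCriteria?: string;
  reflections: string[]; // lessons from earlier human corrections
  examples: Example[]; // similar past requests found by semantic search
}

// Include each section only when it has content, so the prompt adapts to
// whatever criteria, reflections, and examples exist for this request.
function buildPrompt(inputs: PromptInputs): string {
  const sections: string[] = ["Decide whether to approve or reject the request below."];
  if (inputs.approvalCriteria) sections.push(`Approval criteria:\n${inputs.approvalCriteria}`);
  if (inputs.rejectionCriteria) sections.push(`Rejection criteria:\n${inputs.rejectionCriteria}`);
  if (inputs.reflections.length > 0) {
    sections.push(`Lessons from past reviews:\n${inputs.reflections.map((r) => `- ${r}`).join("\n")}`);
  }
  if (inputs.examples.length > 0) {
    const shots = inputs.examples.map(
      (ex, i) => `Example ${i + 1}\nRequest: ${ex.request}\nAnswer: ${ex.answer}\nExplanation: ${ex.explanation}`,
    );
    sections.push(`Similar past requests:\n\n${shots.join("\n\n")}`);
  }
  sections.push(`New request:\n${inputs.request}`);
  return sections.join("\n\n");
}

// Usage
console.log(
  buildPrompt({
    request: "Purchase 5 laptops for the design team",
    approvalCriteria: "The request must include a detailed budget and timeline",
    reflections: ["Check that the timeline covers delivery dates"],
    examples: [{ request: "Purchase 3 monitors", answer: "approve", explanation: "Budget and timeline provided" }],
  }),
);
```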

Key Features in Practice

- Semantic search: When a new request arrives, the system retrieves the 10 most semantically similar past requests (each with its request content, final answer, and explanation) and adds them to the prompt as context to improve approval accuracy.

- Reflection mechanism:

- If the reviewer changes only the explanation (the answer was correct but the reasoning was wrong), the explanation_reflection node runs, analyzes the reasoning error, and generates a new reflection.

- If the reviewer changes both the answer and the explanation, the full_reflection node runs, analyzes the overall error, and generates a reflection.

- Reflections are saved to a reflection store and used to improve later reasoning.

- Dynamic prompt building: Adjusts the prompt based on the request content and historical data so that approvals adapt to a variety of scenarios.

- Model switching: Change modelId in config.json to switch to another model that supports tool calling (e.g. openai/gpt-4o); make sure the corresponding integration package is installed.
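Retrieving the 10 most similar requests is a top-k nearest-neighbor search over embeddings. The sketch below assumes each past request already has an embedding vector from some embedding model and ranks them by cosine similarity; StoredRequest, cosineSimilarity, and topKSimilar are hypothetical names, and the toy 2-D vectors stand in for real embeddings. LLManager's actual storage and retrieval mechanism is not shown here.

```typescript
// Hypothetical record: a past request plus an embedding vector assumed to be
// precomputed by some embedding model.
interface StoredRequest {
  text: string;
  embedding: number[];
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("embedding dimensions differ");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // a zero vector has no direction
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank all past requests by similarity to the query and keep the top k.
// k defaults to 10, the number of examples described above.
function topKSimilar(query: number[], past: StoredRequest[], k = 10): StoredRequest[] {
  return past
    .map((item) => ({ item, score: cosineSimilarity(query, item.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ item }) => item);
}

// Usage with toy 2-D vectors in place of real embeddings.
const pastRequests: StoredRequest[] = [
  { text: "Conference travel, $1,200", embedding: [0.9, 0.1] },
  { text: "New laptop purchase", embedding: [0.1, 0.9] },
  { text: "Client visit travel", embedding: [0.8, 0.3] },
];
console.log(topKSimilar([1, 0], pastRequests, 2).map((r) => r.text));
```

With the toy data, the query [1, 0] returns the two travel requests, since their vectors point in nearly the same direction as the query. A linear scan like this is fine for small histories; larger ones would typically use a vector index.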

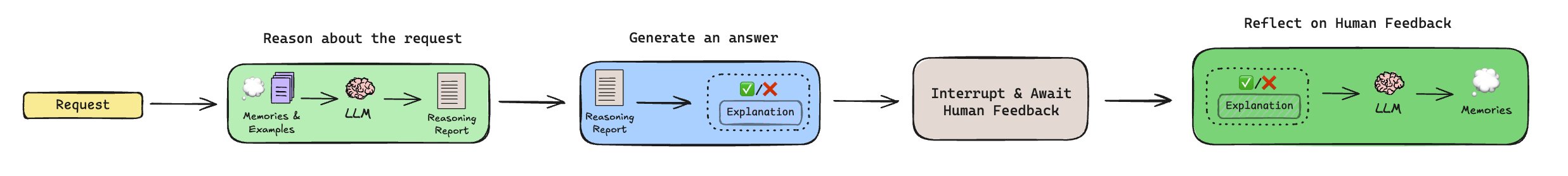

Workflow Explained

LLManager's approval process includes the following steps:

- Reasoning:

- Retrieve stored reflections and few-shot examples (via semantic search).

- Generate a reasoning report that analyzes whether the request should be approved, without making the final decision.

- Generate answer:

- Combine the reasoning report and context to produce a final approval recommendation (approve or reject) with an explanation.

- Human review:

- Pause the workflow and wait for a human to review it in Agent Inbox.

- The reviewer can accept, modify, or reject the recommendation; modified results are saved to the few-shot example library.

- Reflection:

- If the recommendation was modified, the reflection mechanism generates a reflection describing how to improve.

- Unmodified requests skip this step.
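The routing in this workflow can be sketched as a few plain functions. This is a hypothetical outline, not LLManager's LangGraph graph: the Steps interface and runApproval are illustrative, humanReview stands in for the pause in Agent Inbox, and the node names explanation_reflection and full_reflection come from the reflection description earlier. The text does not say which node handles a change to the answer alone; this sketch assumes full_reflection.

```typescript
// Hypothetical outline of the approval workflow; not LLManager's actual graph.
type Answer = "approve" | "reject";

interface ReviewResult {
  answer: Answer;
  explanation: string;
}

// Node names from the reflection description; "end" means no reflection runs.
type NextNode = "explanation_reflection" | "full_reflection" | "end";

// Pick the step that follows human review by comparing the AI's proposal
// with the reviewed version.
function routeAfterReview(proposed: ReviewResult, reviewed: ReviewResult): NextNode {
  const answerChanged = proposed.answer !== reviewed.answer;
  const explanationChanged = proposed.explanation !== reviewed.explanation;
  if (!answerChanged && !explanationChanged) return "end"; // unmodified: skip reflection
  if (!answerChanged) return "explanation_reflection"; // right answer, flawed reasoning
  return "full_reflection"; // answer changed (assumed to cover answer-only edits too)
}

// Illustrative step functions; humanReview stands in for the pause in Agent Inbox.
interface Steps {
  reason(request: string): string; // returns a reasoning report, not a decision
  generateAnswer(request: string, report: string): ReviewResult;
  humanReview(proposed: ReviewResult): ReviewResult;
}

function runApproval(request: string, steps: Steps): { reviewed: ReviewResult; next: NextNode } {
  const report = steps.reason(request);
  const proposed = steps.generateAnswer(request, report);
  const reviewed = steps.humanReview(proposed);
  return { reviewed, next: routeAfterReview(proposed, reviewed) };
}

// Usage: the reviewer keeps the answer but rewrites the explanation.
const run = runApproval("Conference travel, $1,200", {
  reason: () => "Travel is within policy; dates are missing.",
  generateAnswer: () => ({ answer: "approve", explanation: "Within policy" }),
  humanReview: (p) => ({ ...p, explanation: "Within policy, but dates must be added" }),
});
console.log(run.next); // prints explanation_reflection
```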

Caveats

- Make sure the LangSmith API key is valid and that both the development and production environments have a stable network connection.

- Models other than OpenAI and Anthropic require installing an additional integration package; see the LangChain documentation.

- Production deployments require moving the LangGraph server to the cloud and updating the URL for Agent Inbox.

Application Scenarios

- Enterprise budget approval

LLManager automates the processing of employee budget requests, generating recommendations based on preset rules (e.g. budget caps, project types). The finance team reviews, modifies, or confirms the results in Agent Inbox, reducing repetitive work.

- Project task allocation

Project managers use LLManager to approve task assignment requests. The system analyzes task priorities and resource requirements to generate allocation recommendations, which the manager reviews to keep task allocation efficient and reasonable.

- Compliance review

LLManager checks submitted documents for regulatory compliance and flags potential issues. The compliance team confirms or adjusts the results in Agent Inbox, making review more efficient.

- Customer request management

Customer service teams use LLManager to approve customer refunds or service requests. The system generates recommendations based on historical data, and the team reviews them to keep decisions fair and consistent.

FAQ

- What models does LLManager support?

OpenAI, Anthropic, and other models, as long as they support tool calling. Non-default models require installing the corresponding LangChain integration package, such as @langchain/google-genai.

- How do I reuse the same assistant?

Modify evals/e2e.int.test.ts to use a fixed assistant ID, or look up an existing ID before running the evaluation, so that a new assistant is not created each time.

- How do I deploy to production?

Deploy the LangGraph server to a cloud server, update the Deployment URL in Agent Inbox, and use LangSmith to monitor workflow performance.

- How do I customize the workflow?

Edit the reasoning subgraph (to adjust how prompts are built) or the reflection subgraph (to control how reflections are generated) to fit specific approval scenarios.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
