LLM application: reflections on Agent dialog (with tool calls)

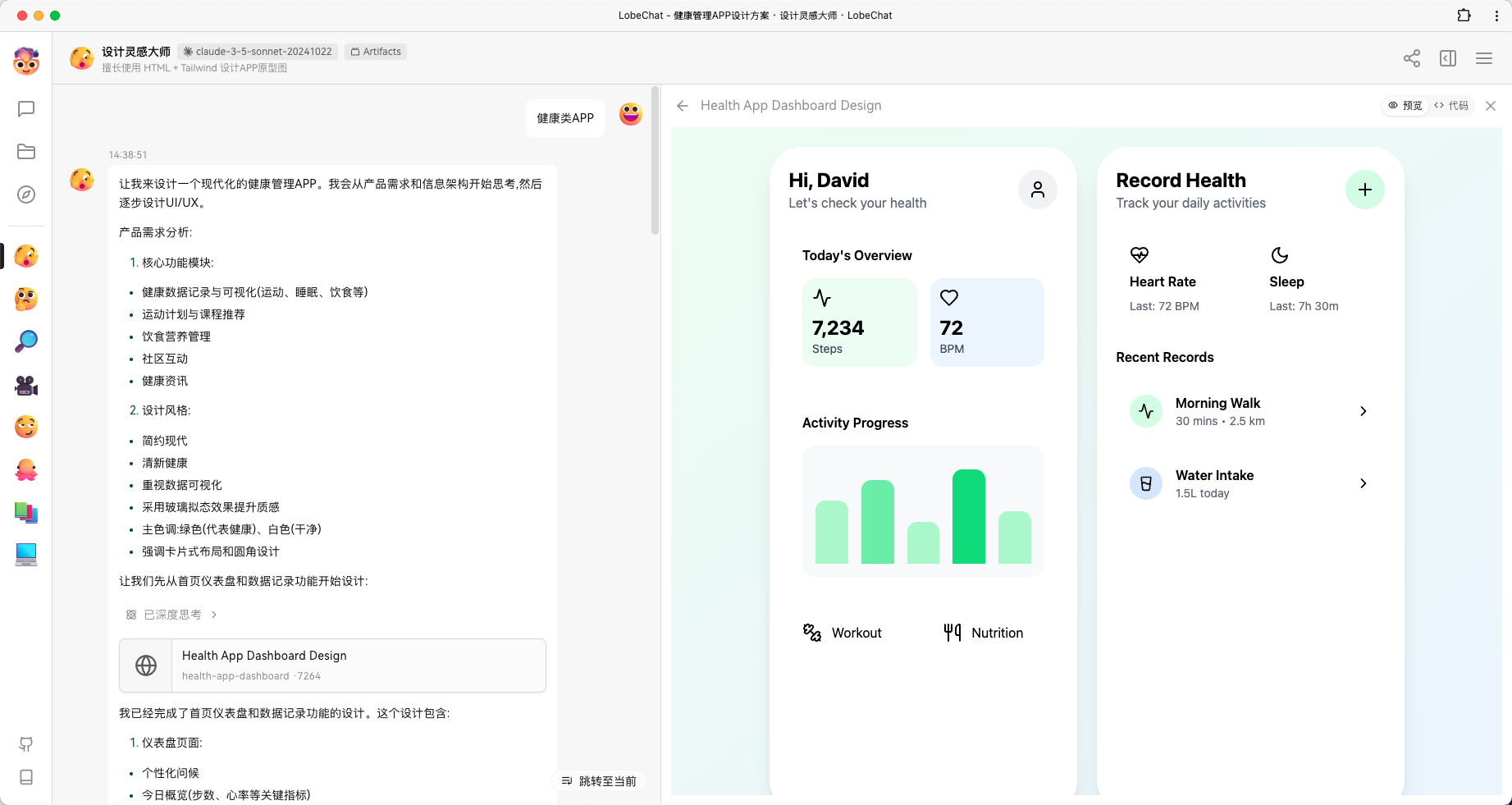

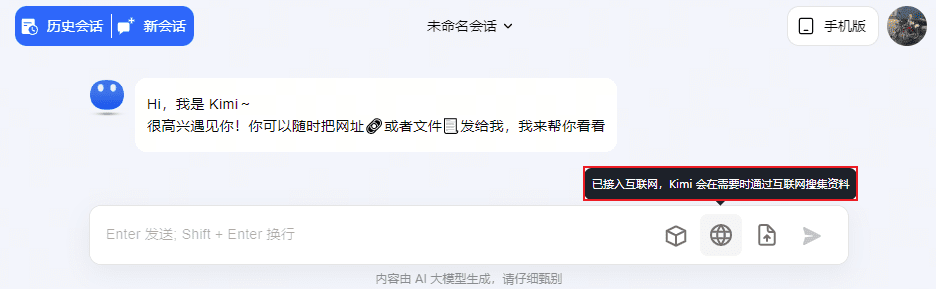

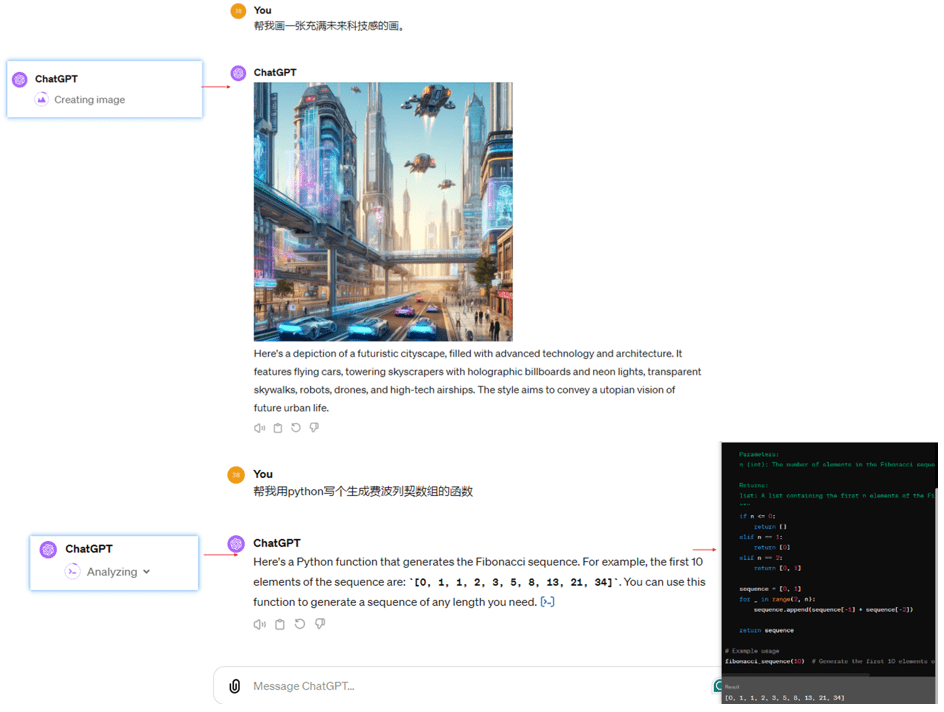

Q&A products such as ChatGPT and Kimi now use Agent dialog, that is, the ability to invoke different tools while interacting with the user. Kimi's tools, for example, include LLM chat, link chat, file chat, and web-search chat. ChatGPT, Wenxin Yiyan, and iFlytek Spark have likewise been extended with tools such as text-to-image generation, code writing, and a math calculator.

Agent dialog for ChatGPT4

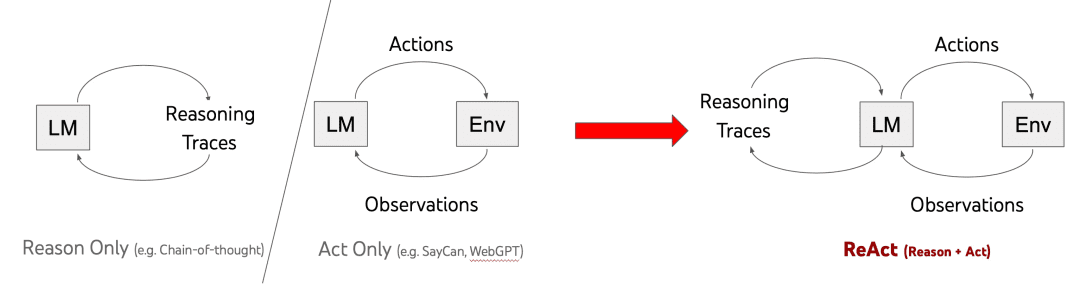

The dominant framework today for giving Agents conversational capabilities is ReAct [1], proposed by Princeton University and Google in 2022. ReAct is a prompting approach that blends reasoning and acting. Its evolution is shown below:

The three methods in the figure are:

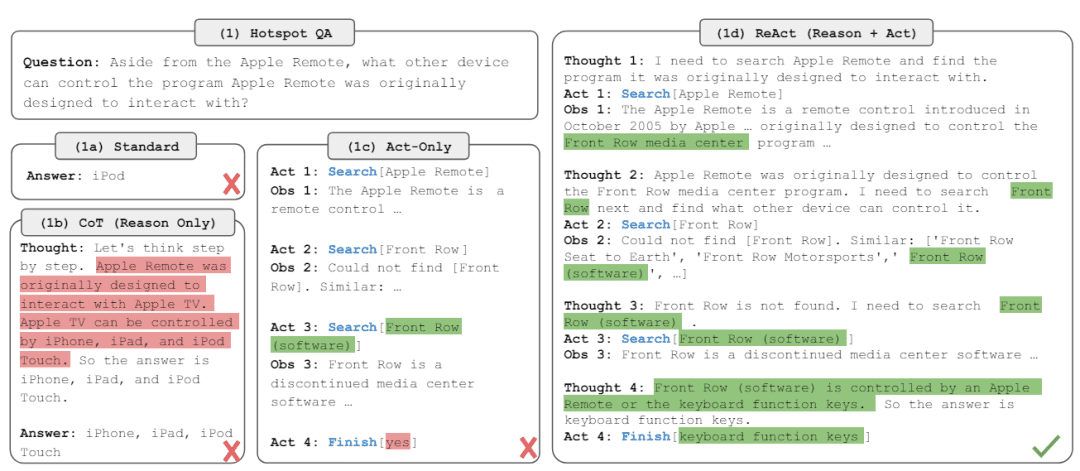

Reason Only: Uses Chain-of-Thought for multi-step reasoning. Adding the prompt "Let's think step by step" to the Prompt guides the model to think in multiple steps instead of answering directly. The disadvantage is obvious: Reason Only thinks behind closed doors and never acts. It does not look at the outside world before thinking, so it is unaware of how the world has changed and tends to hallucinate;

Act-Only: Obtains an Observation through a single Action step. Disadvantage: it acts immediately without thinking, so the final answer is not guaranteed to match what the user wants;

ReAct: A blend of thinking and acting: think before acting, take the returned result of the action, think about what to do next, then act again, repeating this process until the final answer is produced.

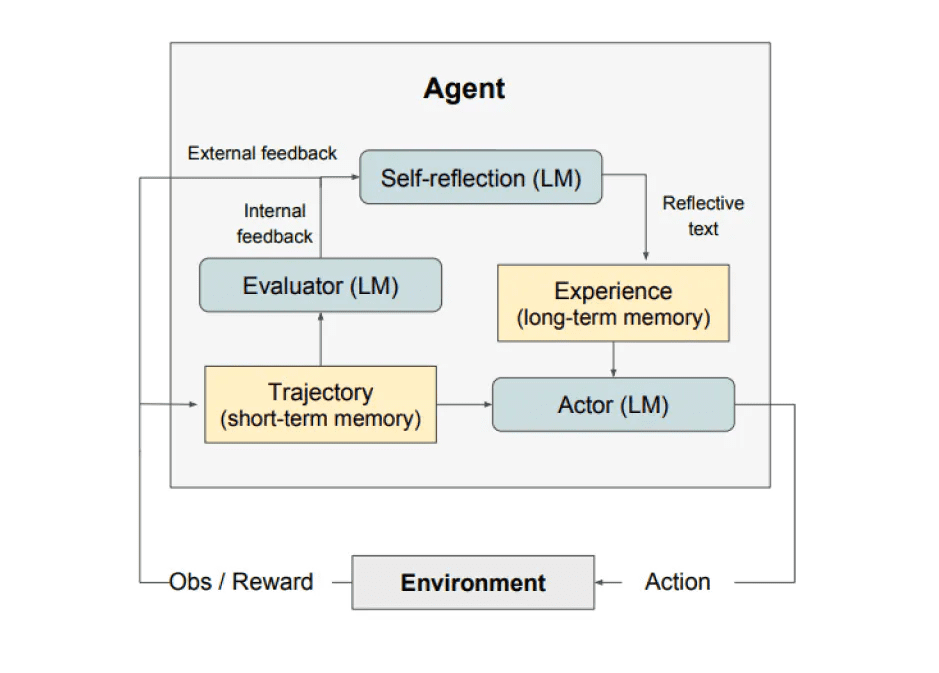

Extension: in 2023 the Reflexion framework added self-reflection to this loop, as shown in the figure; it is not covered in depth here. Interested readers can also look at the article "Agent research - 19 types of Agent framework comparison" from the Taobao Tech official account. In the race to publish papers, this area keeps producing ever more elaborate variations.

The examples from the ReAct paper illustrate the logic, advantages, and disadvantages described above:

ReAct's Prompt is:

"""

Explanation of the input variables for the Agent Prompt:

- tools: description of the toolset, one line per tool in the form "{tool.name}: {tool.description}"

- tool_names: list of tool names

- history: the history of the conversation between the user and the Agent (note that the intermediate ReAct rounds inside one agent chat are not counted as part of the conversation history)

- input: the user's question

- agent_scratchpad: the intermediate Action and Observation steps.

Each step is formatted as "\nObservation: {observation}\nThought:{action}"

and the result is passed in as agent_scratchpad (the Agent's thought record)

"""

agent_prompt = """

Answer the following questions as best you can. If necessary, you can use some tools appropriately. You have access to the following tools.

{tools}

Use the following format.

Question: the input question you must answer

Thought: you should always think about what to do and what tools to use.

Action: the action to take, should be one of {tool_names}

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can be repeated zero or more times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

history: {history}

Question: {input}

Thought: {agent_scratchpad}"""
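A minimal sketch of how these variables might be filled in at run time. It is not LangChain's implementation: the `Tool` record, the `render_prompt` helper, and the shortened `TEMPLATE` are hypothetical names introduced only for illustration, and the scratchpad layout (model text, then Observation, then a fresh "Thought:" cue) is one possible reading of the description above.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    # Hypothetical minimal tool record; real frameworks carry more fields.
    name: str
    description: str


# Shortened stand-in for the full prompt template (assumption, for brevity).
TEMPLATE = (
    "You have access to the following tools.\n{tools}\n\n"
    "Action: the action to take, should be one of [{tool_names}]\n\n"
    "Begin!\n\nhistory: {history}\n\nQuestion: {input}\n\n"
    "Thought: {agent_scratchpad}"
)


def render_prompt(tools, history, user_input, steps):
    """Fill the template; `steps` is a list of (llm_text, observation) pairs."""
    tool_lines = "\n".join(f"{t.name}: {t.description}" for t in tools)
    tool_names = ", ".join(t.name for t in tools)
    # Each finished step contributes the model's text, then the observation,
    # then a new "Thought:" cue so the model continues from there.
    scratchpad = "".join(
        f"{llm_text}\nObservation: {obs}\nThought: " for llm_text, obs in steps
    )
    return TEMPLATE.format(
        tools=tool_lines,
        tool_names=tool_names,
        history=history,
        input=user_input,
        agent_scratchpad=scratchpad,
    )
```

With an empty `steps` list the rendered prompt simply ends in "Thought: ", which is the cue the model completes in the first round.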

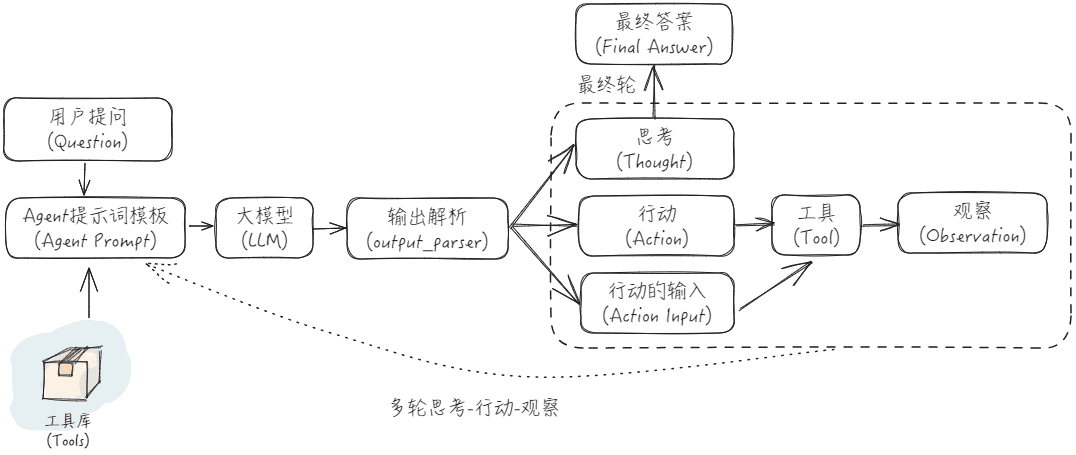

The ReAct application flowchart is shown below:

There is a good example on the Internet, which is referenced here for illustrative purposes [2]:

Suppose we have:

User question: "What is the average price of roses in the market today? If I sell them at a markup of 15%, how should I price them?"

Tools: {'bing-web-search': a tool for searching the web for open source information using Bing Search; 'llm-math': a tool for doing math with big models and Python}

Then the input for the first round of dialog is:

Answer the following questions as best you can. If necessary, you can use some tools appropriately. You have access to the following tools.

bing-web-search: a tool for searching the web for open source information using Bing Search

llm-math: a tool for doing math with big models and Python

Use the following format.

Question: the input question you must answer

Thought: you should always think about what to do and what tools to use.

Action: the action to take, should be one of [bing-web-search, llm-math]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can be repeated zero or more times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

history:

Question: What is the average price of roses in the market today? If I sell them at a markup of 15%, how should I price them?

Thought:

Get the output and parse it to get Thought, Action and Action Input:

Thought: I should use the search tool to find answers so I can quickly find the information I need.

Action: bing-web-search

Action Input: Average price of roses
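The parsing step could look roughly like the sketch below. It is an assumption about how one might do it with regular expressions, not the parser LangChain ships; `parse_react_output` and the two patterns are hypothetical names.

```python
import re

# Matches "Action: <tool>" followed (possibly after blank lines) by
# "Action Input: <text up to the end of that line>".
ACTION_RE = re.compile(r"Action\s*:\s*(.*?)\s*\n+\s*Action Input\s*:\s*(.*)")
FINAL_RE = re.compile(r"Final Answer\s*:\s*(.*)", re.DOTALL)


def parse_react_output(text):
    """Return ("final", answer) or ("action", tool_name, tool_input).

    Raises ValueError when the text follows neither shape.
    """
    final = FINAL_RE.search(text)
    if final:
        return ("final", final.group(1).strip())
    action = ACTION_RE.search(text)
    if action:
        return ("action", action.group(1).strip(), action.group(2).strip())
    raise ValueError(f"could not parse model output: {text!r}")
```

Raising on unparseable output, rather than guessing, lets the caller decide whether to retry the LLM or report an error to the user.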

Call the bing-web-search tool with the input "average price of roses" and get back the Observation: "According to online sources, each bouquet of roses in the U.S. costs $80.16." Then put the content above into ReAct's prompt template and start the second round of dialog input:

Answer the following questions as best you can. If necessary, you can use some tools appropriately. You have access to the following tools.

bing-web-search: a tool for searching the web for open source information using Bing Search

llm-math: a tool for doing math with big models and Python

Use the following format.

Question: the input question you must answer

Thought: you should always think about what to do and what tools to use.

Action: the action to take, should be one of [bing-web-search, llm-math]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can be repeated zero or more times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

history:

Question: What is the average price of roses in the market today? If I sell them at a markup of 15%, how should I price them?

Thought: I should use the search tool to find answers so I can quickly find the information I need.

Action: bing-web-search

Action Input: Average price of roses

Observation: According to online sources, each bouquet of roses in the U.S. costs $80.16.

Thought:

Get the output and parse it to get Thought, Action and Action Input:

Thought: I need to do the math to work out what the price would be with a 15% markup on top of that.

Action: llm-math

Action Input: 80.16*1.15
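The Action Input here is a plain arithmetic expression. As a rough stand-in for a calculator tool (this is not how the llm-math tool is implemented; `calculate` is a hypothetical helper), the expression can be evaluated with Python's `ast` module so that only numbers and basic operators are accepted:

```python
import ast
import operator

# Only plain arithmetic is allowed; names, calls, and attributes are rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def calculate(expression):
    """Evaluate an arithmetic expression such as "80.16*1.15"."""

    def walk(node):
        if isinstance(node, ast.Constant):
            # bool is a subclass of int, so exclude it explicitly.
            if isinstance(node.value, (int, float)) and not isinstance(node.value, bool):
                return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression, mode="eval").body)


price = calculate("80.16*1.15")
print(round(price, 3))  # 92.184
```

Rounding is applied only when printing, since the raw floating-point product may differ from 92.184 in the last digits.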

Call the llm-math tool with the input "80.16*1.15" and get back the Observation: "92.184". Then put the content above into ReAct's prompt template and start the third round of dialog input:

Answer the following questions as best you can. If necessary, you can use some tools appropriately. You have access to the following tools.

bing-web-search: a tool for searching the web for open source information using Bing Search

llm-math: a tool for doing math with big models and Python

Use the following format.

Question: the input question you must answer

Thought: you should always think about what to do and what tools to use.

Action: the action to take, should be one of [bing-web-search, llm-math]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can be repeated zero or more times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

history:

Question: What is the average price of roses in the market today? If I sell them at a markup of 15%, how should I price them?

Thought: I should use the search tool to find answers so I can quickly find the information I need.

Action: bing-web-search

Action Input: Average price of roses

Observation: According to online sources, each bouquet of roses in the U.S. costs $80.16.

Thought: I need to do the math to work out what the price would be with a 15% markup on top of that.

Action: llm-math

Action Input: 80.16*1.15

Observation: 92.184

Thought:

Get the output and parse it to get Thought and Final Answer:

Thought: I know the final answer.

Final Answer: If it is going to be sold at a markup of 15%, it should be priced at $92.184.
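The three rounds above can be sketched as a loop. This is a minimal illustration, not LangChain's AgentExecutor: `run_react`, `scripted_llm`, and the canned tool outputs are hypothetical stand-ins, and the prompt is cut down to the question plus the scratchpad.

```python
def run_react(question, llm, tools, max_steps=5):
    """Alternate LLM calls and tool calls until a Final Answer appears."""
    scratchpad = ""
    for _ in range(max_steps):
        prompt = f"Question: {question}\nThought: {scratchpad}"
        output = llm(prompt)
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        # Expect "... Action: <tool>\nAction Input: <input>" in the output.
        tool_name = output.split("Action:", 1)[1].split("\n", 1)[0].strip()
        tool_input = output.split("Action Input:", 1)[1].strip()
        observation = tools[tool_name](tool_input)
        # Append this round so the next prompt contains the whole trace.
        scratchpad += f"{output}\nObservation: {observation}\nThought: "
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(prompt):
    # Stand-in for a real model: picks a canned reply from what the
    # prompt already contains.
    if "Observation: 92.184" in prompt:
        return "I know the final answer.\nFinal Answer: It should be priced at $92.184."
    if "Observation:" in prompt:
        return "I need to do the math.\nAction: llm-math\nAction Input: 80.16*1.15"
    return "I should search.\nAction: bing-web-search\nAction Input: Average price of roses"


tools = {
    # Canned results copied from the walkthrough; no real search or math here.
    "bing-web-search": lambda query: "Each bouquet of roses in the U.S. is $80.16.",
    "llm-math": lambda expression: "92.184",
}

answer = run_react("How should I price roses at a 15% markup?", scripted_llm, tools)
print(answer)  # It should be priced at $92.184.
```

The loop makes three LLM calls and two tool calls for this question, matching the three rounds of dialog input shown above.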

LangChain already implements ReAct invocation [3]; you need to create a ReAct Agent, an AgentExecutor, and the tools. With a ReAct Agent we can do what was described earlier: connect different tools and call them according to the user's needs and the LLM's reasoning, improving Q&A performance. If you write your own ReAct-based Agent Chat, pay special attention to boundary conditions such as the LLM choosing a tool that does not exist, a tool call failing, or tool calls falling into an infinite loop, because these ultimately affect the quality of the interaction. From my own practice with ReAct Agent Chat, I have a few insights:

- Choose an LLM that has been aligned for Agent dialog, and preferably adapt the ReAct Prompt to that LLM;

- Describe each tool clearly; this reduces ambiguity between tool descriptions and helps avoid calling the wrong tool;

- Beware of multiple think-act-observe rounds making the input context too long; many LLMs get worse at understanding long context inputs;

- Even a single think-act-observe round requires 2 LLM calls and 1 tool call, which affects response time. To simplify the ReAct flow, you can use intent recognition plus a tool call directly and let the tool output the final result, without feeding it back to the LLM for a summarized answer. The disadvantage is that the answer is less polished.
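For the boundary conditions mentioned before this list (a nonexistent tool, a failing tool call, an endless loop), one possible guard is sketched below. The helper names `safe_tool_call` and `is_repeating` and the repeat limit of 2 are assumptions for illustration; the idea is to turn every failure into an Observation string the LLM can react to, and to detect when the same call keeps being repeated.

```python
def safe_tool_call(tools, name, tool_input):
    """Run a tool and always return an observation string, never raise."""
    if name not in tools:
        known = ", ".join(sorted(tools))
        return f"Error: unknown tool '{name}'. Available tools: {known}"
    try:
        return str(tools[name](tool_input))
    except Exception as exc:
        # Report the failure to the LLM instead of crashing the chat.
        return f"Error: tool '{name}' failed: {exc}"


def is_repeating(past_calls, name, tool_input, limit=2):
    """True when the same (tool, input) pair was already tried `limit` times."""
    return past_calls.count((name, tool_input)) >= limit
```

A caller would typically combine `is_repeating` with a hard cap on the number of rounds, and stop with a fallback answer when either check triggers.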

LangChain provides a number of toolkits, which can be found in [4].

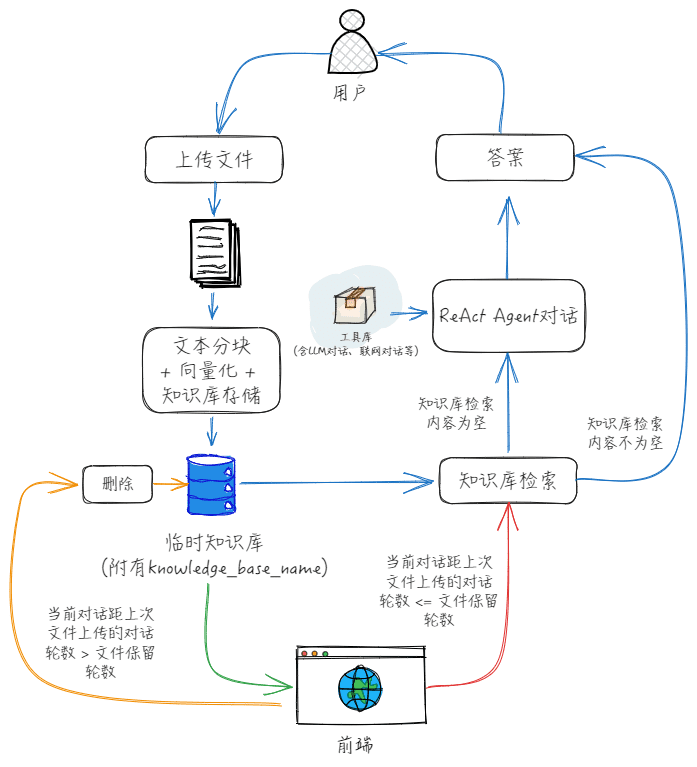

From the above, we can see that Action and Action Input are the name of the tool and the input to the tool. If we want to have a conversation about a temporarily uploaded file, can we just add another tool? In practice this does not work well, because Action Input is the tool input that the LLM derives from the query, while the "dialog about a temporarily uploaded file" function needs the file location or the file content. For this reason, the "dialog about a temporarily uploaded file" function can be handled separately, as I have drawn in the figure.

The specific front-end and back-end cooperation process is as follows:

1. After the user uploads a file, the front end first creates a temporary knowledge base and uploads the file to it, while initializing the number of file dialog rounds to 0 and recording the name of the temporary knowledge base in a variable;

2. In each subsequent round of dialog, the knowledge base name is passed into Agent Chat, which follows the flow above; after each round, the number of file dialog rounds is updated;

3. When the number of file dialog rounds exceeds the number of file retention rounds, or the user manually clears the context, the front end deletes the temporary knowledge base and clears the variable holding its name.
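The three steps above can be sketched as a small piece of session state. The article describes this as front-end logic; the Python below only illustrates the bookkeeping, and `FileChatSession`, its method names, and the default of 3 retention rounds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FileChatSession:
    """Tracks one temporary knowledge base and how many rounds have used it."""

    retention_rounds: int = 3  # hypothetical default
    kb_name: Optional[str] = None
    rounds: int = 0

    def on_upload(self, kb_name):
        # Step 1: remember the temporary knowledge base and reset the counter.
        self.kb_name = kb_name
        self.rounds = 0

    def on_round_finished(self):
        # Step 2: count the round. Step 3: drop the knowledge base once the
        # round count exceeds the retention limit.
        if self.kb_name is None:
            return
        self.rounds += 1
        if self.rounds > self.retention_rounds:
            self.clear()

    def clear(self):
        # Also called when the user manually clears the context. A real
        # front end would delete the knowledge base on the server here.
        self.kb_name = None
        self.rounds = 0
```

In each round the caller would pass `session.kb_name` into Agent Chat; when it is `None`, the chat proceeds without a file.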

References

[1] ReAct: Synergizing Reasoning and Acting in Language Models, official presentation: https://react-lm.github.io/

[2] LangChain dry run (1): How exactly does AgentExecutor drive models and tools to accomplish tasks? - Huang Jia's article - Zhihu: https://zhuanlan.zhihu.com/p/661244337

[3] ReAct, LangChain documentation: https://python.langchain.com/docs/modules/agents/agent_types/react/

[4] Agent Toolkit, LangChain documentation: https://python.langchain.com/docs/integrations/toolkits/

[5] "LLM+Search Rewrite" 10 papers at a glance - Articles by Obsessed with Search and Broad Push - Zhihu: https://zhuanlan.zhihu.com/p/672357196

[6] MultiQueryRetriever, LangChain documentation: https://python.langchain.com/docs/modules/data_connection/retrievers/MultiQueryRetriever/

[7] HypotheticalDocumentEmbedder, LangChain documentation: https://github.com/langchain-ai/langchain/blob/master/cookbook/hypothetical_document_embeddings.ipynb

[8] Big Model Application Development, Must-See RAG Advanced Tips - Articles by Rainfly - Zhihu: https://zhuanlan.zhihu.com/p/680232507

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
