LLM API Engine: Rapid API Generation and Deployment through Natural Language

General Introduction

LLM API Engine is an open source project designed to help developers rapidly build and deploy AI-powered APIs. The project combines large language models (LLMs) with intelligent web crawling so that users can create custom APIs from natural language descriptions. Key features include automatic data structure generation, real-time data updates, structured data output, and flexible deployment options. LLM API Engine uses a modular architecture that supports deploying API endpoints on multiple platforms, such as Cloudflare Workers, Vercel Edge Functions, and AWS Lambda.

Feature List

- Text-to-API conversion: Generates APIs from simple natural language descriptions.

- Automatic data structure generation: Generates data structures automatically using OpenAI models.

- Intelligent web crawling: Crawls web page data using Firecrawl.

- Real-time data updates: Supports scheduled crawls and real-time data updates.

- Instant API deployment: Deploys API endpoints rapidly.

- Structured data output: Validates output data against a JSON Schema.

- Caching and storage architecture: Uses Redis for caching and storage.
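The source does not show how the project validates its output, so the following is only an illustrative sketch of what checking LLM-extracted data against a JSON Schema involves. It hand-rolls a small subset of JSON Schema (the `string`, `number`, `boolean`, `array`, and `object` types plus `properties` and `required`); the `Schema` type and `validate` function are hypothetical names, and a real validator would handle many more keywords (`$ref`, `enum`, `format`, and so on).

```typescript
// A deliberately small subset of JSON Schema (hypothetical, for illustration):
// primitive types, arrays with "items", and objects with "properties"/"required".
type Schema =
  | { type: "string" }
  | { type: "number" }
  | { type: "boolean" }
  | { type: "array"; items: Schema }
  | { type: "object"; properties: { [key: string]: Schema }; required?: string[] };

// Returns human-readable errors; an empty array means the value conforms to the schema.
function validate(value: unknown, schema: Schema, path: string = "$"): string[] {
  switch (schema.type) {
    case "string":
    case "number":
    case "boolean":
      // typeof yields the same names as these three JSON Schema primitive types.
      return typeof value === schema.type
        ? []
        : [`${path}: expected ${schema.type}, got ${typeof value}`];
    case "array": {
      if (!Array.isArray(value)) return [`${path}: expected array`];
      const errors: string[] = [];
      // Validate every element against the single "items" schema.
      for (let i = 0; i < value.length; i++) {
        errors.push(...validate(value[i], schema.items, `${path}[${i}]`));
      }
      return errors;
    }
    case "object": {
      if (typeof value !== "object" || value === null || Array.isArray(value)) {
        return [`${path}: expected object`];
      }
      const obj = value as { [key: string]: unknown };
      const errors: string[] = [];
      // Report required properties that are absent.
      for (const key of schema.required ?? []) {
        if (!(key in obj)) errors.push(`${path}.${key}: missing required property`);
      }
      // Recursively validate the properties that are present.
      for (const key in schema.properties) {
        if (key in obj) errors.push(...validate(obj[key], schema.properties[key], `${path}.${key}`));
      }
      return errors;
    }
    default:
      // Unreachable for well-typed schemas; guards against malformed schemas at runtime.
      return [`${path}: unsupported schema`];
  }
}
```

For example, validating `{ title: "A" }` against an object schema that requires `title` and `price` yields a single error, `"$.price: missing required property"`, which an API could use to reject or retry a malformed extraction.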

Usage Guide

Installation process

- Clone the repository:

git clone https://github.com/developersdigest/llm-api-engine.git

cd llm-api-engine

- Install dependencies:

npm install

- Create an environment variable file: create a .env file in the project root directory and add the following variables:

OPENAI_API_KEY=your_openai_key

FIRECRAWL_API_KEY=your_firecrawl_key

UPSTASH_REDIS_REST_URL=your_redis_url

UPSTASH_REDIS_REST_TOKEN=your_redis_token

NEXT_PUBLIC_API_ROUTE=http://localhost:3000

- Run the development server:

npm run dev

Open http://localhost:3000 in your browser to view the app.
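If the app fails to start or API generation errors out, an unset key in .env is one thing worth ruling out. The sketch below is a hypothetical startup check, not part of the project: the variable names come from the list above, while treating all five as required and flagging values that still begin with the `your_` placeholder prefix are assumptions.

```typescript
// Variable names from the setup instructions; requiring all five is an assumption.
const REQUIRED_ENV_VARS = [
  "OPENAI_API_KEY",
  "FIRECRAWL_API_KEY",
  "UPSTASH_REDIS_REST_URL",
  "UPSTASH_REDIS_REST_TOKEN",
  "NEXT_PUBLIC_API_ROUTE",
] as const;

// Hypothetical helper: returns the names that are unset, empty,
// or still hold a "your_..." placeholder copied from the example .env.
function missingEnvVars(env: { [key: string]: string | undefined }): string[] {
  const missing: string[] = [];
  for (const name of REQUIRED_ENV_VARS) {
    const value = env[name];
    if (!value || value.startsWith("your_")) missing.push(name);
  }
  return missing;
}
```

In server-side code you could call `missingEnvVars(process.env)` once at startup and throw an error listing the returned names if the array is non-empty, so a misconfiguration surfaces immediately rather than on the first API request.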

Workflow

- Create API endpoints:

  - Visit the application homepage and click the "Create New API" button.

  - Enter the API name and description, and select the type of data source (e.g., web crawling).

  - Describe the required data structure and crawling rules in natural language.

  - Click the "Generate API" button; the system automatically generates the API endpoint and its data structure.

- Configure and manage APIs:

  - View the list of created APIs on the "API Management" screen.

  - Click an API to open its details page, where you can edit the API configuration, view crawl logs, and test the API endpoint.

  - API configurations are stored in Redis and can be changed or updated at any time.

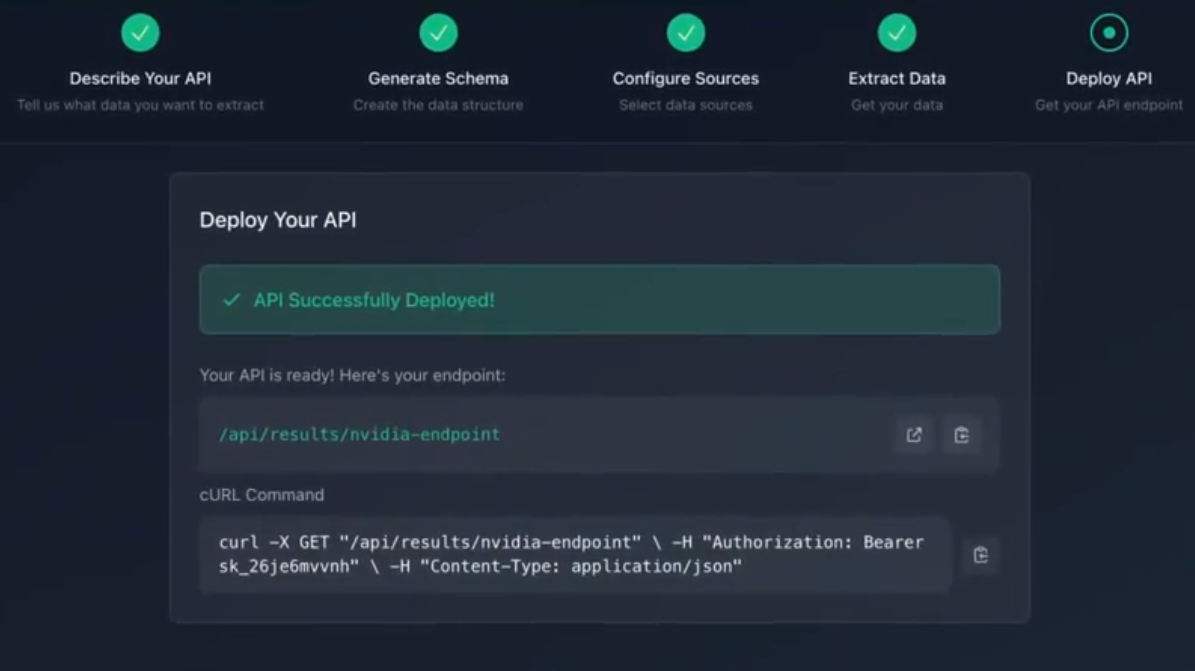

- Deploy API endpoints:

  - Select a deployment platform (e.g., Vercel or AWS Lambda).

  - Configure the deployment parameters required by the platform and click the "Deploy" button.

  - When deployment is complete, view the endpoint URL and status on the "API Management" screen.

- Use API endpoints:

  - Call the API endpoints from your application to retrieve structured data.

  - Endpoints are accessed over HTTP and return data in JSON format.
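The source does not document endpoint paths or response shapes, so the following is a minimal client-side sketch under stated assumptions: the endpoint URL is whatever the "API Management" screen shows for your deployment, and `fetchStructuredData` and `FetchLike` are hypothetical names. Taking the fetch function as a parameter keeps the helper testable and lets you pass the global `fetch` available in browsers, Node.js 18+, and edge runtimes.

```typescript
// Minimal fetch-like signature, so the global fetch or a test double can be passed in.
type FetchLike = (url: string) => Promise<{
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}>;

// Hypothetical helper: GET a deployed endpoint and return its parsed JSON body,
// surfacing non-2xx responses as errors instead of returning an error payload.
async function fetchStructuredData(endpointUrl: string, fetchImpl: FetchLike): Promise<unknown> {
  const response = await fetchImpl(endpointUrl);
  if (!response.ok) {
    throw new Error(`Request to ${endpointUrl} failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

For example, `await fetchStructuredData("https://example.com/api/top-hn-posts", fetch)` (a placeholder URL) would resolve to the parsed JSON body. Because the result is typed `unknown`, callers should check its shape, for instance against the API's schema, before using it.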

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
