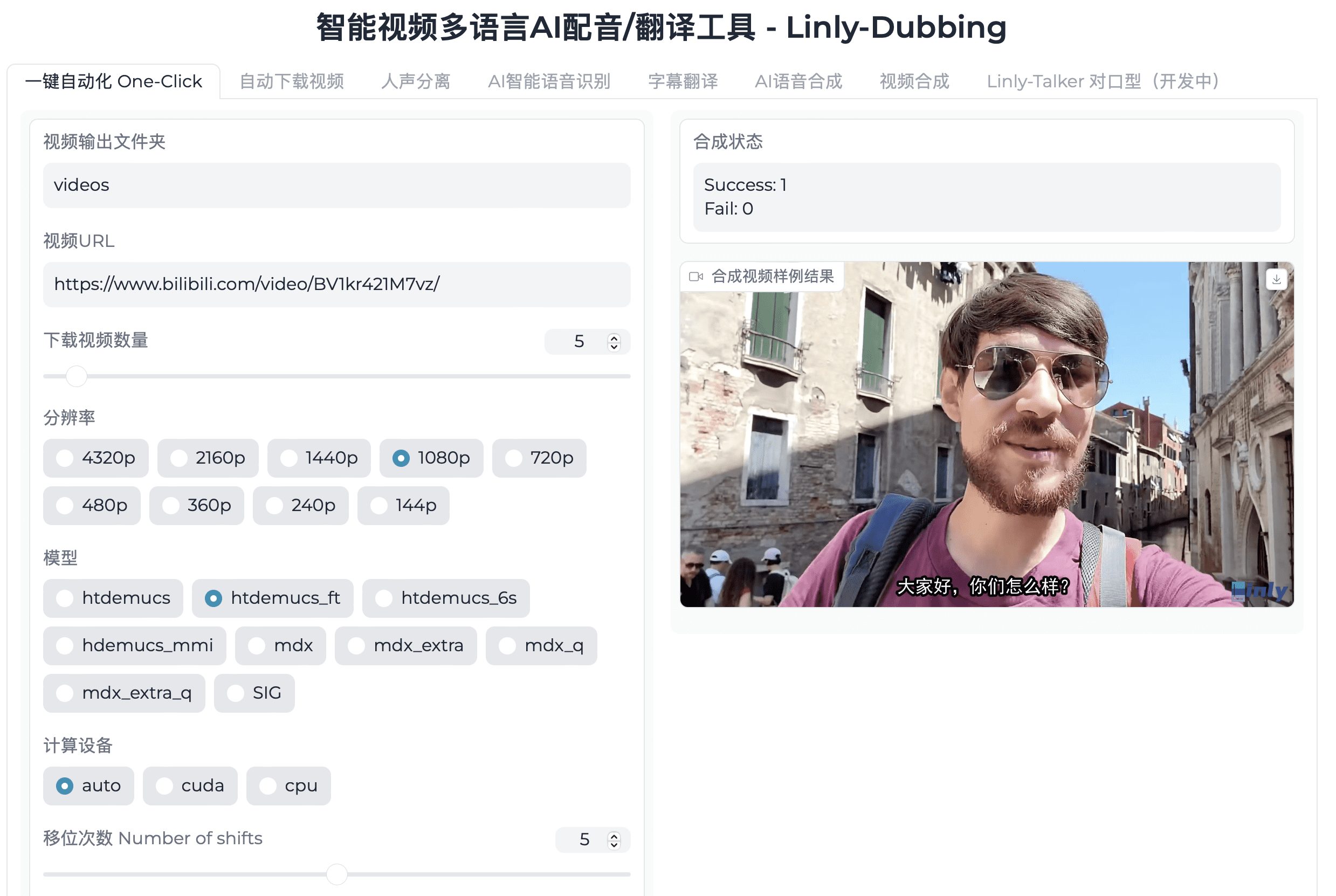

Linly-Dubbing: An Intelligent Multilingual AI Video Dubbing and Translation Tool

General Introduction

Linly-Dubbing is an intelligent multilingual AI dubbing and translation tool. It integrates several AI technologies to provide high-quality video dubbing and subtitle translation in multiple languages. The tool is particularly suited to scenarios such as international education and global content localization, where teams need to distribute their content worldwide.

Function List

- Multi-language support: Provides dubbing and subtitle translation in Chinese and many other languages to meet globalization needs.

- AI speech recognition: Converts speech to text and identifies speakers using AI models.

- Large Language Model Translation: Uses leading large language models (e.g., GPT) to translate quickly and accurately while keeping the output professional and natural.

- AI voice cloning: Uses voice cloning technology to generate a voice that closely matches the one in the original video, preserving emotion and intonation.

- Digital human lip-sync technology: Aligns the dubbed audio with the speaker's on-screen mouth movements, making the result more realistic and engaging.

- Flexible Uploading and Translation: Users can upload videos and choose their own target language and translation standard, allowing for personalization and flexibility.

- Regular updates: Continuously introduces the latest models to stay at the forefront of dubbing and translation.

Usage Guide

Installation Process

- Clone the repository: First, clone the Linly-Dubbing repository to your local machine and initialize the submodules.

      git clone https://github.com/Kedreamix/Linly-Dubbing.git --depth 1
      cd Linly-Dubbing
      git submodule update --init --recursive

- Installation of dependencies: Create a new Python environment and install the required dependencies.

      conda create -n linly_dubbing python=3.10 -y
      conda activate linly_dubbing
      # Skip this cd if you are already inside the repository directory
      cd Linly-Dubbing/
      conda install ffmpeg==7.0.2 -c conda-forge
      python -m pip install --upgrade pip
      pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple
      pip install torch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 --index-url https://download.pytorch.org/whl/cu118
      pip install -r requirements.txt
      pip install -r requirements_module.txt

- Configuring Environment Variables: Create a .env file in the project root directory and fill in the necessary environment variables.

      OPENAI_API_KEY=sk-xxx
      MODEL_NAME=gpt-4
      HF_TOKEN=your_hugging_face_token

- Running the application: Download the required models and launch the WebUI.

      bash scripts/download_models.sh
      python webui.py
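
Before launching the WebUI, it can help to confirm that the .env file actually contains the three variables listed above. The following is a minimal sketch using only the Python standard library. The helper names `parse_env` and `missing_keys` are hypothetical and are not part of Linly-Dubbing, and the parser assumes simple `KEY=VALUE` lines rather than the full dotenv syntax.

```python
from pathlib import Path

# Variable names taken from the .env example above.
REQUIRED_KEYS = ("OPENAI_API_KEY", "MODEL_NAME", "HF_TOKEN")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], required=REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")  # assumed to sit in the project root
    text = env_path.read_text(encoding="utf-8") if env_path.exists() else ""
    missing = missing_keys(parse_env(text))
    if missing:
        print("Missing from .env: " + ", ".join(missing))
    else:
        print("All required variables are set.")
```

Run it from the project root; it only reads the file and never prints the secret values.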

Usage Process

- Upload Video: Users can upload video files to be dubbed or translated through the WebUI interface.

- Select language and standard: After uploading the video, the user selects the target language for translation and the dubbing standard.

- Generate voiceovers and subtitles: The system automatically performs speech recognition, translation, and dubbing generation, and produces the subtitle files at the same time.

- Download results: Users can download the generated dubbed video and subtitle files for subsequent editing and use.
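
To make the subtitle step more concrete, here is a minimal sketch of how timed text segments can be written out in the common SubRip (.srt) format. The segment structure (dictionaries with `start`, `end`, and `text`, times in seconds) is an assumption for illustration and is not taken from Linly-Dubbing's code. The source also does not state which subtitle format the tool produces.

```python
def format_timestamp(seconds: float) -> str:
    """Convert seconds to the SRT timestamp form HH:MM:SS,mmm."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments: list[dict]) -> str:
    """Render segments (hypothetical shape: start, end, text) as SRT text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Each cue: running index, time range, then the subtitle text.
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # Cues are separated by a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    demo = [
        {"start": 0.0, "end": 2.5, "text": "Hello, everyone."},
        {"start": 2.5, "end": 5.0, "text": "Welcome to the course."},
    ]
    print(to_srt(demo))
```

A file written this way can be opened in most video editors, which fits the "subsequent editing" use mentioned above.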

Main Functions

- Automatic video download: Use the yt-dlp utility to download video and audio in a variety of formats and resolution options.

- Vocal separation: Separates vocals from the backing track using Demucs and UVR5, producing high-quality backing tracks and vocal extracts.

- AI speech recognition: Accurate speech recognition and subtitle generation using WhisperX and FunASR, with support for multi-speaker recognition.

- Large Language Model Translation: High-quality multilingual translations combining the OpenAI API and the Qwen model.

- AI speech synthesis: Uses Edge TTS and CosyVoice to generate natural, smooth speech output, with support for multiple languages and speech styles.

- Video Processing: Provides functions such as adding subtitles, inserting background music, adjusting volume, and modifying playback speed to personalize the video content.

- Digital human lip-sync technology: Performs digital human lip synchronization through Linly-Talker to make the video look more professional and improve the viewing experience.
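
As an illustration of the playback-speed adjustment mentioned under Video Processing, the sketch below builds an ffmpeg command that changes audio tempo with the `atempo` filter. This is an assumption about one way to do it with the ffmpeg build installed earlier, not a description of how Linly-Dubbing implements it. The function names and file names are hypothetical. Because older ffmpeg builds limit each `atempo` instance to the range 0.5 to 2.0, larger changes are split into a chain of filters.

```python
def atempo_chain(speed: float) -> str:
    """Build an ffmpeg filter string for the given speed factor.

    Each atempo instance is kept within [0.5, 2.0]; factors outside
    that range are expressed as several filters chained with commas.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    factors = []
    remaining = speed
    while remaining > 2.0:
        factors.append(2.0)
        remaining /= 2.0
    while remaining < 0.5:
        factors.append(0.5)
        remaining /= 0.5
    factors.append(remaining)
    return ",".join(f"atempo={factor:g}" for factor in factors)


def speed_command(src: str, dst: str, speed: float) -> list[str]:
    """Return an ffmpeg argument list that re-times the audio of src into dst."""
    return [
        "ffmpeg", "-y",                      # overwrite dst if it exists
        "-i", src,
        "-filter:a", atempo_chain(speed),
        "-vn",                               # audio only, drop any video stream
        dst,
    ]


if __name__ == "__main__":
    # Hypothetical file names; pass the list to subprocess.run to execute it.
    print(" ".join(speed_command("dub.wav", "dub_fast.wav", 1.25)))
```

Building the command as a list keeps file names with spaces safe when it is later passed to `subprocess.run`.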

Linly-Dubbing one-click installation package

Quark: https://pan.quark.cn/s/f526eb488113

Baidu: https://pan.baidu.com/s/1aapXpIc7qwO5h5sDzF9dLA?pwd=np7w

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
