MediaTek Open-Sources Traditional Chinese Multimodal Models and a Taiwanese-Accent Speech Synthesis Model

MediaTek Research recently announced that it has open-sourced two multimodal models optimized for Traditional Chinese: Llama-Breeze2-3B and Llama-Breeze2-8B. The two models target different computing platforms, mobile phones and PCs respectively. Both support function calling, which lets them use external tools flexibly and broadens their application scenarios. MediaTek has also open-sourced two further projects. The first is an Android application built on Llama-Breeze2-3B. The second is BreezyVoice, a speech synthesis model that generates natural Taiwanese-accented speech. Together, these releases show the breadth of MediaTek's push into on-device AI.

Llama-Breeze2 Series: Multimodal Models for Mobile Phones and PCs

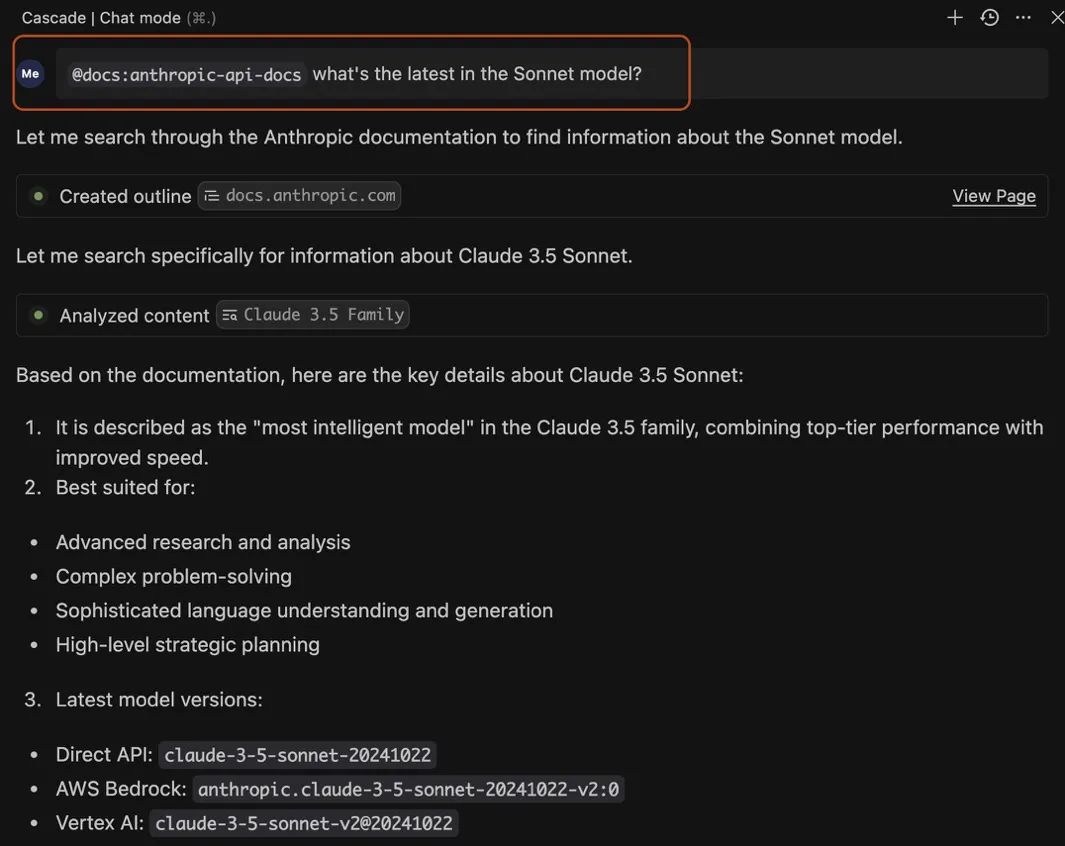

MediaTek Innovation Base (MediaTek Research) has open-sourced the Llama-Breeze2 series of Traditional Chinese multimodal base models in two sizes:

- Llama-Breeze2-3B is a lightweight version that can run on mobile devices.
- Llama-Breeze2-8B is a larger version that delivers stronger performance on PCs.

According to MediaTek, the series is proficient in Traditional Chinese and also integrates advanced features such as multimodality and function calling. This allows the models to understand image content and call external tools to carry out complex tasks.
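
A back-of-the-envelope estimate helps explain why the 3B model is the one aimed at phones. The sketch below computes weights-only memory from nominal parameter counts taken from the model names. It ignores activations, the KV cache, and runtime overhead. The real checkpoints may also be somewhat larger because of their vision components. The 4-bit case assumes quantized weights, which the article does not mention.

```python
# Rough, weights-only memory estimate for the two Llama-Breeze2 sizes.
# Assumptions: nominal parameter counts (3e9 and 8e9) read off the model names;
# activations, KV cache, vision components and runtime overhead are ignored.

NOMINAL_PARAMS = {"Llama-Breeze2-3B": 3e9, "Llama-Breeze2-8B": 8e9}


def weight_gb(num_params: float, bits_per_param: int) -> float:
    """Decimal gigabytes needed just to hold the weights at a given precision."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        for bits in (16, 4):  # 16-bit baseline vs. an assumed 4-bit quantization
            print(f"{name} @ {bits}-bit: {weight_gb(params, bits):.1f} GB")
```

The estimates under these assumptions are:

- The 3B model needs about 6 GB at 16-bit precision, or 1.5 GB at 4-bit.
- The 8B model needs about 16 GB at 16-bit precision, or 4 GB at 4-bit.

This is consistent with the phone/PC split.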

To further promote mobile AI applications, MediaTek also developed and open-sourced an Android application built around the Llama-Breeze2-3B model. The app is designed to extend the capabilities of a phone's AI assistant, for example with image content recognition and external tool invocation. At the same time, MediaTek released BreezyVoice, a speech synthesis model that can produce authentic Taiwanese-accented speech. For the models and the app, the open-source release includes the model weights and part of the code needed to run them, making it easy for developers to study and build on them.

Llama-Breeze2 Technology Explained: Built on Llama 3, Combining Traditional Chinese, Vision, and Tool-Calling Capabilities

At its core, Llama-Breeze2 builds on Meta's open-source Llama 3 large language model. MediaTek used additional Traditional Chinese corpora to strengthen the model's understanding of the language. It also integrated a vision-language model and function calling. As a result, the Llama-Breeze2 series has three main features:

- Traditional Chinese optimization
- Image comprehension
- The ability to call external tools

On Traditional Chinese capability, MediaTek provided a comparison with the Llama 3 3B Instruct model, which has the same parameter scale. Both models were asked to write a short passage about Taiwan's night markets:

- Llama-Breeze2-3B accurately listed well-known night markets such as Shihlin Night Market, Raohe Night Market, and Luodong Night Market.
- Llama 3 3B Instruct correctly named only Shihlin Night Market. It also invented two fictitious ones: "Telecom Night Market" and "World Trade Night Market".

According to MediaTek, this result highlights the Llama-Breeze2 family's advantage in understanding Traditional Chinese.
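
A comparison like this can be made repeatable by checking each name a model produces against a reference list. The sketch below is purely illustrative. `KNOWN_NIGHT_MARKETS` is a hypothetical, deliberately tiny reference set that contains only the three markets the article confirms as real. The sketch also assumes the names have already been extracted from each model's output.

```python
# Illustrative hallucination check for the night-market comparison.
# Assumption: KNOWN_NIGHT_MARKETS is a hypothetical reference set holding only
# the markets the article names as real; a real evaluation needs a full list.

KNOWN_NIGHT_MARKETS = {
    "Shihlin Night Market",
    "Raohe Night Market",
    "Luodong Night Market",
}


def split_mentions(mentions, known=KNOWN_NIGHT_MARKETS):
    """Partition extracted names into (verified, unverified) lists, keeping order."""
    verified = [m for m in mentions if m in known]
    unverified = [m for m in mentions if m not in known]
    return verified, unverified


if __name__ == "__main__":
    # Names as quoted in the article for each model's output.
    breeze = ["Shihlin Night Market", "Raohe Night Market", "Luodong Night Market"]
    llama = ["Shihlin Night Market", "Telecom Night Market", "World Trade Night Market"]
    for model, names in (("Llama-Breeze2-3B", breeze), ("Llama 3 3B Instruct", llama)):
        ok, suspect = split_mentions(names)
        print(f"{model}: {len(ok)} verified, unverified: {suspect}")
```

Names missing from the reference set are reported as "unverified" rather than "fabricated". An incomplete reference list cannot prove that a place does not exist.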

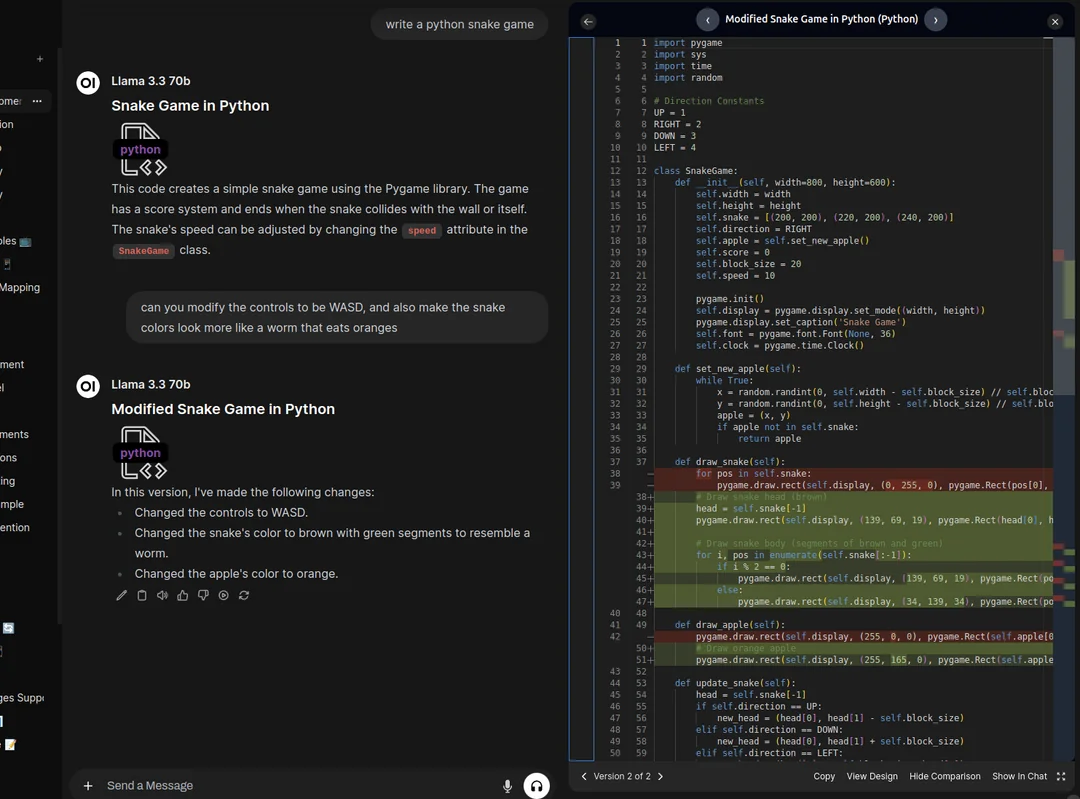

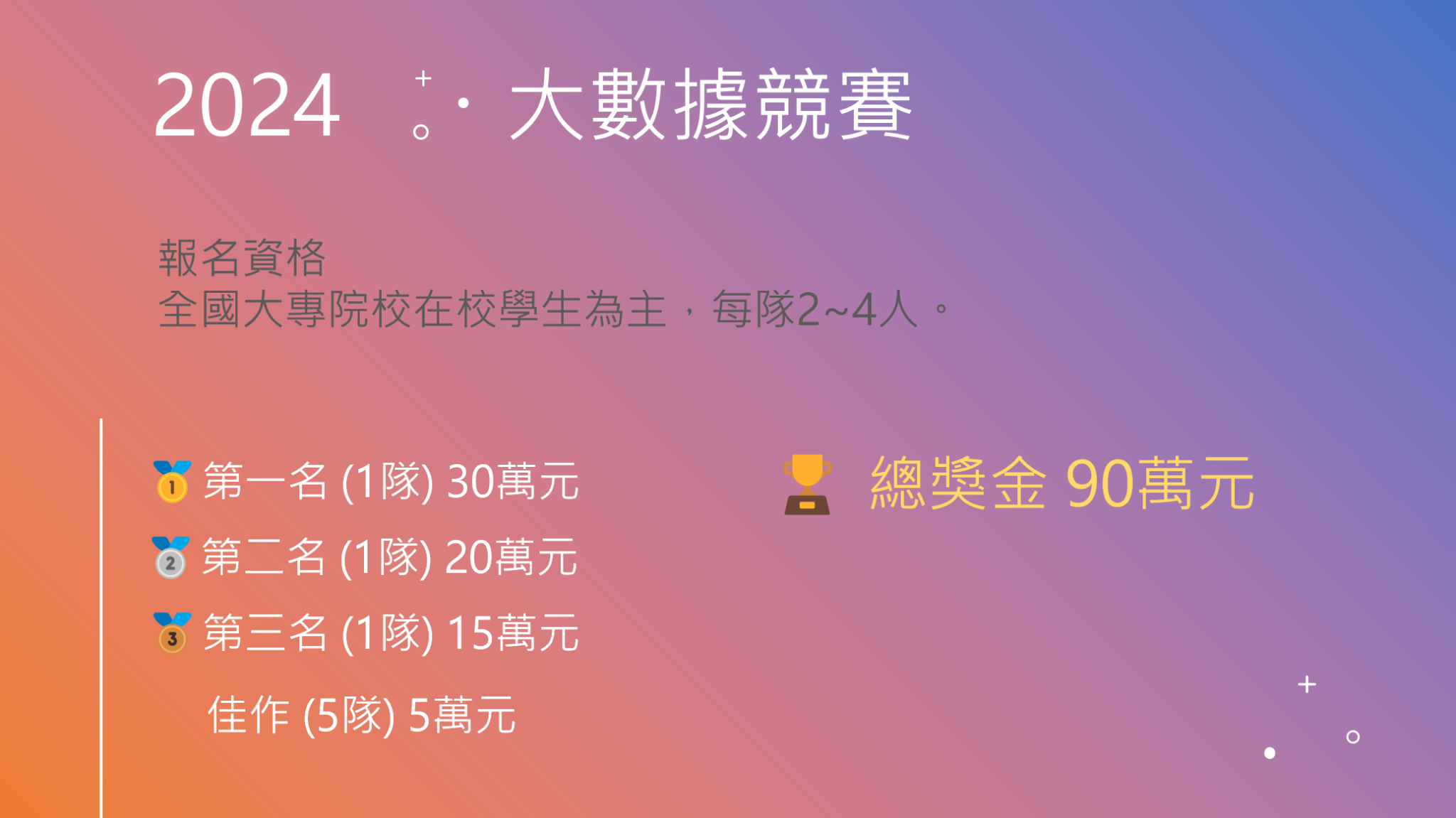

On multimodal capability, Llama-Breeze2-3B processes images as well as text. It can analyze content such as charts, images containing text (via optical character recognition, OCR), and photos of points of interest. The model can interpret what an image shows and respond accordingly, as in the following example:

User Question: What is the total amount of prize money available to the top three finishers?

Llama-Breeze2-8B's reply: According to the information in the image, the first-place prize is 300,000 RMB, second place is 200,000 RMB, and third place is 150,000 RMB. Adding these together, the top three prizes total 650,000 RMB.

The Llama-Breeze2 models also support function calling, which lets them call external tools to complete more complex tasks. For example, when a user asks about the weather, the model can call a weather service's API to fetch the latest conditions in real time. It then replies to the user with the results, giving a smarter, more interactive experience.
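
The flow described above can be sketched as follows: the model decides to call a tool, the application runs it, and the result goes back to the model. This is a generic illustration, not Llama-Breeze2's actual interface. The following are all hypothetical:

- the JSON call format;
- the `TOOLS` registry;
- the `get_weather` stub, which returns fixed demo data instead of calling a real weather API.

The model's real tool-call format is defined by its own prompt template.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool: a stub standing in for a real weather API call.
def get_weather(city: str) -> Dict[str, Any]:
    """Return fixed demo data; a real tool would query a weather service."""
    return {"city": city, "condition": "sunny", "temp_c": 25}


# Hypothetical registry of the tools the application chooses to expose.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_call(model_output: str) -> str:
    """Parse a JSON tool call emitted by the model, run it, return a JSON result."""
    call = json.loads(model_output)
    fn = TOOLS.get(call["name"])
    if fn is None:
        raise ValueError(f"unknown tool: {call['name']}")
    result = fn(**call.get("arguments", {}))
    # The returned string would be fed back to the model as a tool message,
    # so the model can phrase the final answer for the user.
    return json.dumps(result)


if __name__ == "__main__":
    emitted = '{"name": "get_weather", "arguments": {"city": "Taipei"}}'
    print(run_tool_call(emitted))
```

Keeping tools in an explicit registry has two benefits. The application only executes functions it has deliberately exposed. An unrecognized tool name fails loudly instead of being run.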

Android App Example: Llama-Breeze2-3B Powering Mobile AI Apps

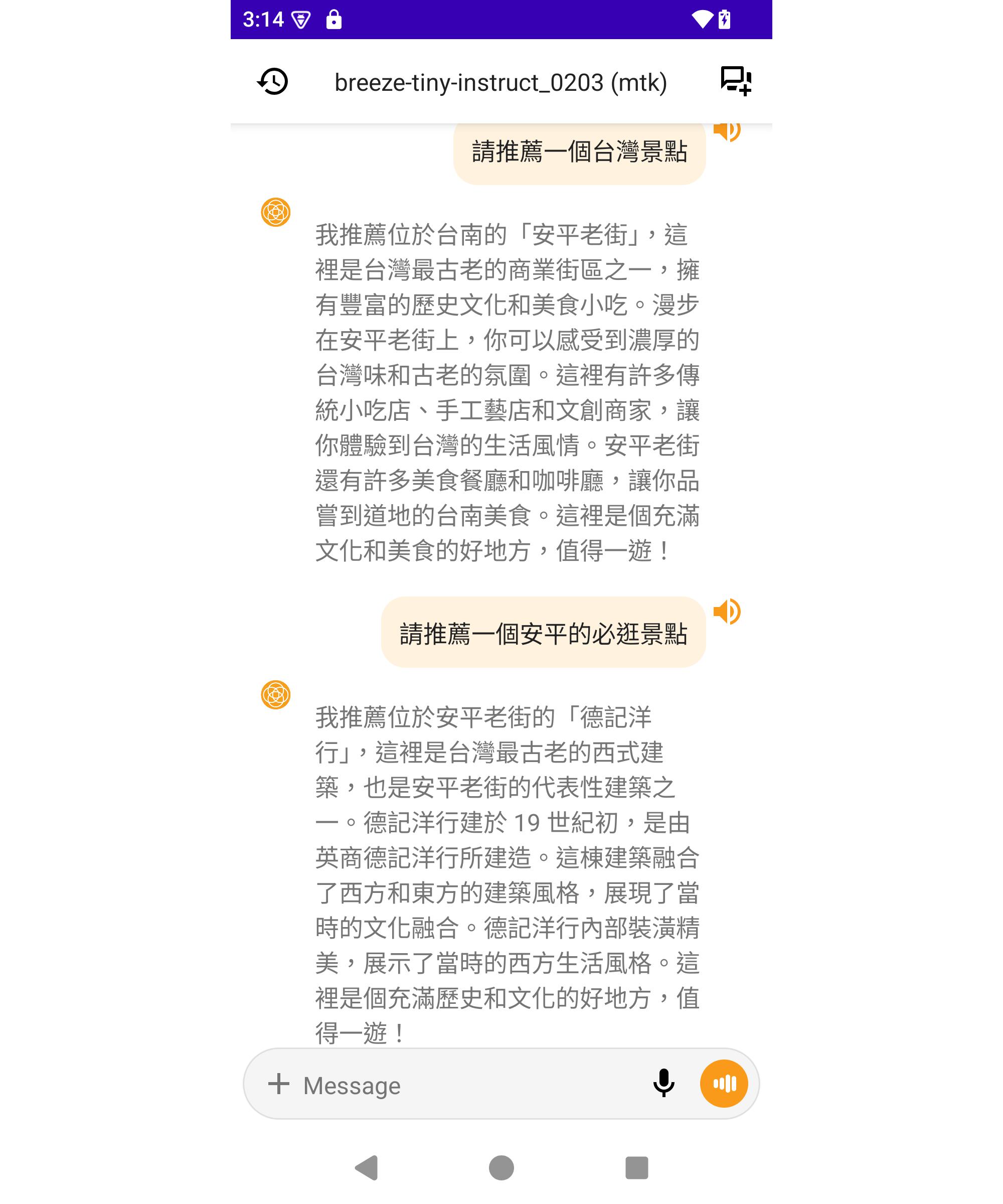

Beyond the two multimodal language models, MediaTek Innovation Base has also open-sourced an Android app that can be deployed directly on mobile phones. Built on Llama-Breeze2-3B, the app can act as a personal AI assistant for tasks such as real-time translation and attraction recommendations, as shown in the figure above. The app also includes speech generation. Users enter text and the model produces a natural, fluent spoken response, which is useful in scenarios such as smart navigation.
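
The app's text-in, voice-out flow amounts to chaining a language model with a speech synthesizer. The sketch below shows that composition using two hypothetical interfaces, `TextGenerator` and `SpeechSynthesizer`. The stub classes stand in for the on-device model and a TTS engine. None of these names come from MediaTek's app.

```python
from typing import Protocol, Tuple


class TextGenerator(Protocol):
    """Hypothetical interface for the on-device language model."""

    def generate(self, prompt: str) -> str: ...


class SpeechSynthesizer(Protocol):
    """Hypothetical interface for a text-to-speech engine."""

    def synthesize(self, text: str) -> bytes: ...


class VoiceAssistant:
    """Text in, spoken reply out: run the LLM first, then the TTS engine."""

    def __init__(self, llm: TextGenerator, tts: SpeechSynthesizer) -> None:
        self.llm = llm
        self.tts = tts

    def respond(self, user_text: str) -> Tuple[str, bytes]:
        reply = self.llm.generate(user_text)
        return reply, self.tts.synthesize(reply)


# Demo stubs only; real backends would wrap an actual LLM and TTS model.
class EchoLLM:
    def generate(self, prompt: str) -> str:
        return f"You said: {prompt}"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # placeholder bytes instead of audio samples


if __name__ == "__main__":
    assistant = VoiceAssistant(EchoLLM(), FakeTTS())
    text, audio = assistant.respond("Recommend an attraction in Taipei")
    print(text, len(audio))
```

`VoiceAssistant` depends only on the two interfaces. Either backend, such as a different LLM or TTS model, can therefore be swapped without changing the assistant itself.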

BreezyVoice Speech Synthesis Model: Authentic Taiwanese Accents from a Five-Second Audio Sample

As part of this open-source release, MediaTek Innovation Base also introduced BreezyVoice, a speech synthesis model trained specifically on Traditional Chinese speech. Its lightweight architecture lets it quickly generate highly realistic speech from just 5 seconds of sample audio. BreezyVoice can serve as the speech output component of an AI assistant, providing a more natural, interactive experience. According to MediaTek, BreezyVoice already runs smoothly on laptops. It can also be paired with any large language model (LLM) or speech-to-text system to broaden its applications.
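
BreezyVoice works from a short reference recording, so an application might check a clip's length before passing it on. The sketch below uses Python's standard `wave` module to measure a WAV file's duration.

- Treating 5 seconds as a minimum is an assumption based on the figure quoted in the article.
- BreezyVoice's actual input requirements (sample rate, channel count, maximum length) are not checked.
- `make_silent_wav` is a hypothetical helper that exists only to make the example self-contained.

```python
import io
import wave

# Assumption: 5 s minimum, taken from the article's "5 seconds of sample audio";
# BreezyVoice's real input requirements are not verified here.
MIN_REFERENCE_SECONDS = 5.0


def wav_duration_seconds(source) -> float:
    """Return the duration of a WAV file given a path or a binary file object."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_usable_reference(source, min_seconds: float = MIN_REFERENCE_SECONDS) -> bool:
    """True if the clip is at least `min_seconds` long."""
    return wav_duration_seconds(source) >= min_seconds


def make_silent_wav(seconds: float, rate: int = 16000) -> io.BytesIO:
    """Hypothetical helper: build an in-memory mono 16-bit silent WAV for demos."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(seconds * rate))
    buf.seek(0)  # rewind so the buffer can be read back as a WAV file
    return buf


if __name__ == "__main__":
    print(wav_duration_seconds(make_silent_wav(5.0)))
    print(is_usable_reference(make_silent_wav(3.0)))
```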

© Copyright notice

All rights to this article belong to AI Sharing Circle. Please do not reproduce it without permission.