Lambda Chat: fine-tuned Llama 3.1 405B models (codename Hermes 3)

General Introduction

Lambda Chat is an AI chat platform that lets developers quickly experiment with and apply leading AI models. The platform supports several models, including Hermes 3 and Llama 3.1, and lets users integrate them via an API that accepts context inputs of up to 128k tokens. Lambda Chat also offers private instances for greater flexibility and performance, and is an open source alternative to ChatGPT.

Access Lambda to run the fine-tuned Llama 3.1 model API without creating any instances.

Feature List

- Support for multiple AI models (e.g. Hermes 3, Llama 3.1)

- Provides contextual input of up to 128k tokens

- Public and private inference endpoints

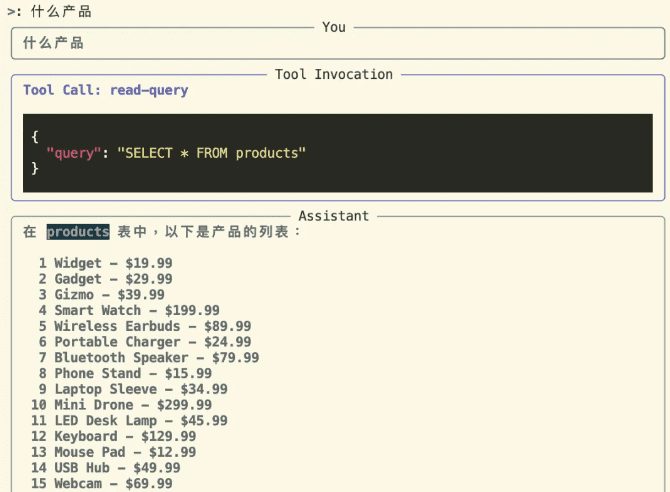

- Creating a Data Conversion Agent

- Generating a role form (character sheet)

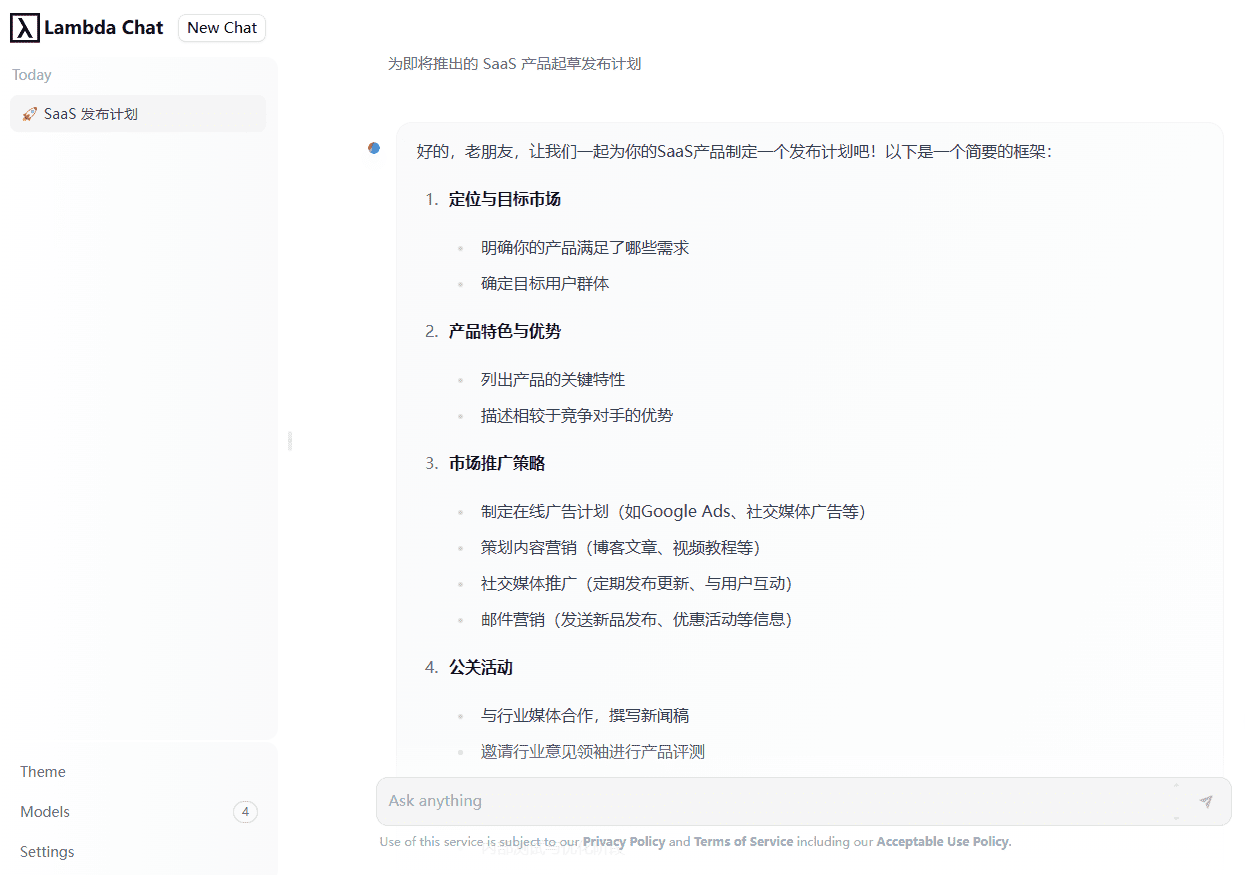

- Developing a product release plan

- Open Source Alternative to ChatGPT
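
To make the 128k-token context limit listed above more concrete, here is a minimal sketch of a pre-flight size check. It is only an illustration: the figure of 4 characters per token is an assumed rough heuristic rather than the model's real tokenizer, "128k" is taken to mean 128,000, and the helper names `estimate_tokens` and `fits_in_context` are hypothetical.

```python
# Rough check that a prompt fits in a 128k-token context window.
# ASSUMPTION: ~4 characters per token is a crude heuristic,
# not the tokenizer actually used by Hermes 3 or Llama 3.1.

CONTEXT_LIMIT_TOKENS = 128_000  # "128k" read as 128,000; the exact limit may differ
CHARS_PER_TOKEN = 4             # assumed average, for illustration only


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate: ceiling of len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_reply: int = 1_000) -> bool:
    """Return True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(prompt) + reserved_for_reply <= CONTEXT_LIMIT_TOKENS
```

For an accurate count, the estimate would need to be replaced with the tokenizer that matches the selected model.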

Usage Help

Installation and use

Lambda Chat requires no installation; users access it directly through the website. The main features are used as follows:

1. Selection of AI models

On the Lambda Chat homepage, users can select different AI models such as Hermes 3 or Llama 3.1. Click on the model name to view detailed information and instructions.

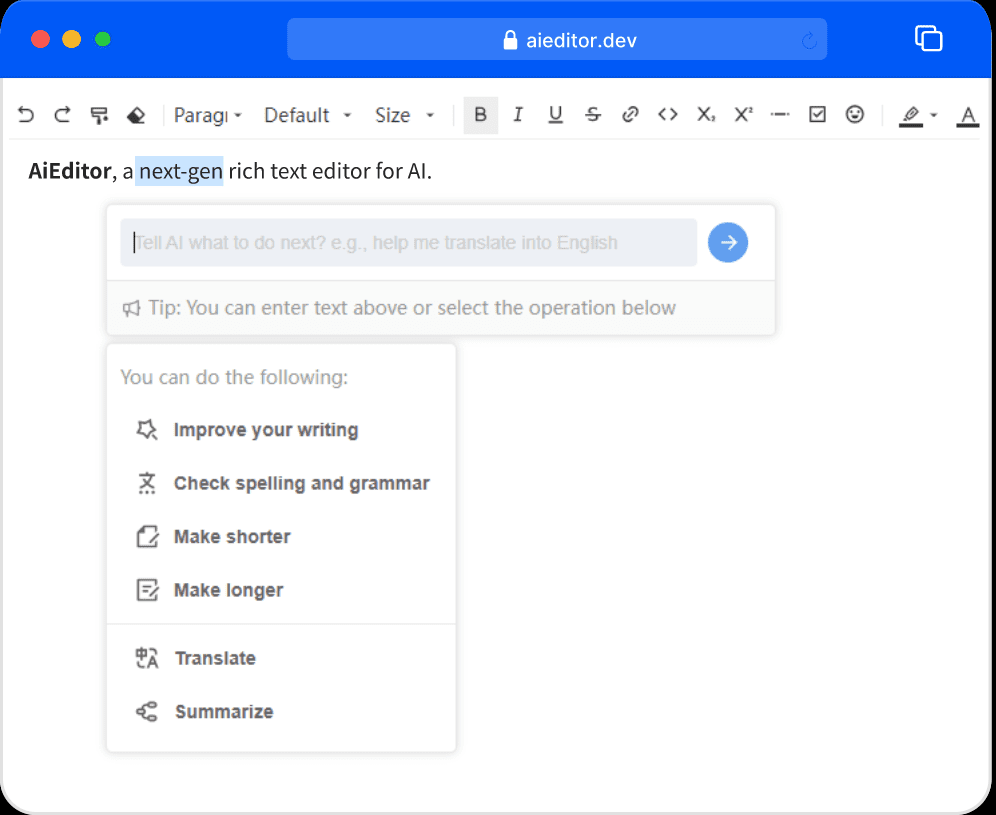

2. Creation of data conversion agents

- Once in Lambda Chat, select the "Create Agent" option.

- Enter data conversion requirements, such as data format, conversion rules, etc.

- Click "Generate Agent"; the system will automatically create an agent that meets the requirements.

3. Generating role forms (character sheets)

- Select the "Generate Role Form" function.

- Enter basic information about your character, such as race, occupation, etc.

- The system will automatically generate a detailed role form that the user can modify and save as needed.

4. Developing a product release plan

- Select the "Create Release Plan" function.

- Enter basic information about the product and the goal of the release.

- The system will generate a detailed release plan, including timelines, task assignments, etc.

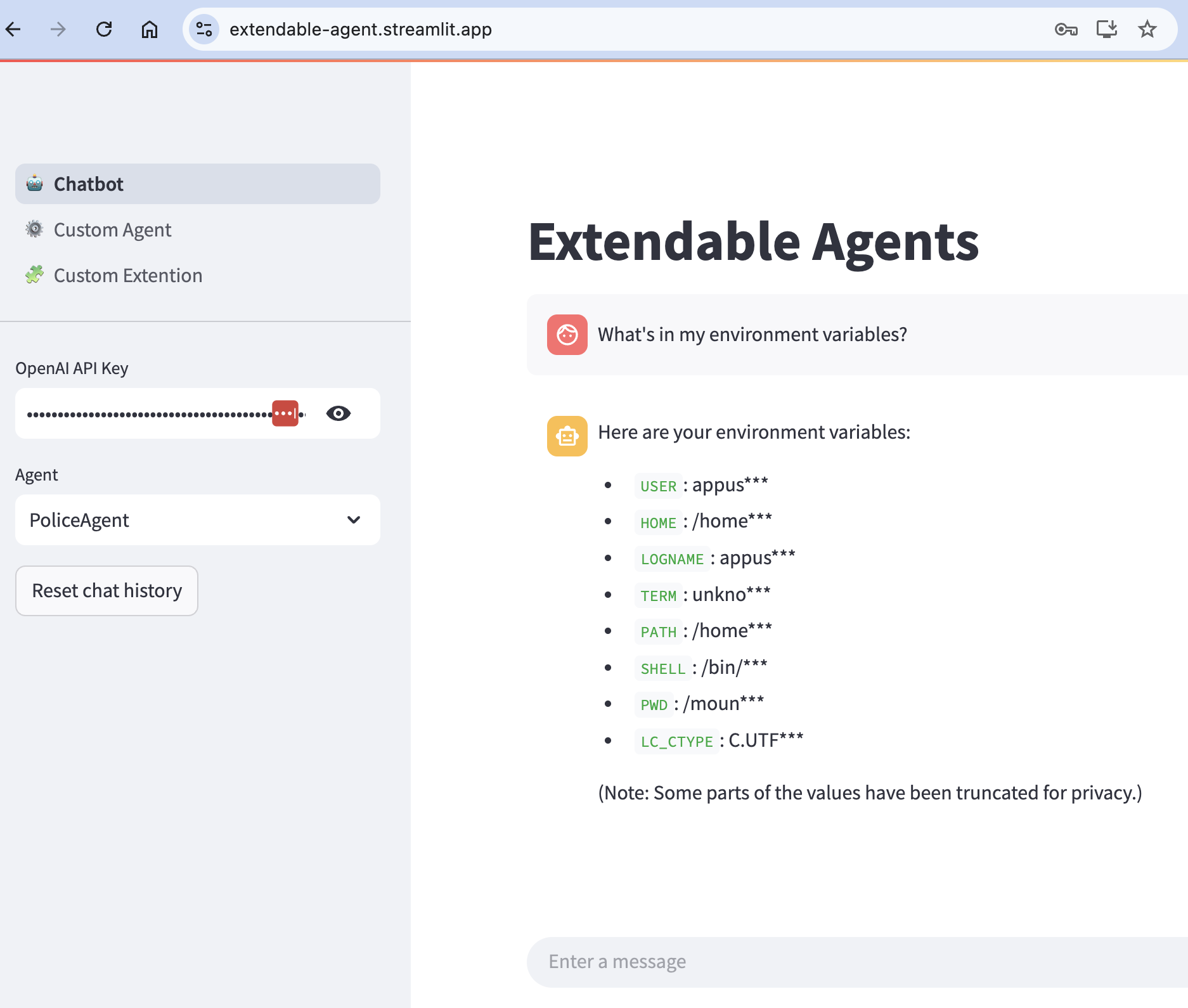

5. Integration using APIs

- Users can integrate AI models into their applications through the API provided by Lambda Chat.

- Visit the API documentation for detailed integration steps and sample code.
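
As a rough illustration of the integration step, the sketch below builds (but does not send) an HTTP request for a chat-style model call using only the Python standard library. Everything specific in it is an assumption made for the example: the URL, the model identifier, the bearer-token header, and the JSON message layout are placeholders, not details taken from Lambda's API documentation, which remains the authoritative source.

```python
import json
import urllib.request

# ASSUMPTIONS (hypothetical placeholders, not from Lambda's documentation):
API_URL = "https://api.example.com/v1/chat/completions"  # placeholder endpoint
MODEL_NAME = "hermes-3-llama-3.1-405b"                    # placeholder model id


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build a POST request carrying one user message; nothing is sent here."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],  # assumed schema
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )
```

Once the placeholders are replaced with the values given in the official documentation, the request could be sent with `urllib.request.urlopen(request)` and the JSON response decoded with `json.loads`.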

6. Private instances

- Users can choose to create private instances if they need more flexibility and performance.

- Contact the Lambda Chat team for a guide to setting up and using private instances.

7. Public inference endpoints

- Lambda Chat provides public inference endpoints that users can call directly to run inference with AI models.

- Visit the Inference Endpoints page for detailed instructions and examples.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
