Kunlun Wanwei Releases China's First Open-Source Large Video Model for AI Short Dramas

General-purpose or vertical scenarios: this is the primary choice facing developers of large AI models.

Most video models on the market today are general-purpose: they generate video for a wide range of scenarios from user prompts. At the same time, some video models have begun to explore vertical fields that are closer to concrete applications. ByteDance's recently released Goku model, for example, focuses on digital-human livestream e-commerce.

SkyReels V1: A Milestone for Chinese AI Short Drama Video Generation Models

Kunlun Wanwei today announced SkyReels V1, China's first large video generation model for AI short drama creation. Kunlun Wanwei officially launched SkyReels, an AI short drama platform, in December 2024, and SkyReels V1 will provide the large-model technology behind the platform.

Based on hands-on testing, SkyReels V1 can fairly be called the video model on the market that "understands acting best". Its expressiveness approaches film and TV production quality in character micro-expressions, action detail, scene construction, composition, and camera movement, and it can generate "movie-star-level" performance footage.

SkyReels A1: A Simultaneously Open-Sourced Algorithm for Controllable Expressions and Motion

Released alongside SkyReels V1, SkyReels A1 is the first SOTA-level controllable expression and motion algorithm built on a video base model and developed by Kunlun Wanwei.

What's more, SkyReels V1 and SkyReels A1 are both open-source models, and anyone can download and use them for free under the terms of the open-source license. The repositories and technical report are available at:

https://github.com/SkyworkAI/SkyReels-V1

https://github.com/SkyworkAI/SkyReels-A1

https://skyworkai.github.io/skyreels-a1.github.io/report.pdf

If DeepSeek R1 set a new open-source benchmark for large text models, especially reasoning models, then Kunlun Wanwei's SkyReels V1 and SkyReels A1 set a new high-water mark for open source in the booming AI short drama market. With the support of these two models, the AI short drama market is expected to have its own "DeepSeek moment".

1. The Large Video Model That "Understands Acting Best"

SkyReels V1 both benefits from and actively contributes back to the open-source community. It is trained on top of HunYuan-Video, the large video model that Tencent open-sourced in December 2024.

Although open-source models perform well on general-purpose tasks, they may fall short in specific domains or niche tasks. As a result, model vendors usually need to do substantial fine-tuning, inference optimization, and safety alignment when actually training their models.

At present, video models in the AI short drama market are generally weak at generating character expressions; the most prominent problem is that expressions look hollow and lifeless. With SkyReels V1, Kunlun Wanwei aims to solve these industry pain points.

Training is how a large model acquires knowledge and capabilities. In training SkyReels V1, Kunlun Wanwei's core goal was to teach the model "how to act". To this end, it made two core technical innovations:

Data cleaning and labeling: the cornerstone of model fine-tuning

The first is data cleaning and labeling, a key part of model fine-tuning. Just as a teacher needs high-quality teaching materials to prepare a class, Kunlun Wanwei used its self-developed data cleaning and manual labeling pipeline to build a ten-million-scale, high-quality dataset of movies, TV series, and documentaries. This dataset is the "teaching material" from which SkyReels V1 learns to act.

The Human-Centric Multimodal Video Understanding Model: Improving Character Understanding

The "textbook" alone is not enough; the model also needs more in-depth guidance. Kunlun Wanwei therefore developed its own Human-Centric (character-centered) multimodal video understanding model, which aims to significantly improve the model's understanding of character-related information in video.

Built on this multimodal video understanding model, the intelligent character analysis system supports "movie-star-level" character performances at several levels: expression recognition, perception of characters' spatial positions, understanding of behavioral intent, and understanding of the performance scene.

What is a "movie star" performance?

For example, SkyReels V1 can generate movie-quality character micro-expressions. It supports 33 subtle character expressions and more than 400 combinations of natural movements, closely reproducing real human emotional expression.

Another example is that SkyReels V1 has also mastered the aesthetics of movie-grade lighting. Trained with high-quality Hollywood-grade movie and TV data, every frame generated by SkyReels V1 has a movie-grade texture in terms of composition, actor's position and camera angle.

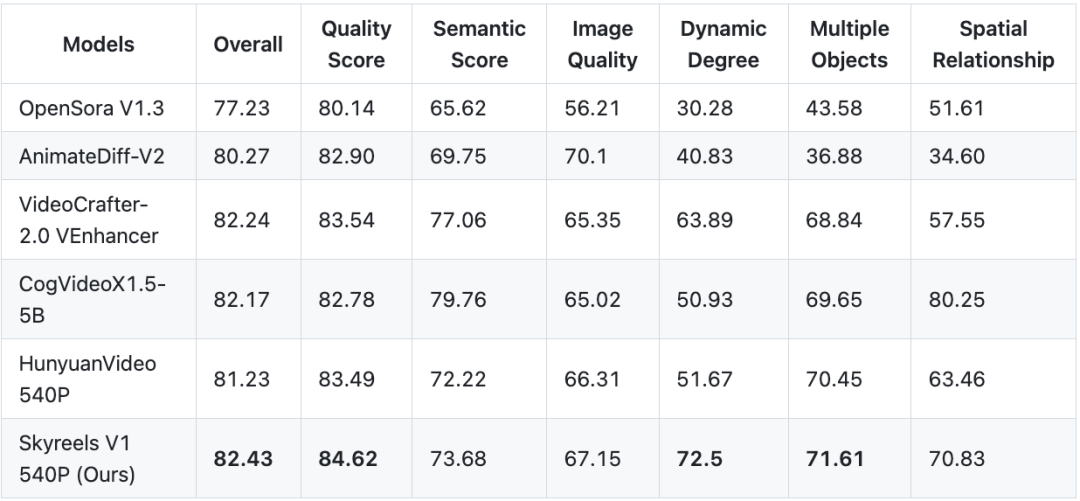

Comparison of SkyReels V1 and closed-source model results

Although SkyReels V1 is an open-source model, its results are comparable to those of closed-source models such as Hailuo AI (Conch AI) and Kling AI. The results from SkyReels V1, Hailuo AI, and Kling AI under the same prompts compare as follows:

Prompt 1: A picture of a brown haired female with gorgeous red tinted glasses and crimson lipstick. She waved her hand toward the front, smiling, then laughing.

SkyReels V1.

Hailuo AI.

Kling AI.

Prompt 2: Dramatic frontal close-up reveals the face of a deep-sea diver wearing an old-fashioned copper diving helmet. The thick circular glass of the helmet provides a clear view of his calm expression. Tiny bubbles float upward inside the helmet, with water droplets clinging to the inner walls. He carefully held an open book, the pages fluttering gently in the underwater currents. The book appeared to be dry and intact, in stark contrast to its watery surroundings. Soft beams of sunlight pierced through the water, illuminating his face and casting a golden glow over the pages. Fish swam around, their colors muted by depth but still vivid in front of the blue-green background. The diver reads the text intently, completely immersed in the reading despite being underwater. The surreal combination of literature and the depths of the ocean creates a dreamlike atmosphere, highlighting the quest for knowledge in the most unexpected places.

SkyReels V1.

Hailuo AI.

Kling AI.

In practice, SkyReels V1 is on par with closed-source models in both image clarity and the fineness of character performance. It even outperforms them in details such as hair dynamics.

Image-to-video capabilities second to none among open-source models

In addition, SkyReels V1 supports not only text-to-video but also image-to-video generation, making it one of the most capable image-to-video models among current open-source models.

To validate SkyReels V1's image-to-video capabilities, we fed the model the popular groundhog still from the Chinese New Year movie Ne Zha's Demon Child Descends and set the prompt: the groundhog looks up, pauses for a second, and then yells. The results generated by SkyReels V1 are stunning:

Suffice it to say, SkyReels V1 is the video model on the market today that understands acting best.

2. Self-developed inference framework SkyReels Infer: building an open-source model for everyone

Even more valuable, SkyReels V1, as an open-source model, not only makes significant breakthroughs in generation quality but also has very high inference efficiency. This is thanks to SkyReels Infer, an inference framework developed by Kunlun Wanwei.

Why a Self-Developed Inference Framework Matters

Why is a self-developed inference framework important?

In general, open-source models rarely come with an inference framework optimized for them, especially for large-scale deployment. Without such optimization, however, it is difficult to meet user expectations for inference speed and cost.

A case in point is Sora, which OpenAI unveiled in early 2024. At the time, some users reported that Sora took an hour to generate a one-minute video. This was one of the main reasons Sora only went live almost a year after it was announced. To this day, many large video models still face long wait times for video generation.

SkyReels Infer, the inference framework developed by Kunlun Wanwei, maintains high performance while also delivering efficiency and ease of use.

SkyReels Infer's superior performance

SkyReels Infer's inference speed is excellent. On a single RTX 4090 graphics card, it takes just 80 seconds to generate a 544p video. A user can daydream or glance at a phone for a moment, and the video is done.

SkyReels Infer supports distributed multi-card parallel computing. This is a powerful technology. Simply put, it allows multiple graphics cards to work together on video generation tasks.

With techniques such as Context Parallel, CFG Parallel, and VAE Parallel, multiple graphics cards work together like an efficient team to achieve significant speedups. This is especially useful for workloads that require large-scale computation, such as complex animations or special-effects videos.
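As a rough illustration of why CFG Parallel can help: classifier-free guidance evaluates the denoiser twice per step, once with the prompt and once without, and then blends the two outputs. The two evaluations are independent, so each can run on its own device. The sketch below is a toy under stated assumptions: `denoise` is a hypothetical stand-in for a model forward pass, threads stand in for GPUs, and none of these names come from SkyReels Infer.

```python
from concurrent.futures import ThreadPoolExecutor


def denoise(latent, prompt):
    """Hypothetical stand-in for one denoiser forward pass."""
    bias = 0.0 if prompt is None else len(prompt) * 0.01
    return [0.9 * x + bias for x in latent]


def cfg_combine(uncond, cond, scale):
    """Classifier-free guidance blend: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def cfg_step_sequential(latent, prompt, scale):
    # Baseline: both branches run one after the other on one device.
    uncond = denoise(latent, None)
    cond = denoise(latent, prompt)
    return cfg_combine(uncond, cond, scale)


def cfg_step_parallel(latent, prompt, scale):
    # In a real system each branch would sit on its own GPU;
    # here two worker threads play that role.
    with ThreadPoolExecutor(max_workers=2) as pool:
        uncond_future = pool.submit(denoise, latent, None)
        cond_future = pool.submit(denoise, latent, prompt)
        return cfg_combine(uncond_future.result(), cond_future.result(), scale)


latent = [0.5, -1.0, 2.0]
seq = cfg_step_sequential(latent, "a diver reading", 6.0)
par = cfg_step_parallel(latent, "a diver reading", 6.0)
```

Both functions return the same values; the parallel version only changes where the two branches run, which is why this kind of split can roughly halve the per-step latency when two devices are available.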

SkyReels Infer also excels at low-memory optimization. It uses fp8 quantization and parameter-level offloading so that it runs smoothly even on ordinary graphics cards with little video memory.
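A back-of-the-envelope calculation suggests why fp8 matters on consumer cards. The figures here are assumptions for illustration, not numbers from the article: a 13-billion-parameter model (the published size of HunYuan-Video; the exact count for SkyReels V1 is not stated here) and a 24 GiB card such as the RTX 4090. Only weight storage is counted; activations, the text encoder, and the VAE need additional memory.

```python
GIB = 1024 ** 3


def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to store the weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


NUM_PARAMS = 13_000_000_000  # assumption: 13B parameters
CARD_GIB = 24                # assumption: 24 GiB of video memory

bf16_gib = weight_memory_gib(NUM_PARAMS, 2)  # 16-bit weights, 2 bytes each
fp8_gib = weight_memory_gib(NUM_PARAMS, 1)   # 8-bit weights, 1 byte each

print(f"bf16 weights: {bf16_gib:.1f} GiB, fits: {bf16_gib < CARD_GIB}")
print(f"fp8 weights:  {fp8_gib:.1f} GiB, fits: {fp8_gib < CARD_GIB}")
```

Under these assumptions the 16-bit weights alone exceed the card's memory, while the 8-bit weights take about half of it and leave room for activations.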

Video memory is a key specification of a graphics card: it determines how much data the card can hold and process at once. In the past, many video generation models required so much video memory that average users' graphics cards could not run them. SkyReels Infer's low-memory optimization changes this. Users can experience the power of video generation models without buying an expensive high-end graphics card, which significantly lowers the threshold for AI video generation and lets more users enjoy AI technology.
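The idea behind parameter-level offloading can be sketched in a few lines: weights stay in host memory, and only the layer currently being executed is copied to the graphics card. The class below is a hypothetical toy that tracks memory in megabytes rather than moving real tensors; it is not how SkyReels Infer is implemented, and the layer sizes are made up.

```python
class OffloadedModel:
    """Toy model that keeps at most one layer on the device at a time."""

    def __init__(self, layer_sizes_mb):
        self.layer_sizes_mb = layer_sizes_mb  # hypothetical per-layer sizes
        self.on_device = set()                # indices of layers on the GPU
        self.peak_mb = 0                      # peak device memory observed

    def _device_usage_mb(self):
        return sum(self.layer_sizes_mb[i] for i in self.on_device)

    def forward(self, x):
        for i in range(len(self.layer_sizes_mb)):
            self.on_device.add(i)             # copy layer i: host -> device
            self.peak_mb = max(self.peak_mb, self._device_usage_mb())
            x = x + 1                         # toy stand-in for the layer's compute
            self.on_device.remove(i)          # free layer i on the device
        return x


model = OffloadedModel([400, 400, 600, 400])
output = model.forward(0)
total_mb = sum(model.layer_sizes_mb)
print(f"peak device memory: {model.peak_mb} MB of {total_mb} MB total")
```

Peak device memory is bounded by the largest single layer rather than the whole model. The price is extra host-to-device transfer time on every step, so offloading trades speed for the ability to run on smaller cards.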

SkyReels Infer is built on the open-source Diffusers library, which provides a wealth of features and tools, and it naturally inherits many of that library's advantages. For developers, this means they can get started quickly and easily integrate SkyReels Infer into their existing projects.

Performance Comparison

How does SkyReels Infer perform in practice? SkyReels V1, running on the SkyReels Infer framework, was tested against Tencent's official open-source HunYuan-Video. The results show that SkyReels V1 has better speed and latency than HunYuan-Video when generating 544p videos.

SkyReels V1 also supports a multi-card deployment strategy that uses up to 8 graphics cards simultaneously to accelerate computation. It is compatible with both high-end graphics cards such as the A800 and consumer graphics cards such as the RTX 4090, meeting the needs of professional and casual users alike.
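One common way to spread a single generation across several cards is to split the token sequence so that each card handles a contiguous slice, which is the usual meaning of context parallelism. The sketch below shows only the partitioning step for 8 workers; the function name and the token count are hypothetical, and a real implementation also has to exchange attention keys and values between cards, which is omitted here.

```python
def shard_bounds(seq_len, world_size):
    """Return contiguous [start, end) ranges whose sizes differ by at most 1."""
    base, extra = divmod(seq_len, world_size)
    bounds = []
    start = 0
    for rank in range(world_size):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks get one more
        bounds.append((start, start + size))
        start += size
    return bounds


tokens = list(range(30))   # hypothetical sequence of 30 video tokens
world_size = 8             # up to 8 cards, as in the text

shards = [tokens[s:e] for s, e in shard_bounds(len(tokens), world_size)]
gathered = [t for shard in shards for t in shard]  # stand-in for an all-gather
```

Concatenating the shards in rank order recovers the original sequence, so the split itself loses nothing; the engineering effort in a real framework goes into the cross-card communication.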

3. Open source expression movement control algorithm SkyReels A1: industry-leading "AI face-swap" technology

It is worth mentioning that, beyond model training and inference, Kunlun Wanwei has also open-sourced SkyReels A1, a controllable expression and motion algorithm built on a video base model, to enable more accurate and controllable character video generation.

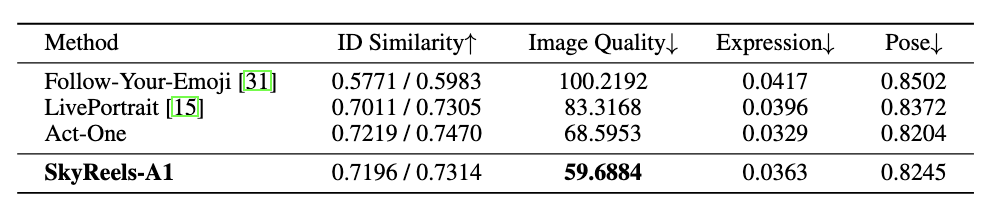

SkyReels A1 is an application-layer algorithm that sits on top of the underlying training and inference technology. It is benchmarked against Runway's Act-One and enables movie-quality expression capture.

SkyReels A1's "AI Face Swap" Feature

The core use of both Runway Act-One and SkyReels A1 is "AI face swap": the user only needs to prepare a photo of Character A and a video clip of Character B, and the expressions, movements, and lines of Character B are transplanted directly onto Character A.

In terms of ID Similarity, Image Quality, Expression, and Pose, SkyReels A1 achieves SOTA (state-of-the-art) results compared with similar open-source algorithms in the industry. Its results are close to those of the closed-source Act-One, with an advantage in generation quality.
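The article does not say how these metrics are computed. As an assumption for illustration, identity similarity is often measured as the cosine similarity between a face-recognition embedding of the source photo and embeddings of the generated frames, averaged over the video. A minimal sketch with hypothetical two-dimensional embeddings:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def mean_id_similarity(source_embedding, frame_embeddings):
    """Average similarity between the source face and each generated frame."""
    scores = [cosine_similarity(source_embedding, f) for f in frame_embeddings]
    return sum(scores) / len(scores)


# Hypothetical embeddings; a real pipeline would get these from a face model.
source = [1.0, 0.0]
frames = [[1.0, 0.0], [0.0, 1.0]]
score = mean_id_similarity(source, frames)
```

A score near 1 means the generated face stays close to the source identity across frames; a drop in the score on frames with large head motion would correspond to the kind of face distortion discussed in this section.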

Video-driven, cinematic expression capture

First, SkyReels A1 supports video-driven, cinematic expression capture. Compared with Runway Act-One, SkyReels A1 can drive character expressions over a wider range.

High-fidelity micro-expression reproduction

Next is high-fidelity micro-expression reproduction. SkyReels A1 can generate highly realistic video of people in motion at any framing, including portrait, half-body, and full-body compositions. This realism stems from accurate simulation of the character's expression changes and emotions, as well as faithful reproduction of details such as skin texture and the body movement that follows the face.

For example, controlled expression generation on a side-facing face:

And more realistic micro-expressions around the eyebrows and eyes:

Face Retention and Large-Scale Motion Processing

In addition to micro-expressions, SkyReels A1 also outperforms Runway Act-One in areas such as face retention and large-scale motion processing. Face retention and large-scale motion processing are precisely the areas where many video generation models are prone to errors.

For example, in the following case, the face of the rightmost character shows significant distortion that does not match the original character image.

SkyReels A1 also allows larger head movements and more natural body motion. In the following case, the body of the rightmost character barely moves.

These results make it clear that SkyReels A1 simplifies the complex process of traditional video production, giving content creators an efficient, flexible, and low-cost solution that can be applied to a wide variety of creative content.

4. The "DeepSeek Moment" of the AI Short Drama Market

Open-sourcing SkyReels V1 and SkyReels A1 is only the first step in Kunlun Wanwei's open-source video model program. Kunlun Wanwei will continue to open-source related technologies, including a professional-grade camera-control version, 720p model parameters, model parameters trained on larger datasets, and video generation algorithms that support full-body controllable generation.

In fact, open source has long been part of Kunlun Wanwei's DNA. Fang Han, Chairman and CEO of Kunlun Wanwei, is a pioneer of Linux in China, one of the "Four Musketeers of Chinese Linux", and among China's earliest cybersecurity experts, with 30 years of experience in the Internet industry. He has been actively involved in the open-source movement since 1994 and was an early promoter of the open-source concept on the Internet.

Fang Han has publicly stated that open-source large models are an important complement and alternative to commercial closed-source ones, and that he hopes open source will democratize the technology and lower the industry's barriers to entry.

As early as December 2022, Kunlun Wanwei released the full "Kunlun Tiangong" series of AIGC algorithms and models and announced that they were fully open source. Kunlun Wanwei is not only one of the Chinese companies with the most comprehensive presence in AIGC, but also the first company in China to commit itself to the AIGC open-source community.

Over the past three years, Kunlun Wanwei has continued to release and open-source the Tiangong series of large models. In April 2024, it released and simultaneously open-sourced Tiangong 3.0, a MoE model with 400 billion parameters whose performance exceeded that of Grok 1.0 at the time. In June 2024, it open-sourced Tiangong MoE, a 200-billion-parameter sparse model and the first open-source 100-billion-scale MoE model to support inference on a single RTX 4090 server. In November 2024, it open-sourced Skywork-o1-Open and other model series.

With its belief in open-source technology, Kunlun Wanwei is committed to advancing AGI (artificial general intelligence) across the industry.

Since entering the AI field in 2020, Kunlun Wanwei has built out the full industry chain of "computing infrastructure - large model algorithms - AI applications" and assembled a diversified AI business matrix.

AI short dramas are an important segment of Kunlun Wanwei's diversified AI application matrix.

AI short dramas are an emerging market that is expected to see high growth in 2025. The "2024 Short Drama Overseas Marketing White Paper" released by TikTok for Business predicts that average monthly short drama users in overseas markets will reach 200-300 million and that the market will reach 10 billion U.S. dollars, so the potential is huge.

In December 2024, Kunlun Wanwei launched the SkyReels AI short drama platform in the U.S., an important step for the company in the global AI entertainment market that brings a new, intelligent short drama experience to North American audiences. The SkyReels platform not only provides powerful creation tools for professional content creators but also significantly lowers the threshold for AI short drama creation, making it easy for non-professional users to get started.

The Far-Reaching Implications of AI for the Global Film and TV Industry

How will AI technology revolutionize the global film and television industry?

In his speech at the 2024 World Artificial Intelligence Conference, Fang Han, Chairman of Kunlun Wanwei, pointed out that AI holds huge growth opportunities overseas, especially in countries with less widely spoken languages.

Citing the film and drama industry as an example, he noted that it costs about $20,000 to produce a movie in Nigeria. Such a production is clearly uncompetitive when compared to Wandering Earth, which cost 300 million RMB to produce in China, and Avatar, which cost hundreds of millions of dollars to produce in the United States. However, the advent of AI technology is expected to bridge this gap.

"My personal prediction is that in 3-5 years, the cost of producing a Wandering Earth-level blockbuster could drop to tens of thousands of dollars with the help of AI technology. This will bring huge development opportunities to many regions overseas. People everywhere are eager to see localized cultural products, be it novels, music, videos or comics, and need content that is closer to local culture. Therefore, AI going overseas holds a huge development dividend." Fang Han said.

On a small scale, the dividend of AI is an exponential reduction in the production cost of cultural products, which makes "one person, one drama" creation possible. On a larger scale, by lowering the threshold of creation, AIGC empowers disadvantaged cultural groups to produce their own content and promotes global cultural equality, which is the best expression of technology for good.

The industry generally regards the emergence of AI as an "iPhone moment", but Fang Han believes AI is more like the revolution of the cell phone camera: the camera changed how people shoot video, which in turn gave birth to huge short video platforms such as Douyin and Kuaishou. Similarly, AI will give rise to a large number of new AI UGC platforms, opening a golden age of personalized content production and consumption.

SkyReels V1, the first open-source video generation model for AI short drama creation, and SkyReels A1, the first SOTA-level controllable expression and motion algorithm based on a video base model, are precisely the tools to accelerate the arrival of the AIGC era.

The AI short drama market is expected to usher in its own "DeepSeek moment".

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
