KTransformers: A High-Performance Inference Engine for Large Models, Built for Speed and Flexibility

General Introduction

KTransformers is a high-performance Python framework designed to remove the bottlenecks of large model inference. More than a tool for running models, it combines a performance optimization engine with a flexible set of interfaces. It improves inference efficiency from the bottom up: kernel optimizations, multi-GPU parallelism, sparse attention, and other core techniques speed up inference significantly and lower the hardware requirements.

Beyond running models, KTransformers covers both performance and integration. It provides a native Transformers-compatible interface, so existing projects can migrate smoothly, and it can expose RESTful API services that follow the OpenAI and Ollama standards for rapid integration into applications. It also ships an out-of-the-box ChatGPT-style web interface for trying out and testing models without tedious configuration.

KTransformers is designed for users who need more performance. Whether you are a developer chasing the highest inference speed, an engineer who has to deploy large model applications efficiently, or a user who wants to run high-performance large models locally, KTransformers gives you the tools to get the most out of large models.

Core strengths:

- Extreme performance: kernel-level optimizations and parallel strategies bring an order-of-magnitude improvement in inference speed.

- Flexible interfaces: a Transformers-compatible interface, RESTful APIs, and a web interface meet the needs of different application scenarios.

- Wide compatibility: support for multiple GPUs, multiple CPU architectures, and multiple mainstream large models accommodates diverse hardware and model choices.

- Ease of use with customizability: out-of-the-box convenience alongside rich configuration options for advanced users who need deep optimization.

Feature List

- High-performance Transformers-compatible interface: provides an interface fully compatible with the Transformers library, so existing projects can migrate at no cost and benefit from the performance improvements immediately.

- Flexible, easy-to-use RESTful API services: follow the OpenAI and Ollama standards, so you can quickly build scalable API services and integrate them into a variety of applications and platforms.

- Out-of-the-box ChatGPT-style web interface: a user-friendly interactive interface for trying out and testing model performance without writing code, convenient for demonstration and validation.

- Multi-GPU parallel computing engine: uses the combined power of multiple GPUs to raise inference speed and sharply reduce response time.

- Deep kernel-level performance optimizations: advanced kernel optimization techniques tap the hardware's potential from the bottom up for a substantial gain in model inference performance.

- Intelligent sparse attention framework: supports the block sparse attention mechanism, which significantly reduces the memory footprint and enables efficient decoding on the CPU, easing hardware bottlenecks.

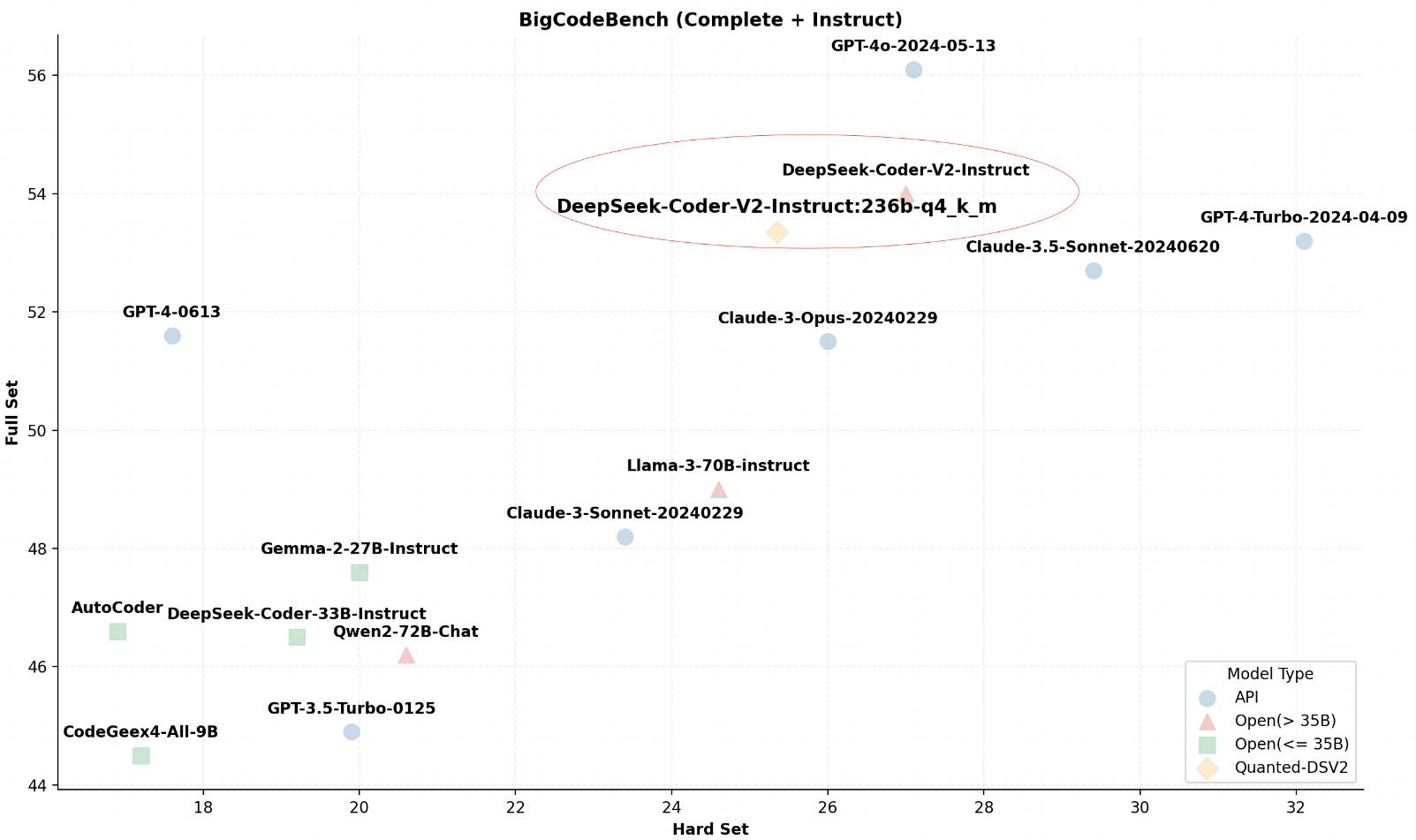

- Broad large-model ecosystem support: compatible with InternLM, DeepSeek-Coder, and other mainstream large models (the list keeps expanding), so you can choose the model that best fits your needs.

- Lightweight, high-performance local inference: no expensive specialized hardware is needed. Strong inference performance in an ordinary desktop environment lowers the barrier to entry.
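
To make the idea behind block sparse attention in the feature list more concrete, here is a toy sketch in plain Python. It is not KTransformers' kernel: the function name, the parameters, and the block-selection rule (keep the blocks whose keys score highest on average against the query) are all assumptions chosen for illustration. The point is only that the softmax and the weighted sum touch the keys and values of a few selected blocks rather than the whole sequence.

```python
import math


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def block_sparse_attention(query, keys, values, block_size, keep_blocks):
    """Attend one query over only the `keep_blocks` best-scoring key blocks.

    Illustrative only: the selection rule (mean scaled dot product per block)
    is an assumption, not the rule used by any particular library.
    """
    scale = 1.0 / math.sqrt(len(query))

    # Split key positions into contiguous blocks of `block_size`.
    blocks = [
        list(range(start, min(start + block_size, len(keys))))
        for start in range(0, len(keys), block_size)
    ]

    # Score each block by the mean scaled dot product of its keys with the query.
    def block_score(positions):
        return sum(dot(query, keys[i]) for i in positions) * scale / len(positions)

    ranked = sorted(range(len(blocks)), key=lambda b: block_score(blocks[b]), reverse=True)
    kept = sorted(ranked[:keep_blocks])
    positions = [i for b in kept for i in blocks[b]]

    # Numerically stable softmax over the kept positions only.
    logits = [dot(query, keys[i]) * scale for i in positions]
    peak = max(logits)
    weights = [math.exp(value - peak) for value in logits]
    total = sum(weights)

    # Weighted sum of the kept value vectors.
    dim = len(values[0])
    output = [
        sum(w * values[i][d] for w, i in zip(weights, positions)) / total
        for d in range(dim)
    ]
    return output, positions


# Four keys in two blocks; the query matches the first block.
example_keys = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [-1.0, 0.0]]
example_values = [[1.0], [1.0], [0.0], [0.0]]
sparse_out, sparse_positions = block_sparse_attention(
    [1.0, 0.0], example_keys, example_values, block_size=2, keep_blocks=1
)
print(sparse_positions, sparse_out)
```

Setting `keep_blocks` to the total number of blocks reduces this to ordinary dense attention, which makes the trade-off easy to compare on small inputs.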

Usage Guide

Installation

- Clone the repository:

git clone https://github.com/kvcache-ai/ktransformers.git

cd ktransformers

- Install the dependencies:

pip install -r requirements-local_chat.txt

- Install KTransformers:

python setup.py install
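
After the install step, a quick way to confirm that the package landed in the current environment is to ask Python whether it can locate it. The sketch below uses only the standard library. The helper name `is_installed` is made up for illustration, and it assumes the package is importable under the name `ktransformers`.

```python
import importlib.util


def is_installed(package="ktransformers"):
    """Return True if `package` can be located in the current environment."""
    return importlib.util.find_spec(package) is not None


print("ktransformers importable:", is_installed())
```

If this reports `False`, the install most likely went into a different Python environment than the one running the check.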

Getting Started

- Load a model:

from ktransformers import KTransformers

model = KTransformers(model_name="your_model_name")

- Run inference:

input_text = "Hello, KTransformers!"

output = model.infer(input_text)

print(output)

- Use the RESTful API. Start the API service:

python -m ktransformers.api

Send a request:

curl -X POST "http://localhost:8000/infer" -H "Content-Type: application/json" -d '{"text": "Hello, KTransformers!"}'
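
The same request can be sent from Python. This is a minimal sketch using only the standard library. It assumes the `/infer` endpoint, port 8000, and the `{"text": ...}` payload from the curl command above. The helper names `build_infer_request` and `infer` are illustrative and not part of KTransformers. The response format is not specified here, so the sketch returns the raw body as text.

```python
import json
import urllib.request


def build_infer_request(text, base_url="http://localhost:8000"):
    """Build (but do not send) a JSON POST request for the /infer endpoint."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/infer",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def infer(text, base_url="http://localhost:8000", timeout=60):
    """Send the request and return the raw response body as a string."""
    request = build_infer_request(text, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Usage, with the API service already running:
#     print(infer("Hello, KTransformers!"))
```

Keeping request construction separate from sending makes it easy to inspect the URL, headers, and body before any network call happens.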

Advanced Features

- Multi-GPU support: edit the configuration file config.yaml in the project root directory and specify the multi-GPU settings to improve inference speed.

- Sparse attention: add a sparse attention configuration to config.yaml in the project root directory to reduce memory usage, which helps most in resource-constrained environments.

- Local inference: specify the memory and video memory parameters in config.yaml in the project root directory for efficient inference on a local desktop. A setup with 24GB VRAM and 150GB DRAM is supported.

Configuration Details

- Configure multiple GPUs: edit the configuration file config.yaml:

gpu:

  - id: 0 # GPU device index 0

  - id: 1 # GPU device index 1

- Enable sparse attention: add the following to the configuration file:

attention:

  type: sparse

- Local inference settings: specify the memory and video memory parameters in the configuration file:

memory:

  vram: 24GB # VRAM limit (GB); adjust to your hardware

  dram: 150GB # DRAM limit (GB); adjust to your hardware
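
Taken together, the three fragments above could live in one `config.yaml`. The sketch below renders such a file as text from Python without any YAML library. It assumes that `gpu`, `attention`, and `memory` are top-level keys of the same file, which is not stated explicitly here. `render_config` is a hypothetical helper, not a KTransformers function.

```python
def render_config(gpu_ids, attention_type, vram, dram):
    """Render the three configuration fragments as one YAML document (as text)."""
    lines = ["gpu:"]
    lines += [f"  - id: {gpu_id}" for gpu_id in gpu_ids]
    lines += ["attention:", f"  type: {attention_type}"]
    lines += ["memory:", f"  vram: {vram}", f"  dram: {dram}"]
    return "\n".join(lines) + "\n"


config_text = render_config([0, 1], "sparse", "24GB", "150GB")
print(config_text)
```

Writing `config_text` to `config.yaml` in the project root would then give a single file containing all three settings.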

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
