KrillinAI: Multilingual Globalization Tool for Video with One-Click Translation and Dubbing

General Introduction

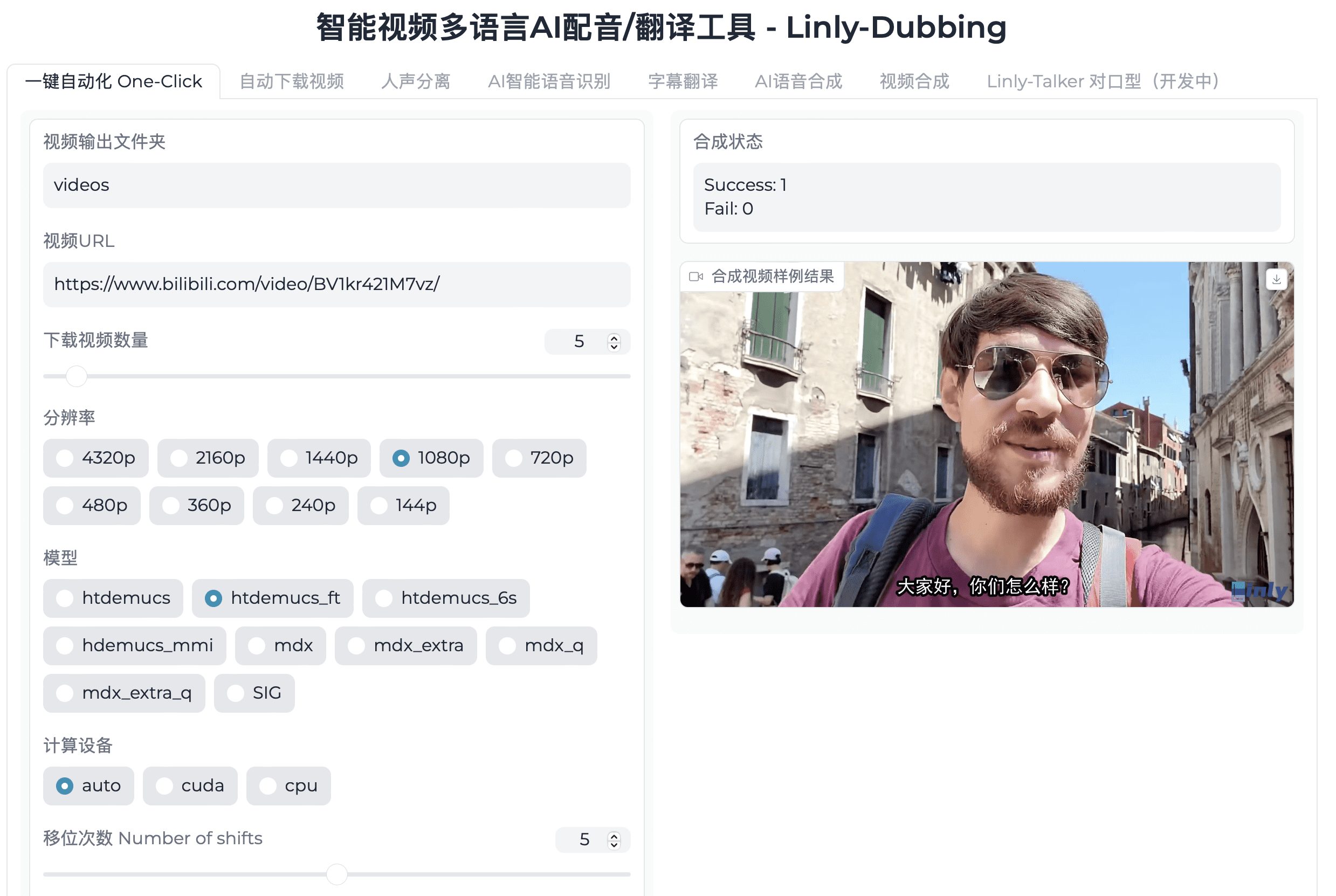

KrillinAI is an open-source video processing tool that uses artificial intelligence to translate videos and dub them automatically. It covers the whole workflow, from downloading a video to generating finished output for different platforms, in a few clicks. The developers provide the code for free on GitHub, and users can download it and run it locally. KrillinAI uses large language model (LLM) technology for high-quality translation and subtitle generation. It currently supports translation for 56 languages, with input in Chinese, English, Japanese, and more. It also adjusts video formats to suit platforms such as YouTube, TikTok, and Douyin, which makes it well suited to content creators who need to produce multilingual videos quickly.

Function List

- Video download and upload: Supports downloading videos from YouTube, Douyin, or Bilibili, or uploading local files.

- Accurate subtitle generation: Uses Whisper to recognize speech and generate highly accurate subtitles.

- Intelligent subtitle splitting: Uses a large language model to split subtitles at natural points, preserving semantic integrity.

- Professional translation: Translates whole paragraphs to keep context consistent, with quality approaching human level.

- Dubbing and voice cloning: Offers CosyVoice male and female voices, or clones a voice from an uploaded audio sample.

- Video format adjustment: Automatically generates horizontal or vertical video, adapting to different platforms.

- Terminology replacement: Supports one-click replacement of vocabulary in specialized fields.

Usage Guide

Installation process

KrillinAI must be installed locally before it can run. The detailed steps are:

- Download the files

  - Open https://github.com/krillinai/KrillinAI.

  - Click "Releases" in the upper right corner of the page and download the appropriate version for your system (e.g. Windows, macOS).

  - Unzip the file into an empty folder.

- Configure the environment

  - Create a `config` folder inside the program folder.

  - In the `config` folder, create a new file named `config.toml`.

  - Copy the contents of `config-example.toml` from GitHub into `config.toml`, then fill in the configuration.

  - Simplest configuration: to use only the OpenAI service, fill in the following:

        [openai]
        apikey = "your OpenAI API key"
        transcription_provider = "openai"
        llm_provider = "openai"

  - If you need a proxy or a custom model, you can add `app.proxy` or `openai.base_url`.

- Run the program

  - Windows: Double-click the executable to start it.

  - macOS: The binary is not signed, so you must trust it manually:

    - Open a terminal and go to the directory that contains the file.

    - Enter the commands:

          sudo xattr -rd com.apple.quarantine ./KrillinAI_1.0.0_macOS_arm64
          sudo chmod +x ./KrillinAI_1.0.0_macOS_arm64
          ./KrillinAI_1.0.0_macOS_arm64

  - After starting, the service runs at http://127.0.0.1:8888 (the port can be changed).

- Docker deployment (optional)

  - See `docs/docker.md` on GitHub for detailed steps.

  - Install Docker, pull the image, and run it.
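
Once the program has been started by either method above, it can be handy to confirm that the local service is answering before opening the browser. The following is a minimal sketch, not part of KrillinAI: the helper name `is_service_up` is hypothetical, and the default URL is the address mentioned above (adjust it if you changed the port).

```python
import urllib.error
import urllib.request


def is_service_up(url: str = "http://127.0.0.1:8888", timeout: float = 2.0) -> bool:
    """Return True if something answers HTTP requests at `url`."""
    # Bypass any system proxy settings: this is a check against localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status, so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or similar: nothing is listening.
        return False


if __name__ == "__main__":
    print("service reachable:", is_service_up())
```

The check only tells you that an HTTP server is listening at that address; it does not verify that the server is KrillinAI or that the configuration is valid.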

Using the main functions

Video translation and subtitle generation

- Steps:

  - After starting the service, open http://127.0.0.1:8888 in a browser.

  - Enter a video link (e.g. https://www.youtube.com/watch?v=xxx) or upload a file.

  - Select the input language (e.g. Chinese) and the target language (e.g. English).

  - Click "Start". The program automatically recognizes the speech, generates subtitles, and translates them.

- Result: Subtitle files are saved in the `tasks` folder.

- Note: If the download fails, configure `cookies.txt` as described in `docs/get_cookies.md`.

Dubbing function

- Steps:

  - Once the subtitles have been generated, click the "Dubbing" option.

  - Choose a male or female CosyVoice voice, or upload an audio sample to clone a voice.

  - Click "Generate" and the program automatically synthesizes the voiceover.

- Result: The voiceover is merged with the video to produce a new file.

- Features: Cross-language dubbing is supported, and audio tracks and subtitles are precisely aligned.

Video format adjustment

- Steps:

  - When generating a video, select "Landscape" or "Portrait".

  - Click "Finish" and the program adjusts the resolution and subtitle layout.

- Result: Outputs videos adapted to YouTube (landscape) or TikTok (portrait).

- Note: Subtitles that are too long are wrapped onto a new line automatically to keep the screen tidy.
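
To illustrate what automatic line wrapping of long subtitles means in practice, here is a minimal sketch using Python's standard `textwrap` module. It is not KrillinAI's implementation: the function name `wrap_subtitle` and the `max_chars` limit are assumptions chosen for the example, and the tool's actual wrapping rule may differ.

```python
import textwrap


def wrap_subtitle(text: str, max_chars: int = 42) -> list[str]:
    """Break one subtitle into lines of at most `max_chars` characters."""
    # textwrap breaks at whitespace, so words stay intact where possible.
    return textwrap.wrap(text, width=max_chars)


lines = wrap_subtitle(
    "KrillinAI translates and dubs videos for different platforms",
    max_chars=30,
)
for line in lines:
    print(line)
```

Because `textwrap` splits on whitespace, this sketch suits space-delimited languages; text without spaces (such as Chinese or Japanese) would need a different breaking rule.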

Using the featured functions

Intelligent Subtitle Splitting

- The program uses a large language model to analyze the speech and segment subtitles by meaning. For example, a 10-second conversation is split into segments that follow complete sentences, rather than being cut at fixed time intervals.

- No manual setup is required; processing is automatic.
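
The difference between sentence-based and fixed-time segmentation can be sketched as follows. KrillinAI relies on a large language model for this step; the example below is only a simplified stand-in that splits at sentence-ending punctuation, and the `Word` type, the timestamps, and the function name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


# Punctuation treated as the end of a sentence (Latin and CJK forms).
SENTENCE_END = (".", "?", "!", "。", "？", "！")


def split_by_sentence(words: list[Word]) -> list[tuple[float, float, str]]:
    """Group timed words into (start, end, text) segments, one per sentence."""
    segments: list[tuple[float, float, str]] = []
    current: list[Word] = []
    for word in words:
        current.append(word)
        if word.text.endswith(SENTENCE_END):
            text = " ".join(w.text for w in current)
            segments.append((current[0].start, current[-1].end, text))
            current = []
    if current:  # trailing words without closing punctuation
        text = " ".join(w.text for w in current)
        segments.append((current[0].start, current[-1].end, text))
    return segments


words = [
    Word("Hello", 0.0, 0.4), Word("everyone.", 0.4, 1.1),
    Word("Today", 1.5, 1.9), Word("we", 1.9, 2.0),
    Word("test", 2.0, 2.4), Word("subtitles.", 2.4, 3.2),
]
for segment in split_by_sentence(words):
    print(segment)
```

Each segment's timing comes from its first and last word, so segment lengths vary with the sentences instead of following a fixed interval.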

Terminology replacement

- Steps:

  - In `config.toml`, add replacement rules such as:

        [custom_vocab]
        "AI" = "人工智能"
        "LLM" = "大语言模型"

  - Restart the program. The replacements are applied automatically during translation.

- Use case: Suited to fields such as technology and education, where terminology must be accurate.
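
The effect of such replacement rules can be sketched in a few lines of Python. This is an illustration only: how KrillinAI actually matches and applies the rules is not described here, so the whole-word, case-sensitive matching and the function name `apply_custom_vocab` are assumptions.

```python
import re


def apply_custom_vocab(text: str, vocab: dict[str, str]) -> str:
    """Replace whole-word occurrences of each vocab key with its value."""
    if not vocab:
        return text
    # Longest terms first, so a longer term wins over a shorter one it contains.
    terms = sorted(vocab, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b")
    return pattern.sub(lambda match: vocab[match.group(0)], text)


# Same pairs as the [custom_vocab] example above.
custom_vocab = {"AI": "人工智能", "LLM": "大语言模型"}
print(apply_custom_vocab("AI tools use an LLM to translate.", custom_vocab))
```

Matching on word boundaries keeps a rule for "AI" from touching words that merely contain those letters, such as "AIR".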

Voice cloning

- Steps:

  - Upload a 10-30 second audio sample on the dubbing screen.

  - Select "Clone Sound" and the program generates a similar voice.

- Requirement: If you use the AliCloud service, configure `aliyun.oss` as described in `docs/aliyun.md`.

Additional configuration options

- Local model: Set `transcription_provider = "fasterwhisper"` and fill in the required field `local_model.faster_whisper`. The model is downloaded automatically (macOS is not supported yet).

- AliCloud services: If you use AliCloud's large model or dubbing, configure `aliyun.bailian` or `aliyun.speech`.

Application scenarios

- Multilingual content creation

  - A YouTube creator wants to translate Chinese videos into English and French. KrillinAI quickly generates subtitles and voiceovers in landscape format.

- Short video promotion

  - Merchants promote their products on Douyin, and KrillinAI converts the video to portrait format with local-language audio to increase its appeal.

- Sharing of educational resources

  - Teachers translate course videos into multiple languages, and KrillinAI provides accurate subtitles and voiceovers for students around the world.

QA

- Why does startup report a missing API key?

  - You need to fill in OpenAI's `apikey` in `config.toml`. For more information, see the OpenAI website.

- What input languages are supported?

  - Chinese, English, Japanese, German, and Turkish are currently supported, with more languages under development.

- How long does translation and dubbing take?

  - Processing a 10-minute video takes about 5-10 minutes, depending on the network and configuration.

- How do I fix a failed download?

  - Configure `cookies.txt`: follow `docs/get_cookies.md` to export your browser cookies.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
