DeepSeek Hands-on: Knowledge Graph Construction in Three Steps - Single Extraction, Multiple Fusion, Topic Generation

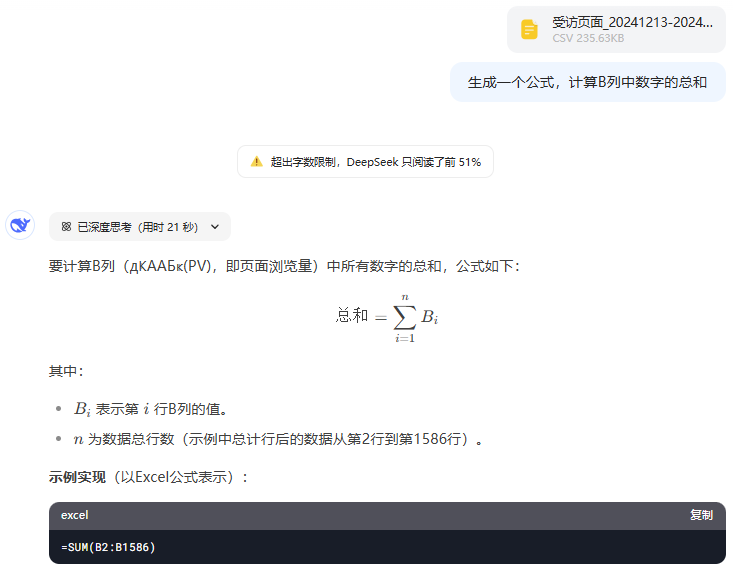

Question: Knowledge graphs are important and the DeepSeek language model is popular, so can it be used to build knowledge graphs quickly? I wanted to try DeepSeek in practice to see how well it extracts information, integrates knowledge, and generates a graph from a topic alone, with no source text. Methods: I ran three experiments to measure...

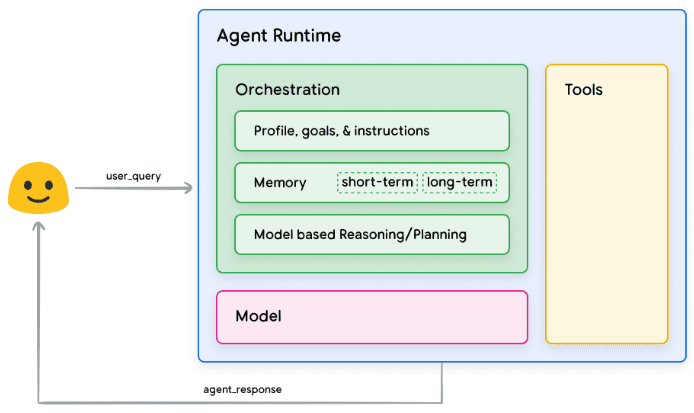

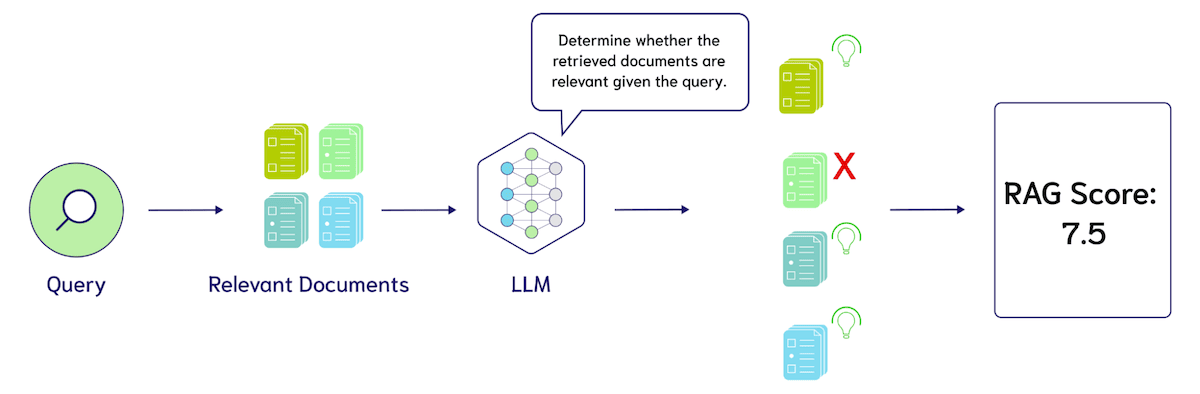
