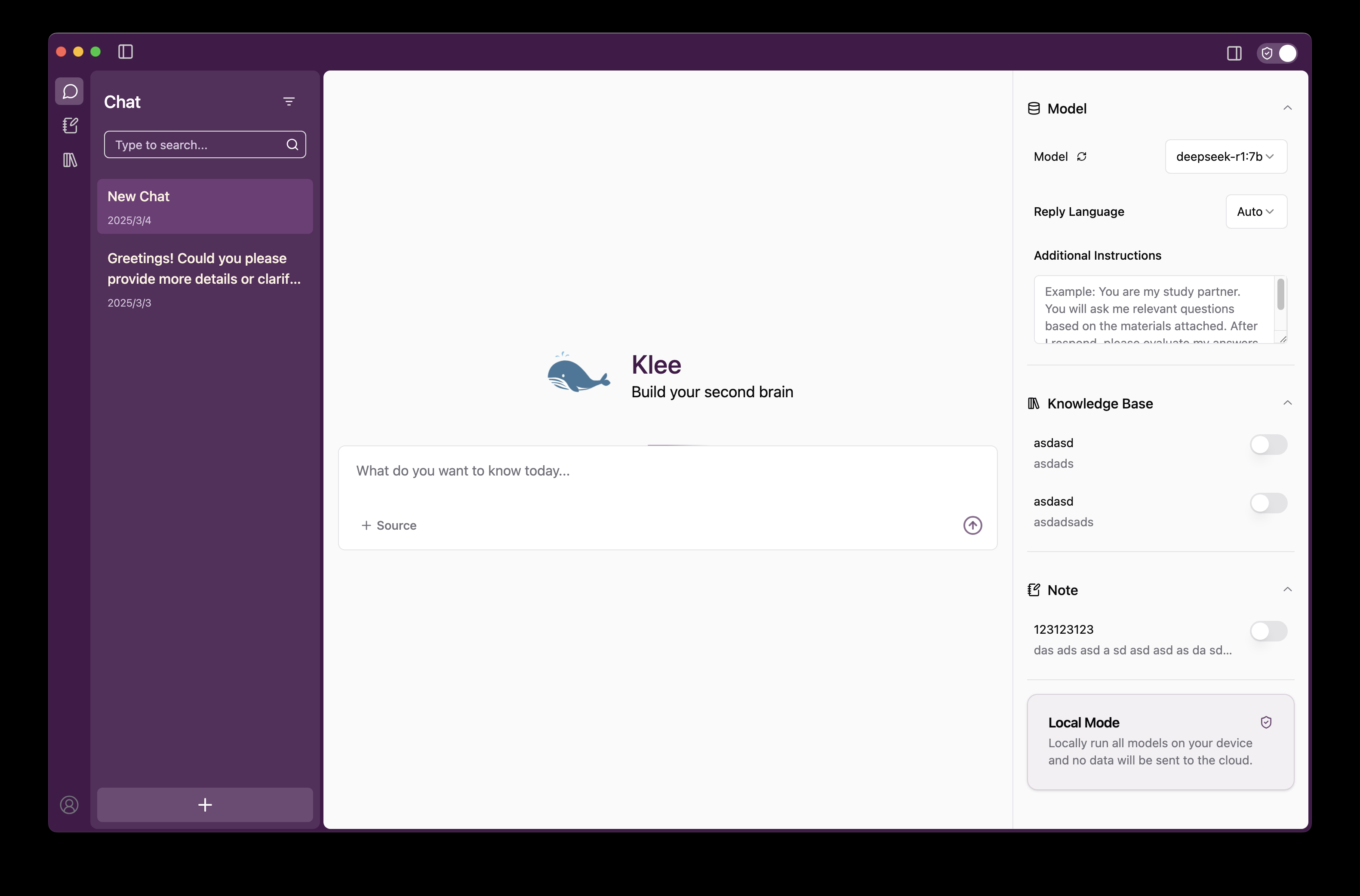

Klee: Running Large AI Models Locally on the Desktop and Managing a Private Knowledge Base

General Introduction

Klee is an open source desktop application for running open source large language models (LLMs) locally, with secure private knowledge base management and Markdown note-taking. Built on Ollama and LlamaIndex, Klee lets users download and run AI models in a few clicks. All data processing happens locally, with no need to connect to the Internet or upload anything to the cloud, which protects privacy and security. Klee provides an intuitive user interface for Windows, macOS, and Linux, making it easy for both developers and casual users to generate text, analyze documents, and organize knowledge. Klee is open source on GitHub and has been well received by the community; users are free to download it, customize it, or take part in development.

Function List

- Download and run large language models in one click: Download and run open source LLMs from Ollama directly through the interface, without manually configuring the environment.

- Local knowledge base management: Upload files and folders, build a private knowledge index, and make it available for AI queries.

- Markdown note generation: Automatically saves AI conversations or analysis results in Markdown format for easy documentation and editing.

- Fully offline use: No internet connection is required; all functions run locally and no user data is collected.

- Cross-platform support: Compatible with Windows, macOS, and Linux for a consistent experience.

- Open source and customizable: The full source code is available, so users can modify features or contribute to the community.

Usage Guide

Installation process

Installing Klee involves two parts: the client (klee-client) and the server (klee-service). The detailed steps follow.

1. System requirements

- Operating system: Windows 7+, macOS 15.0+, or Linux.

- Software dependencies:

  - Node.js 20.x or later.

  - Yarn 1.22.19 or later.

  - Python 3.x (required for the server; 3.12+ recommended).

  - Git (for cloning the repositories).

- Hardware requirements: at least 8GB of RAM; 16GB or more is recommended for running larger models.
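
The software requirements above can be checked before installing. The following is a minimal sketch, not part of Klee: it assumes the tools are exposed on PATH under their usual executable names (`node`, `yarn`, `git`), which may differ on some systems, and it only checks that the running Python meets the recommended 3.12.

```python
import shutil
import sys

# Tools named in the requirements list; the executable names are the
# usual ones (an assumption) and may differ on some systems.
REQUIRED_TOOLS = ["node", "yarn", "git"]


def find_missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from `tools` that are not found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_is_recommended(version_info=sys.version_info):
    """Return True if the interpreter is Python 3.12 or newer."""
    return tuple(version_info[:2]) >= (3, 12)


missing = find_missing_tools()
print("Missing tools:", ", ".join(missing) if missing else "none")
print("Python 3.12+:", python_is_recommended())
```

The script does not verify the Node.js or Yarn versions; running `node --version` and `yarn --version` by hand covers that.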

2. Install the client (klee-client)

- Clone the client repository:

Run in the terminal:

git clone https://github.com/signerlabs/klee-client.git

cd klee-client

- Install dependencies:

yarn install

- Configure environment variables:

  - Copy the example file:

cp .env.example .env

  - Edit the .env file. The default configuration is as follows:

VITE_USE_SUPABASE=false

VITE_OLLAMA_BASE_URL=http://localhost:11434

VITE_REQUEST_PREFIX_URL=http://localhost:6190

If the server port or address is different, adjust VITE_REQUEST_PREFIX_URL accordingly.

- Run in development mode:

yarn dev

This starts the Vite development server and the Electron application.

- Package the application (optional):

yarn build

The packaged files are located in the dist directory.

- macOS signing (optional):

  - Edit the .env file and add your Apple ID and team information:

APPLEID=your_apple_id@example.com

APPLEIDPASS=your_password

APPLETEAMID=your_team_id

  - Run yarn build; the signed application is generated afterward.
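
To double-check which addresses the client will use, the .env values from the configuration step can be read back. This is a minimal sketch under simple assumptions: it handles only plain KEY=VALUE lines (no quoting or variable expansion), and the `parse_env` helper is hypothetical, not something Klee ships.

```python
from urllib.parse import urlparse


def parse_env(text):
    """Parse plain KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


# The default configuration quoted in the client setup step.
DEFAULT_ENV = """\
VITE_USE_SUPABASE=false
VITE_OLLAMA_BASE_URL=http://localhost:11434
VITE_REQUEST_PREFIX_URL=http://localhost:6190
"""

config = parse_env(DEFAULT_ENV)
server = urlparse(config["VITE_REQUEST_PREFIX_URL"])
print(f"Client expects the server at {server.hostname}:{server.port}")
```

If the server is started on a different port, the port printed here should match it; otherwise VITE_REQUEST_PREFIX_URL needs updating.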

3. Install the server (klee-service)

- Clone the server repository:

git clone https://github.com/signerlabs/klee-service.git

cd klee-service

- Create a virtual environment:

  - Windows:

python -m venv venv

venv\Scripts\activate

  - macOS/Linux:

python3 -m venv venv

source venv/bin/activate

- Install dependencies:

pip install -r requirements.txt

- Start the service:

python main.py

The default port is 6190. To change it:

python main.py --port <custom-port>

Keep the service running after it starts.
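
A quick way to confirm that the service is up is to test whether something accepts TCP connections on its port. The sketch below assumes the default port 6190 on localhost; it only shows that a process is listening there, not that the process is klee-service. The `is_port_open` helper is hypothetical.

```python
import socket


def is_port_open(host="localhost", port=6190, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved hosts.
        return False


print("Server reachable on port 6190:", is_port_open())
```

If the service was started with a custom `--port` value, pass the same number as `port`.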

4. Download precompiled version (optional)

- Visit the GitHub Releases page and download the installation package for your system.

- Unzip it and run it directly; no manual build is needed.

Main feature workflows

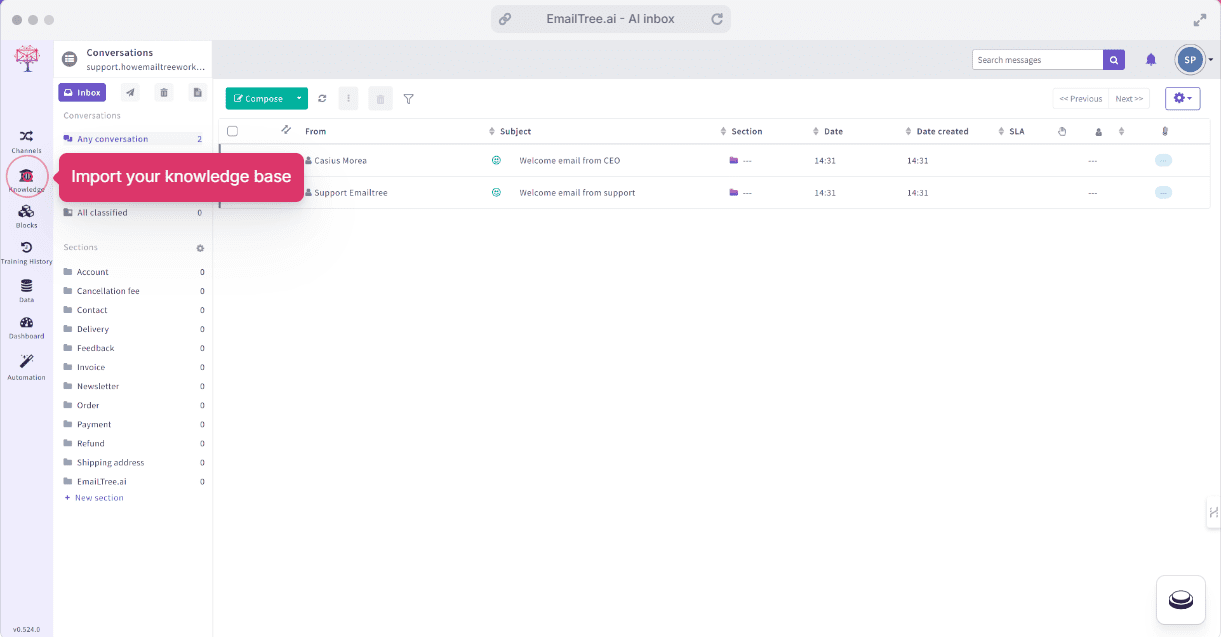

Running large language models with one click

- Launch the application:

  - Make sure the server is running, then open the client.

- Download a model:

  - Select an Ollama-supported model (e.g. LLaMA, Mistral) in the interface.

  - Click the "Download" button and Klee will automatically download the model to your machine.

- Run the model:

  - Once the download is complete, click "Run" to load the model into memory.

  - Enter a question or command in the dialog box and click "Send" to get a response.

- Notes:

  - Loading the model for the first time may take a few minutes, depending on the model size and hardware performance.

  - If there is no response, check that the server is running at http://localhost:6190.
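
When troubleshooting, it can help to confirm that a downloaded model answers outside the Klee interface. The sketch below talks to Ollama's own REST API (`POST /api/generate`) at the default address from the .env example. It bypasses Klee entirely, and how Klee itself calls Ollama is not covered here. The helper names and the model name `mistral` are illustrative assumptions.

```python
import json
import urllib.request

OLLAMA_BASE_URL = "http://localhost:11434"  # default from the .env example


def build_generate_request(model, prompt, base_url=OLLAMA_BASE_URL):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model, prompt, timeout=120):
    """Send the prompt and return the generated text (needs a running Ollama)."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With Ollama running and the model already downloaded, `print(ask_ollama("mistral", "Say hello in one sentence."))` should print a short reply. A connection error here points at Ollama rather than at the Klee server.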

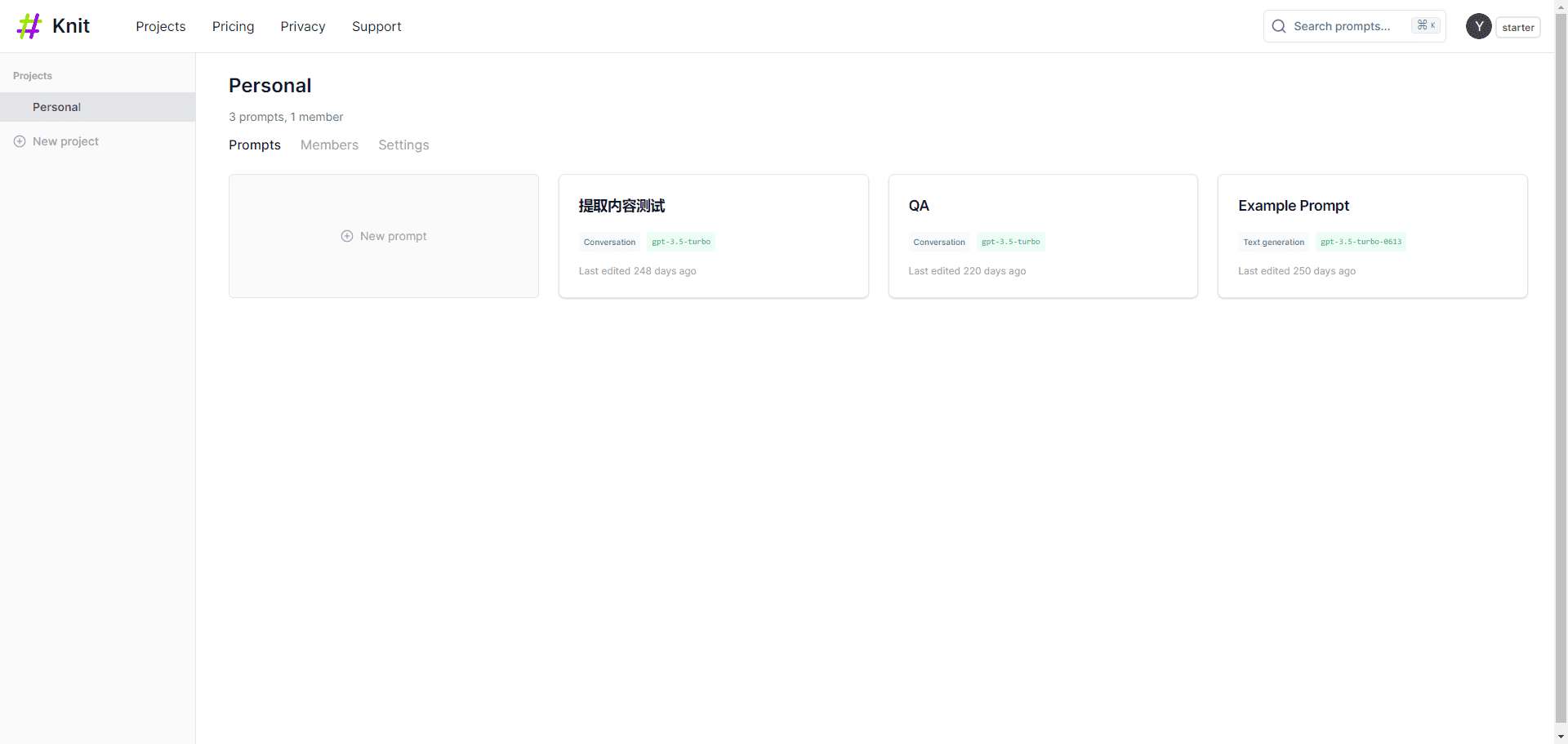

Local knowledge base management

- Upload files:

  - Click the "Knowledge" option in the interface.

  - Drag and drop files or folders, or select them manually (PDF, TXT, and other formats are supported).

- Build the index:

  - After uploading, LlamaIndex automatically generates an index for the files.

  - Once indexing is complete, the AI can retrieve the file contents.

- Query the knowledge base:

  - Check "Use Knowledge Base" on the dialog screen and enter your question.

  - The AI generates answers that draw on the knowledge base content.

- Manage the knowledge base:

  - You can delete or update files on the Knowledge screen.
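
The index-then-query flow above can be illustrated with a toy example. This is deliberately not LlamaIndex and not Klee's implementation: it swaps in plain word-overlap scoring for a real index, only to show the idea of indexing documents, retrieving the best match, and prepending it to the question. All names and sample documents are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each document name to a word-count table (the toy index)."""
    return {name: Counter(tokenize(body)) for name, body in documents.items()}


def retrieve(index, question, top_k=1):
    """Return up to top_k document names sharing the most words with the question."""
    words = tokenize(question)
    scores = {name: sum(counts[w] for w in words) for name, counts in index.items()}
    ranked = sorted(scores, key=lambda name: scores[name], reverse=True)
    return [name for name in ranked[:top_k] if scores[name] > 0]


def build_prompt(documents, index, question):
    """Prepend the retrieved documents to the question as context."""
    context = "\n".join(documents[name] for name in retrieve(index, question))
    return f"Context:\n{context}\n\nQuestion: {question}"


docs = {
    "install.txt": "The server listens on port 6190 by default.",
    "notes.txt": "Notes are saved in Markdown format.",
}
index = build_index(docs)
print(build_prompt(docs, index, "Which port does the server use?"))
```

A real index would typically rank by semantic similarity rather than shared words, but the overall shape (index once, retrieve per question, pass the result to the model) is the same.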

Markdown note generation

- Save notes:

  - When the AI responds, click the "Save as Note" button.

  - The system automatically saves the content in Markdown format.

- Manage notes:

  - View all notes on the Notes screen.

  - Notes can be edited, exported (saved as .md files), or deleted.

- Usage scenarios:

  - Ideal for recording AI analysis results, study notes, or work summaries.
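
As an illustration of what saving a conversation as Markdown can look like, the sketch below renders question and answer pairs into a note. The layout (a title, a date line, one heading per question) is an assumption made for this example and is not Klee's actual note format; the date and sample content are made up.

```python
from datetime import date


def conversation_to_markdown(title, turns, day=None):
    """Render (question, answer) pairs as a Markdown note."""
    day = day or date.today()
    lines = [f"# {title}", "", f"*Saved on {day.isoformat()}*", ""]
    for question, answer in turns:
        lines += [f"## {question}", "", answer, ""]
    return "\n".join(lines)


note = conversation_to_markdown(
    "Klee setup",
    [("Which port does the server use?", "Port 6190 by default.")],
    day=date(2025, 1, 1),  # fixed example date
)
print(note)
```

Writing the result to disk is one more line, for example `pathlib.Path("klee-setup.md").write_text(note, encoding="utf-8")`.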

Featured Functions

Fully offline use

- How it works:

  - Once installation is complete, all functions work without a network connection.

  - After downloading a model, you can disconnect from the network and Klee still works normally.

- Data security:

  - Klee does not collect any user data; all files and conversations are stored locally only.

  - Logs are used for debugging purposes only and are not uploaded to external servers.

Open Source and Community Contributions

- Get the source code:

  - Visit the GitHub repositories and download the code.

- How to contribute:

  - Submit a Pull Request to add a feature or fix a bug.

  - Participate in GitHub Issues discussions, improve the documentation, or promote the application.

- Customization:

  - Modify the server side to support other models or APIs.

  - Adjusting the client interface requires familiarity with React and Electron.

Recommendations for use

- Performance optimization: When running large models (e.g. 13B parameters), 16GB+ of RAM or GPU acceleration is recommended.

- Model selection: For first-time testing, choose a smaller model (e.g. 7B parameters).

- Feedback: Seek help on GitHub or Discord.
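
The RAM recommendations above follow from simple arithmetic: the weights alone take roughly the parameter count times the bytes per weight. The sketch below works this out for 7B and 13B models. The 4-bit and 16-bit precisions are assumptions chosen for illustration, and actual memory use is higher because of context caches and runtime overhead.

```python
def weights_size_gb(params_billion, bits_per_weight):
    """Approximate size of the model weights alone, in GB (10**9 bytes)."""
    return params_billion * bits_per_weight / 8


for params in (7, 13):
    for bits in (4, 16):
        size = weights_size_gb(params, bits)
        print(f"{params}B parameters at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions a 7B model at 4-bit needs about 3.5 GB for weights and a 13B model about 6.5 GB, which is consistent with starting on a smaller model when the machine has 8GB of RAM.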

With these steps, users can quickly install and use Klee to enjoy the convenience of localized AI.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
