KBLaM: An Open Source Enhanced Tool for Embedding External Knowledge in Large Models

General Introduction

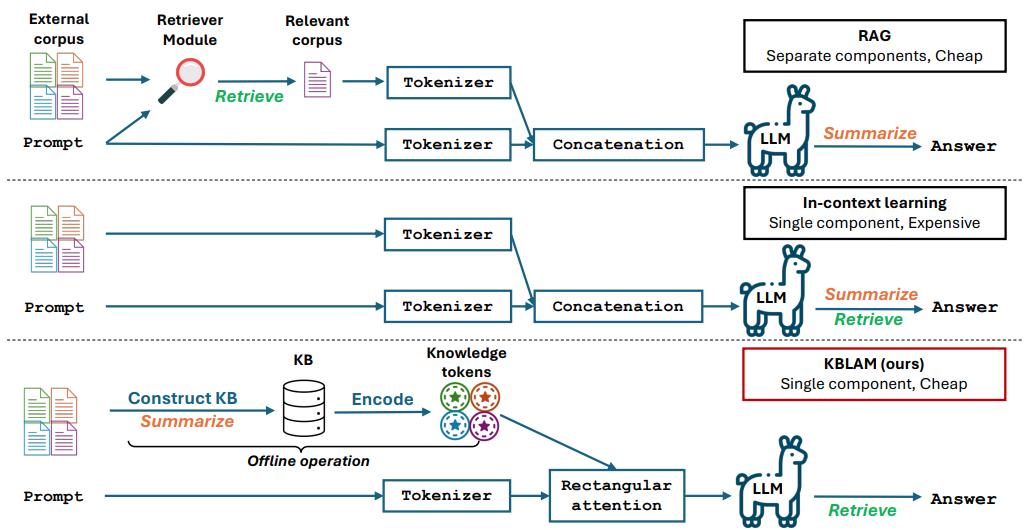

KBLaM is an open source project developed by Microsoft; the name stands for "Knowledge Base Augmented Language Model". It transforms external knowledge into vectors and embeds them in the attention layers of a large model, so that the model can use this knowledge directly when answering questions or reasoning. Compared to traditional retrieval-augmented generation (RAG), it does not require an additional retrieval module, and compared to in-context learning, its computational cost grows linearly with the size of the knowledge base rather than quadratically. KBLaM is open-sourced on GitHub and is aimed primarily at researchers and developers who want to explore how large models can process external information more efficiently. It currently supports models such as Meta's Llama family and Microsoft's Phi-3.
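The linear-versus-quadratic claim can be made concrete with a rough count of attention scores. The sketch below is a simplified cost model, not a measurement: it assumes each knowledge entry costs a fixed number of tokens when pasted into the context, that each entry becomes a single knowledge vector in KBLaM, and it ignores causal masking and all other model costs. The function names and the example sizes are illustrative.

```python
def in_context_attention_scores(num_entries: int, tokens_per_entry: int, prompt_tokens: int) -> int:
    """Full self-attention: every token in the sequence attends to every token."""
    seq_len = num_entries * tokens_per_entry + prompt_tokens
    return seq_len * seq_len


def kblam_attention_scores(num_entries: int, prompt_tokens: int) -> int:
    """Rectangular attention: each prompt token attends to all entries and to the prompt."""
    return prompt_tokens * (num_entries + prompt_tokens)


# Compare how the two counts grow as the knowledge base gets larger.
for n in (100, 1_000, 10_000):
    ctx = in_context_attention_scores(n, tokens_per_entry=20, prompt_tokens=50)
    kb = kblam_attention_scores(n, prompt_tokens=50)
    print(f"{n:>6} entries: in-context {ctx:>15,}  rectangular {kb:>10,}")
```

Under these assumptions, adding one entry always adds the same number of scores to the rectangular count, while the in-context count grows with the square of the total sequence length.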

Function List

- Transforms external knowledge bases into key-value vector pairs for model enhancement.

- Embeds knowledge in large models using a rectangular attention mechanism.

- Supports dynamic updating of the knowledge base without retraining the model.

- Computational cost grows linearly with the size of the knowledge base.

- Provides open source code, experimental scripts and datasets to facilitate research and development.

- Supports tasks such as question answering and reasoning, generating answers based on the knowledge base.

- Leaves the text processing capability of the base model unmodified, so its original performance is maintained.
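To illustrate what "rectangular attention" over key-value vector pairs means, here is a minimal single-head NumPy sketch. It assumes that every prompt token may attend to all knowledge entries plus the preceding prompt tokens, and that knowledge entries do not attend to anything. Learned projections, multiple heads and any KBLaM-specific details are omitted, so treat it as a picture of the attention shape rather than the project's implementation.

```python
import numpy as np


def rectangular_attention(q, k_prompt, v_prompt, k_kb, v_kb):
    """Attend from n prompt tokens to m knowledge entries plus the prompt itself.

    q, k_prompt, v_prompt: (n, d) arrays; k_kb, v_kb: (m, d) arrays.
    Returns the (n, d) outputs and the (n, m + n) attention weights.
    """
    n, d = q.shape
    m = k_kb.shape[0]
    keys = np.concatenate([k_kb, k_prompt], axis=0)    # (m + n, d)
    values = np.concatenate([v_kb, v_prompt], axis=0)  # (m + n, d)
    scores = q @ keys.T / np.sqrt(d)                   # (n, m + n): the "rectangle"

    # Knowledge entries are visible to every prompt token; the prompt part is causal.
    causal = np.tril(np.ones((n, n), dtype=bool))
    mask = np.concatenate([np.ones((n, m), dtype=bool), causal], axis=1)
    scores = np.where(mask, scores, -np.inf)

    scores -= scores.max(axis=1, keepdims=True)        # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ values, weights


rng = np.random.default_rng(0)
n, m, d = 4, 6, 8  # illustrative sizes: 4 prompt tokens, 6 knowledge entries
out, w = rectangular_attention(
    rng.normal(size=(n, d)), rng.normal(size=(n, d)), rng.normal(size=(n, d)),
    rng.normal(size=(m, d)), rng.normal(size=(m, d)),
)
print(out.shape, w.shape)  # (4, 8) (4, 10)
```

The weight matrix has n rows and m + n columns, so enlarging the knowledge base only widens the rectangle; no knowledge-to-knowledge scores are ever computed.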

Usage Guide

KBLaM is an open source research tool, with code and documentation available on GitHub. Below is a detailed installation and usage guide to help users get started quickly.

Installation process

- Prepare the environment

Requires Python 3.8 or higher and Git; Linux or Windows is recommended. If you are working with large-scale knowledge bases, an NVIDIA GPU (e.g., an A100 with 80GB or more of video memory) is recommended.

- Download the repository

Open a terminal and clone the KBLaM repository:

git clone https://github.com/microsoft/KBLaM.git

Go to the project directory:

cd KBLaM

- Install dependencies

Run the following command to install the required libraries:

pip install -e .

This will install PyTorch, Transformers, and other dependencies. If you want to use a Llama model, you will also need to install the Hugging Face Hub client and log in:

pip install huggingface_hub

huggingface-cli login

To log in, you need to generate a token from Hugging Face.

- Verify Installation

Run the test script to confirm that the environment is OK:

python -m kblam.test

If no errors are reported, the installation was successful.
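Before running the project's own test, it can help to confirm the prerequisites listed above. The following is a small standalone check and not part of KBLaM; the function name and the package list are illustrative choices. It only looks for Python 3.8+, Git on the PATH, and whether the named packages can be found, without importing them.

```python
import importlib.util
import shutil
import sys


def check_environment(min_python=(3, 8), packages=("torch", "transformers")):
    """Return a dict describing whether the basic prerequisites are present."""
    report = {
        "python_ok": sys.version_info[:2] >= min_python,
        "git_found": shutil.which("git") is not None,
    }
    for name in packages:
        # find_spec locates a top-level package without importing it.
        report[f"{name}_installed"] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item:<24} {'OK' if ok else 'MISSING'}")
```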

Main Functions

1. Creating a knowledge base

KBLaM needs to transform external knowledge into vector pairs.

- Steps:

- Prepare the knowledge base file (e.g. in JSON format), example:

{"entity": "AI", "description": "Artificial intelligence is technology that simulates human intelligence"}

- Generate vectors:

python dataset_generation/generate_kb_embeddings.py --input knowledge.json --output embeddings.npy

- Supported embedding models include text-embedding-ada-002 and all-MiniLM-L6-v2. The output file embeddings.npy contains the knowledge vectors.
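When a knowledge base grows beyond a few hand-written entries, it is convenient to validate it before generating vectors. The sketch below uses the "entity" and "description" field names from the example entry; writing the entries as a single JSON array is an assumption, since the exact file layout the script expects is not described here. Both helper functions are hypothetical and not part of KBLaM.

```python
import json
from pathlib import Path

REQUIRED_FIELDS = ("entity", "description")  # field names taken from the example entry


def validate_entries(entries):
    """Raise ValueError on the first malformed entry; return the entries otherwise."""
    for i, entry in enumerate(entries):
        for field in REQUIRED_FIELDS:
            value = entry.get(field)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"entry {i}: missing or empty {field!r}")
    return entries


def write_knowledge_base(entries, path="knowledge.json"):
    """Write validated entries as a JSON array (an assumed layout)."""
    Path(path).write_text(
        json.dumps(validate_entries(entries), ensure_ascii=False, indent=2),
        encoding="utf-8",
    )
    return Path(path)
```

Calling write_knowledge_base with a list of entries produces a knowledge.json file that can then be passed to the embedding step.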

2. Embedding knowledge into models

Embed the knowledge vectors in the attention layers of a large model.

- Steps:

- Download a supported model (e.g. meta-llama/Meta-Llama-3-8B-Instruct).

- Run the integration script:

python src/kblam/integrate.py --model meta-llama/Meta-Llama-3-8B-Instruct --kb embeddings.npy --output enhanced_model

- The enhanced model is written to the directory enhanced_model.

3. Testing the enhanced model

Load the enhanced model and check its answers.

- Steps:

- Run the test script:

python src/kblam/evaluate.py --model enhanced_model --question "What is AI?"

- The model should return an answer such as "AI is technology that simulates human intelligence."
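To ask several questions in a row, the test command can be generated from a list. This sketch only builds the command lines; the script path and the --model and --question flags are copied from the command in this article and are not independently verified, and build_eval_command is a hypothetical helper.

```python
import shlex
import sys


def build_eval_command(question, model_dir="enhanced_model", script="src/kblam/evaluate.py"):
    """Return the argv list for one evaluation run, using the flags quoted in this article."""
    return [sys.executable, script, "--model", model_dir, "--question", question]


questions = ["What is AI?", "What is KBLaM?"]
for q in questions:
    # Print a copy-pasteable command line with correct shell quoting.
    print(shlex.join(build_eval_command(q)))
    # To actually run it: subprocess.run(build_eval_command(q), check=True)
```

Passing the arguments as a list rather than a single string avoids quoting problems when a question contains spaces or quotation marks.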

Featured Functions

Dynamic updating of the knowledge base

KBLaM supports updating the knowledge base at any time without retraining the model.

- Steps:

- Modify the knowledge base file to add new entries:

{"entity": "KBLaM", "description": "A knowledge augmentation tool developed by Microsoft"}

- Generate new vectors:

python dataset_generation/generate_kb_embeddings.py --input updated_knowledge.json --output new_embeddings.npy

- Update the model:

python src/kblam/integrate.py --model enhanced_model --kb new_embeddings.npy --output updated_model

- The updated model is immediately ready to use the new knowledge.
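The first step, adding entries to the knowledge base file, can be scripted so that an updated description replaces the old one instead of creating a duplicate. This is a minimal sketch that treats the "entity" field as a unique key, which is an assumption; merge_entries is a hypothetical helper.

```python
def merge_entries(existing, new):
    """Merge two lists of {"entity", "description"} dicts; later entries win on conflicts."""
    merged = {entry["entity"]: entry for entry in existing}
    for entry in new:
        merged[entry["entity"]] = entry
    return list(merged.values())


old = [{"entity": "AI", "description": "Technology that simulates human intelligence"}]
new = [{"entity": "KBLaM", "description": "A knowledge augmentation tool developed by Microsoft"}]
print([e["entity"] for e in merge_entries(old, new)])  # ['AI', 'KBLaM']
```

The merged list can then be saved as the updated knowledge base file and passed through the vector generation step again.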

Training adapters

Train the adapters to improve how well the knowledge is embedded.

- Steps:

- Train with a synthetic dataset:

python train.py --dataset synthetic_data --N 120000 --B 20 --total_steps 601 --encoder_spec OAI --use_oai_embd --key_embd_src key --use_data_aug

- An Azure OpenAI endpoint is required to generate synthetic data; see dataset_generation/gen_synthetic_data.py.
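One way to picture what is being trained: an adapter that maps fixed sentence embeddings to the key and value vectors an attention layer consumes, while the base model stays frozen. The sketch below assumes the adapter is a pair of linear maps; the article does not describe its architecture, so the class and the dimensions are illustrative only (384 is the output size of all-MiniLM-L6-v2, and 64 is an arbitrary target size).

```python
import numpy as np


class LinearAdapter:
    """Maps frozen sentence embeddings to key/value vectors for one attention layer."""

    def __init__(self, embed_dim, model_dim, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(embed_dim)
        # These two matrices stand in for the only trainable parameters.
        self.w_key = rng.normal(scale=scale, size=(embed_dim, model_dim))
        self.w_value = rng.normal(scale=scale, size=(embed_dim, model_dim))

    def __call__(self, key_embeddings, value_embeddings):
        return key_embeddings @ self.w_key, value_embeddings @ self.w_value


adapter = LinearAdapter(embed_dim=384, model_dim=64)
keys, values = adapter(np.ones((5, 384)), np.ones((5, 384)))  # 5 knowledge entries
print(keys.shape, values.shape)  # (5, 64) (5, 64)
```

Because the embeddings are computed once per entry and the adapter is applied afterwards, new entries can be mapped to key-value pairs without touching the base model's weights.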

Reproducing the Experiments

The repository provides experimental scripts that reproduce the results of the paper.

- Steps:

- Go to the experiments directory:

cd experiments

- Run the script:

python run_synthetic_experiments.py

- Azure OpenAI keys need to be configured to generate synthetic datasets.
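A quick pre-flight check can catch missing credentials before a long run starts. The variable names below are an assumption: they are the ones the openai Python client reads for Azure, and the KBLaM scripts may expect different names or command-line arguments, so check the repository before relying on them.

```python
import os

# Assumed variable names (the ones the `openai` Python client reads for Azure);
# check the repository scripts for the names they actually expect.
REQUIRED_VARS = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")


def missing_settings(env=None, required=REQUIRED_VARS):
    """Return the names of required settings that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_settings()
    if missing:
        print("Missing settings:", ", ".join(missing))
    else:
        print("Azure OpenAI settings found.")
```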

Caveats

- Model support: Currently supported models are Meta-Llama-3-8B-Instruct, Llama-3.2-1B-Instruct, and Phi-3-mini-4k-instruct. Supporting additional models requires modifying the adapter code in src/kblam/models.

- Hardware requirements: High-performance GPUs are required to process large knowledge bases, while small-scale experiments can be run on CPUs.

- Feedback: If you run into problems, check SUPPORT.md or submit an issue on GitHub.

Application Scenarios

- Research experiments

Researchers can use KBLaM to test how large models handle specialized knowledge, for example by embedding a chemistry knowledge base to improve answer accuracy.

- Corporate Q&A

Developers can turn company documents into a knowledge base and build intelligent assistants that quickly answer employee or customer questions.

- Educational aids

Teachers can embed course materials into KBLaM to create a tool that answers students' questions and supports learning.

FAQ

- How is KBLaM different from traditional fine-tuning?

KBLaM does not modify the base model; it only trains the adapters that embed the knowledge. Traditional fine-tuning retrains the entire model, which is more costly.

- Is it suitable for a production environment?

KBLaM is a research project. Answers may be inaccurate if the knowledge base differs too much from the training data. The official recommendation is to use it for research only.

- How can I evaluate the performance of KBLaM?

Performance is evaluated by retrieval accuracy (how often the correct knowledge is retrieved), rejection rate (whether unanswerable questions are correctly identified), and the precision and recall of answers.
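These metrics can be computed from per-question records. The sketch below is one possible reading, not the project's evaluation code: retrieval accuracy compares the knowledge entry the model selected with the correct one, and precision and recall are computed over refusals with "unanswerable" as the positive class. Both function names and the input format are assumptions.

```python
def retrieval_accuracy(predicted_entries, gold_entries):
    """Fraction of questions for which the selected knowledge entry matches the gold one."""
    pairs = list(zip(predicted_entries, gold_entries))
    return sum(p == g for p, g in pairs) / len(pairs)


def refusal_metrics(refused, unanswerable):
    """Refusal rate, plus precision and recall with 'unanswerable' as the positive class."""
    pairs = list(zip(refused, unanswerable))
    tp = sum(r and u for r, u in pairs)      # refused, and the question was unanswerable
    fp = sum(r and not u for r, u in pairs)  # refused an answerable question
    fn = sum(u and not r for r, u in pairs)  # answered an unanswerable question
    return {
        "refusal_rate": sum(refused) / len(refused),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


print(retrieval_accuracy([1, 2, 3, 4], [1, 2, 0, 4]))  # 0.75
print(refusal_metrics([True, True, False, False], [True, False, True, False]))
```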

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.