Jina AI Introduces Reader-LM, a Revolutionary Small Language Model to Efficiently Extract the Main Content of HTML Web Pages

Jina AI has released Reader-LM-0.5B and Reader-LM-1.5B, two small language models designed to convert raw, noisy HTML from the open web into clean Markdown. Both models support context lengths of up to 256K tokens and perform comparably to, or better than, much larger language models on this conversion task.

Introduction

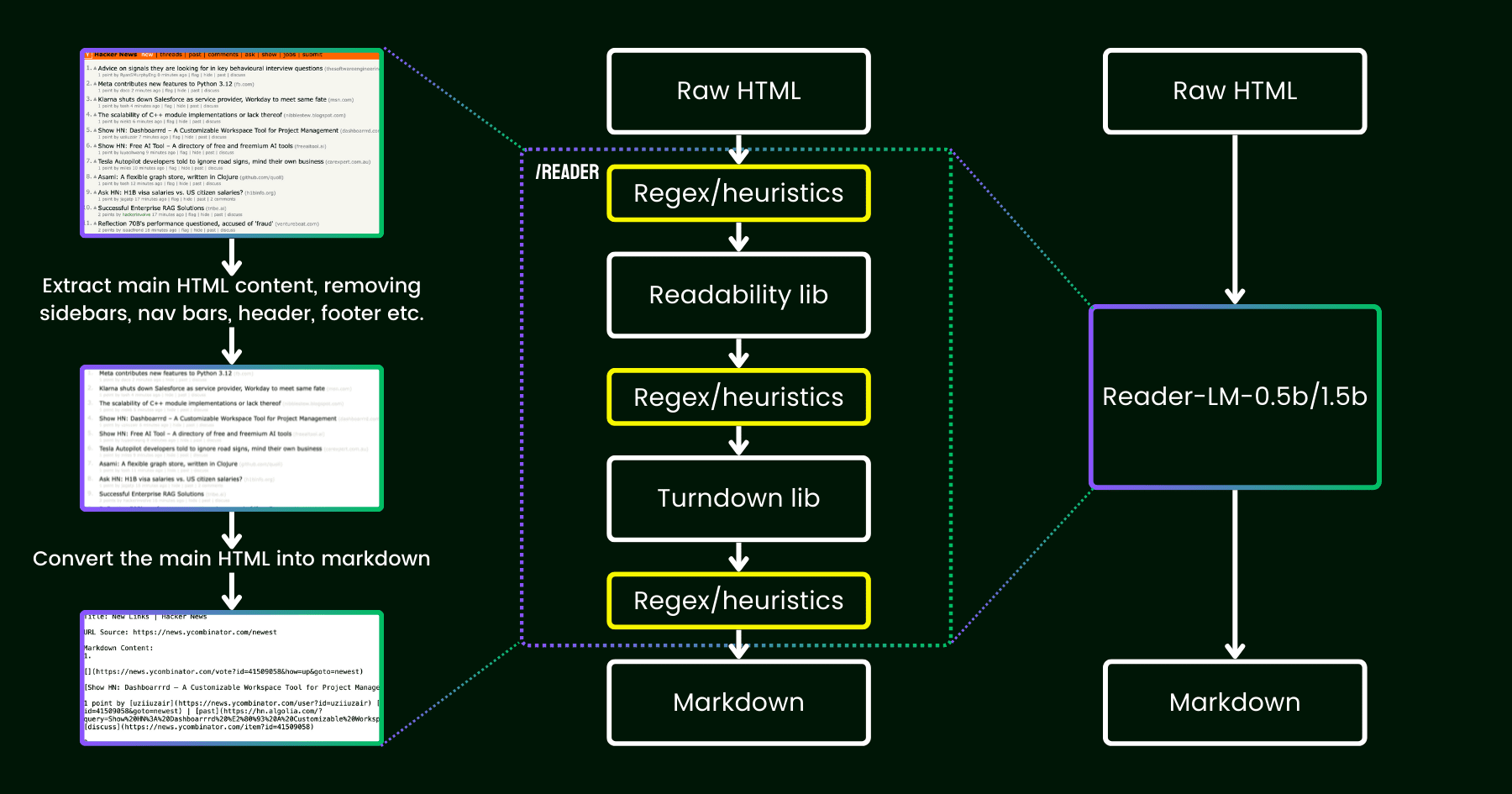

Jina AI released Jina Reader in April 2024, a simple API that converts any URL into LLM-friendly Markdown. The API uses a headless Chrome browser to fetch the source of a web page, extracts the main content with Mozilla's Readability package, and converts the cleaned-up HTML to Markdown using regular expressions and the Turndown library.
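
To give a feel for what a heuristic conversion step looks like, here is a minimal Python sketch. It is not Jina Reader's code: the real pipeline relies on headless Chrome, Readability, and Turndown, while this stand-in uses only regular expressions on an HTML string. The function name `html_to_markdown_heuristic` and the specific rules are assumptions made for illustration.

```python
import re


def html_to_markdown_heuristic(html: str) -> str:
    """Toy regex-based HTML-to-Markdown converter (illustrative only)."""
    # Drop <script> and <style> blocks together with their contents.
    text = re.sub(r"(?is)<(script|style)\b.*?>.*?</\1>", "", html)
    # Turn <h1>..<h6> into Markdown headings surrounded by blank lines.
    text = re.sub(
        r"(?is)<h([1-6])\b[^>]*>(.*?)</h\1>",
        lambda m: "\n\n" + "#" * int(m.group(1)) + " " + m.group(2).strip() + "\n\n",
        text,
    )
    # Turn double-quoted links into [text](href).
    text = re.sub(
        r'(?is)<a\b[^>]*href="([^"]*)"[^>]*>(.*?)</a>',
        r"[\2](\1)",
        text,
    )
    # End of a paragraph becomes a blank line.
    text = re.sub(r"(?i)</p\s*>", "\n\n", text)
    # Strip every remaining tag.
    text = re.sub(r"(?s)<[^>]+>", "", text)
    # Collapse runs of spaces and of blank lines.
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n\s*\n+", "\n\n", text)
    return text.strip()


sample = (
    "<html><head><style>p{color:red}</style></head><body>"
    '<h1>Title</h1><p>See <a href="https://example.com">this</a>.</p>'
    "<script>alert(1)</script></body></html>"
)
print(html_to_markdown_heuristic(sample))
```

Even this small example shows why such rules are hard to maintain: an `href` written with single quotes slips past the link rule and loses its URL, and every such case needs yet another pattern.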

After the release, user feedback pointed to issues with the quality of the content, which Jina AI addressed by patching the existing pipeline.

Since then, the Jina AI team has been pondering a question: instead of patching the pipeline with more heuristics and regular expressions (which are increasingly difficult to maintain and do not generalize well across languages), can this problem be solved end-to-end with a single language model?

Illustration of Reader-LM replacing the pipeline of Readability + Turndown + regex heuristics with a small language model.

About Reader-LM

September 11, 2024 -- Jina AI today announced Reader-LM-0.5B and Reader-LM-1.5B, two small language models for processing raw HTML content from the open web. The models convert complex HTML into structured Markdown, supporting content management and machine learning applications that work with large volumes of web data.

Breakthrough performance and efficiency

The Reader-LM-0.5B and Reader-LM-1.5B models achieve comparable or even better performance than larger language models while maintaining a compact parameter scale. Supporting context lengths of up to 256K tokens, these two models are able to handle noisy elements such as inline CSS, scripts, etc. in modern HTML, producing clean, well-structured Markdown files. This is a great convenience for users who need to extract and convert text from raw web content.

User-friendly hands-on experience

Jina AI provides Google Colab notebooks for both the 0.5B and 1.5B models, so users can try Reader-LM in a free, cloud-based environment. In the notebook, users can load either version of the model, change the URL of the website to process, and explore the output. Additionally, Reader-LM will soon be available in the Azure and AWS marketplaces, providing more integration and deployment options for enterprise users.
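
Outside of Colab, a local run might look like the following sketch, which uses the Hugging Face `transformers` library. The article does not show this code. The checkpoint name `jinaai/reader-lm-0.5b`, the choice to send the raw HTML as the only user message, and the `repetition_penalty` value are assumptions that should be checked against the model card before use.

```python
def build_messages(html: str) -> list[dict]:
    """Wrap raw HTML as a single user turn (assumed prompt format)."""
    return [{"role": "user", "content": html}]


def html_to_markdown(
    html: str,
    checkpoint: str = "jinaai/reader-lm-0.5b",  # assumed Hugging Face model id
    max_new_tokens: int = 1024,
) -> str:
    """Convert HTML to Markdown with a Reader-LM checkpoint (sketch)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(checkpoint)

    # Render the chat template to text, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(html), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")

    # Greedy decoding; the repetition penalty value is an assumption.
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        repetition_penalty=1.08,
    )
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    # Downloads the model weights on first run.
    print(html_to_markdown("<html><body><h1>Hello, world!</h1></body></html>"))
```

Switching to the larger model would only require passing a different `checkpoint` value.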

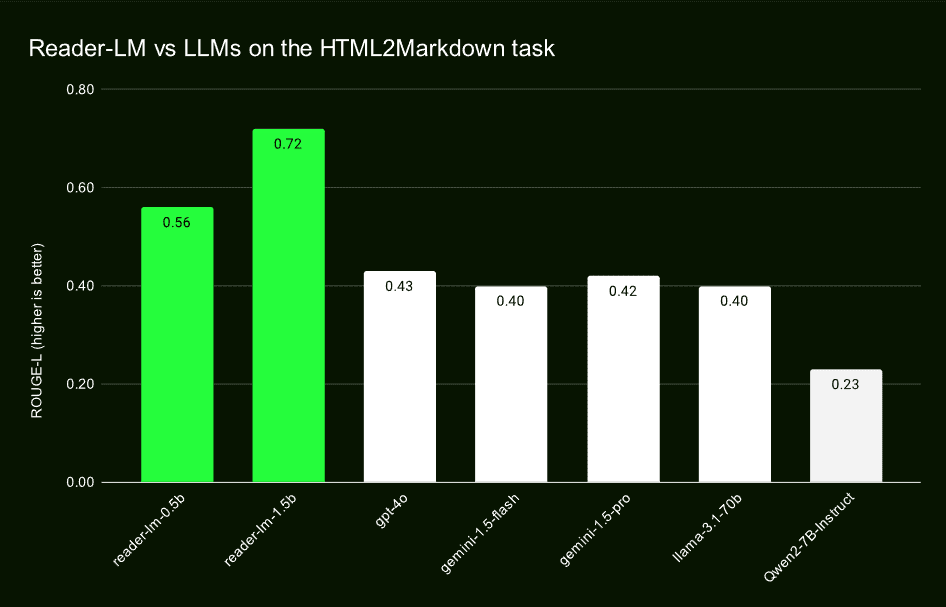

Performance beyond traditional models

In comparison tests against large language models such as GPT-4o, Gemini-1.5-Flash, Gemini-1.5-Pro, LLaMA-3.1-70B, and Qwen2-7B-Instruct, Reader-LM achieved strong results on key metrics including ROUGE-L, Word Error Rate (WER), and Token Error Rate (TER). According to Jina AI, these evaluations show Reader-LM leading in accuracy, recall, and the ability to generate clean Markdown.
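
For readers unfamiliar with these metrics, the sketch below computes two of them in plain Python: ROUGE-L (as an F1 score over the longest common subsequence) and WER (word-level edit distance divided by reference length). Whitespace tokenization and the F1 variant of ROUGE-L are assumptions, since the article does not describe Jina AI's exact evaluation setup. TER is left out because the article does not define it.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference: str, candidate: str) -> float:
    """ROUGE-L F1 over whitespace tokens; higher is better."""
    ref, cand = reference.split(), candidate.split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def word_error_rate(reference: str, candidate: str) -> float:
    """Word-level Levenshtein distance / reference length; lower is better."""
    ref, cand = reference.split(), candidate.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    prev = list(range(len(cand) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, c in enumerate(cand, start=1):
            cost = 0 if r == c else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / len(ref)


reference_md = "# Title\n\nHello world"
candidate_md = "# Title\n\nHello there world"
print(rouge_l_f1(reference_md, candidate_md))
print(word_error_rate(reference_md, candidate_md))
```

In this example the candidate inserts one extra word, so recall stays perfect while precision and WER both register the error.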

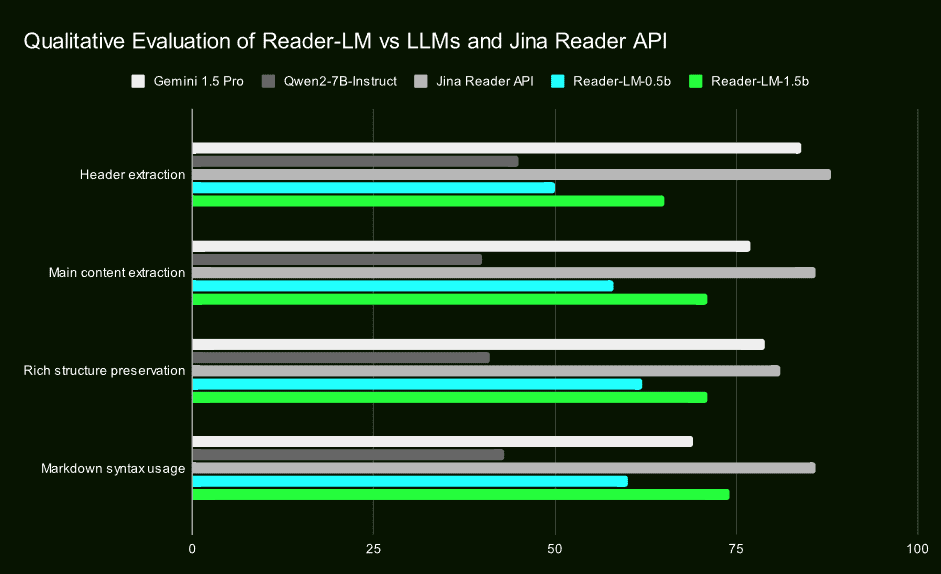

Qualitative research confirms its benefits

In addition to the quantitative evaluation, Jina AI ran a qualitative study based on visual inspection of the output Markdown. It reported that Reader-LM performed well in header extraction, main content extraction, structure preservation, and Markdown syntax usage. These findings support the efficiency and reliability of Reader-LM in real-world applications.

An innovative approach to two-stage training

Jina AI described how Reader-LM was trained, including data preparation, the two-stage training process, and how the team overcame degeneration and looping (the model repeating the same output). They emphasized the importance of training data quality, and they used techniques such as contrastive search and a repetition stop criterion to keep the model stable and the generated output clean.
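
A repetition-based stopping rule of the kind mentioned above can be sketched as follows. The article only names the technique, so this is one possible reading of it: decoding stops once the generated tokens end in the same block repeated several times. The function names, the thresholds (`max_period`, `min_repeats`), and the `next_token` callable standing in for a model are all hypothetical.

```python
from typing import Callable, Sequence


def has_repeating_tail(
    token_ids: Sequence[int],
    max_period: int = 20,
    min_repeats: int = 3,
) -> bool:
    """True if the sequence ends with one block repeated `min_repeats` times."""
    n = len(token_ids)
    for period in range(1, max_period + 1):
        span = period * min_repeats
        if span > n:
            break  # not enough tokens for this or any longer period
        tail = token_ids[n - span:]
        block = tail[:period]
        if all(tail[i] == block[i % period] for i in range(span)):
            return True
    return False


def generate_with_stop(
    next_token: Callable[[list[int]], int],  # hypothetical stand-in for a model
    prompt_ids: Sequence[int],
    max_new_tokens: int = 100,
    eos_id: int = 0,
) -> list[int]:
    """Greedy loop that stops on EOS or as soon as the output starts looping."""
    generated: list[int] = []
    for _ in range(max_new_tokens):
        token = next_token(list(prompt_ids) + generated)
        if token == eos_id:
            break
        generated.append(token)
        if has_repeating_tail(generated):
            break
    return generated


# A degenerate "model" that always emits token 7 is cut off after 3 tokens.
print(generate_with_stop(lambda ids: 7, prompt_ids=[1, 2, 3]))
```

With Hugging Face `transformers`, the same check could be placed inside a custom `StoppingCriteria` subclass. The thresholds would need tuning, because legitimate Markdown such as long tables can also contain repeated token patterns.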

Conclusion

Jina AI's Reader-LM is not only a major breakthrough in the field of small-scale language modeling, but also a significant enhancement to the processing power of open web content. The release of these two models not only provides developers and data scientists with an efficient, easy-to-use tool, but also opens up new possibilities for AI applications in content extraction, cleansing, and transformation.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
