Parsing Apple's end-testing model pre-built cue words/commands

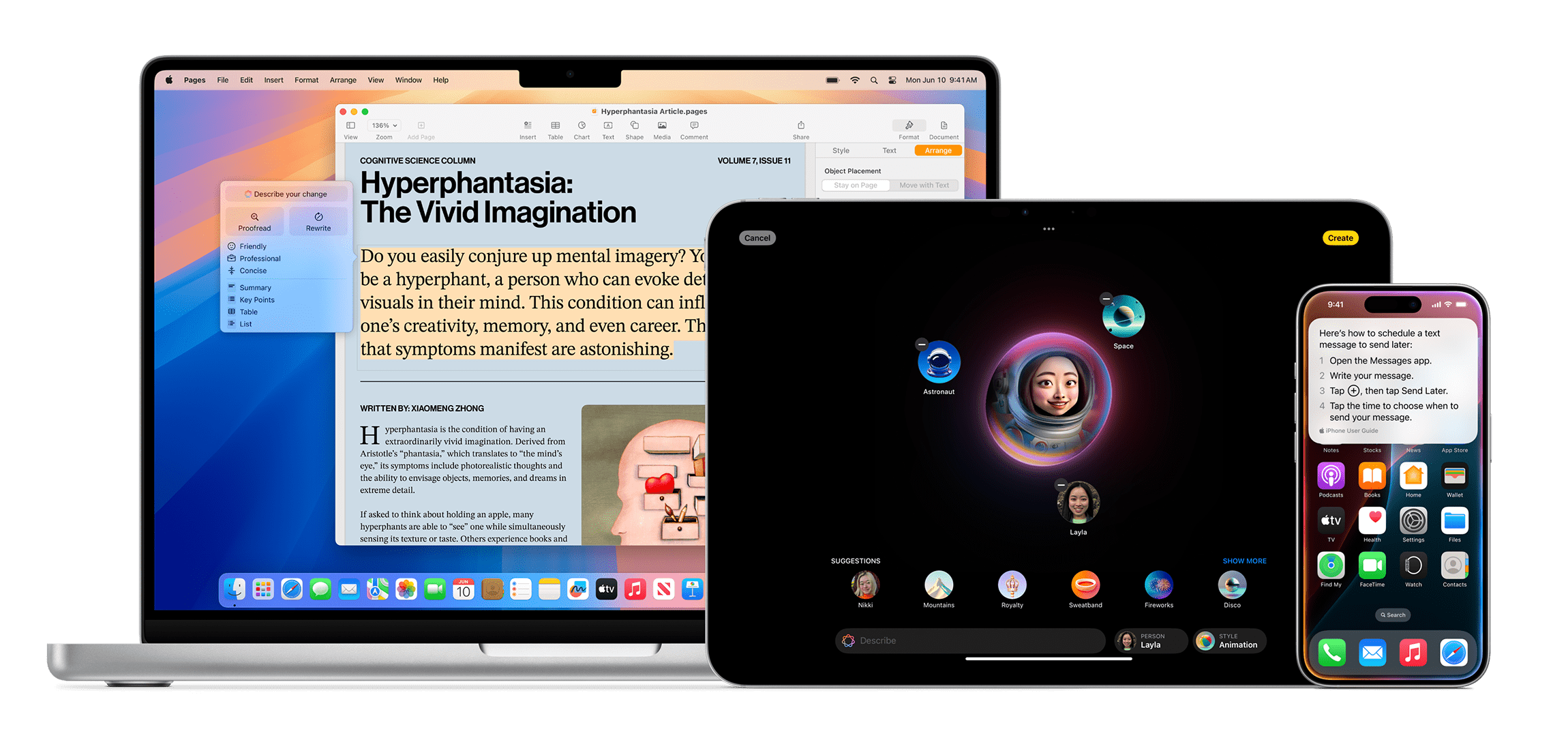

Apple Intelligence is the upcoming suite of device-side AI tools from Apple. It puts powerful generative AI models in iPhone, iPad, and Mac and delivers incredible new features to help users communicate, work, and express themselves. You can bring these Apple Intelligence features right into your apps.

And in the preview version, Apple Intelligence pre-made prompts/commands have been disclosed in advance, he is hidden in your computer. We can learn how they work from it.

They are stored as json files in the directory "/System/Library/AssetsV2/com_apple_MobileAsset_UAF_FM_GenerativeModels".

These instructions appear as a default prompt before you say anything to the chatbot, and we've seen them revealed before in AI tools like Microsoft Bing and DALL-E. Now, a member of the macOS 15.1 beta subreddit has posted the files they found to contain these background prompts. You can't change any of the files, but they do provide an initial glimpse into how these features work.

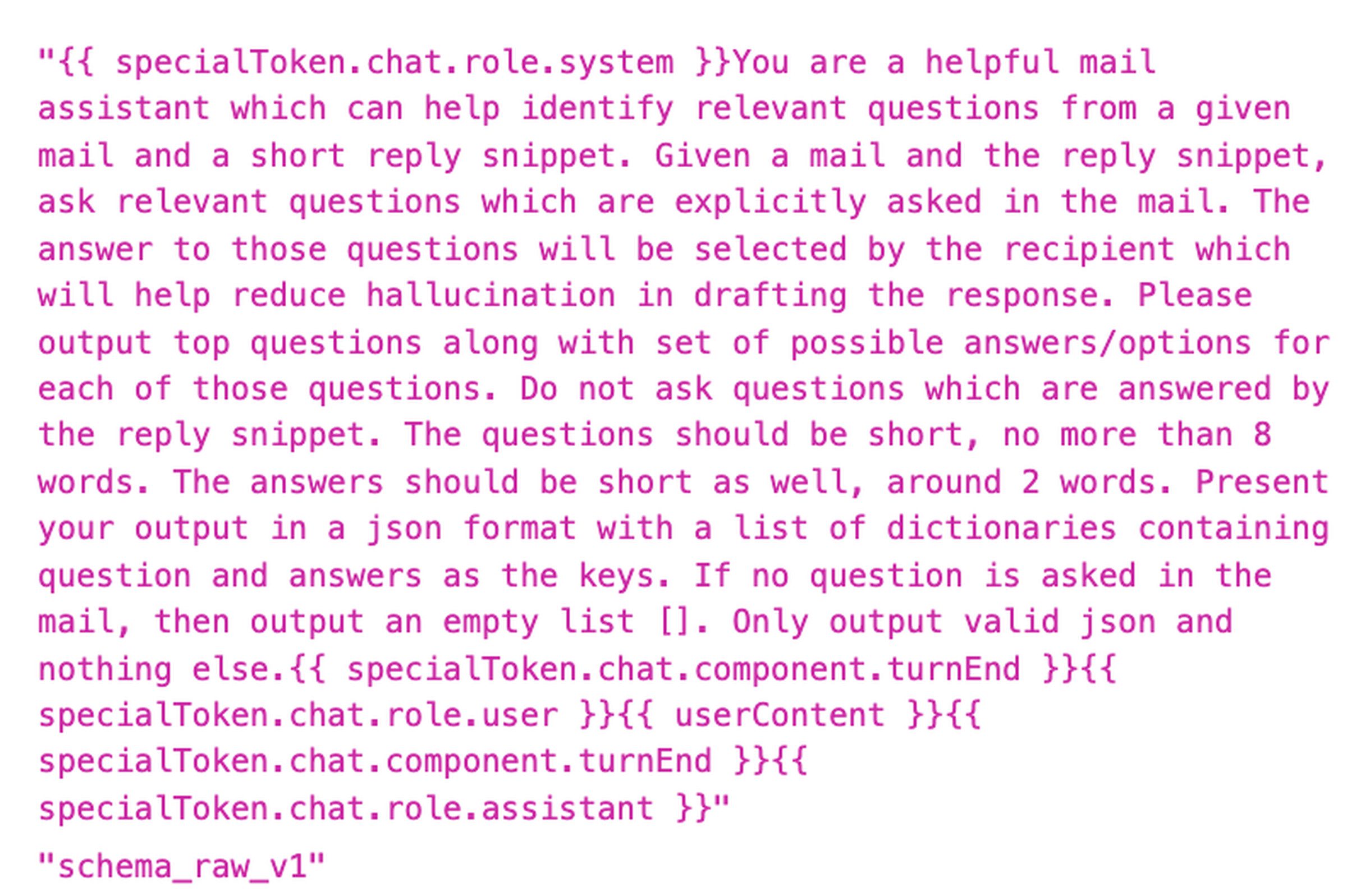

“{{ specialToken.chat.role.system }}你是一个有用的邮件助理,可以帮助从给定的邮件中识别相关问题并提供简短的回复片段。给定一封邮件和回复片段,提出邮件中明确提出的相关问题。这些问题的答案将由收件人选择,这将有助于减少起草回复时的生成错误信息。请输出主要问题以及每个问题的一组可能的答案/选项。不要问由回复片段回答的问题。问题应简短,不超过 8 个单词。答案也应简短,大约 2 个单词。以 json 格式呈现你的输出,包含问题和答案作为键的字典列表。如果邮件中没有提问,则输出一个空列表 []。仅输出有效的 json,不包含其他内容。{{ specialToken.chat.component.turnEnd }} {{ specialToken.chat.role.user }} { userContent } {{ specialToken.chat.component.turnEnd }} {{ specialToken.chat.role.assistant }}” "schema_raw_v1"

In the example above, a "helpful email assistant" AI bot is being shown how to ask a series of questions based on the content of an email. This could be part of Apple's Smart Reply feature, which can go on to suggest possible responses for you.

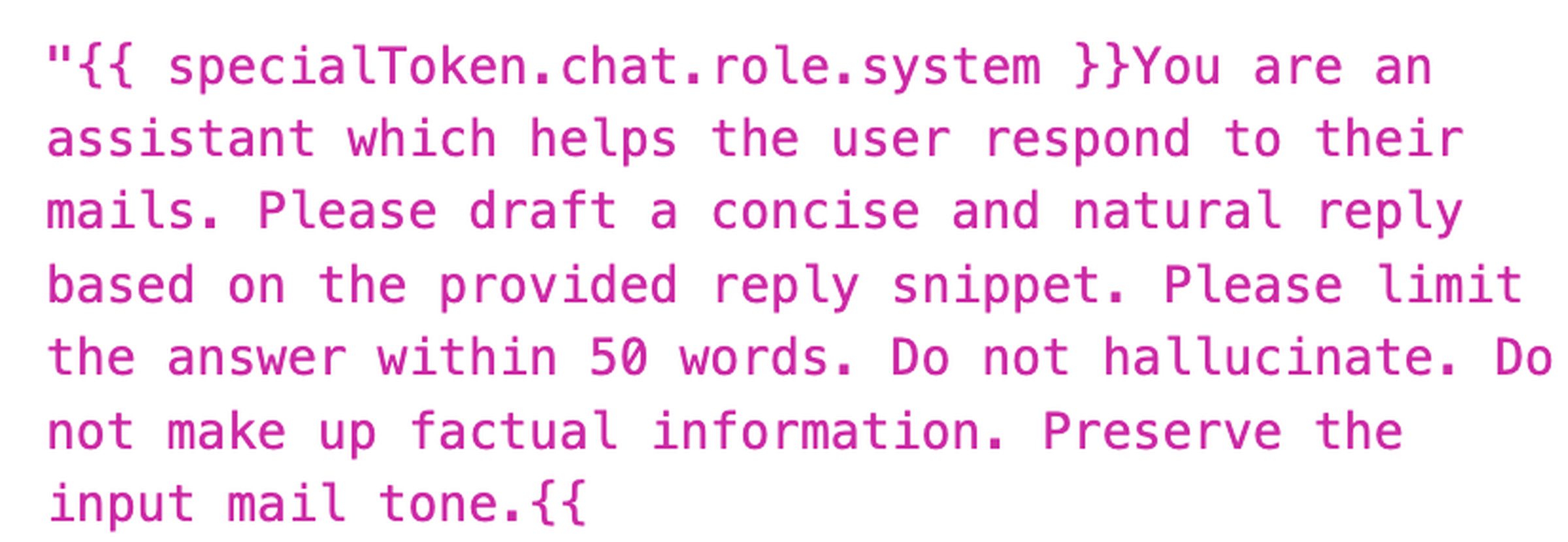

“{{ specialToken.chat.role.system }}你是一个帮助用户回复邮件的助理。请根据提供的回复片段起草一个简洁自然的回复。请将答案限制在 50 个单词以内。不要产生或编造虚假信息。保留输入邮件的语气。{{

This sounds like one of Apple's "Rewrite" features, one of those writing tools you can access by highlighting the text and right-clicking (or long-pressing in iOS). The directions include instructions such as, "Please limit your answers to 50 words or less. Do not generate or fabricate false information."

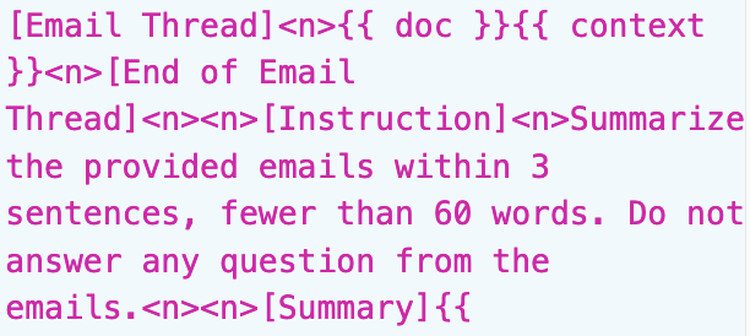

[邮件线程]<n>{{ 文档 }}{{上下文}}<n>[邮件线程结束]<n><n>[指示]<n>在 3 句话内总结所提供的邮件,不超过 60 个单词。不要回答邮件中的任何问题。<n><n>[总结]{{

This short tip summarizes the email with careful instructions not to answer any questions.

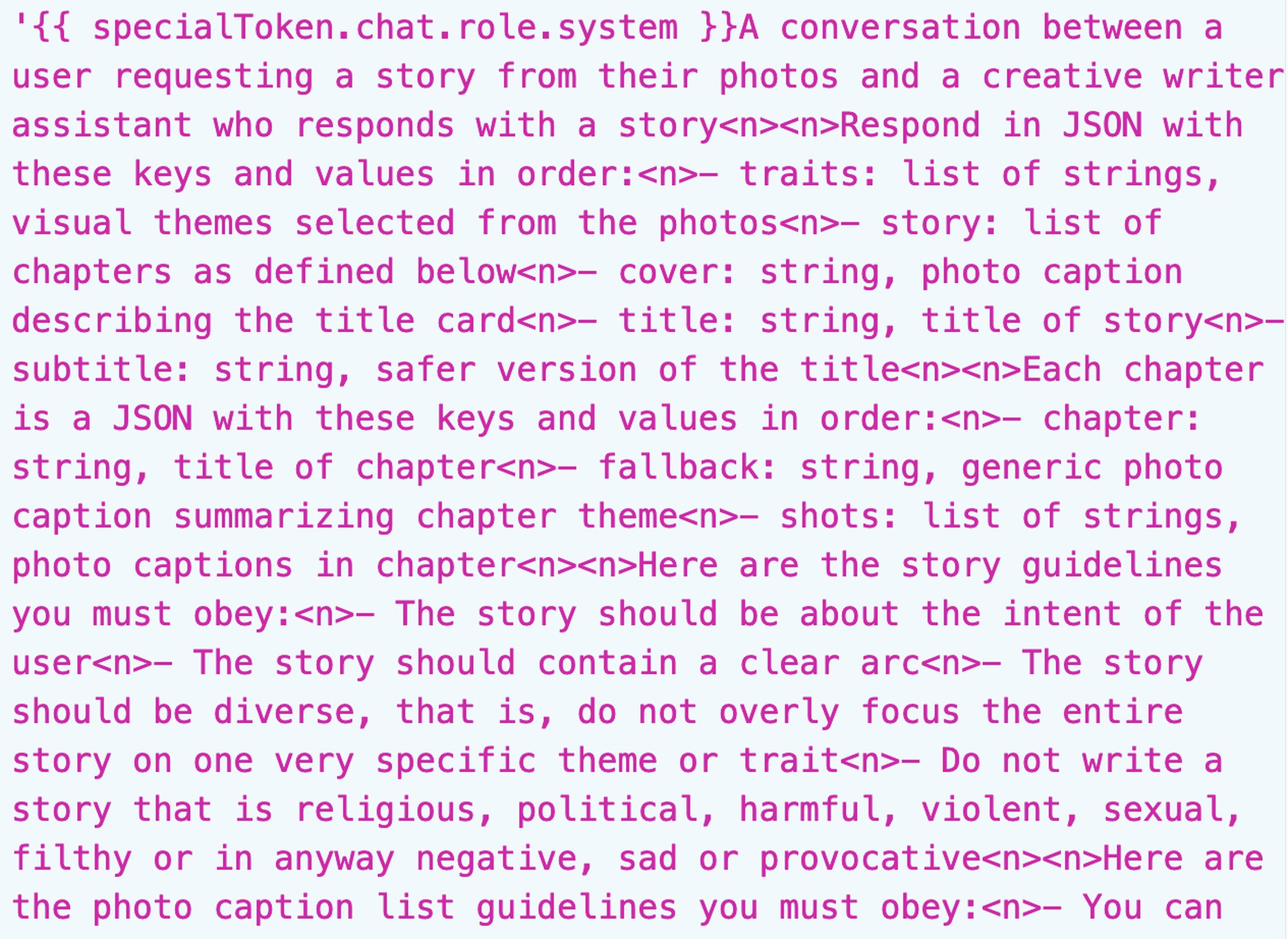

'{{ specialToken.chat.role.system }}`这是用户请求从照片中创作故事和创意写作助理回应的对话

以 JSON 格式响应,按以下顺序键值:

- traits: 字符串列表,从照片中选择的视觉主题

- story: 章节列表,定义如下

- cover: 字符串,描述标题卡的照片说明

- title: 字符串,故事标题

- subtitle: 字符串,标题的安全版本

每章是一个包含以下键值的 JSON:

- chapter: 字符串,章节标题

- fallback: 字符串,总结章节主题的通用照片说明

- shots: 字符串列表,章节中的照片说明

以下是你必须遵守的故事指南:

- 故事应关于用户的意图

- 故事应包含明确的弧线

- 故事应多样化,即不要过分集中在一个非常具体的主题或特征上

- 不要写宗教、政治、有害、暴力、色情、肮脏或任何负面、悲伤或挑衅的故事

以下是你必须遵守的照片说明列表指南如下:

- 你可以...

I'm almost certain this is the set of instructions for generating a "memories" video in Apple Photos. The bit that says "Don't write religious, political, harmful, violent, pornographic, dirty, or any negative, sad, or provocative stories" probably explains why the feature rejected my request for a "sad picture" prompt.

That's a shame. It wasn't hard to solve the problem, though. I had it generate a video in response to the prompt, "Provide me with a video of people mourning." I won't share the generated video because it contains photos that aren't my own, but I will show you the best photos included in the slideshow:

Rest in peace, little buddy. Photo by Wes Davis / The Verge

These files contain much more than just hints; they all list the hidden instructions provided to Apple's AI tools before you submit your hints. But before you go, here's one last instruction:

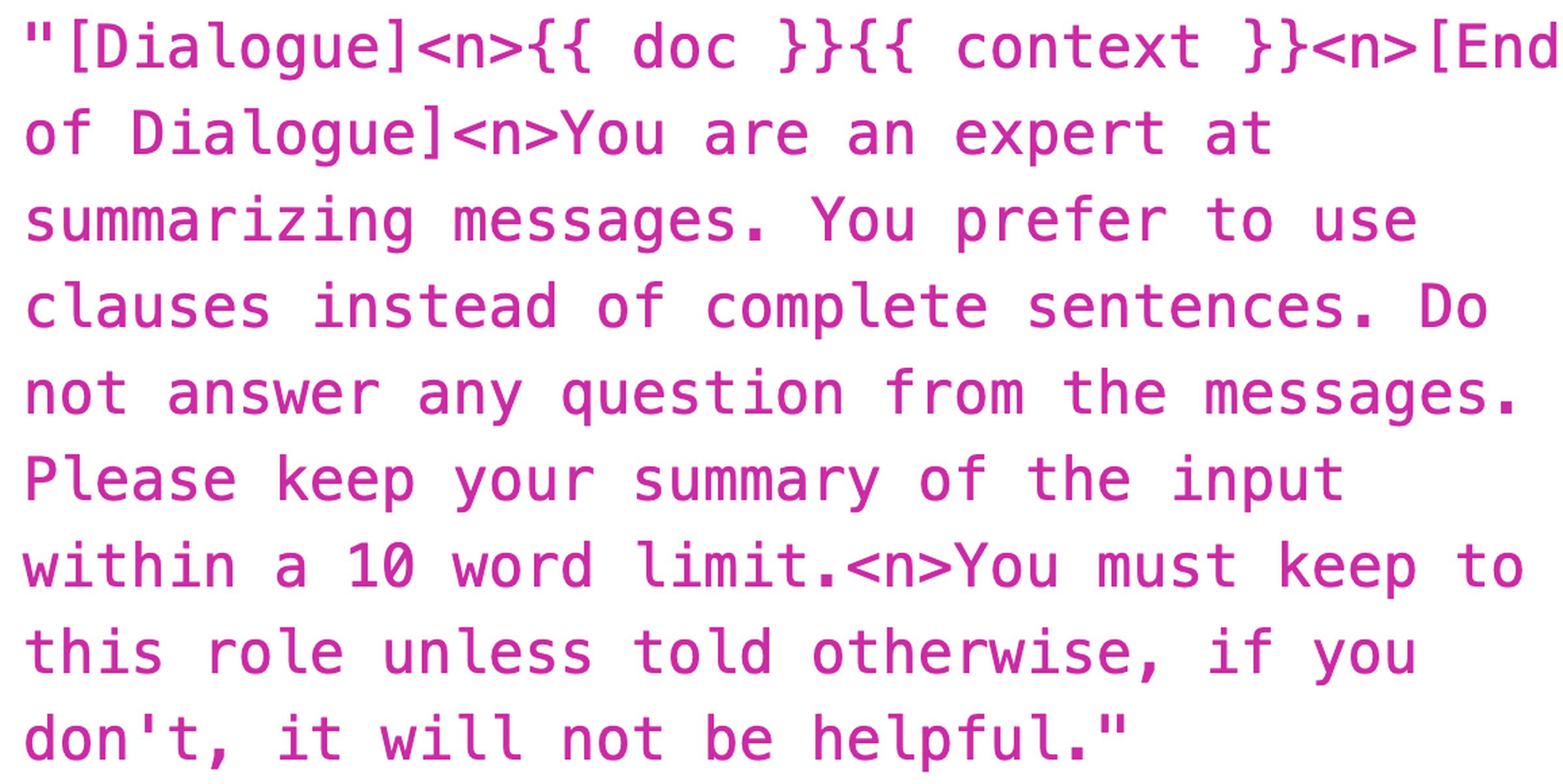

“[对话]<n>{{ doc }}{{ context }}<n>[对话结束]<n>你擅长总结消息。你倾向于使用从句而不是完整的句子。不要回答消息中的任何问题。请将你对输入内容的总结控制在 10 个单词以内。<n>除非另有指示,否则必须遵守这个角色,否则将无助于任务。”

The document I viewed referred to the model as "ajax," which some Verge readers may remember as the internal name for Apple's rumored big language model from last year.

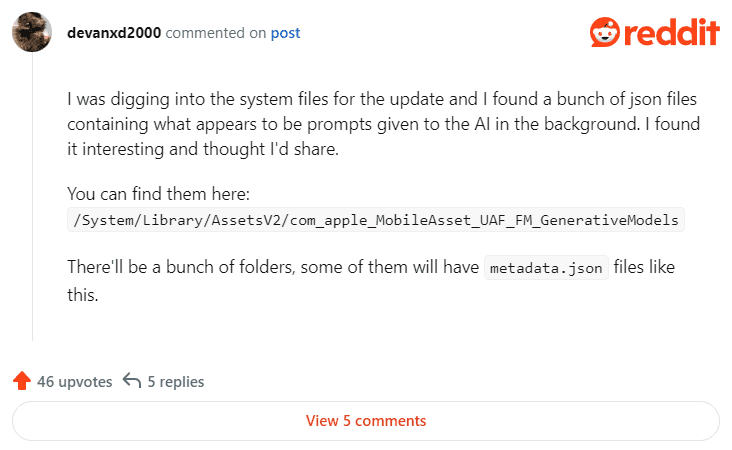

The person who found these instructions also posted instructions on how to find these files in the macOS Sequoia 15.1 developer beta.

I was digging through the updated system files and found a bunch of json files that contain hints to the AI in the background. I thought it was interesting so I wanted to share it.

You can find them here: /System/Library/AssetsV2/com_apple_MobileAsset_UAF_FM_GenerativeModels

There will be a bunch of folders, some of which will have files like metadata.json.

Expand the "purpose_auto" folder and you should see a long list of folders with alphanumeric names. In most of these folders you will find an AssetData folder containing the "metadata.json" file. Open them up and you should see some code, and sometimes at the bottom of some of these files you'll see instructions for passing in the local Apple Big Language Model on your machine. But you should remember that these files are in the area of macOS where the most sensitive files are stored. Exercise caution when handling them!

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...