InternVL: Open Source Multimodal Large Model with Image, Video and Text Processing Support

General Introduction

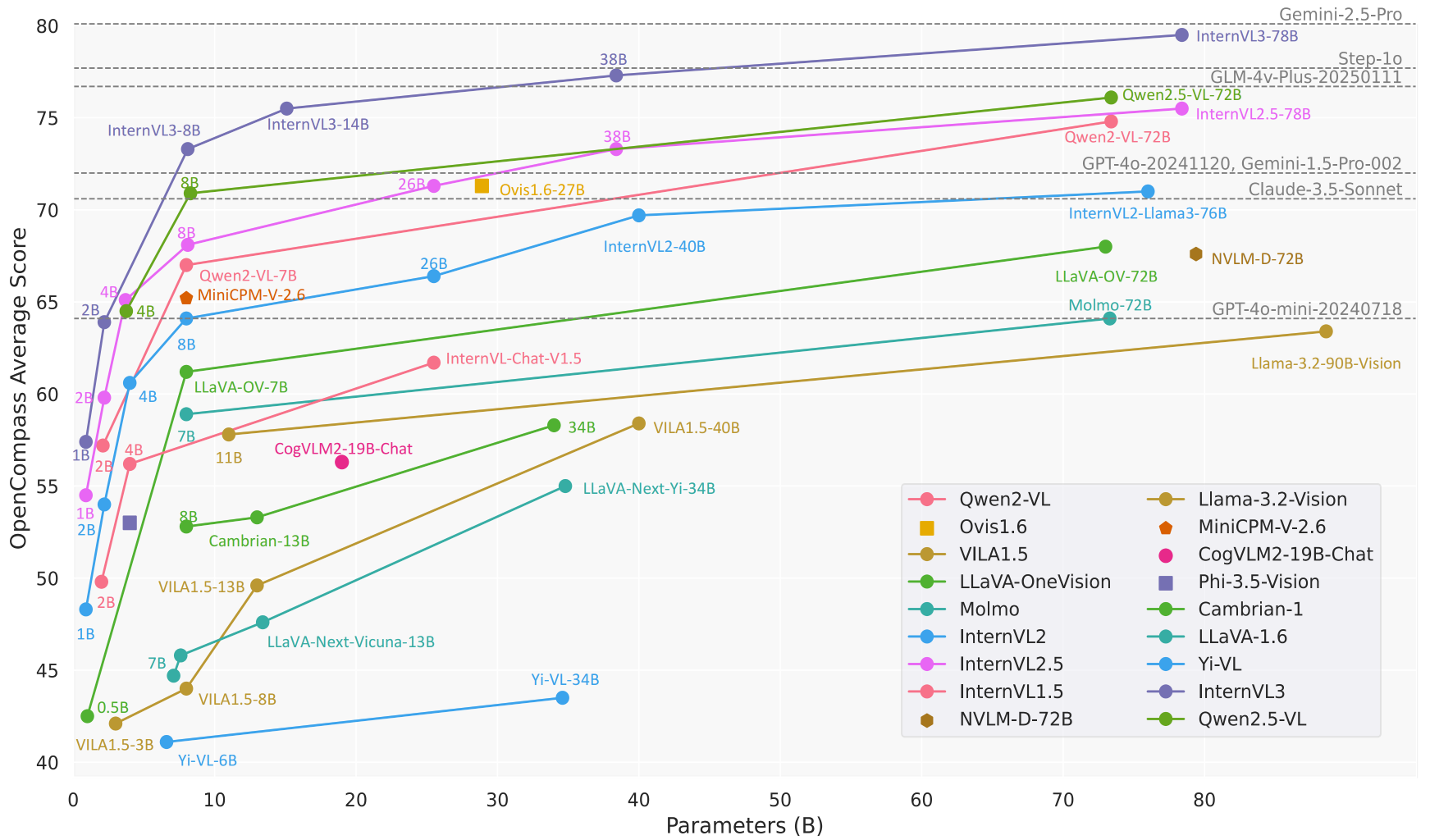

InternVL is an open source multimodal large model project developed by OpenGVLab at the Shanghai Artificial Intelligence Laboratory and hosted on GitHub. It combines visual and language processing to support understanding and generation across images, video, and text. InternVL aims to be an open source alternative comparable to commercial models (e.g., GPT-4o) for tasks such as visual perception, cross-modal retrieval, and multimodal dialogue. The project is known for its powerful visual encoder, dynamic high-resolution support, and efficient training strategies. Model sizes range from 1B to 78B parameters, covering application scenarios from edge devices to high-performance servers. The code, models, and datasets are released under the MIT license, and researchers and developers are encouraged to use and improve them freely.

Function List

- Multimodal dialogue: Accepts image, video, and text input and generates natural-language responses for chat, question answering, and instruction following.

- Image processing: Dynamically processes images up to 4K resolution and supports image classification, segmentation, and object detection.

- Video comprehension: Analyzes video content for zero-shot video classification and text-video retrieval.

- Document parsing: Handles complex documents, with strengths in OCR, table recognition, and document question answering on tasks such as DocVQA.

- Multilingual support: Built-in multilingual text encoder supporting generation tasks in 110+ languages.

- Efficient inference: Provides a simplified inference workflow through LMDeploy, with support for multiple images and long contexts.

- Open datasets: Provides large-scale multimodal datasets such as ShareGPT-4o, which contains images, video, and audio.

Usage Guide

Installation process

To use InternVL locally, you need to configure your Python environment and install the relevant dependencies. The following are the detailed installation steps:

- Clone the repository

Run the following commands in the terminal to get the InternVL source code:

```bash
git clone https://github.com/OpenGVLab/InternVL.git
cd InternVL
```

- Create a virtual environment

Create a Python 3.9 environment with conda and activate it:

```bash
conda create -n internvl python=3.9 -y
conda activate internvl
```

- Install dependencies

Install the project's required dependencies, which by default include the libraries needed for multimodal dialogue and image processing:

```bash
pip install -r requirements.txt
```

If additional functionality is required (such as image segmentation or classification), the corresponding dependencies can be installed manually:

```bash
pip install -r requirements/segmentation.txt
pip install -r requirements/classification.txt
```

- Install Flash-Attention (optional)

To accelerate model inference, installing Flash-Attention is recommended:

```bash
pip install flash-attn==2.3.6 --no-build-isolation
```

Or compile it from source:

```bash
git clone https://github.com/Dao-AILab/flash-attention.git
cd flash-attention
git checkout v2.3.6
python setup.py install
```

- Install MMDeploy (optional)

If you need to deploy the model to a production environment, install MMDeploy:

```bash
pip install -U openmim
mim install mmdeploy
```
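After the installation steps above, it can help to confirm which packages are actually importable before loading a model. The following is a minimal sketch using only the Python standard library. The module names `torch`, `lmdeploy`, and `flash_attn` are assumptions about what the installed packages expose, and the helper names are hypothetical.

```python
import importlib.util
import sys


def check_modules(names):
    """Map each top-level module name to True if it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def python_version_ok(minimum=(3, 9)):
    """Return True if the running interpreter is at least `minimum`."""
    return sys.version_info[:2] >= minimum


if __name__ == "__main__":
    # Assumed module names; adjust them to match your installation.
    report = check_modules(["torch", "lmdeploy", "flash_attn"])
    print("Python >= 3.9:", python_version_ok())
    for name, found in report.items():
        print(f"{name}: {'found' if found else 'missing'}")
```

A missing `flash_attn` entry is not necessarily a problem, since Flash-Attention is optional.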

Usage

InternVL can be used in several ways, including command-line inference, API services, and interactive demos. The following uses the InternVL2_5-8B model as an example to walk through the main functions:

1. Multimodal dialogue

InternVL supports dialogue with both image and text input. The following is an example of inference using LMDeploy:

- Prepare the model and image: Ensure that the model has been downloaded (e.g. OpenGVLab/InternVL2_5-8B) and prepare an image (e.g. tiger.jpeg).

- Run inference: Execute the following Python code, which asks the model to describe the contents of the image:

```python
from lmdeploy import pipeline, TurbomindEngineConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2_5-8B'
image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')

# session_len sets the maximum context length (in tokens) of a session
pipe = pipeline(model, backend_config=TurbomindEngineConfig(session_len=8192))

# A (prompt, image) tuple is sent as one multimodal request
response = pipe(('Describe this image', image))
print(response.text)
```

- Result: The model outputs a detailed description of the image, e.g. "The picture is of a standing tiger with green grass in the background".

2. Multi-image processing

InternVL supports simultaneous processing of multiple images, suitable for comparison or comprehensive analysis:

- Code example:

```python
from lmdeploy.vl import load_image
from lmdeploy.vl.constants import IMAGE_TOKEN

# Reuses `pipe` from the multimodal dialogue example above
image_urls = [
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg',
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/det.jpg'
]
images = [load_image(url) for url in image_urls]

# One IMAGE_TOKEN placeholder per image, in the same order as `images`
prompt = f'Image-1: {IMAGE_TOKEN}\nImage-2: {IMAGE_TOKEN}\nDescribe these two images'
response = pipe((prompt, images))
print(response.text)
```

- Result: The model describes the content of each image separately and may summarize the relationships between them.
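The numbered prompt in the multi-image example follows a simple pattern: one `Image-N:` line per image, each carrying the image placeholder, followed by the question. The sketch below builds that string for any number of images. `build_multi_image_prompt` is a hypothetical helper, not part of LMDeploy, and the placeholder is passed in as an argument so the function does not depend on the library being installed.

```python
def build_multi_image_prompt(num_images, question, image_token):
    """Build a prompt with one numbered placeholder line per image."""
    if num_images < 1:
        raise ValueError("num_images must be at least 1")
    # Image numbering starts at 1 to match the example prompt format.
    lines = [f"Image-{i}: {image_token}" for i in range(1, num_images + 1)]
    lines.append(question)
    return "\n".join(lines)
```

In practice you would pass `IMAGE_TOKEN` from `lmdeploy.vl.constants` as `image_token` and send the result together with the image list, for example `pipe((build_multi_image_prompt(len(images), 'Describe these images', IMAGE_TOKEN), images))`.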

3. Document parsing

InternVL performs well on document question answering (DocVQA) and table recognition tasks. The workflow is as follows:

- Prepare document images: Provide images that contain text, tables, or charts.

- Ask questions: Use prompts such as "Extract data from table" or "Summarize document contents".

- Code example:

```python
from lmdeploy.vl import load_image

# Reuses `pipe` from the multimodal dialogue example above
image = load_image('document.jpg')
response = pipe(('Extract the contents of the table in the image', image))
print(response.text)
```

- Result: The model returns the table's structured data or a summary of the document.
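The table comes back as plain text in `response.text`, so some post-processing is usually needed before the data can be used in code. The sketch below assumes the model was asked to answer with a Markdown table; the output format is not guaranteed by the model and should be checked. `parse_markdown_table` is a hypothetical helper that relies only on the standard library.

```python
def parse_markdown_table(text):
    """Parse the first Markdown-style table in `text` into a list of row dicts."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        # Only lines of the form "| a | b |" are treated as table rows.
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        # Skip the header separator row, e.g. "|---|:---:|".
        if all(cell and set(cell) <= set("-: ") for cell in cells):
            continue
        rows.append(cells)
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]
```

For example, calling `parse_markdown_table(response.text)` on a reply that contains a two-column table yields one dictionary per data row, keyed by the column headers. Text before or after the table is ignored.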

4. Deployment of API services

InternVL supports deployment of RESTful APIs via LMDeploy for production environments:

- Start the service:

```bash
lmdeploy serve api_server OpenGVLab/InternVL2_5-8B --server-port 23333
```

- Access the API: Send requests through the OpenAI-compatible interface, for example with `curl` or a Python client.
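As a rough illustration of calling the OpenAI-compatible interface from Python, the sketch below builds a chat request that contains a text prompt and an image URL. It assumes the server exposes the usual `/v1/chat/completions` route and accepts the OpenAI-style `image_url` content part. The function names are hypothetical, and the model name in the payload may need to match the name reported by your running server.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, image_url):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the service from the step above running locally, `send_chat_request(build_chat_request('http://localhost:23333', 'OpenGVLab/InternVL2_5-8B', 'Describe this image', image_url))` would return the model's reply as a string.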

5. Online demo

OpenGVLab provides an online demo (https://internvl.opengvlab.com/) that requires no installation:

- Visit the website, upload an image or video, and enter a question.

- The model returns results in real time, making it suitable for quick testing.

Featured Functions

- Dynamic high resolution: InternVL automatically splits images into 448x448 tiles and supports up to 4K resolution. Users do not need to resize images manually; they can upload them directly.

- Video comprehension: Upload a video file together with a prompt (e.g., "summarize the video content"), and the model analyzes the key frames and generates a description.

- Multi-language generation: Specify the language in the prompt (e.g., "Answer in French") and the model generates a response in the corresponding language.
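To make the dynamic high-resolution idea more concrete, the sketch below shows one way to choose a grid of 448x448 pieces for an image: pick the grid whose aspect ratio is closest to the image's, within a tile budget. This is a simplified illustration, not InternVL's actual preprocessing code. The `max_tiles=12` budget, the tie-breaking rule, and the function names are assumptions.

```python
def choose_tile_grid(width, height, tile_size=448, max_tiles=12):
    """Return (cols, rows) of the grid whose aspect ratio best matches the image."""
    aspect = width / height
    image_area = width * height
    # All grids with at most max_tiles tiles, smallest grids first.
    grids = sorted(
        ((c, r)
         for c in range(1, max_tiles + 1)
         for r in range(1, max_tiles + 1)
         if c * r <= max_tiles),
        key=lambda g: (g[0] * g[1], g),
    )
    best, best_diff = (1, 1), float("inf")
    for cols, rows in grids:
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and image_area > 0.5 * tile_size ** 2 * cols * rows:
            # Equally good match: take the larger grid only if the image
            # has enough pixels to fill at least half of it.
            best = (cols, rows)
    return best


def tile_boxes(width, height, tile_size=448, max_tiles=12):
    """Return the grid and each tile's (left, top, right, bottom) box.

    Boxes refer to the image after resizing it to
    (cols * tile_size) x (rows * tile_size).
    """
    cols, rows = choose_tile_grid(width, height, tile_size, max_tiles)
    boxes = [
        (c * tile_size, r * tile_size, (c + 1) * tile_size, (r + 1) * tile_size)
        for r in range(rows)
        for c in range(cols)
    ]
    return (cols, rows), boxes
```

Under these assumptions, a 3840x2160 image maps to a 4x2 grid (8 tiles), while a 448x448 image stays as a single tile.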

Caveats

- Ensure that you have enough GPU memory (the 8B model requires about 16 GB).

- Increase the context window when processing multiple images or long videos (for example, `session_len=16384`).

- Check dependency versions to avoid compatibility issues.
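The 16 GB figure for the 8B model is consistent with storing each parameter in 2 bytes (FP16 or BF16). That reading is an assumption; the source does not state the precision. The sketch below applies the same arithmetic to other model sizes. It covers weights only, so actual usage will be higher once activations and the context cache are included.

```python
def estimate_weight_memory_gb(params_billion, bytes_per_param=2.0):
    """Estimate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bytes_per_param
    return total_bytes / 1e9


if __name__ == "__main__":
    # Model sizes mentioned in the article; 2 bytes per parameter is assumed.
    for size in (1, 8, 78):
        print(f"{size}B parameters: ~{estimate_weight_memory_gb(size):.0f} GB for weights")
```

By the same estimate, the 78B model would need roughly 156 GB for weights at 2 bytes per parameter, which in practice means multiple GPUs or quantization.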

Application Scenarios

- Academic research

Researchers use InternVL to analyze scientific diagrams, process experimental images, or parse tabular data from papers. The model's high-precision OCR and document comprehension capabilities substantially improve the efficiency of data extraction.

- Educational aids

Teachers and students use InternVL to solve image-related homework problems, such as interpreting historical pictures or analyzing geographic charts. The model's multilingual support suits international educational settings.

- Enterprise document processing

Organizations use InternVL to automate the processing of scanned documents, contracts, or invoices, extracting key information and generating reports to save labor costs.

- Content creation

Content creators use InternVL to analyze video footage and generate scripts or subtitles, improving creative efficiency.

- Intelligent customer service

Customer service systems integrate InternVL to process user-uploaded images (e.g. photos of product faults), quickly diagnose problems, and provide solutions.

QA

- What model sizes does InternVL support?

InternVL offers models ranging from 1B to 78B parameters, suitable for different devices. The 1B models are suitable for edge devices, while the 78B models have performance comparable to GPT-4o.

- How does it handle high-resolution images?

The model automatically splits the image into 448x448 tiles and supports 4K resolution. Images can be uploaded directly without pre-processing.

- Does it support video analysis?

Yes, InternVL supports zero-shot video classification and text-video retrieval. Just upload a video and enter a prompt.

- Is the model open source?

InternVL is completely open source, with code and model weights available on GitHub under the MIT license.

- How can inference speed be optimized?

Install Flash-Attention and use GPU acceleration. Adjust the `session_len` parameter to accommodate long contexts.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
