InternLM-XComposer: a multimodal large model for ultra-long text output and image and video comprehension

General Introduction

InternLM-XComposer is an open-source vision-language large model project developed by the InternLM team and hosted on GitHub. It is built on the InternLM language model and processes multimodal data such as text, images, and video, with applications in text-image composition, image understanding, and video analysis. The project is known for supporting contexts of up to 96K, processing 4K high-resolution images, and understanding video at a fine-grained level, with performance reported as comparable to GPT-4V using only 7B parameters. The code, model weights, and detailed documentation are available on GitHub for researchers, developers, and other users interested in multimodal AI. As of February 2025, the project has released multiple versions, including InternLM-XComposer-2.5 and OmniLive, which continue to improve the multimodal interaction experience.

Function List

- Ultra-long context support: handles up to 96K of interleaved text and image content for complex tasks.

- High-resolution image understanding: supports image analysis from 336 pixels up to 4K while preserving fine detail.

- Fine-grained video understanding: breaks a video down into multiple frames to capture dynamic details.

- Text-image composition: generates illustrated articles or web content from instructions.

- Multi-turn, multi-image dialogue: accepts multiple image inputs for continuous conversational analysis.

- Open-source model support: provides a range of model weights and fine-tuning code to facilitate downstream development.

- Multimodal streaming interaction: the OmniLive version supports long-duration video and audio processing.

Usage Guide

InternLM-XComposer is an open-source project hosted on GitHub, and some programming experience is needed to install and use it. The following step-by-step guide is intended to help users get started quickly.

Installation process

1. Environment preparation

- Make sure Python 3.9 or above is installed on your device.

- An NVIDIA GPU with CUDA support is required (CUDA 11.x or 12.x recommended).

- Install Git to clone the repository.
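The prerequisites above can be checked with a short script before going further. This is a minimal sketch using only the Python standard library; the function name check_environment is made up for illustration, and finding nvidia-smi on the PATH is treated only as a hint that an NVIDIA driver is present, not as proof of a working CUDA setup.

```python
import shutil
import sys

MIN_PYTHON = (3, 9)  # minimum version stated in the prerequisites above


def check_environment():
    """Return a dict mapping each prerequisite to True (met) or False (not met)."""
    return {
        "python>=3.9": sys.version_info[:2] >= MIN_PYTHON,
        "git": shutil.which("git") is not None,
        # nvidia-smi ships with the NVIDIA driver; its presence is only a hint
        # that a GPU driver is installed, not a full CUDA check.
        "nvidia-smi": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

Anything reported as MISSING should be installed before moving on to the next step.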

2. Clone the project

Run the following commands in the terminal to download the project locally:

git clone https://github.com/InternLM/InternLM-XComposer.git

cd InternLM-XComposer

3. Create a virtual environment

Isolate dependencies using Conda or another virtual environment tool:

conda create -n internlm python=3.9 -y

conda activate internlm

4. Install dependencies

Install the necessary libraries according to the official documentation:

pip install torch==2.0.1+cu117 torchvision==0.15.2+cu117 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu117

pip install transformers==4.33.2 timm==0.4.12 sentencepiece==0.1.99 gradio==4.13.0 markdown2==4.4.10 xlsxwriter==3.1.2 einops

- Optional: install flash-attention2 to save GPU memory:

pip install flash-attn --no-build-isolation
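After installation, it can be useful to confirm that the pinned versions were actually installed. The sketch below uses the standard library's importlib.metadata; the PINS table copies a subset of the versions from the pip commands above, and the helper name find_mismatches is hypothetical. The comparison is an exact string match, so a build suffix such as +cu117 has to match as well.

```python
from importlib import metadata

# Pins copied from the pip commands above; extend or adjust as needed.
PINS = {
    "torch": "2.0.1+cu117",
    "torchvision": "0.15.2+cu117",
    "transformers": "4.33.2",
    "timm": "0.4.12",
    "sentencepiece": "0.1.99",
    "gradio": "4.13.0",
}


def find_mismatches(pins):
    """Return {package: (expected, installed)} for every pin that is not met.

    installed is None when the package is not installed at all.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    for name, (expected, installed) in find_mismatches(PINS).items():
        print(f"{name}: expected {expected}, found {installed}")
```

An empty result means every pinned package is present at the expected version.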

5. Download model weights

The project supports downloading pre-trained models from Hugging Face, for example:

import torch

from transformers import AutoModel

model = AutoModel.from_pretrained('internlm/internlm-xcomposer2d5-7b', torch_dtype=torch.bfloat16, trust_remote_code=True).cuda().eval()
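To get a feel for what loading in bfloat16 means for GPU memory, the weight footprint can be estimated with simple arithmetic. This is a rough sketch that takes the "7B" figure at face value (the real parameter count may differ) and counts weights only; activations, the attention cache, and image or video features need additional memory on top.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Memory needed for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bits_per_param / 8 / 2**30


NOMINAL_PARAMS = 7e9  # assumption: "7B" taken at face value

print(f"bfloat16: {weight_memory_gib(NOMINAL_PARAMS, 16):.1f} GiB")  # bfloat16: 13.0 GiB
print(f"4-bit:    {weight_memory_gib(NOMINAL_PARAMS, 4):.1f} GiB")   # 4-bit:    3.3 GiB
```

Under these assumptions the bfloat16 weights alone take roughly 13 GiB, which helps explain why a 4-bit quantized variant is the usual fallback on smaller GPUs.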

6. Verify the installation

Run the sample code to check that the environment works:

python -m torch.distributed.run --nproc_per_node=1 example_code/simple_chat.py
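If the sample script fails, a quick way to narrow the problem down is to check what PyTorch itself can see. The following sketch is not part of the project; the function name cuda_summary is made up for illustration, and it relies only on standard torch.cuda calls, degrading gracefully when PyTorch is not installed.

```python
def cuda_summary():
    """Summarize what PyTorch can see; also works when torch is missing."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    available = torch.cuda.is_available()
    devices = []
    if available:
        for index in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(index)
            # Record the device name and its total memory in GiB.
            devices.append((props.name, round(props.total_memory / 2**30, 1)))
    return {"torch_installed": True, "cuda_available": available, "devices": devices}


if __name__ == "__main__":
    print(cuda_summary())
```

If cuda_available is False even though a GPU is present, the installed PyTorch build and the CUDA driver are the first things to check.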

Main function operation flow

1. Text-image composition

- Function introduction: generate content that combines text and images, such as articles or web pages, from user instructions.

- Procedure:

- Prepare the input: write a text instruction (e.g., "Write an article about traveling, including three pictures").

- Run the code:

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('internlm/internlm-xcomposer2d5-7b', trust_remote_code=True).cuda().eval()

tokenizer = AutoTokenizer.from_pretrained('internlm/internlm-xcomposer2d5-7b', trust_remote_code=True)

query = "Write an article about traveling, including three pictures"

response, _ = model.chat(tokenizer, query, do_sample=False, num_beams=3)

print(response)

- Output: the model generates interleaved text and image content, with image descriptions embedded automatically in the text.

2. High-resolution image understanding

- Function Introduction: Analyze high-resolution images and provide detailed descriptions.

- Procedure:

- Prepare the image: place the image file in a local directory (e.g. examples/dubai.png).

- Run the code:

import torch

# Reuses the model and tokenizer loaded in the previous example
query = "Analyze this image in detail"

image = ['examples/dubai.png']

with torch.autocast(device_type='cuda', dtype=torch.float16):
    response, _ = model.chat(tokenizer, query, image, do_sample=False, num_beams=3)

print(response)

- Output: the model returns a detailed description of the image content, covering details such as buildings and colors.

3. Video analysis

- Function Introduction: Decompose video frames and describe the content.

- Procedure:

- Prepare the video: download the example video (e.g. liuxiang.mp4).

- Use the OmniLive version:

from lmdeploy import pipeline

pipe = pipeline('internlm/internlm-xcomposer2d5-ol-7b')

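# Note: load_video is not imported or defined in this snippet; it must be supplied separately (e.g. a helper that decodes the file into frames).

video = load_video('liuxiang.mp4')

query = "Describe the content of this video"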

response = pipe((query, video))

print(response.text)

- Output: the model returns a detailed description of the video frames, such as the actions or scenes shown.
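Breaking a video into frames usually means picking a limited number of frames spread across the clip. The sketch below shows one common choice, uniform sampling from the middle of equal-length segments. It is an illustration only: the function name sample_frame_indices is hypothetical, and the project's own frame selection may work differently.

```python
def sample_frame_indices(total_frames, num_samples):
    """Pick num_samples frame indices spread evenly over a video."""
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    if num_samples >= total_frames:
        # Fewer frames than requested samples: keep every frame.
        return list(range(total_frames))
    step = total_frames / num_samples
    # Take the middle frame of each of num_samples equal-length segments.
    return [int((i + 0.5) * step) for i in range(num_samples)]


print(sample_frame_indices(100, 4))  # [12, 37, 62, 87]
```

Sampling from segment midpoints avoids always including the first and last frames, which are often fades or title cards.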

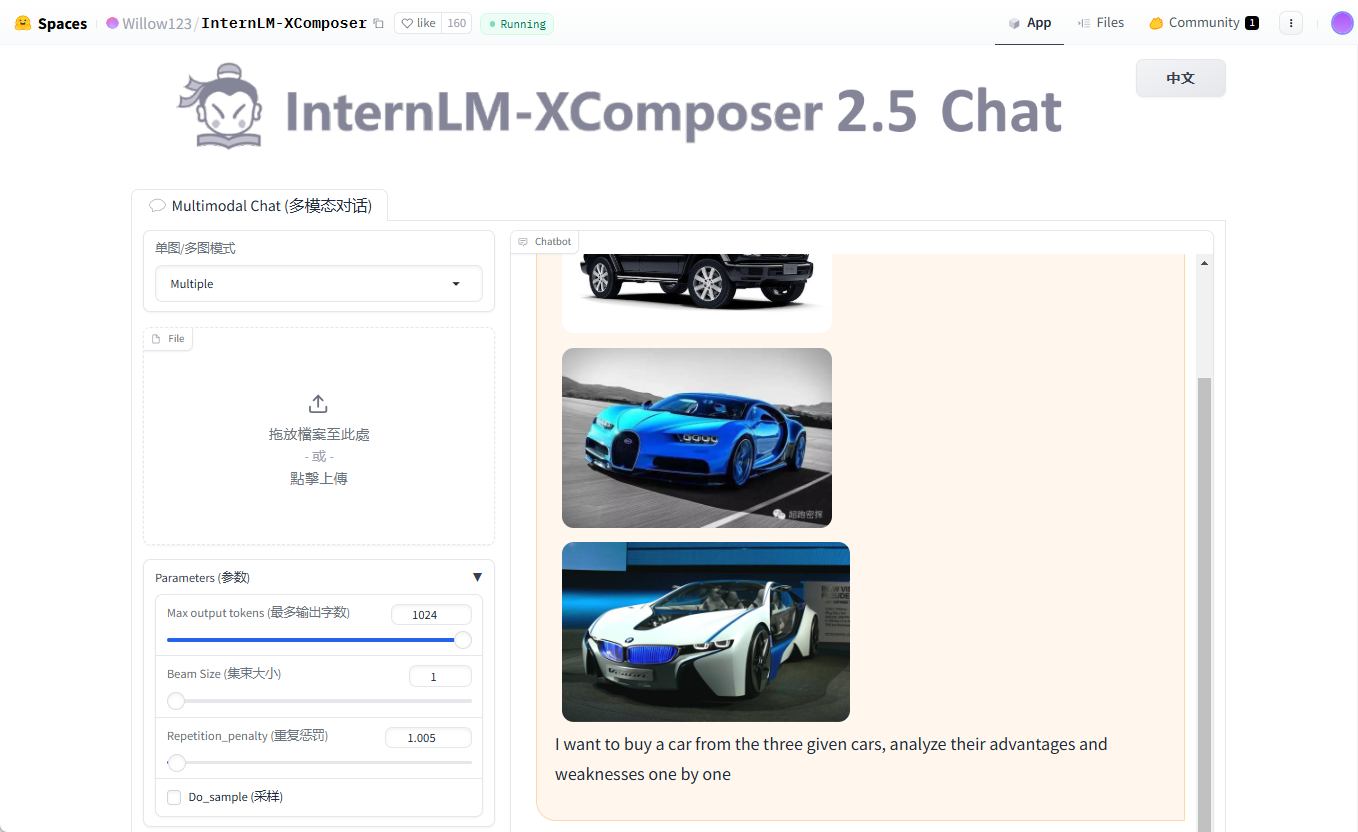

4. Multi-turn, multi-image dialogue

- Function introduction: supports multiple image inputs for continuous dialogue.

- Procedure:

- Prepare multiple images (e.g. cars1.jpg, cars2.jpg, cars3.jpg).

- Run the code:

query = "Image1 <ImageHere>; Image2 <ImageHere>; Image3 <ImageHere>; Analyze the advantages and disadvantages of these three cars"

images = ['examples/cars1.jpg', 'examples/cars2.jpg', 'examples/cars3.jpg']

response, _ = model.chat(tokenizer, query, images, do_sample=False, num_beams=3)

print(response)

- Output: the model analyzes the vehicle characteristics corresponding to each picture one by one.
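The query in this example contains one numbered <ImageHere> placeholder per input image, so the placeholder count has to match the length of the image list. A small helper can build that prefix automatically. This is a sketch that only reproduces the string format shown in the example; the helper name build_multi_image_query is hypothetical.

```python
IMAGE_PLACEHOLDER = "<ImageHere>"  # placeholder token used in the example query


def build_multi_image_query(num_images, question):
    """Prefix a question with one numbered placeholder per image."""
    if num_images < 1:
        raise ValueError("at least one image is required")
    slots = [f"Image{i} {IMAGE_PLACEHOLDER}" for i in range(1, num_images + 1)]
    return "; ".join(slots + [question])


images = ['examples/cars1.jpg', 'examples/cars2.jpg', 'examples/cars3.jpg']
query = build_multi_image_query(
    len(images), "Analyze the advantages and disadvantages of these three cars"
)
print(query)
```

Deriving the prefix from len(images) keeps the query and the image list in step when images are added or removed.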

Notes

- Hardware requirements: at least 24GB of GPU memory is recommended; on lower-end devices, try the 4-bit quantized version.

- Debugging tip: if you run out of GPU memory, lower the hd_num parameter (default 18).

- Community support: visit the GitHub Issues page to browse frequently asked questions or submit feedback.
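To see why lowering hd_num can reduce memory use, it helps to think of it as a cap on the number of fixed-size tiles a high-resolution image is cut into. The sketch below works under that assumption. It illustrates the idea only, is not the project's actual preprocessing code, and the function name tile_grid is hypothetical.

```python
import math


def tile_grid(width, height, hd_num=18):
    """Largest (columns, rows) tile grid that roughly keeps the aspect ratio
    while using at most hd_num tiles. Assumption: hd_num caps the tile count."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if hd_num < 1:
        raise ValueError("hd_num must be at least 1")
    ratio = max(width, height) / min(width, height)
    scale = 1  # tiles along the longer side
    # Grow the grid while the next size still fits within the tile budget.
    while (scale + 1) * math.ceil((scale + 1) / ratio) <= hd_num:
        scale += 1
    short_tiles = math.ceil(scale / ratio)  # tiles along the shorter side
    if width >= height:
        return scale, short_tiles
    return short_tiles, scale


print(tile_grid(3840, 2160, hd_num=18))  # (5, 3)
print(tile_grid(3840, 2160, hd_num=9))   # (3, 2)
```

In this sketch a 4K frame uses 15 tiles with a budget of 18 but only 6 with a budget of 9, so fewer image tokens reach the model, at the cost of less visual detail.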

By following these steps, you can easily install and use the powerful features of InternLM-XComposer for both research and development.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
