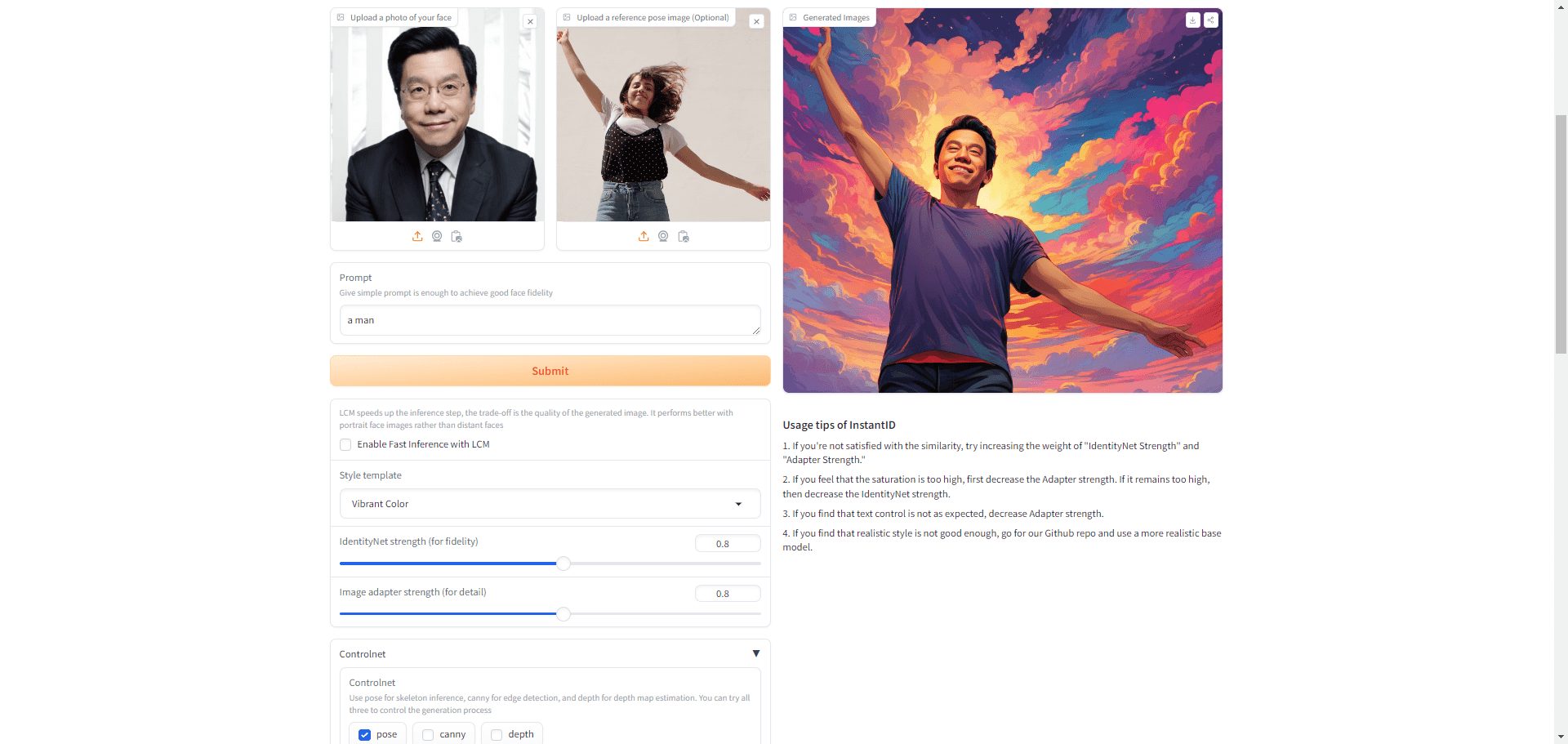

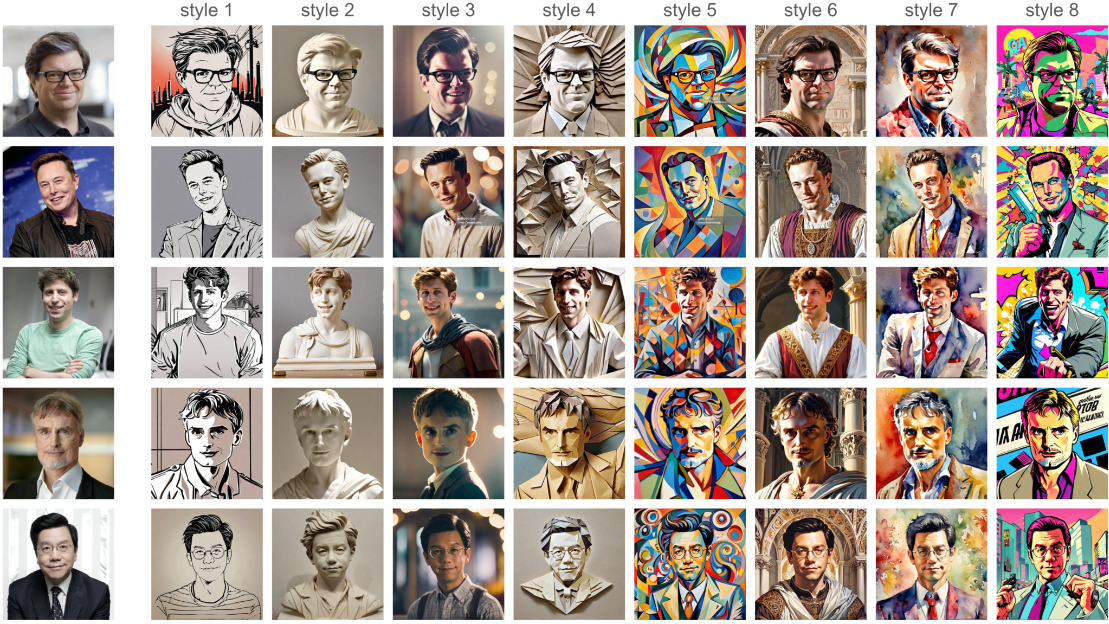

InstantID: upload one image and transfer its facial identity into images of different styles

General Introduction

InstantID is a state-of-the-art method for generating images in personalized styles or poses within seconds from a single reference ID image while maintaining high fidelity. It is built on diffusion models and guides the generation process by combining a facial image, a facial landmark image and a text prompt. Key features include high-fidelity image generation, compatibility with popular pre-trained text-to-image diffusion models without extensive fine-tuning or multiple reference images, and strong face fidelity combined with text-based editability.

InstantID is a new state-of-the-art, tuning-free method for identity-preserving generation from a single image, and it supports a variety of downstream tasks. Clone a face from just one photo, then use text prompts to generate images of the same face in different styles.

Feature List

- Zero-shot identity-preserving generation: no need for multiple images; a single frontal face image is enough to generate portraits in many styles.

- High-fidelity generation: the results preserve the identity features of the original image well.

- Support for multiple downstream tasks: for example style transfer and image editing.

- Open-source code and models: the code and pre-trained models are openly available for download and use.

- Strong compatibility: works together with other projects such as InstantStyle and Kolors.

How to Use

Upload a photo of a person. If the photo contains several people, only the largest face is detected. Make sure the face is not too small and is not noticeably occluded or blurred.
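The "largest face only" rule can be sketched in plain Python. This is a hypothetical helper, not the demo's own code; it assumes each detection is given as an (x1, y1, x2, y2) bounding box, which is the format insightface reports, and the names `bbox_area` and `pick_largest_face` are illustrative.

```python
from typing import List, Sequence


def bbox_area(bbox: Sequence[float]) -> float:
    """Area of an (x1, y1, x2, y2) box; inverted or degenerate boxes count as 0."""
    x1, y1, x2, y2 = bbox
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def pick_largest_face(bboxes: List[Sequence[float]]) -> int:
    """Index of the largest detected face; raises if nothing was detected."""
    if not bboxes:
        raise ValueError("no face detected: use a larger, unobstructed, sharp face")
    return max(range(len(bboxes)), key=lambda i: bbox_area(bboxes[i]))


# Two detections in a group photo: the second box is clearly larger.
print(pick_largest_face([(10, 10, 50, 60), (100, 80, 220, 240)]))  # → 1
```

Comparing box areas is a simple proxy for "largest face"; a tiny or heavily occluded face tends to produce both a small box and a weak identity embedding, which is why the demo asks for a clear, reasonably large face.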

(Optional) Upload another image of a person as a pose reference. If none is uploaded, the landmarks are extracted from the first image. If you used a tightly cropped face in the first step, uploading a separate pose image is recommended so that a new pose can be extracted.
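The pose fallback described above can be sketched as follows. Both functions are hypothetical illustrations: `choose_pose_source` encodes the "use the pose image if given, otherwise the face image" rule, and `looks_cropped` is an assumed heuristic (face box covering more than half the image) for spotting a tight crop; the 0.5 threshold is not taken from InstantID.

```python
from typing import Any, Optional, Sequence, Tuple


def choose_pose_source(face_image: Any, pose_image: Optional[Any] = None) -> Any:
    """Use the optional pose image if given, else fall back to the face image."""
    return pose_image if pose_image is not None else face_image


def looks_cropped(face_bbox: Sequence[float], image_size: Tuple[int, int],
                  threshold: float = 0.5) -> bool:
    """Hypothetical heuristic: the photo is a tight face crop when the face
    box (x1, y1, x2, y2) covers more than `threshold` of the image area."""
    x1, y1, x2, y2 = face_bbox
    width, height = image_size
    return (x2 - x1) * (y2 - y1) / float(width * height) > threshold


# Placeholder strings stand in for loaded images.
face_img, pose_img = "face.jpg", None
landmark_source = choose_pose_source(face_img, pose_img)
if pose_img is None and looks_cropped((20, 20, 500, 500), (512, 512)):
    print("Tip: the face looks tightly cropped; consider uploading a pose image.")
```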

Enter a text prompt, just as you would with a normal text-to-image model.

Click the Submit button to start generating.

- Only a single reference ID image is required.

- Different styles and poses can be chosen for personalized image generation.

- No fine-tuning at test time, and no need to collect multiple images for fine-tuning.

- The method can be combined directly with popular pre-trained models and ControlNets.

- Identity attributes can be flexibly applied to non-human characters.

Installation Process

- Clone the GitHub repository:

```bash
git clone https://github.com/instantX-research/InstantID.git
cd InstantID
```

- Install the dependencies:

```bash
pip install -r requirements.txt
```

- Download the pre-trained models:

```python
from huggingface_hub import hf_hub_download

# IdentityNet (ControlNet) config and weights
hf_hub_download(repo_id="InstantX/InstantID", filename="ControlNetModel/config.json", local_dir="./checkpoints")
hf_hub_download(repo_id="InstantX/InstantID", filename="ControlNetModel/diffusion_pytorch_model.safetensors", local_dir="./checkpoints")
# Face adapter weights
hf_hub_download(repo_id="InstantX/InstantID", filename="ip-adapter.bin", local_dir="./checkpoints")
```
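Before loading anything, it can help to confirm that the downloads landed where the loading code expects them. The sketch below assumes `hf_hub_download` keeps the repository's folder layout under `local_dir` (so `ControlNetModel/config.json` ends up at `./checkpoints/ControlNetModel/config.json`); `missing_checkpoints` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List

# Files fetched by the three hf_hub_download calls, relative to local_dir
EXPECTED_FILES = [
    "ControlNetModel/config.json",
    "ControlNetModel/diffusion_pytorch_model.safetensors",
    "ip-adapter.bin",
]


def missing_checkpoints(root: str = "./checkpoints") -> List[str]:
    """Return the expected checkpoint files that are not present under `root`."""
    base = Path(root)
    return [name for name in EXPECTED_FILES if not (base / name).is_file()]


missing = missing_checkpoints()
print("All checkpoints present" if not missing else f"Missing: {missing}")
```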

Usage Process

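- Prepare the image:

```python
from diffusers.utils import load_image

# Reference photo containing the face whose identity should be preserved
image = load_image("your-example.jpg")
```

- Load the models. Run this from inside the cloned InstantID directory, because `StableDiffusionXLInstantIDPipeline` is defined in the repository's `pipeline_stable_diffusion_xl_instantid.py` rather than in diffusers itself:

```python
import torch
from diffusers import ControlNetModel
from pipeline_stable_diffusion_xl_instantid import StableDiffusionXLInstantIDPipeline

# IdentityNet: the ControlNet downloaded to ./checkpoints
controlnet = ControlNetModel.from_pretrained("./checkpoints/ControlNetModel", torch_dtype=torch.float16)
pipe = StableDiffusionXLInstantIDPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", controlnet=controlnet, torch_dtype=torch.float16
)
pipe.cuda()
# Face adapter that injects the identity embedding
pipe.load_ip_adapter_instantid("./checkpoints/ip-adapter.bin")
```

- Generate an image. Here `face_emb` is the identity embedding and `face_kps` is the facial landmark image, both obtained by running insightface face analysis on the reference image:

```python
prompt = "analog film photo of a man. faded film, desaturated, 35mm photo, grainy, vignette, vintage, Kodachrome, Lomography, stained, highly detailed, found footage, masterpiece, best quality"
negative_prompt = "(lowres, low quality, worst quality:1.2), (text:1.2), watermark, painting, drawing, illustration, glitch, deformed, mutated, cross-eyed, ugly, disfigured"

image = pipe(
    prompt,
    negative_prompt=negative_prompt,  # steer away from these artifacts
    image_embeds=face_emb,            # identity condition
    image=face_kps,                   # landmark image for IdentityNet
    controlnet_conditioning_scale=0.8,
).images[0]
```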
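The generation call expects `face_emb` and `face_kps`, which come from detecting the face with insightface. The sketch below follows the approach of the InstantID README, under these assumptions: the insightface `antelopev2` face models have been downloaded separately into `./models/antelopev2`, the script runs from the repository root so `draw_kps` can be imported, and `build_face_condition` / `prepare_from_file` are hypothetical names. The heavy imports are deferred inside `prepare_from_file` so the selection logic can be tested on its own.

```python
from typing import Any, Callable, Dict, List, Tuple


def build_face_condition(faces: List[Dict[str, Any]], image: Any,
                         draw_kps: Callable[[Any, Any], Any]) -> Tuple[Any, Any]:
    """Turn face-analysis results into InstantID's two face conditions.

    Each detection needs "bbox", "kps" and "embedding" keys; insightface
    Face objects support this dict-style access.
    """
    if not faces:
        raise ValueError("no face detected in the reference image")
    # Keep only the largest face, measured by bounding-box area
    face = max(faces, key=lambda f: (f["bbox"][2] - f["bbox"][0]) * (f["bbox"][3] - f["bbox"][1]))
    face_emb = face["embedding"]             # identity embedding -> image_embeds
    face_kps = draw_kps(image, face["kps"])  # landmark image -> image (ControlNet input)
    return face_emb, face_kps


def prepare_from_file(path: str) -> Tuple[Any, Any]:
    """Wire the helper to the real libraries (imports deferred on purpose)."""
    import cv2
    import numpy as np
    from diffusers.utils import load_image
    from insightface.app import FaceAnalysis
    from pipeline_stable_diffusion_xl_instantid import draw_kps

    # Looks for the antelopev2 models under ./models/antelopev2
    app = FaceAnalysis(name="antelopev2", root="./",
                       providers=["CUDAExecutionProvider", "CPUExecutionProvider"])
    app.prepare(ctx_id=0, det_size=(640, 640))
    image = load_image(path)
    # insightface expects a BGR array; load_image returns an RGB PIL image
    faces = app.get(cv2.cvtColor(np.array(image), cv2.COLOR_RGB2BGR))
    return build_face_condition(faces, image, draw_kps)
```

Calling `face_emb, face_kps = prepare_from_file("your-example.jpg")` before the generation step supplies both variables.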

Detailed Operation Procedure

- Prepare the environment: make sure the required dependencies are installed and the pre-trained models have been downloaded.

- Load the image: use the `load_image` function to load the image to be processed.

- Load the models: use the `from_pretrained` method to load the pre-trained ControlNet model and the `StableDiffusionXLInstantIDPipeline`.

- Generate images: set the prompt and negative prompt, then call `pipe` to generate an image.

By following the steps above, users can easily generate high-fidelity, identity-preserving images with InstantID.
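Because the identity and pose conditions stay fixed while only the prompt changes, the same face can be rendered in several styles in a loop. This is a sketch under assumptions: the style templates and the `generate_styles` name are hypothetical, and `pipe` is any callable with the keyword arguments used in the generation step (such as the InstantID pipeline loaded earlier).

```python
from typing import Any, Dict, Optional

# Hypothetical style presets; "{prompt}" is replaced by the subject description.
STYLE_TEMPLATES: Dict[str, str] = {
    "film": "analog film photo of {prompt}. faded film, desaturated, 35mm photo, grainy, vintage",
    "watercolor": "watercolor painting of {prompt}. soft edges, paper texture, vibrant colors",
    "neon": "cyberpunk portrait of {prompt}. neon lights, night city, high contrast",
}


def generate_styles(pipe: Any, subject: str, face_emb: Any, face_kps: Any,
                    negative_prompt: Optional[str] = None,
                    scale: float = 0.8) -> Dict[str, Any]:
    """Render the same identity and pose once per style template."""
    results: Dict[str, Any] = {}
    for name, template in STYLE_TEMPLATES.items():
        output = pipe(
            template.format(prompt=subject),
            negative_prompt=negative_prompt,
            image_embeds=face_emb,              # identity stays fixed
            image=face_kps,                     # pose (landmarks) stays fixed
            controlnet_conditioning_scale=scale,
        )
        results[name] = output.images[0]
    return results
```

With the `pipe`, `face_emb` and `face_kps` from the earlier steps, `generate_styles(pipe, "a man", face_emb, face_kps)` returns a dictionary of images keyed by style name.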

ComfyUI Implementation Solution

Use an SDXL base model. You can also try SDXL Turbo with 4 sampling steps, which works well for quick testing.

The first load usually takes more than 60 seconds; after that, the node caches the models as far as possible.
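The effect of caching can be illustrated with a generic memoized loader: the slow load happens once, and later requests for the same path reuse the object in memory. This is only a sketch of the idea, not the ComfyUI-InstantID node's implementation; `make_cached_loader`, `slow_load` and the cache size of 4 are illustrative assumptions.

```python
from functools import lru_cache
from typing import Any, Callable


def make_cached_loader(load_fn: Callable[[str], Any], max_models: int = 4) -> Callable[[str], Any]:
    """Wrap a slow loader so each path is loaded once per process, until
    more than `max_models` distinct paths evict the least recently used."""
    @lru_cache(maxsize=max_models)
    def cached(path: str) -> Any:
        return load_fn(path)
    return cached


load_count = {"n": 0}


def slow_load(path: str) -> str:
    load_count["n"] += 1  # stands in for an expensive model load
    return f"model from {path}"


load = make_cached_loader(slow_load)
load("./checkpoints/ip-adapter.bin")
load("./checkpoints/ip-adapter.bin")
print(load_count["n"])  # → 1
```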

https://github.com/huxiuhan/ComfyUI-InstantID


© Copyright Notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
