InspireMusic: Alibaba's open source unified framework for music, song, and audio generation

General Introduction

InspireMusic is an open source PyTorch-based toolkit focused on music, song, and audio generation. It provides a unified framework for generating high-quality audio controlled by text prompts, music structure, and music style. InspireMusic supports 24kHz and 48kHz audio generation, including long-form audio. The toolkit provides both inference and training code, and supports mixed-precision training for model fine-tuning and inference. InspireMusic's goal is to help users innovate soundscapes and enhance harmonic aesthetics in music research through audio tokenization and detokenization.

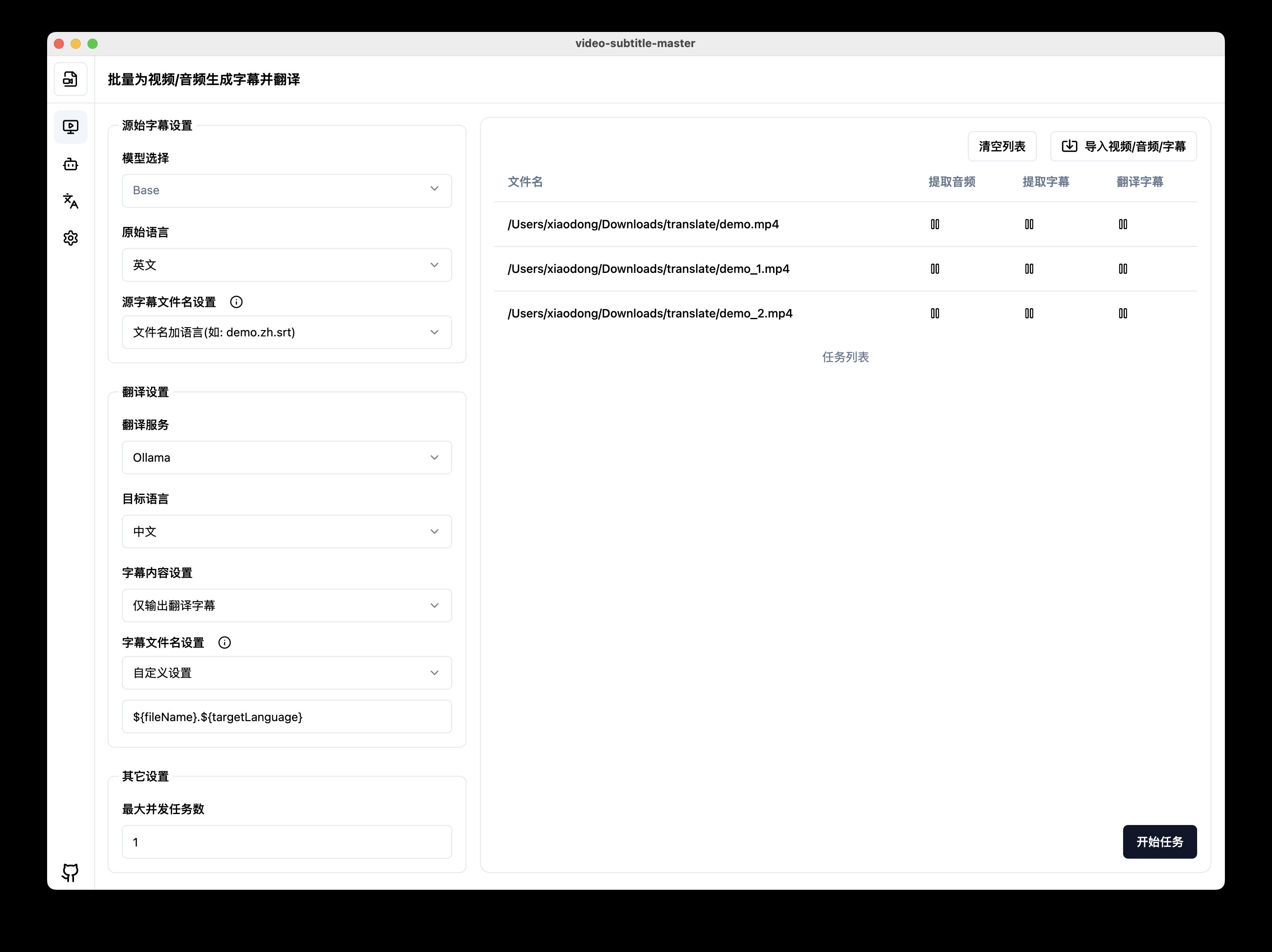

Demo: https://modelscope.cn/studios/iic/InspireMusic/summary

Feature List

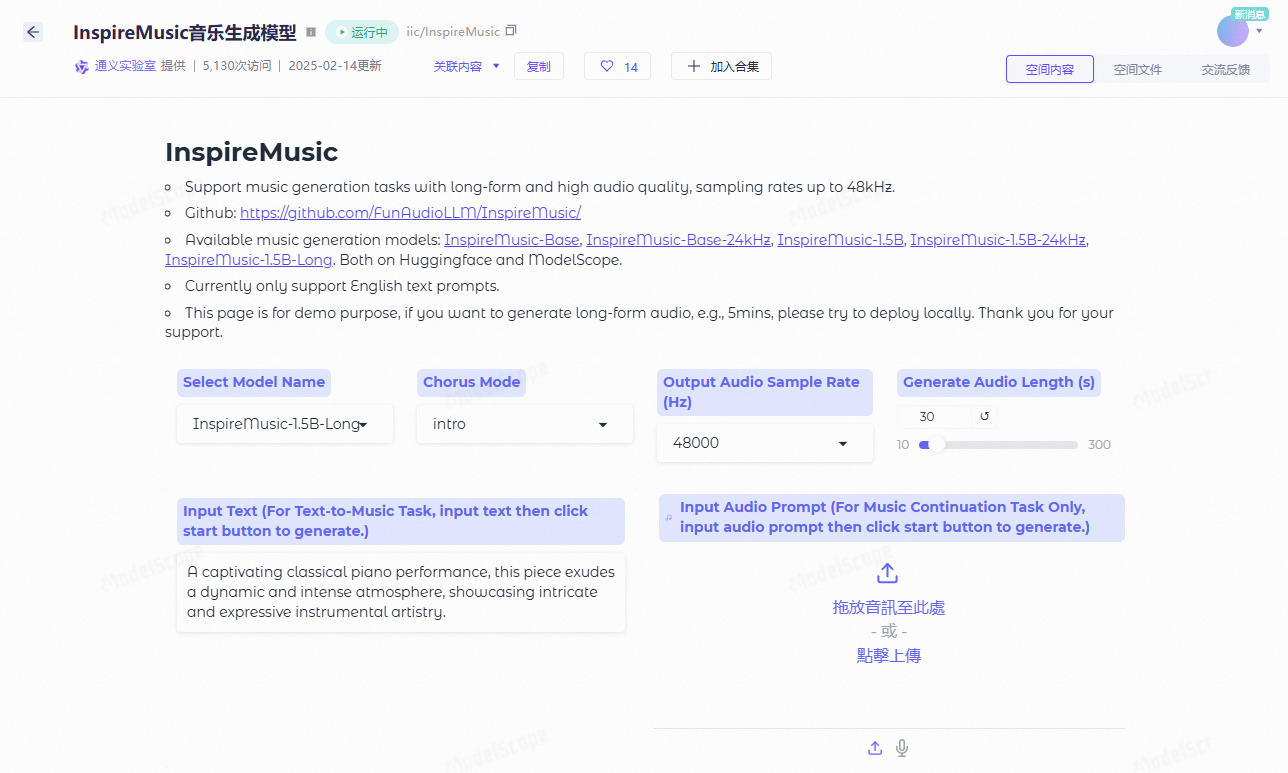

- Text-to-Music: Generate music from text prompts.

- Music Structure Control: Support for generating music based on musical structures.

- Music Style Control: You can control the style of the generated music.

- High quality audio generation: Supports 24kHz and 48kHz audio generation.

- Long Audio Generation: Supports the generation of long duration audio.

- Mixed precision training: Supports BF16, FP16/FP32 mixed precision training.

- Model fine-tuning and inference: Provides easy-to-use scripts and strategies for fine-tuning and inference.

- Online Demo: An online demo is available and users can experience it on ModelScope and HuggingFace.
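To give a sense of what the 24kHz and 48kHz options mean for long audio, the arithmetic below estimates sample counts and uncompressed size. It is a rough sketch that assumes 16-bit PCM and mono output by default; the function name `pcm_footprint` and these defaults are illustrative and are not part of InspireMusic.

```python
def pcm_footprint(duration_s, sample_rate, channels=1, bytes_per_sample=2):
    """Return (samples_per_channel, size_in_bytes) for uncompressed PCM audio.

    Assumption: 16-bit mono PCM by default; the real output format may differ.
    """
    samples = int(duration_s * sample_rate)
    size_bytes = samples * channels * bytes_per_sample
    return samples, size_bytes


if __name__ == "__main__":
    # Compare a five-minute clip at the two sample rates the article mentions.
    for rate in (24_000, 48_000):
        samples, size = pcm_footprint(duration_s=300, sample_rate=rate)
        print(f"{rate} Hz, 5 min: {samples:,} samples, {size / 1e6:.1f} MB")
```

Under these assumptions, a five-minute clip takes 14.4 MB at 24kHz and 28.8 MB at 48kHz: doubling the sample rate doubles both the number of samples the model has to produce and the storage needed.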

Usage Guide

Installation process

- Clone the repository:

git clone https://github.com/FunAudioLLM/InspireMusic.git

cd InspireMusic

- Install the dependencies:

pip install -r requirements.txt

- Install PyTorch (choose the appropriate install command for your version of CUDA):

pip install torch torchvision torchaudio
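After installation, it can help to confirm that the PyTorch packages are visible to the interpreter you plan to use. The following is a minimal sketch using only the standard library; the helper name `check_packages` is hypothetical and is not part of InspireMusic.

```python
import importlib.util
from importlib import metadata

# Packages installed by the pip command in the article.
REQUIRED = ("torch", "torchvision", "torchaudio")


def check_packages(names=REQUIRED):
    """Map each package name to its installed version, or None if missing."""
    report = {}
    for name in names:
        # find_spec returns None when a top-level module cannot be located.
        if importlib.util.find_spec(name) is None:
            report[name] = None
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Importable, but no distribution metadata under this name.
            report[name] = "unknown"
    return report


if __name__ == "__main__":
    for name, version in check_packages().items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

This only checks that the packages can be found; it does not verify that the installed build matches your CUDA version.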

Guidelines for use

Text-to-Music

- Prepare text prompts, such as "Generate an upbeat piece of piano music."

- Run the generation script:

python app.py --text "Generate an upbeat piece of piano music"

- The generated music will be saved in the specified output directory.
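Once a file has been generated, its sample rate and duration can be checked with Python's standard `wave` module. This is a sketch that assumes the output is an integer PCM WAV file (the `wave` module does not read floating-point WAV files); the helper name `describe_wav` and the example path are hypothetical.

```python
import wave


def describe_wav(path):
    """Return sample rate (Hz), channel count, and duration (s) of a PCM WAV file."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        frames = wav.getnframes()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "duration_s": frames / rate,
        }
```

For example, `describe_wav("output/generated.wav")` (a placeholder path) would let you confirm whether a clip was rendered at 24kHz or 48kHz.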

Music Structure Control

- Prepare a music structure file that defines the rhythms, chords, etc. of the music.

- Run the generation script:

python app.py --structure path/to/structure/file

- The generated music will be based on the structure file.

Music Style Control

- Select a predefined music style, e.g. "Classical", "Jazz", etc.

- Run the generation script:

python app.py --style "Classical"

- The generated music will match the selected music style.

Model fine-tuning and inference

InspireMusic provides convenient fine-tuning and inference scripts that allow users to fine-tune the model and run inference according to their needs. Below is a simple fine-tuning example:

- Prepare the training dataset.

- Run the fine-tuning script:

python finetune.py --data path/to/dataset --output path/to/output/model

- Run inference with the fine-tuned model:

python app.py --model path/to/output/model --text "Generate a new piece of music"
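The two steps above can be chained in a small driver script. The sketch below only assembles the commands exactly as they are written in this article (`finetune.py`, `app.py`, and the `--data`, `--output`, `--model`, and `--text` flags are taken from the text and have not been verified against the repository); the function names are hypothetical, and the default is a dry run that prints without executing.

```python
import shlex
import subprocess
import sys


def build_commands(data_dir, model_dir, prompt):
    """Build the fine-tune and inference commands as argument lists.

    Script names and flags are copied from the article, not verified.
    """
    finetune = [sys.executable, "finetune.py",
                "--data", data_dir, "--output", model_dir]
    infer = [sys.executable, "app.py",
             "--model", model_dir, "--text", prompt]
    return [finetune, infer]


def run_all(commands, dry_run=True):
    """Print each command; execute them in order only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the chain if fine-tuning fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmds = build_commands("path/to/dataset", "path/to/output/model",
                          "Generate a new piece of music")
    run_all(cmds, dry_run=True)
```

Passing arguments as a list avoids shell quoting problems with prompts that contain spaces or quotation marks.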

Online Demo

Users can visit the online demo pages on ModelScope and HuggingFace to experience the power of InspireMusic. Simply enter text prompts to generate high-quality music.

jian27 Integration Pack

Quark: https://pan.quark.cn/s/4843d9c54615

Baidu: https://pan.baidu.com/s/1hKIHENqPbKRBjnbVRBni7Q?pwd=2727

© Copyright notes

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
