Inflection-2.5: Meet the World's Best Personal AI

At Inflection, our mission is to create a personal AI for everyone. Last May, we launched [Pi], an empathetic, helpful, and safe personal AI. In November, we announced a new major foundation model, [Inflection-2], at the time the second-best large language model in the world.

Now, we are adding IQ (Intelligence Quotient) to Pi's exceptional EQ (Emotional Quotient).

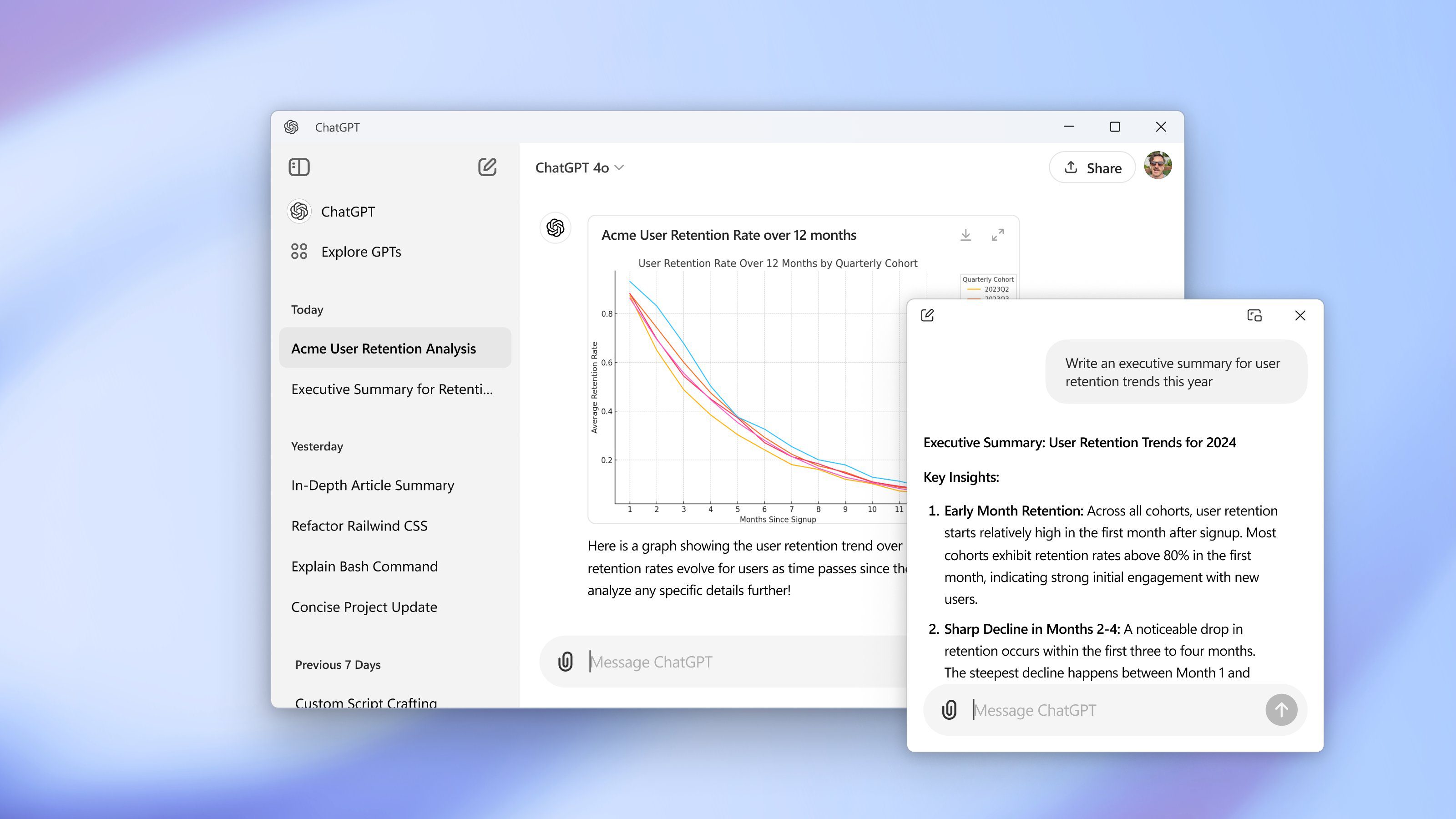

We have launched Inflection-2.5, our upgraded in-house model that is competitive with the world's leading large language models, such as GPT-4 and Gemini. It combines raw capability with our signature personality and uniquely empathetic fine-tuning. Starting today, Inflection-2.5 is available to all Pi users at [pi.ai], on [iOS], on [Android], and in our new [desktop] app.

We achieved this milestone with incredible efficiency: Inflection-2.5 approaches GPT-4's performance but used only 40% of the compute for training.

We have made particular advances in IQ areas such as coding and math. This shows up as concrete improvements on key industry benchmarks and keeps Pi at the technological frontier. Pi now also includes world-class real-time web search, so users get high-quality breaking news and up-to-date information.

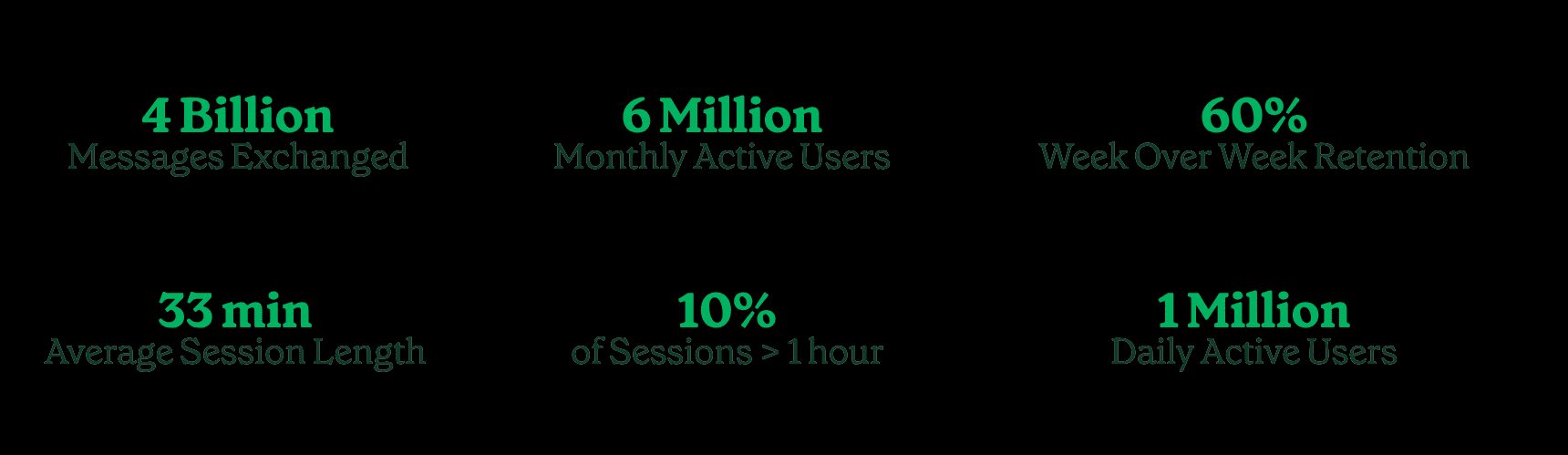

We've rolled out Inflection-2.5 to our users and they are really enjoying Pi! We've seen a significant increase in user sentiment, engagement, and retention, which is accelerating our organic user growth.

We have one million daily and six million monthly active users, who have exchanged more than four billion messages with Pi.

The average conversation with Pi lasts 33 minutes, and one in ten conversations each day lasts more than an hour. Of the people who talk to Pi in any given week, approximately 60% come back the following week, and we are seeing higher monthly stickiness than our leading competitors.

With the power of Inflection-2.5, users can discuss a wider range of topics with Pi than ever before: discuss current events, get recommendations for local restaurants, study for a biology exam, draft a business plan, code, prepare for an important conversation, or just have fun discussing a hobby. We can't wait to show you what Pi can do.

Technical results

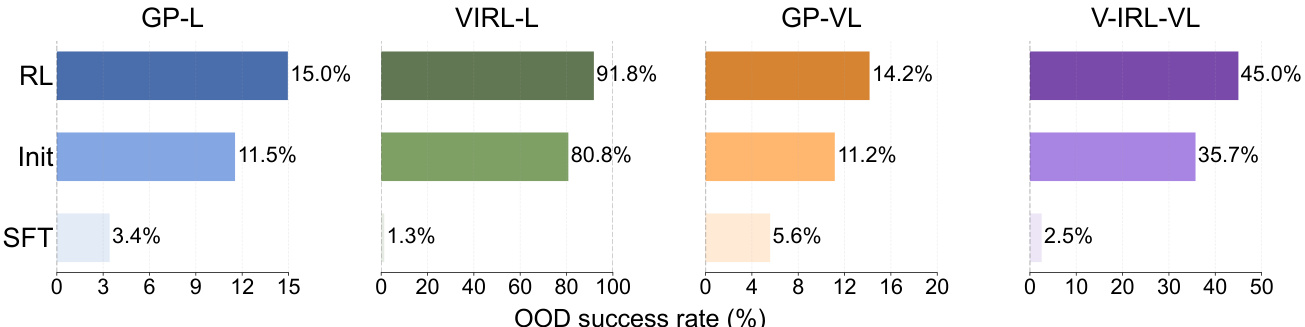

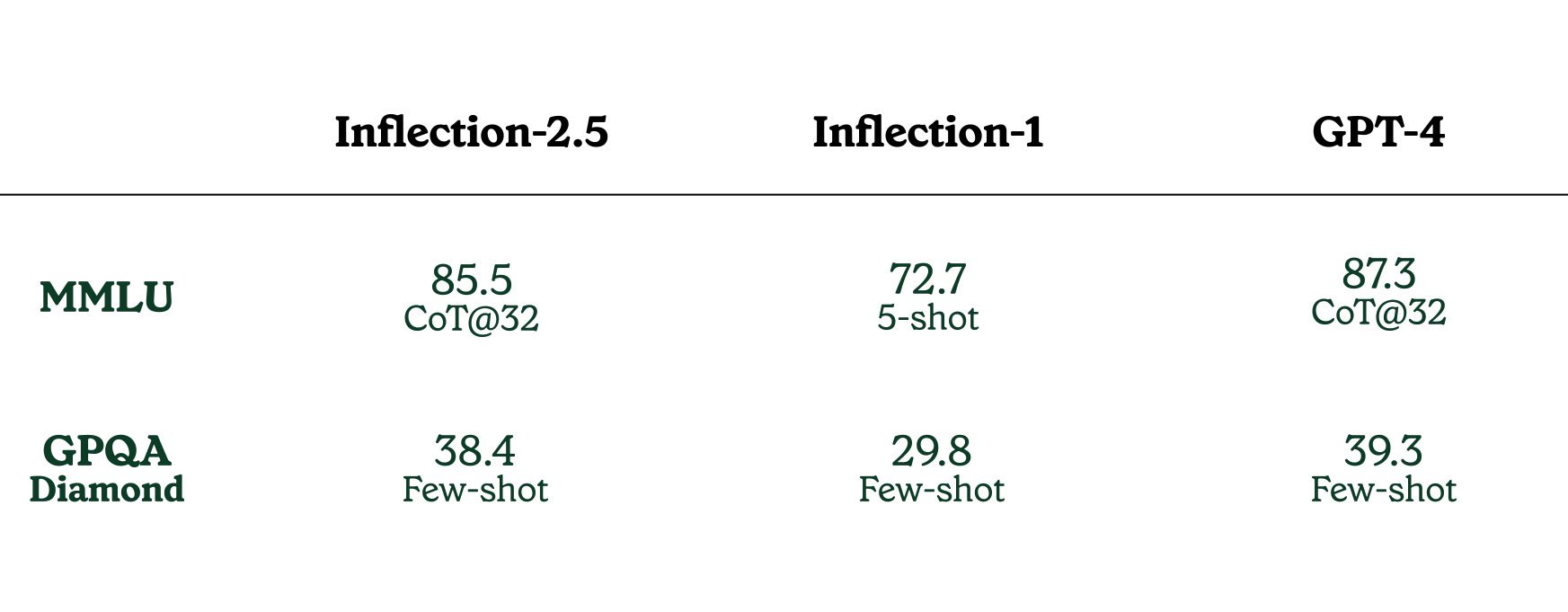

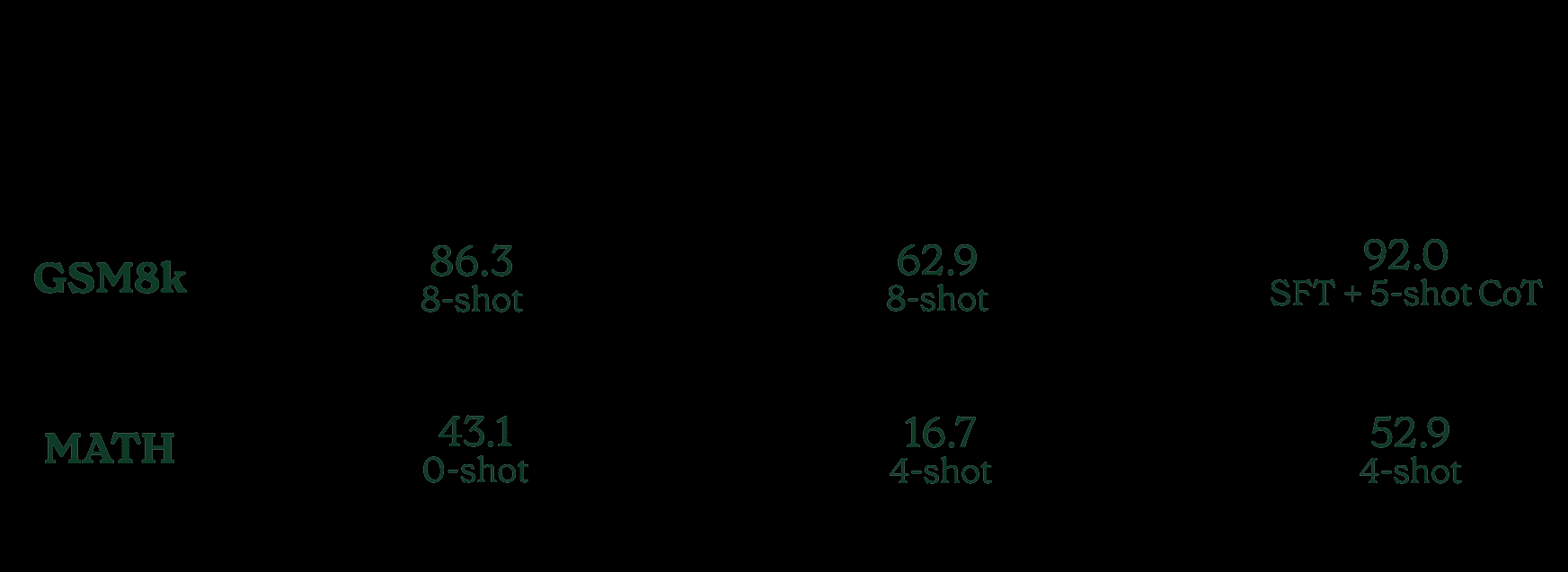

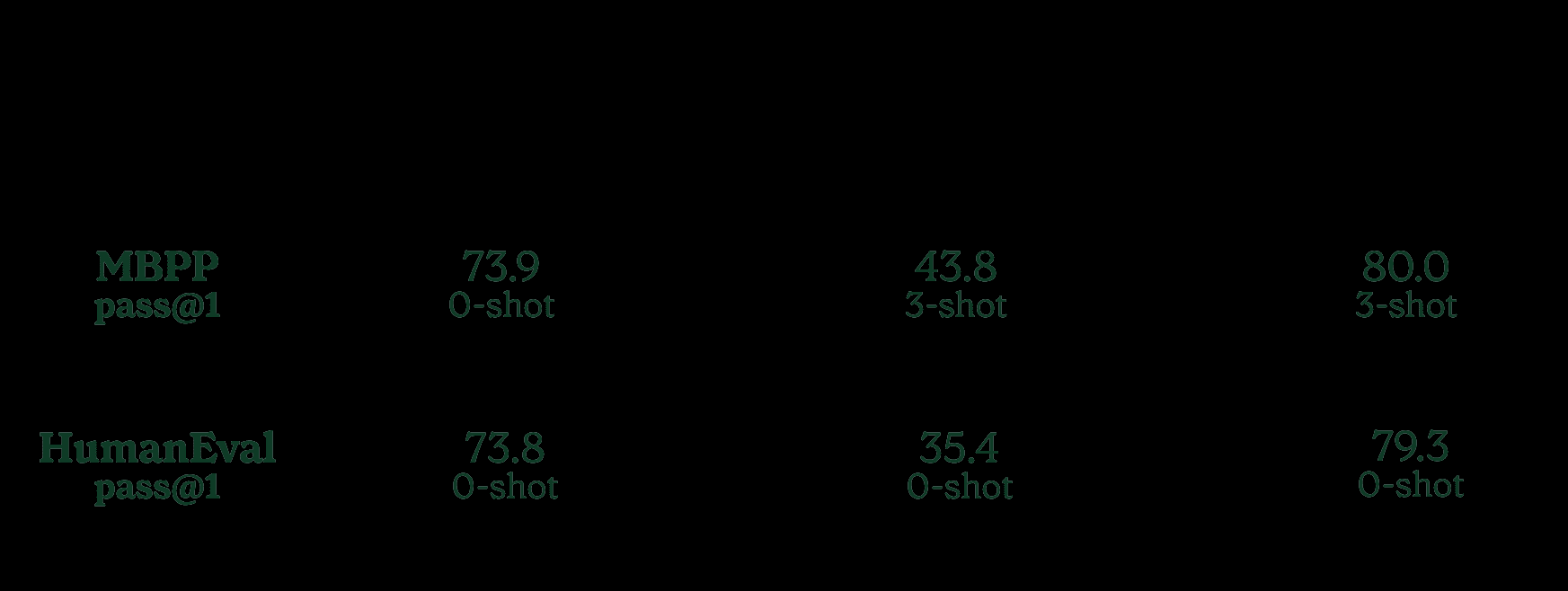

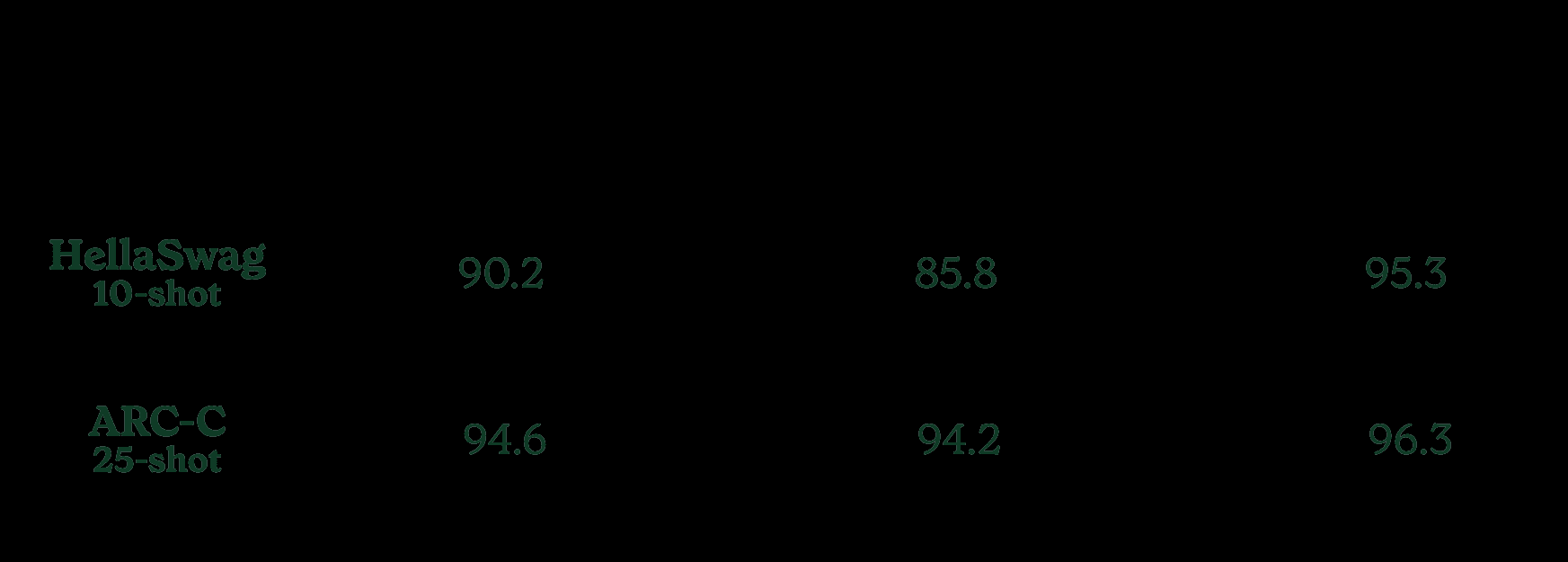

Below, we show results on a series of key industry benchmarks. For simplicity, we compare Inflection-2.5 to GPT-4. These results show that Pi now has IQ capabilities comparable to recognized industry leaders. Because reporting formats differ, we are careful to note the format used for each evaluation.

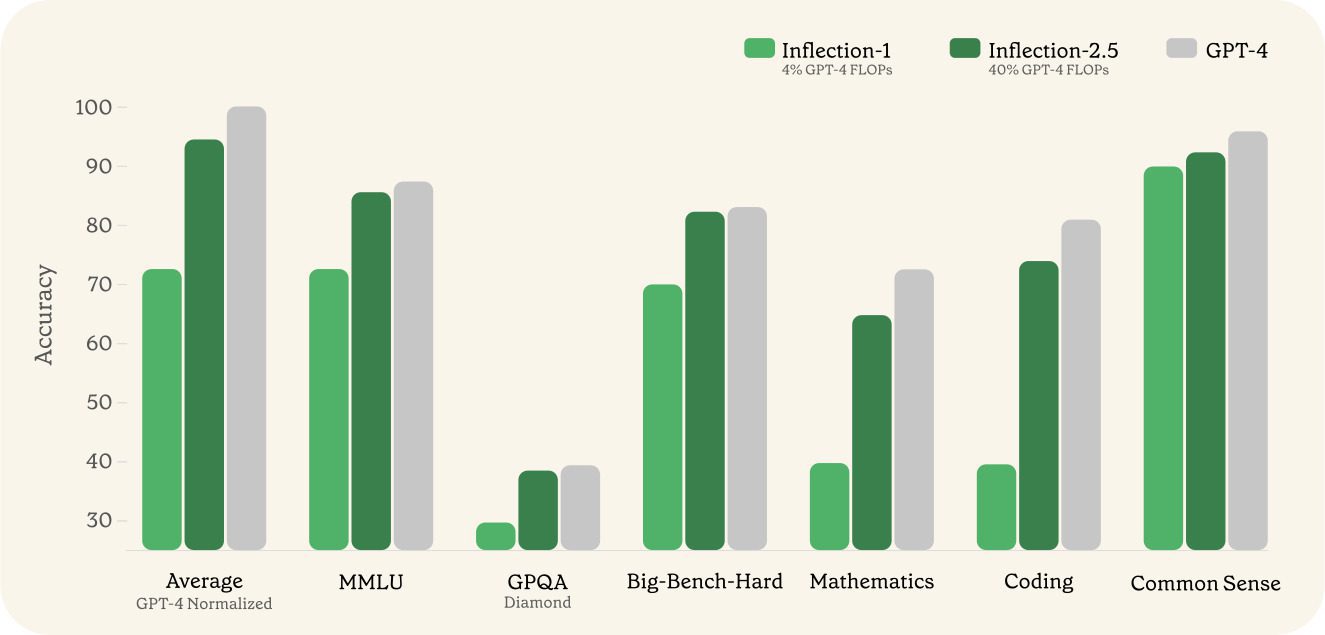

Inflection-1 used about 4% of the training floating point operations (FLOPs) of GPT-4 and, on average, reached about 72% of GPT-4's performance across a range of IQ-oriented tasks. Inflection-2.5, which now powers Pi, achieves more than 94% of GPT-4's average performance despite using only 40% of the training FLOPs. We saw significant performance gains across the board, with the biggest improvements in STEM areas.
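As a rough illustration of the efficiency claim, the figures above (roughly 4% and 40% of GPT-4's training FLOPs, roughly 72% and 94% of its average performance) can be turned into a simple ratio. This is a minimal sketch, not a metric the post itself defines: the function name and the "performance fraction per compute fraction" ratio are assumptions made here for illustration, and the inputs are the approximate values quoted in the text.

```python
def relative_efficiency(perf_vs_gpt4: float, flops_vs_gpt4: float) -> float:
    """Fraction of GPT-4 performance obtained per fraction of GPT-4 training FLOPs.

    Both arguments are fractions relative to GPT-4 (e.g. 0.40 for 40%).
    This ratio is an illustrative assumption, not a metric from the post.
    """
    if flops_vs_gpt4 <= 0:
        raise ValueError("flops_vs_gpt4 must be positive")
    return perf_vs_gpt4 / flops_vs_gpt4


# Approximate figures quoted in the text: (performance, training FLOPs), relative to GPT-4.
models = {
    "Inflection-1": (0.72, 0.04),
    "Inflection-2.5": (0.94, 0.40),
}

for name, (perf, flops) in models.items():
    ratio = relative_efficiency(perf, flops)
    print(f"{name}: {perf:.0%} of GPT-4 performance at {flops:.0%} of its FLOPs "
          f"(ratio {ratio:.2f})")
```

By this crude measure, both models obtain more than their proportional share of GPT-4's performance for the compute spent. The ratio ignores that benchmark scores do not scale linearly with compute, so it should be read as a back-of-the-envelope comparison only.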

Compared to Inflection-1, Inflection-2.5 makes significant progress on MMLU, a diverse benchmark that measures performance on tasks ranging from high school to professional-level difficulty. We also evaluate on GPQA Diamond, an extremely difficult expert-level benchmark.

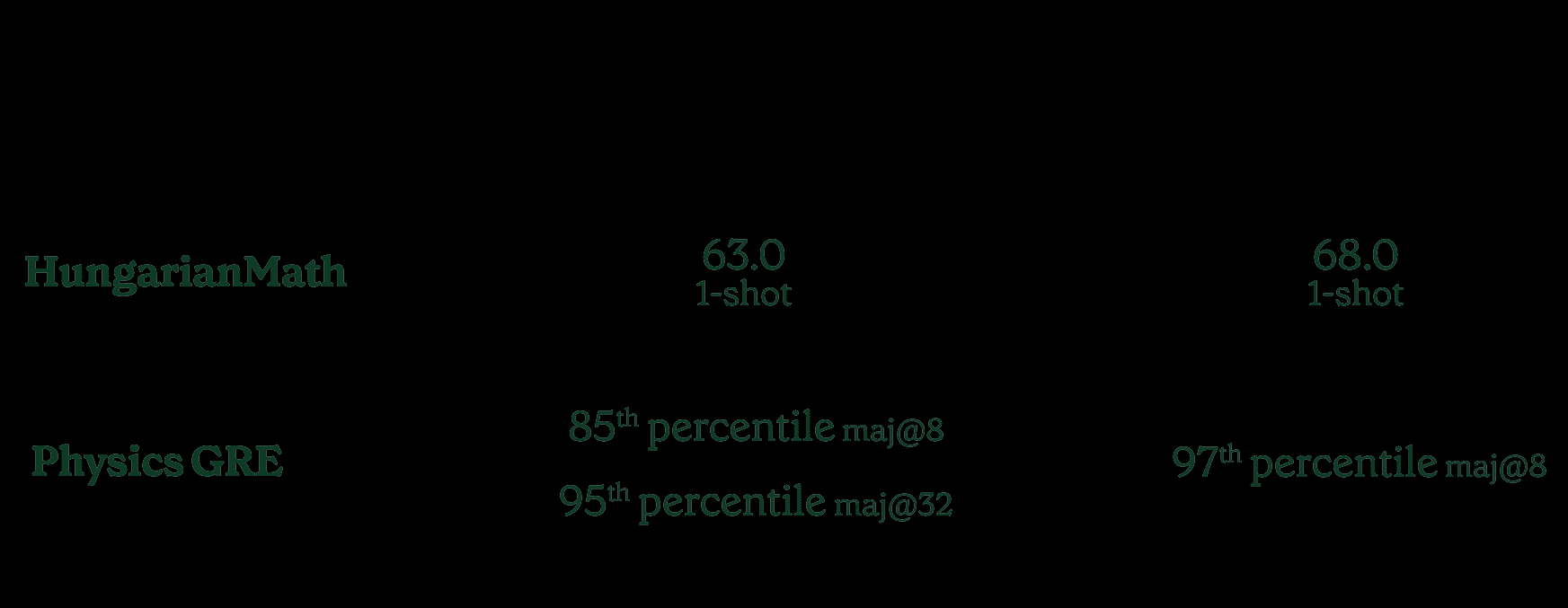

We also include results on two different STEM exams: the Hungarian Math Exam and the Physics GRE, a graduate entrance exam in physics.

For the Hungarian Math Exam, we use the few-shot prompt and format provided [here] for ease of reproducibility. Inflection-2.5 uses only the first example in the prompt.

We are also [releasing] a processed version of the Physics GRE exams (GR8677, GR9277, GR9677, GR0177) and compare Inflection-2.5's performance on the first exam to GPT-4. We find that Inflection-2.5 reaches the 85th percentile of human test takers at maj@8 and nearly the top score at maj@32. Some questions with images are excluded from the results below to allow a broad comparison, but we release all of the questions regardless.
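The post does not spell out how maj@8 and maj@32 are computed. Under the common reading of maj@k (sample k answers per question, take the most frequent one, and score that single answer against the reference), a minimal sketch looks like the following. The function names and the toy data are hypothetical, and the conversion from raw score to a human percentile is not modeled here.

```python
from collections import Counter
from typing import Sequence


def majority_answer(samples: Sequence[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    return Counter(samples).most_common(1)[0][0]


def maj_at_k(sampled: Sequence[Sequence[str]],
             references: Sequence[str],
             k: int) -> float:
    """Fraction of questions where the majority vote over the first k samples is correct."""
    if not references or len(sampled) != len(references):
        raise ValueError("need one non-empty list of samples per reference answer")
    correct = 0
    for answers, reference in zip(sampled, references):
        if len(answers) < k:
            raise ValueError("fewer than k samples for a question")
        if majority_answer(answers[:k]) == reference:
            correct += 1
    return correct / len(references)


# Hypothetical multiple-choice samples for three questions (not real model output).
toy_samples = [
    ["A", "B", "A", "A"],
    ["C", "D", "D", "D"],
    ["E", "E", "B", "E"],
]
toy_references = ["A", "D", "E"]

print("maj@1:", maj_at_k(toy_samples, toy_references, k=1))
print("maj@4:", maj_at_k(toy_samples, toy_references, k=4))
```

In the toy data the second question is wrong on the first sample but right once four samples are pooled, which is the kind of effect that can make maj@32 scores higher than maj@8.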

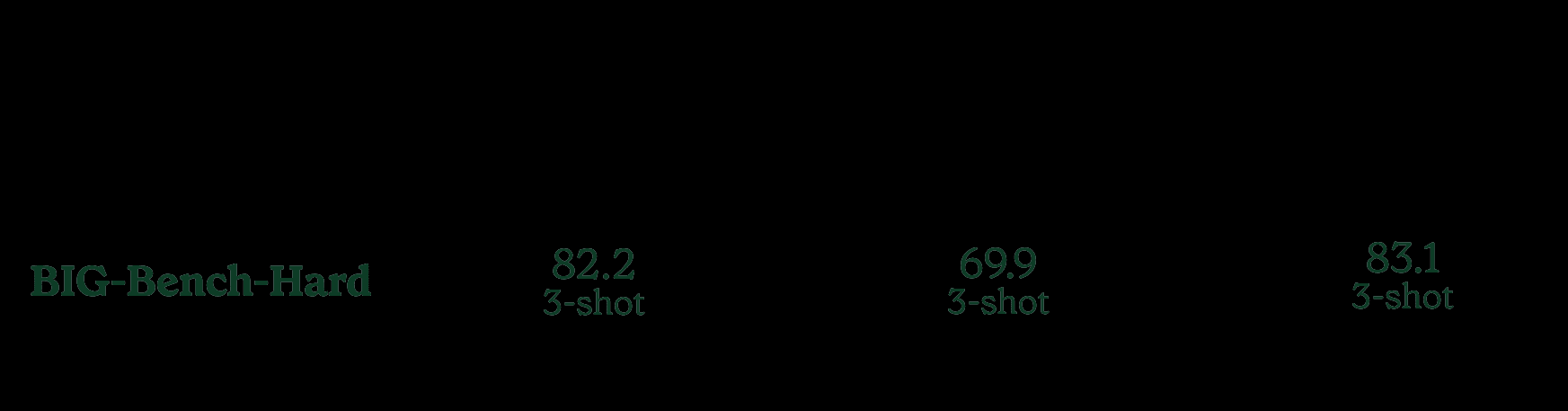

On BIG-Bench-Hard, a subset of BIG-Bench problems that are difficult for large language models, Inflection-2.5 improves on Inflection-1 by more than 10% and is competitive with the most powerful models.

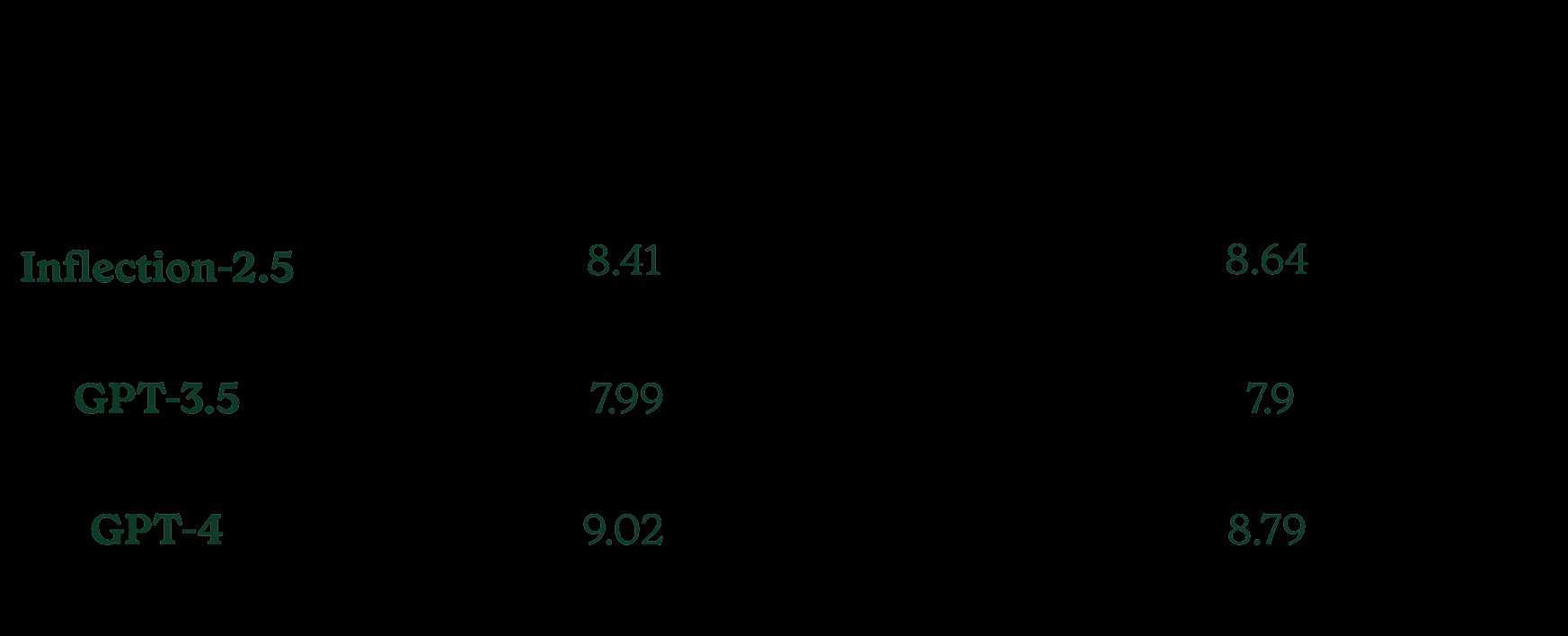

We also evaluated our model on MT-Bench, a widely used community leaderboard for comparing models. However, after evaluating on MT-Bench, we realized that nearly a quarter of the examples in the Reasoning, Math, and Coding categories had incorrect reference solutions or flawed problem premises. We therefore corrected these examples and released the corrected version of the dataset [here].

Evaluating on both versions, we find that on the corrected version our model's results are more in line with what we would expect based on other benchmarks.

Inflection-2.5 offers particular improvements over Inflection-1 in terms of math and coding performance, as shown in the table below.

In both the MBPP+ and HumanEval+ coding benchmarks, we saw a dramatic improvement over Inflection-1.

For MBPP, we report the GPT-4 values from [DeepSeek Coder]. For HumanEval, we use the [EvalPlus] leaderboard results (GPT-4, May 2023).

We also evaluated Inflection-2.5 on HellaSwag and ARC-C, a common-sense benchmark and a science benchmark that many models report on. In both cases, we see strong performance on these near-saturated benchmarks.

All of the above evaluations were performed on the model that now powers Pi, but we note that the user experience may differ slightly due to the effect of web retrieval (none of the benchmarks above use web retrieval), the structure of few-shot prompting, and other production-side differences.

In short, Inflection-2.5 retains Pi's unique, approachable personality and exceptional safety standards while becoming a more helpful model across the board.

We are grateful to our partners at Azure and CoreWeave for their support in bringing the state-of-the-art language model behind Pi to millions of users worldwide.
