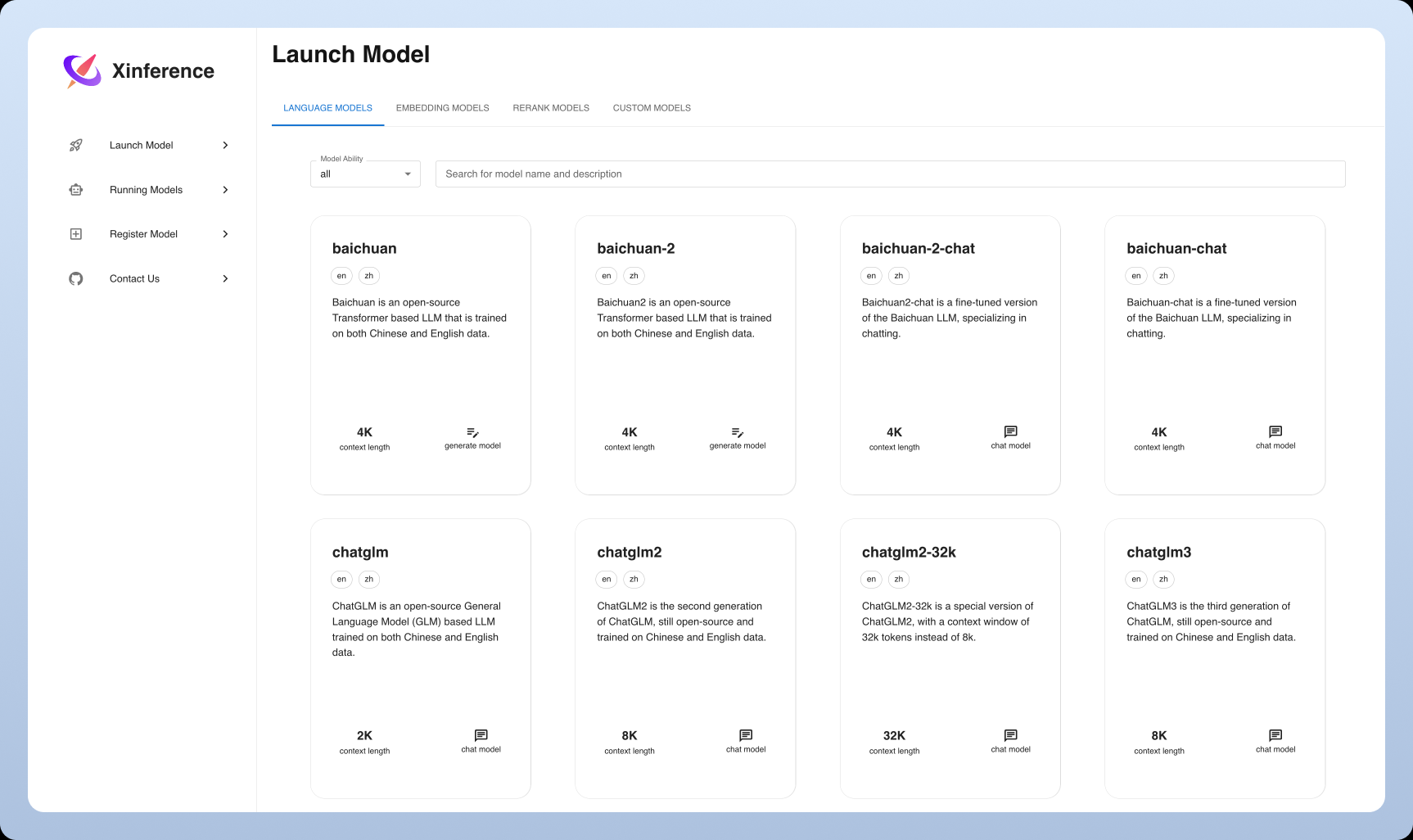

Xinference: Easy Distributed AI Model Deployment and Serving

General Introduction

Xorbits Inference (Xinference for short) is a library for distributed deployment and serving of language models, speech recognition models, and multimodal models. With it, users can deploy and serve their own models or built-in models with a single command. It runs in the cloud, on a local server, or on a personal computer, and is aimed at researchers, developers, and data scientists who want to work with current AI models.

Function List

- Distributed deployment: Supports distributed deployment scenarios, so that model inference tasks can be spread across multiple devices or machines.

- Model serving: Streamlines the process of serving large language models, speech recognition models, and multimodal models.

- Single-command deployment: Deploy and serve models with a single command, for both experimental and production environments.

- Heterogeneous hardware utilization: Makes use of heterogeneous hardware, including GPUs and CPUs, to accelerate model inference tasks.

- Flexible APIs and interfaces: Provides several ways to interact with models, including RPC, a RESTful API (compatible with the OpenAI API), a CLI, and a WebUI.

- Built-in advanced models: Built-in support for a wide range of cutting-edge open-source models, which users can use directly to conduct experiments.
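To make the "compatible with OpenAI API" point above concrete, here is a minimal sketch of what an OpenAI-style chat request looks like when built with the Python standard library. The `/v1/chat/completions` path and the `model`/`messages` fields follow the OpenAI chat completions format; the base URL, port, and model name are placeholders assumed for illustration, not values confirmed by this article.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (nothing is sent here)."""
    payload = {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Base URL and model name are placeholders; adjust them to your deployment.
    req = build_chat_request("http://localhost:8000", "my-model", "Hello")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the returned object to `urllib.request.urlopen` would send it. Because the request shape matches the OpenAI format, existing OpenAI client code can usually be pointed at such a server by changing only the base URL.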

Usage Guide

Installation process

- Environment preparation: Ensure that Python 3.7 or later is installed.

- Install Xorbits Inference:

pip install xinference

- Verify Installation: After the installation is complete, you can verify that the installation was successful by using the following command:

xinference --version
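The same check can be done from Python, which is handy inside a setup script or notebook. This is a minimal sketch using the standard library's `importlib.metadata` (available from Python 3.8). The distribution name `xinference` is an assumption about the name used on PyPI; pass whatever name you gave to `pip`.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "xinference" is an assumed distribution name; use the name you passed to pip.
    version = installed_version("xinference")
    if version is None:
        print("not installed in this environment")
    else:
        print(f"installed version: {version}")
```

Returning `None` rather than raising keeps the caller's logic simple when the package is missing from the active environment, which is a common cause of a failed verification.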

Guidelines for use

Deploying models

- Loading Models: Use the following command to load a pre-trained model:

xinference load-model --model-name <model-name>

Example:

xinference load-model --model-name gpt-3

- Starting services: After loading the model, start the service:

xinference serve --model-name <model-name>

Example:

xinference serve --model-name gpt-3

- Invoking the API: Once the service is running, call it through the RESTful API:

curl -X POST http://localhost:8000/predict -H "Content-Type: application/json" -d '{"input": "Hello"}'
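The API call above can also be made from Python. The sketch below mirrors the curl command using only the standard library. It assumes the `/predict` path, port 8000, and the `{"input": ...}` body exactly as shown in this article; the function names are hypothetical, and the response is returned as raw text because the article does not describe its format.

```python
import json
import urllib.request


def build_predict_request(host: str, port: int, text: str) -> urllib.request.Request:
    """Build the POST request; endpoint path and body shape are taken from the article."""
    payload = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}:{port}/predict",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(host: str, port: int, text: str, timeout: float = 30.0) -> str:
    """Send the request and return the raw response body as text."""
    request = build_predict_request(host, port, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With a service running locally, `predict("localhost", 8000, "Hello")` would return the server's reply. Keeping request construction separate from sending makes the request easy to inspect or log before any network traffic happens.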

Using built-in models

Xorbits Inference has built-in support for a range of models that users can run directly in their experiments. Examples include:

- Language models: e.g. GPT-3, BERT, etc.

- Speech recognition models: e.g. DeepSpeech, etc.

- Multimodal models: e.g. CLIP, etc.

Distributed deployment

Xorbits Inference supports distributed deployment, allowing users to seamlessly distribute model inference tasks across multiple devices or machines. The steps are described below:

- Configuring a Distributed Environment: Install Xorbits Inference on each node and configure the network connection.

- Starting Distributed Services: Start distributed services on the master node:

xinference serve --distributed --nodes <node-list>

Example:

xinference serve --distributed --nodes "node1,node2,node3"

- Calling the distributed API: As with a single-node deployment, call the service through the RESTful API:

curl -X POST http://<master-node-IP>:8000/predict -H "Content-Type: application/json" -d '{"input": "Hello"}'
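Since the first step above depends on the nodes being able to reach each other, a quick connectivity check can save debugging time. The sketch below tests whether a TCP port accepts connections on each node. It is a generic network check, not part of Xinference; the function names are hypothetical, and which port to probe (for example the 8000 used in this article) is an assumption about your setup.

```python
import socket
from typing import Dict, Iterable


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_nodes(nodes: Iterable[str], port: int, timeout: float = 2.0) -> Dict[str, bool]:
    """Map each node name to whether the given port accepts connections."""
    return {node: is_reachable(node, port, timeout) for node in nodes}
```

For a node list written as `"node1,node2,node3"`, calling `check_nodes("node1,node2,node3".split(","), 8000)` reports which nodes respond. A `False` entry points to a firewall, DNS, or service problem on that node rather than to the client code.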

Common problems

- How do I update the model? Use the following command to update the model:

xinference update-model --model-name <model-name>

- How do I view the logs? Use the following command to view the service log:

xinference logs --model-name <model-name>

Copyright: this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
