ICLR Sees a Rare [10,10,10,10] Perfect-Score Paper: IC-Light, New Work from the ControlNet Author, with V2 Adapted to Flux

Four 10s! That is a rare sight anywhere, and at ICLR, where the average score is only 4.76, it is a standout result.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/8989dba3bb6d866.png)

The paper that won over the reviewers is IC-Light, the new work from ControlNet author Lvmin Zhang. It is rare to see all four reviewers agree on "Rating: 10: strong accept, should be highlighted at the conference".

IC-Light had already been open source on GitHub for half a year before it was submitted to ICLR, and it has gained 5.8k stars, a clear sign of how well it works.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/4c3e253c8ca4cba.png)

The initial version was built on SD 1.5 and SDXL. Just a few days ago the team released V2, which is adapted to Flux and produces even better results.

If you are interested, you can try it out right away.

- GitHub project: https://github.com/lllyasviel/IC-Light?tab=readme-ov-file

- V2 version: https://github.com/lllyasviel/IC-Light/discussions/98

- Trial link: https://huggingface.co/spaces/lllyasviel/IC-Light

IC-Light is a lighting editing model built on diffusion models. It can precisely control the lighting of an image through text.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/48466cd29c63f82.jpg)

In other words, light and shadow effects that used to require opening masks and alpha channels in Photoshop and painstakingly separating light and dark areas can now be done with IC-Light just by describing them.

Enter a prompt asking for light coming in through a window, and you get sunlight passing through a rain-streaked window and casting a soft rim light on the side of the subject's face.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/03a622e9930bc1f.jpg)

IC-Light not only accurately reproduces the direction of light, but also accurately renders the diffuse effect of light through glass.

IC-Light works equally well with artificial light sources such as neon signs.

Following the prompt, the original classroom scene instantly turns cyberpunk: red and blue neon light falls on the characters, creating the high-tech, futuristic feel of a city late at night.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/87b905ffff32707.jpg)

The model not only accurately reproduces the color penetration effect of neon, but also maintains the consistency of the figure.

IC-Light also supports uploading a background image to change the lighting of the original image.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/9def520176fca58.png)

ControlNet should need no introduction: it solved one of the hardest problems in AI image generation.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/bbcd67180a017b5.jpg)

GitHub project: https://github.com/lllyasviel/ControlNet

Previously, the biggest headache with Stable Diffusion was that image details could not be controlled precisely. Whether it was composition, pose, facial features, or spatial relationships, the results still followed the AI's own ideas no matter how detailed the prompt was.

But the advent of ControlNet was like putting a "steering wheel" on SD, and many commercialized workflows were born.

Academic applications also flourished, and ControlNet won the Marr Prize (the best paper award) at ICCV 2023.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/8d79c3f201e6ece.jpg)

Many industry insiders say that in a field as fiercely competitive as image generation, real breakthroughs are getting harder and harder. Yet Lvmin Zhang always seems to find a new angle, and every release lands squarely on what users need. This time is no exception.

In the real world, lighting and the surface material of an object are tightly coupled. When you look at an object, it is hard to tell whether its appearance comes from the light or from the material. As a result, it is also hard to get an AI to edit the lighting without changing the material of the object itself.

Previous studies have tried to solve this problem by constructing specific datasets, but with little success. The authors of IC-Light found that synthetically generated data using AI with some manual processing can achieve good results. This finding is instructive for the entire field of research.

When the ICLR 2025 review scores were first released, IC-Light was already the highest-scoring paper at "10-10-8-8". The reviewers were also full of praise in their comments:

"This is an example of a wonderful paper!"

"I think the proposed methodology and the resulting tools will be immediately useful to many users!"

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/a7220c2d1978be5.png)

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/2e3a45f17f8ed6e.png)

By the end of the rebuttal, the authors had added some references and experiments, and the two reviewers who had given an 8 were happy to raise their scores to a perfect 10.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/fd6328ce5447bba.png)

Now let's take a look at what the paper actually says.

Research details

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/deb97e4fe1ce8ab.png)

- Paper title: Scaling In-the-Wild Training for Diffusion-based Illumination Harmonization and Editing by Imposing Consistent Light Transport

- Link to paper: https://openreview.net/pdf?id=u1cQYxRI1H

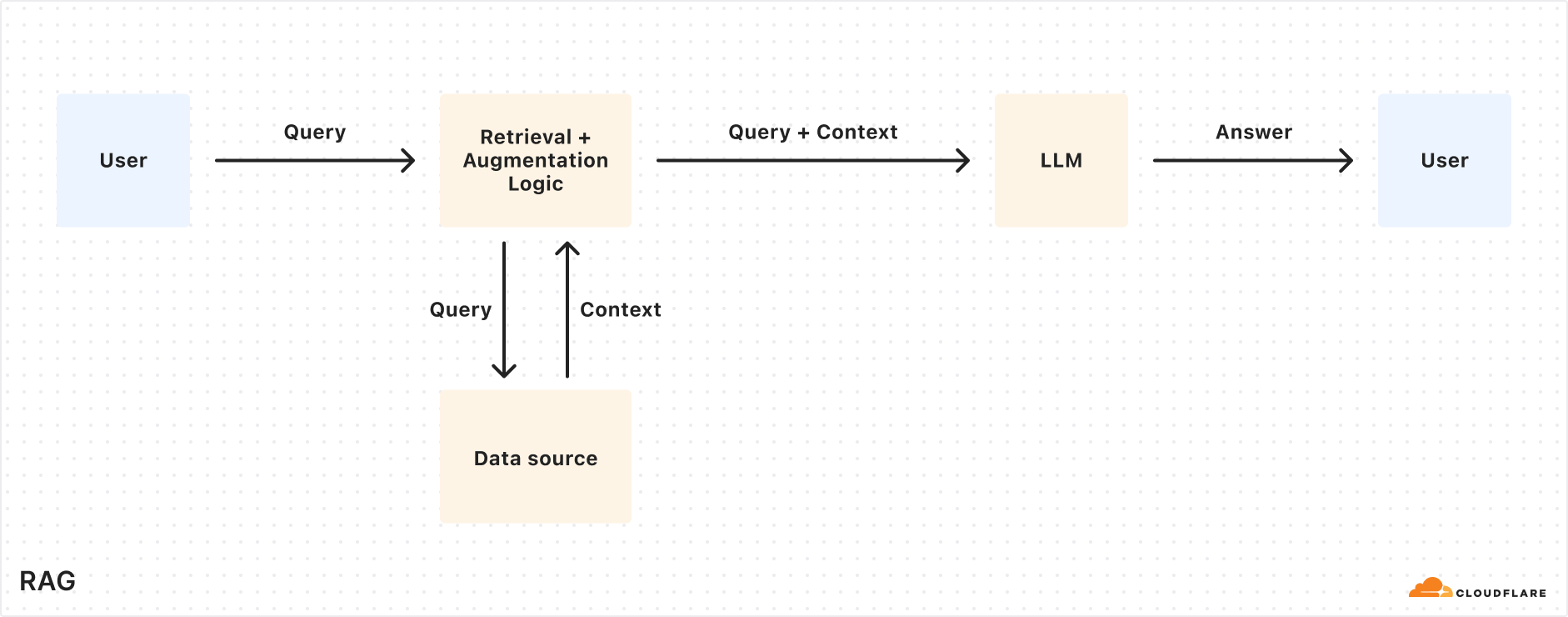

In this paper, the researchers propose Imposing Consistent Light (IC-Light) transport during training. The method is based on the physical principle that light transport is independent across light sources: the linear blend of an object's appearances under different lighting conditions is consistent with its appearance under the mixed lighting.
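To make the linearity principle concrete, here is a minimal toy sketch in plain Python. It is not taken from the paper: the `transport` matrix, the two light vectors, and the `render` helper are all hypothetical values and names chosen for illustration, and the scene is reduced to three pixels lit by two light sources.

```python
# Toy linear light transport: each pixel is a weighted sum of light intensities.
# transport[i][j] is the (hypothetical) contribution of light j to pixel i.

def render(transport, lights):
    """Return pixel intensities for the given light intensities."""
    return [sum(t * l for t, l in zip(row, lights)) for row in transport]

transport = [
    [0.8, 0.1],
    [0.3, 0.5],
    [0.0, 0.9],
]
light_a = [1.0, 0.0]  # only the first light is on
light_b = [0.0, 2.0]  # only the second light is on, at double intensity
light_mixed = [a + b for a, b in zip(light_a, light_b)]

image_a = render(transport, light_a)
image_b = render(transport, light_b)
image_mixed = render(transport, light_mixed)

# Blending the two single-light images should match the mixed-light render.
image_sum = [a + b for a, b in zip(image_a, image_b)]
max_gap = max(abs(m - s) for m, s in zip(image_mixed, image_sum))
print(max_gap < 1e-9)  # True
```

The point of the sketch is only that appearance under mixed lighting equals the sum of the appearances under each light alone; a real scene has far more pixels and lights, but the same relationship holds.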

As shown in Figure 2, the researchers model the distribution of lighting effects using multiple available data sources: arbitrary images, 3D data, and light stage images. These distributions capture a variety of complex real-world lighting scenarios, such as backlighting, rim lighting, and glow. For simplicity, all data is processed into a common format.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/120e61bf41390a4.png)

However, learning from large-scale, complex, and noisy data is challenging. Without proper regularization and constraints, the model can easily degenerate into random behavior that does not match the intended lighting edit. The researchers' solution is to impose consistent light (IC-Light) transport during training.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/da84749c0b2be39.png)

By imposing this consistency, the researchers introduce a robust, physically grounded constraint that ensures the model modifies only the lighting of the image while preserving other intrinsic properties such as albedo and fine image detail. The method can be trained stably and scalably on more than 10 million diverse samples, including real photographs from light stages, rendered images, and in-the-wild images with synthetic lighting augmentation. According to the paper, the method improves the accuracy of lighting edits, reduces uncertainty, and minimizes artifacts without altering the underlying appearance details.
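One way to picture such a constraint is as a penalty on the gap between a mixed-light prediction and the combination of the single-light predictions. The sketch below is a simplified pixel-space illustration under that assumption, not the paper's actual loss: the function name `consistency_loss`, the foreground `mask`, and the plain sum used to merge the two predictions are all hypothetical, and the real formulation operates inside diffusion training and may differ.

```python
def consistency_loss(pred_a, pred_b, pred_mixed, mask):
    """Mean squared gap between the mixed-light prediction and the sum of the
    two single-light predictions, averaged over pixels where mask is truthy."""
    total, count = 0.0, 0
    for a, b, m, keep in zip(pred_a, pred_b, pred_mixed, mask):
        if keep:
            total += (m - (a + b)) ** 2
            count += 1
    return total / count if count else 0.0

# Predictions that respect linearity give a loss near zero.
consistent = consistency_loss([0.2, 0.4], [0.1, 0.3], [0.3, 0.7], [1, 1])

# A mixed-light prediction that brightens the first pixel too much is penalized.
inconsistent = consistency_loss([0.2, 0.4], [0.1, 0.3], [0.5, 0.7], [1, 1])

print(consistent < inconsistent)  # True
```

Adding a term like this to the usual training objective would push the model toward outputs where only the lighting changes, which is the behavior the paragraph above describes.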

Overall, the contributions of this paper include:

(1) IC-Light, a method for scaling up the training of diffusion-based lighting editing models by imposing consistent light transport, is proposed to ensure accurate lighting modification while preserving intrinsic image details;

(2) Pre-trained lighting editing models are provided to facilitate lighting editing applications in different domains of content creation and processing;

(3) The scalability and performance of the method are verified through extensive experiments, showing how it differs from other methods in dealing with various lighting conditions;

(4) Other applications, such as normal map generation and artistic lighting manipulation, are presented to further demonstrate the versatility and robustness of the method in real-world, practical scenes.

Results

In the experiments, the researchers verified that scaling up the training size and diversifying the data sources can enhance the robustness of the model and improve the performance of various light-related downstream tasks.

Ablation experiments demonstrate that applying the IC-Light method during training improves the accuracy of lighting edits and better preserves intrinsic properties such as albedo and image detail.

In addition, compared with other models trained on smaller or more structured datasets, the method in this paper handles a wider range of lighting distributions, such as rim lighting, backlighting, magic glow, and sunset glow.

The researchers also demonstrate the method's ability to handle a wider range of in-the-wild lighting scenarios, including artistic lighting and synthetic lighting effects. Additional applications such as generating normal maps are also explored, and differences between this approach and typical mainstream geometry estimation models are discussed.

Ablation experiments

The researchers first trained the model with the in-the-wild image augmentation data removed. As shown in Figure 4, removing the in-the-wild data severely hurt the model's ability to generalize, especially on complex images such as portraits. For example, hats in portraits that were not present in the training data were often rendered in the wrong color (e.g., changing from yellow to black).

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/9c1ad3fef19857c.png)

The researchers also tried removing the light transport consistency. Without this constraint, the model's ability to generate consistent lighting and preserve intrinsic properties such as albedo (reflectance color) dropped significantly. For example, the distinction between red and blue disappeared in some images, and color saturation problems were evident in the output.

In contrast, the complete approach combines multiple data sources and imposes light transport consistency, producing a balanced model that generalizes across a wide range of situations. It also preserves intrinsic properties such as fine-grained image detail and albedo, while reducing errors in the output image.

Other Applications

As shown in Figure 5, the researchers also demonstrate other applications, such as illumination harmonization conditioned on a background. By training with additional channels for the background condition, the model can generate lighting based solely on the background image, without relying on an environment map. In addition, the method supports different base models such as SD 1.5, SDXL, and Flux, and the generated results demonstrate each of them.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/e94edfc26e3fc2b.png)
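For readers wondering what "additional channels" might look like in practice, a common pattern in diffusion models is to concatenate the condition with the noisy latent along the channel axis before it enters the network. The sketch below illustrates only that pattern with nested Python lists. The 4-channel latents, the 8x8 spatial size, and the helper names are assumptions for illustration, not details confirmed by the article.

```python
def make_latent(num_channels, height, width, fill):
    """Build a channels x height x width nested list filled with one value."""
    return [[[fill] * width for _ in range(height)] for _ in range(num_channels)]

def concat_channels(*latents):
    """Concatenate latents along the channel axis; spatial sizes must match."""
    height, width = len(latents[0][0]), len(latents[0][0][0])
    stacked = []
    for latent in latents:
        if len(latent[0]) != height or len(latent[0][0]) != width:
            raise ValueError("all latents must share the same height and width")
        stacked.extend(latent)
    return stacked

# Hypothetical 4-channel latents for the noisy sample, foreground, and background.
noisy_latent = make_latent(4, 8, 8, 0.0)
foreground_latent = make_latent(4, 8, 8, 1.0)
background_latent = make_latent(4, 8, 8, 2.0)

model_input = concat_channels(noisy_latent, foreground_latent, background_latent)
print(len(model_input))  # 12
```

With this layout, the first layer of the network sees the background alongside the foreground at every spatial position, so lighting cues can be read from the background image itself.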

Quantitative evaluation

For quantitative evaluation, the researchers used Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS). They held out a subset of 50,000 unseen 3D rendered samples from the dataset for evaluation, ensuring that the model had not encountered these samples during training.
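Of the three metrics, PSNR is the simplest to compute by hand. The sketch below uses the standard definition, 10 * log10(MAX^2 / MSE), on flattened pixel lists. It is a generic illustration rather than the paper's evaluation code, and the sample pixel values are made up.

```python
import math

def psnr(reference, test, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have no error
    return 10.0 * math.log10(max_value ** 2 / mse)

ground_truth = [0.0, 0.5, 1.0]
relit_output = [0.1, 0.5, 0.9]

score = psnr(ground_truth, relit_output)
print(round(score, 2))  # 21.76
```

Higher PSNR means the output is closer to the reference pixel by pixel, while LPIPS (where lower is better) is meant to track perceived similarity, which is why the two can rank methods differently.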

The methods compared were SwitchLight, DiLightNet, and variants of this paper's method with certain components removed (e.g., no light transport consistency, no augmentation data, no 3D data, and no light stage data).

As shown in Table 1, the method in this paper outperforms the other methods as far as LPIPS is concerned, indicating superior perceptual quality. The highest PSNR was obtained for the model trained on 3D data only, which may be due to the bias in the evaluation of the rendered data (since only 3D rendered data was used in this test). The complete method combining multiple data sources strikes a balance between perceptual quality and performance.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/635657ca6ca745f.png)

Visual comparison

The researchers also made a visual comparison with previous methods. As shown in Figure 6, compared with Relightful Harmonization, this paper's model is more robust to shadows thanks to its larger and more diverse training dataset. SwitchLight and this paper's model produce competitive relighting results. The normal maps from this approach are somewhat more detailed, thanks to its method of merging and deriving shadows from multiple representations. In addition, the model produces higher-quality human normal maps than GeoWizard and DSINE.

![ICLR sees a perfect [10,10,10,10] paper: IC-Light V2 from the ControlNet author, adapted to Flux](https://aisharenet.com/wp-content/uploads/2024/12/68e139549709fc8.png)

More details of the study can be found in the original paper.

