HyperChat: AI Conversation Client for Performing Complex Tasks with MCP Intelligence

General Introduction

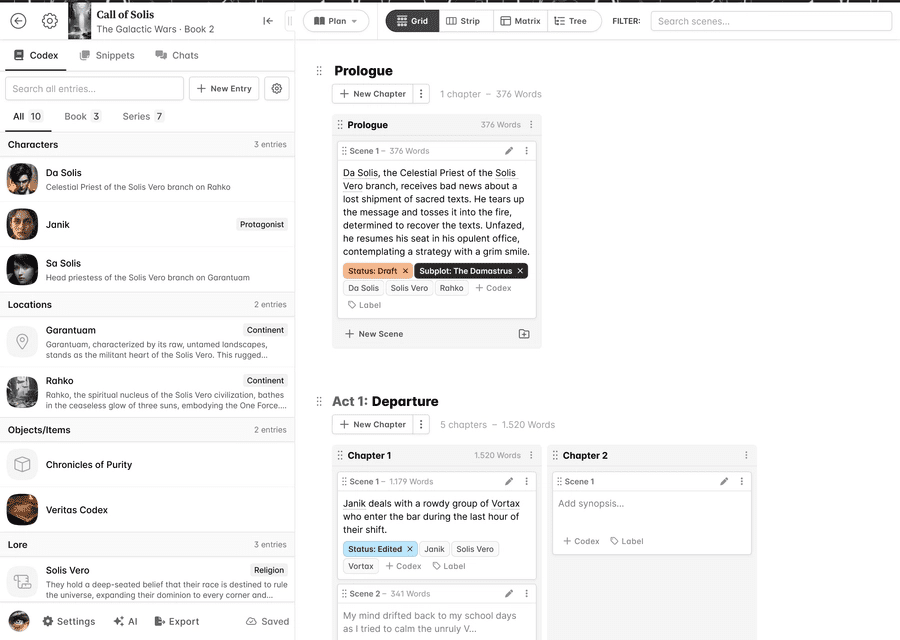

HyperChat is an open-source chat client developed by BigSweetPotatoStudio and hosted on GitHub. It aims to provide an efficient chat experience by integrating multiple large language model (LLM) APIs (e.g. OpenAI, Claude, Qwen) while using the Model Context Protocol (MCP) for task automation and productivity extensions. HyperChat supports both macOS and Windows. It has a built-in plug-in marketplace and also allows manual installation of third-party MCP plug-ins, so it suits both developers and general users. Features include multi-session management (ChatSpace), Agent calls, and timed tasks. The current version is 0.3.4 and it is still being actively updated.

HyperChat main dialog interface

HyperChat Timed Tasks

HyperChat Completes Complex Multi-Step, Multi-Modal Tasks

Function List

- Multiple LLM API integrations: Compatible with OpenAI, Claude (via OpenRouter), Qwen, Deepseek, GLM, Ollama and other models.

- MCP Plug-in Marketplace: Provides one-click installation and configuration, supports task automation and knowledge base expansion (RAG).

- Scheduled tasks: Set up tasks that an Agent runs automatically, and check their status in real time.

- ChatSpace Multi-Session: Supports multiple conversations at the same time to improve efficiency.

- Agent Management: Preset prompts and MCP services, support for inter-agent invocations.

- Cross-platform compatibility: Supports macOS and Windows, including Dark Mode.

- Content rendering: SVG, HTML, KaTeX formula presentation and code highlighting support.

- WebDAV synchronization: Enables data synchronization across devices.

- Developer Support: Open source code, allowing custom plug-ins and feature development.

Usage Guide

Installation process

Installing HyperChat requires a few prerequisite tools. The steps are as follows:

1. Environment preparation

Ensure that the following tools are installed on your system:

- Node.js (runs the core HyperChat environment):

  - macOS: In a terminal, run the following (Homebrew needs to be installed first):

    brew install node

  - Windows: Run the following, or download the installer from nodejs.org:

    winget install OpenJS.NodeJS.LTS

- uv (manages the Python environment):

  - macOS: Run:

    brew install uv

  - Windows: Run:

    winget install --id=astral-sh.uv -e
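The prerequisites above can be checked before moving on. This is a minimal sketch, assuming the tools are exposed on PATH under the executable names node, npm, and uv. The helper name missing_tools is hypothetical and is not part of HyperChat.

```python
import shutil


def missing_tools(tools):
    """Return the names from `tools` that cannot be found on PATH."""
    return [name for name in tools if shutil.which(name) is None]


if __name__ == "__main__":
    # Executable names are assumptions based on the install commands above.
    missing = missing_tools(["node", "npm", "uv"])
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```

If a tool is reported missing right after installation, opening a new terminal usually refreshes PATH.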

2. Download and installation

- Visit the GitHub repository (https://github.com/BigSweetPotatoStudio/HyperChat):

  - Click "Code" > "Download ZIP" to download the source code, or clone it:

    git clone https://github.com/BigSweetPotatoStudio/HyperChat.git

- Go to the project directory and install the dependencies:

  cd HyperChat
  cd electron && npm install
  cd ../web && npm install
  cd .. && npm install

3. Launch HyperChat

- Run in development mode:

  npm run dev

- macOS permission issues: If macOS reports that the app is damaged, run:

  sudo xattr -d com.apple.quarantine /Applications/HyperChat.app

- Windows: Double-click the generated application to launch it.

4. Environment configuration (optional)

- nvm users (macOS): Check whether the PATH contains Node.js:

  echo $PATH

  If it is missing, add it manually. On Windows, nvm works by default.

Main Feature Guide

Configuring the LLM API

HyperChat supports a variety of LLMs, and the following are the configuration steps:

- Get the API key:

  - Register with the target LLM service (e.g., OpenAI, OpenRouter) and generate a key.

- Enter the key:

  - Open HyperChat and go to Settings > API Configuration.

  - Paste the key, select the service, and make sure the service is compatible with the OpenAI-style API.

- Test:

  - Type "1+1=?" in the chat box and confirm that the correct result is returned.
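To make "compatible with the OpenAI-style API" concrete, here is a rough sketch of the request such a service is expected to accept: a POST to a /chat/completions path with a Bearer key and a list of messages. The function name build_chat_request, the base URL, the key, and the model name are placeholders chosen for illustration. The sketch does not show how HyperChat itself issues requests.

```python
import json


def build_chat_request(base_url, api_key, model, prompt):
    """Build (url, headers, body) for an OpenAI-style chat completion call."""
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    })
    return url, headers, body


if __name__ == "__main__":
    # Placeholder values: substitute your provider's base URL, key, and model.
    url, headers, body = build_chat_request(
        "https://api.openai.com/v1", "sk-PLACEHOLDER", "gpt-4o-mini", "1+1=?"
    )
    print(url)
    print(body)
```

The sketch only builds the request, so it can be inspected without spending API credits. Sending it with any HTTP client and a real key is one way to check a provider outside the app.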

Using the MCP Plug-in

MCP plug-ins are the core extension mechanism of HyperChat:

- Access the built-in marketplace:

  - Click the "Plugins" tab and browse the available plug-ins (e.g. hypertools, fetch).

- One-click installation:

  - Select a plug-in and click "Install"; the configuration is completed automatically.

- Manual installation of third-party plug-ins:

  - Download the plug-in file, open "Plugin Management", fill in command, args, and env, then save.

- Usage example:

  - After installing the search plug-in, enter "Search for the latest AI news" to see the results returned.
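The three fields in the manual-installation step describe how to start the plug-in as a local process: the executable, its arguments, and extra environment variables. The sketch below models that under stated assumptions. The names McpPluginConfig and launch_spec are hypothetical, and the example values (a fetch-style server started through uvx) are illustrative only. How HyperChat stores or launches these entries internally is not covered here.

```python
import os
from dataclasses import dataclass, field


@dataclass
class McpPluginConfig:
    """The three fields named in the manual-installation step."""
    command: str
    args: list = field(default_factory=list)
    env: dict = field(default_factory=dict)


def launch_spec(config, base_env=None):
    """Return (argv, environment) a client could use to start the plug-in process."""
    if not config.command:
        raise ValueError("command must not be empty")
    merged = dict(os.environ if base_env is None else base_env)
    merged.update(config.env)  # plug-in variables override inherited ones
    return [config.command, *config.args], merged


# Illustrative values only: a fetch-style server started through uvx.
example = McpPluginConfig(command="uvx", args=["mcp-server-fetch"], env={"EXAMPLE_VAR": "1"})
argv, env = launch_spec(example)
```

Keeping secrets such as API tokens in env rather than in args keeps them out of the visible command line.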

Setting up timed tasks

Task automation is a highlight of HyperChat:

- Create a task:

  - Click New in the Tasks panel.

  - Enter a task name (e.g., "Daily Summary"), a time (e.g., "Every day at 18:00"), and instructions (e.g., "Summarize Today's Calendar").

- Specify the Agent:

  - Select a configured Agent to perform the task.

- View results:

  - When the task is completed, the status is updated to "Completed" and the results can be downloaded from the "Task History".
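A schedule such as "Every day at 18:00" comes down to computing the next matching time after the current moment. The sketch below shows that arithmetic with a hypothetical helper, next_daily_run. It uses naive local datetimes and ignores daylight-saving transitions. It illustrates the idea and does not describe HyperChat's own scheduler.

```python
from datetime import datetime, timedelta


def next_daily_run(now, hour, minute=0):
    """Return the next datetime strictly after `now` that falls on hour:minute."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        # Today's slot has already passed (or is exactly now): use tomorrow's.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print(next_daily_run(datetime.now(), 18, 0))
```

Treating a slot that is exactly "now" as already passed avoids running the same task twice in a row.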

ChatSpace Multi-Session Management

- Open a new session:

  - Click the "+" button to create a new ChatSpace.

  - Each session runs independently and can talk to different Agents at the same time.

- Switch sessions:

  - Quickly switch between different ChatSpaces by selecting them in the left column.

Agent-to-Agent Calls

- Configure the HyperAgent:

  - Create a new Agent in the Agent panel and set up its prompts and MCP services.

- Call other Agents:

  - Enter a command such as "Call Agent A to generate a report" and HyperAgent coordinates automatically.

Content Rendering and Synchronization

- Formulas and code: Enter $E=mc^2$ to display a KaTeX formula, or paste code to have it highlighted.

- WebDAV synchronization: Enter the WebDAV address and credentials in Settings to enable data synchronization.
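Before entering a WebDAV address and credentials, it can help to confirm that the server accepts them. The sketch below builds a PROPFIND request with HTTP Basic authentication, which is a common way to probe a WebDAV collection. The function name build_webdav_probe is hypothetical. Whether a given server uses Basic authentication is an assumption to verify with your provider.

```python
import base64
import urllib.request


def build_webdav_probe(url, username, password):
    """Build a PROPFIND request (Depth: 0) with HTTP Basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        method="PROPFIND",
        headers={"Authorization": f"Basic {token}", "Depth": "0"},
    )
```

Passing the request to urllib.request.urlopen sends it. A WebDAV server normally answers a successful PROPFIND with status 207 (Multi-Status), while rejected credentials typically produce a 401 error.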

Example of an operational process: generating a math report

- Configure the LLM: Connect the OpenAI API.

- Install the plug-in: Install hypertools from the marketplace.

- Create the task: Set "Generate Math Report every Monday at 10:00" with the instruction "Parse and generate trigonometry report".

- Run: The task is executed automatically, and the result contains KaTeX formulas that can be copied and used directly.

Caveats

- LLM compatibility: Models such as Deepseek may produce errors in multi-step calls; prefer OpenAI or Claude.

- System requirements: Ensure that the uv and Node.js versions match the official recommendations.

- Community support: Issues can be submitted to GitHub Issues, or refer to HyperChatMCP.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
