HiveChat: the AI chatbot for rapid deployment within companies

General Introduction

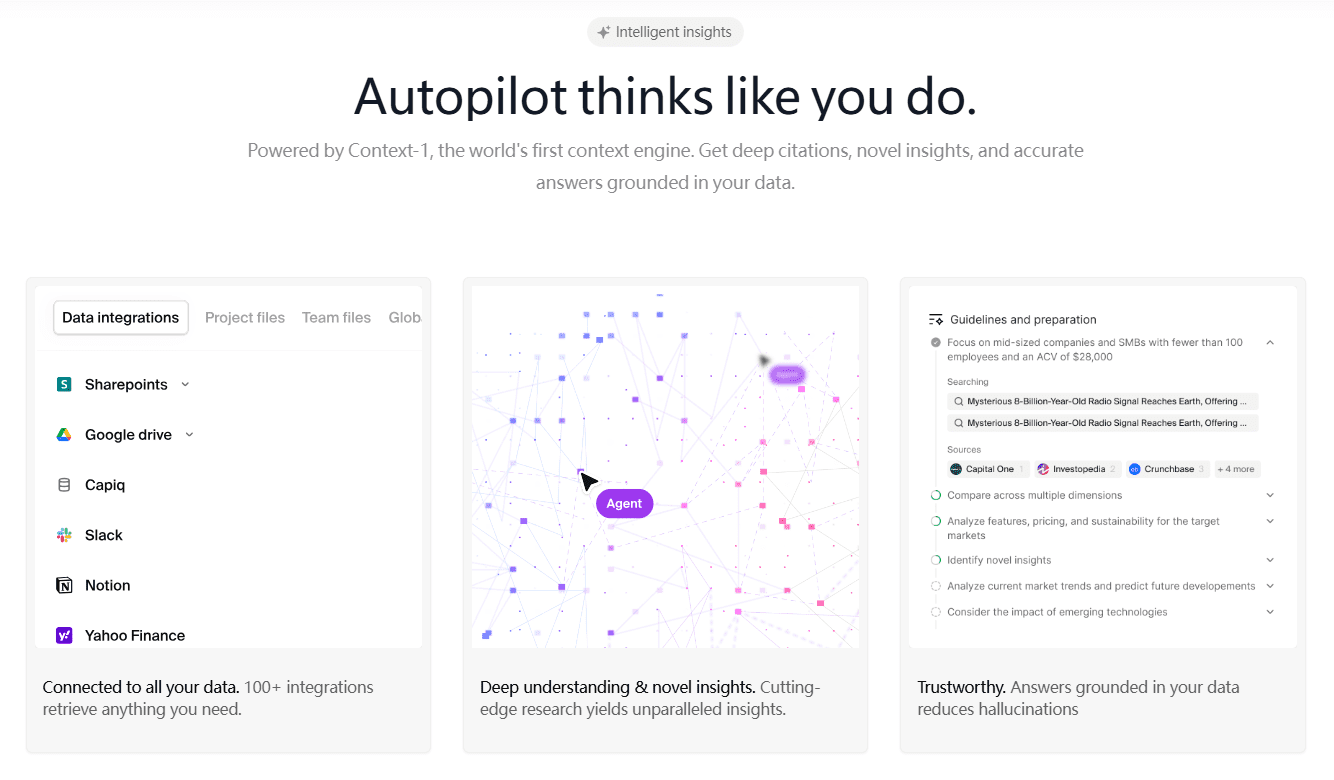

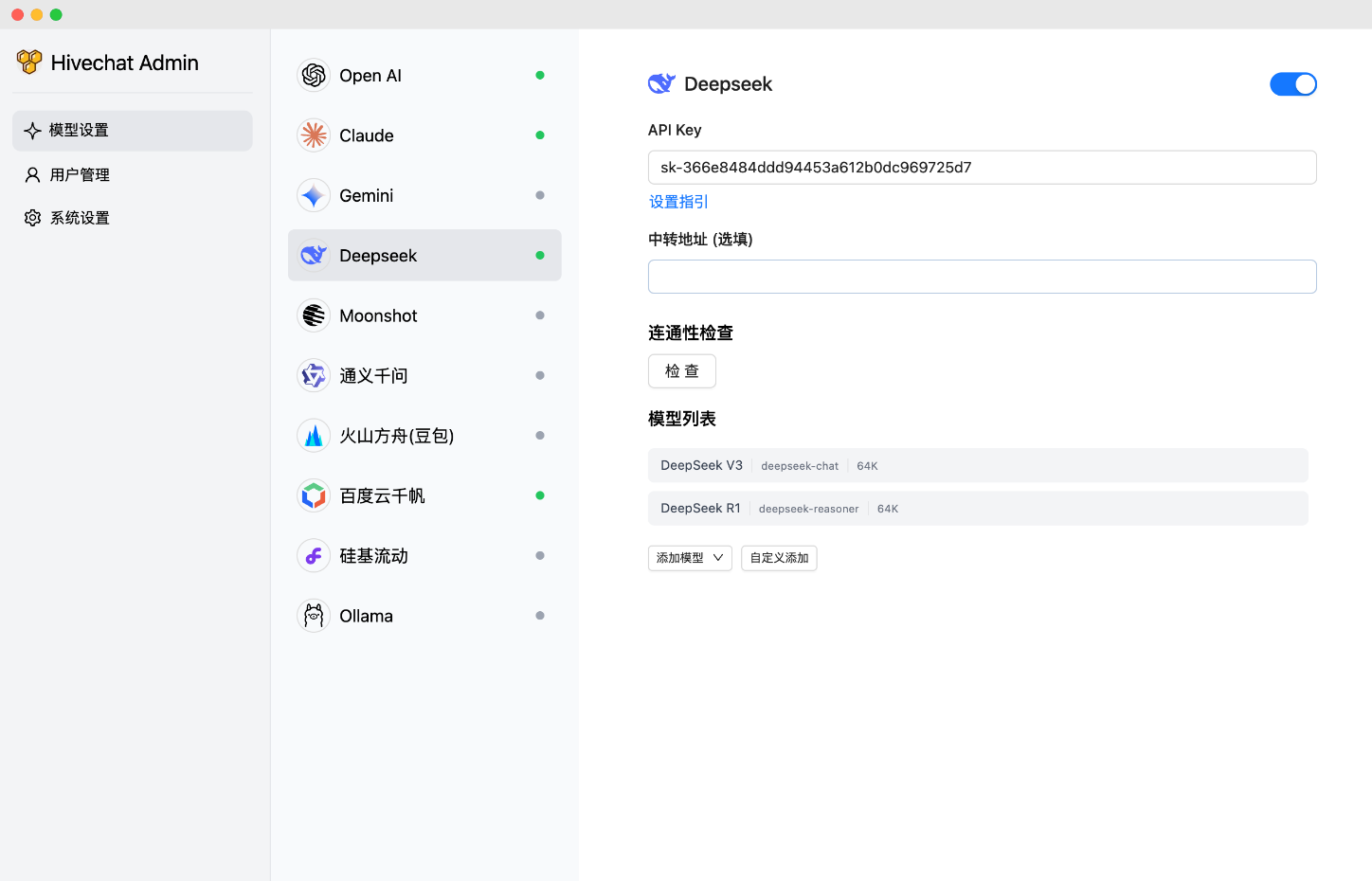

HiveChat is an AI chatbot for small and medium-sized teams. Administrators configure multiple AI models (such as DeepSeek, OpenAI, Claude, and Gemini) once, and all team members can then use them. It features LaTeX and Markdown rendering, display of the DeepSeek reasoning chain, image understanding, AI agents, and cloud data storage, and it supports 10 large model providers. The project is built on Next.js, Tailwind CSS, and PostgreSQL, and can be deployed locally, with Docker, or on Vercel.

Function List

- Supported AI Models: HiveChat supports 10 large model providers: DeepSeek, OpenAI, Claude, Gemini, Moonshot, Volcano Engine Ark, Ali Bailian (Qianwen), Baidu Qianfan, Ollama, and SiliconFlow. The list covers mainstream Chinese and international options, which suits globally distributed teams.

- Rendering and Display: Supports LaTeX and Markdown rendering, which is convenient for teams working with technical documents. The DeepSeek reasoning chain display helps users follow the model's reasoning process.

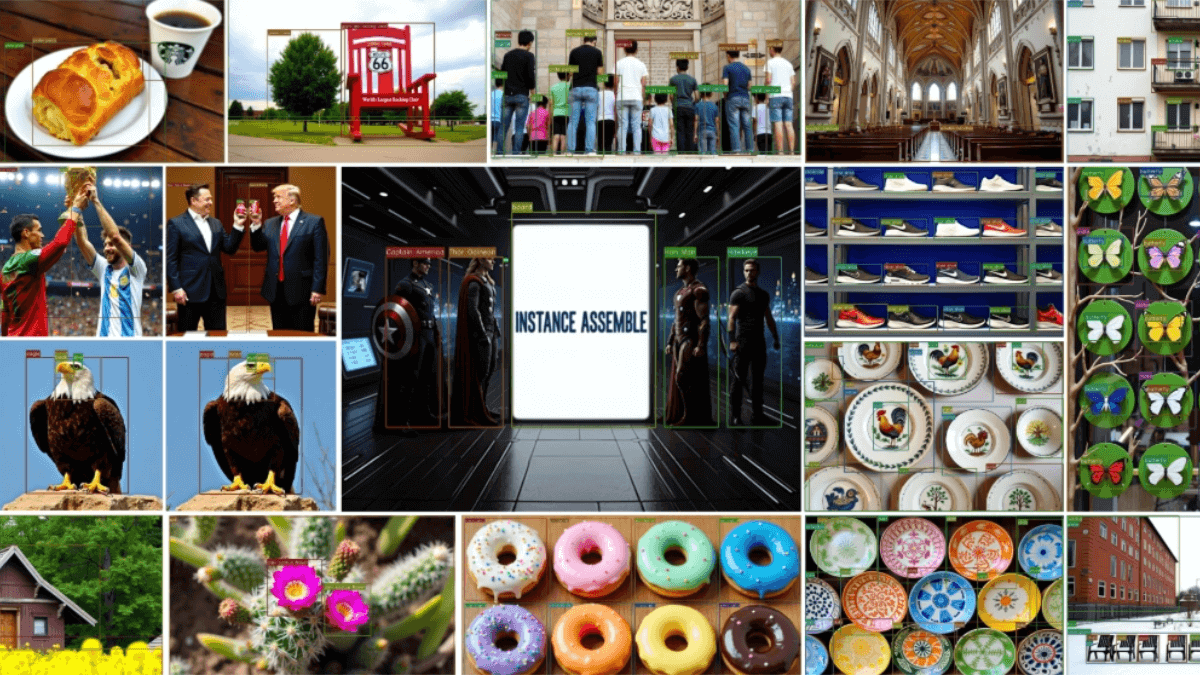

- Multimedia Support: Image understanding capabilities, suitable for vision-related tasks.

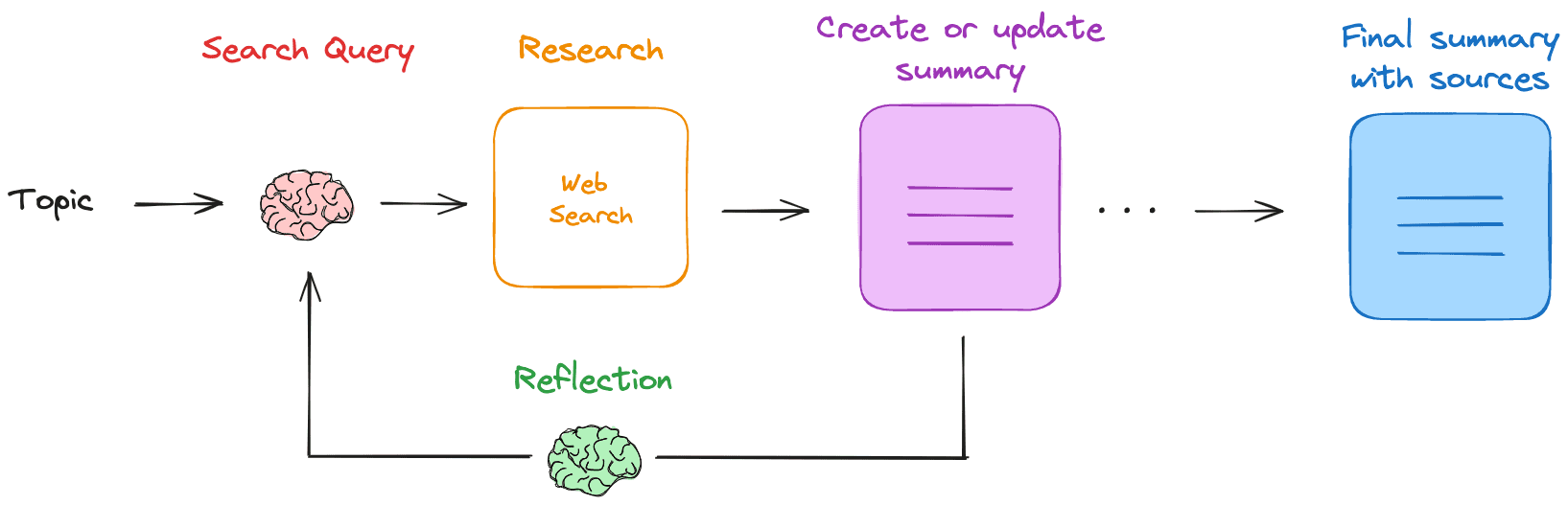

- AI Agent: Integrates AI agent functionality for enhanced automation.

- Data Management: Provides cloud data storage to ensure team data is secure and persistent.

Using Help

Technology Stacks and Deployment Options

HiveChat utilizes a modern front-end and back-end technology stack that includes:

| Technology | Description |
|---|---|
| Next.js | React framework used for server-side rendering of the application |
| Tailwind CSS | CSS framework for fast styling |
| Auth.js | Handles user authentication |
| PostgreSQL | Relational database used for data storage |
| Drizzle ORM | ORM tool for database operations |
| Ant Design | UI component library that enhances the user experience |

Deployment options include local deployment, Docker deployment, and Vercel deployment:

- Local deployment: Clone the repository, then run `npm install` to install dependencies and `npm run initdb` to initialize the database. Use `npm run dev` to start the development environment, or `npm run build` and `npm run start` for production.

- Docker deployment: Clone the repository, then run `docker compose build` and `docker compose up -d` to start the containerized service.

- Vercel deployment: Use the Vercel deployment link for one-click deployment. You need to configure environment variables such as DATABASE_URL, AUTH_SECRET and ADMIN_CODE.

Once the deployment is complete, the administrator will need to visit http://localhost:3000/setup (or the actual domain/port) to set up an administrator account.
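A missing environment variable is an easy mistake to make with any of the three deployment options. The following is a minimal TypeScript sketch of a pre-flight check, assuming only the three variable names mentioned above. The names `REQUIRED_VARS` and `findMissingVars` are hypothetical and are not part of HiveChat.

```typescript
// Variable names are taken from the article; the helper itself is a
// hypothetical illustration, not HiveChat code.
const REQUIRED_VARS = ["DATABASE_URL", "AUTH_SECRET", "ADMIN_CODE"] as const;

type Env = Record<string, string | undefined>;

// Return the names of required variables that are unset or blank.
function findMissingVars(env: Env): string[] {
  return REQUIRED_VARS.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Example: AUTH_SECRET is blank and ADMIN_CODE is not set at all.
const missing = findMissingVars({
  DATABASE_URL: "postgres://postgres:password@localhost/hivechat",
  AUTH_SECRET: "",
});
console.log(missing); // [ 'AUTH_SECRET', 'ADMIN_CODE' ]
```

In a Node.js process, the same function could be called with `process.env` before the server starts, so that a misconfigured deployment stops with a clear message.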

Detailed help for use

To help users get started with HiveChat quickly, here is a detailed installation and usage process:

Installation process

- Clone the repository:

  - Open a terminal and run `git clone https://github.com/HiveNexus/HiveChat.git`, then `cd HiveChat` to enter the project directory.

- Select a deployment method:

  - Local deployment:

    - Ensure that Node.js and PostgreSQL are installed.

    - Run `npm install` to install the dependencies.

    - Configure the `.env` file with the following environment variables:

      - `DATABASE_URL=postgres://postgres:password@localhost/hivechat` (the example points at a local PostgreSQL instance; replace it with your actual database connection string).

      - `AUTH_SECRET`: a random secret. `openssl rand -base64 32` generates a Base64-encoded string from 32 random bytes.

      - `ADMIN_CODE`: the administrator authorization code. The example value is `22113344`; it is recommended to replace it with your own value.

      - `NEXTAUTH_URL=http://127.0.0.1:3000` (the default can be kept for testing; in production, change it to the official domain name).

    - Run `npm run initdb` to initialize the database.

    - For development, run `npm run dev`. For production, run `npm run build` followed by `npm run start`.

  - Docker deployment:

    - Ensure that Docker and Docker Compose are installed.

    - Run `docker compose build` to build the image.

    - Run `docker compose up -d` to start the container.

    - Use the same environment variables as for local deployment, specified in `docker-compose.yml`.

  - Vercel deployment:

    - Open the Vercel deployment link.

    - Follow the prompts to configure DATABASE_URL, AUTH_SECRET, and ADMIN_CODE.

    - Click Deploy and wait for Vercel to finish building.

- Administrator initialization:

  - Once the deployment is complete, go to http://localhost:3000/setup (local deployment) or the actual domain name and enter ADMIN_CODE to set up the administrator account.
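The `AUTH_SECRET` and `DATABASE_URL` values from the local deployment step can be illustrated with a short TypeScript sketch. It assumes a Node.js runtime. The helper names `generateAuthSecret` and `describeDatabaseUrl` are hypothetical and are not part of HiveChat. The first mirrors what `openssl rand -base64 32` produces, and the second only shows which parts of the connection string mean what.

```typescript
import { randomBytes } from "node:crypto";

// Hypothetical helper: same idea as `openssl rand -base64 32`,
// i.e. 32 random bytes encoded as Base64 (44 characters).
function generateAuthSecret(): string {
  return randomBytes(32).toString("base64");
}

// Hypothetical shape for the parts of a connection string.
interface DatabaseTarget {
  user: string;
  host: string;
  port: string;
  database: string;
}

// Split a postgres:// connection string into its parts using the
// standard URL parser. The password is deliberately not returned.
function describeDatabaseUrl(databaseUrl: string): DatabaseTarget {
  const url = new URL(databaseUrl);
  if (url.protocol !== "postgres:" && url.protocol !== "postgresql:") {
    throw new Error(`Unexpected protocol: ${url.protocol}`);
  }
  return {
    user: decodeURIComponent(url.username),
    host: url.hostname,
    port: url.port || "5432", // 5432 is PostgreSQL's default port
    database: url.pathname.replace(/^\//, ""),
  };
}

console.log(generateAuthSecret().length); // 44
console.log(describeDatabaseUrl("postgres://postgres:password@localhost/hivechat"));
```

For the example URL from the article, the parsed target is user `postgres`, host `localhost`, and database `hivechat`, with the port falling back to the PostgreSQL default because the URL does not specify one.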

Usage

- Administrator operations:

  - Log in to the administrator dashboard to configure AI model providers (e.g., an OpenAI API key).

  - Manage all user accounts by manually adding users or enabling the registration feature.

  - View team usage statistics and adjust model configurations to optimize performance.

- General user operations:

  - Log in to access the chat interface, which supports text input and multimedia uploads (e.g., images).

  - Format chats using LaTeX and Markdown for technical discussions.

  - Select different AI models (e.g., DeepSeek or Claude) for a conversation, and view the DeepSeek reasoning chain to understand how the model reached its answer.

  - Data is automatically stored in the cloud, so session history can be viewed at any time.

- Featured functions:

  - Image understanding: Upload images and the AI analyzes their content and generates descriptions, which suits product design or data analysis teams.

  - AI Agent: Through configuration, the AI can automate specific tasks, such as generating reports or responding to frequently asked questions.

  - Cloud data storage: All chats and configurations are saved in the cloud and can be accessed by team members across devices.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
