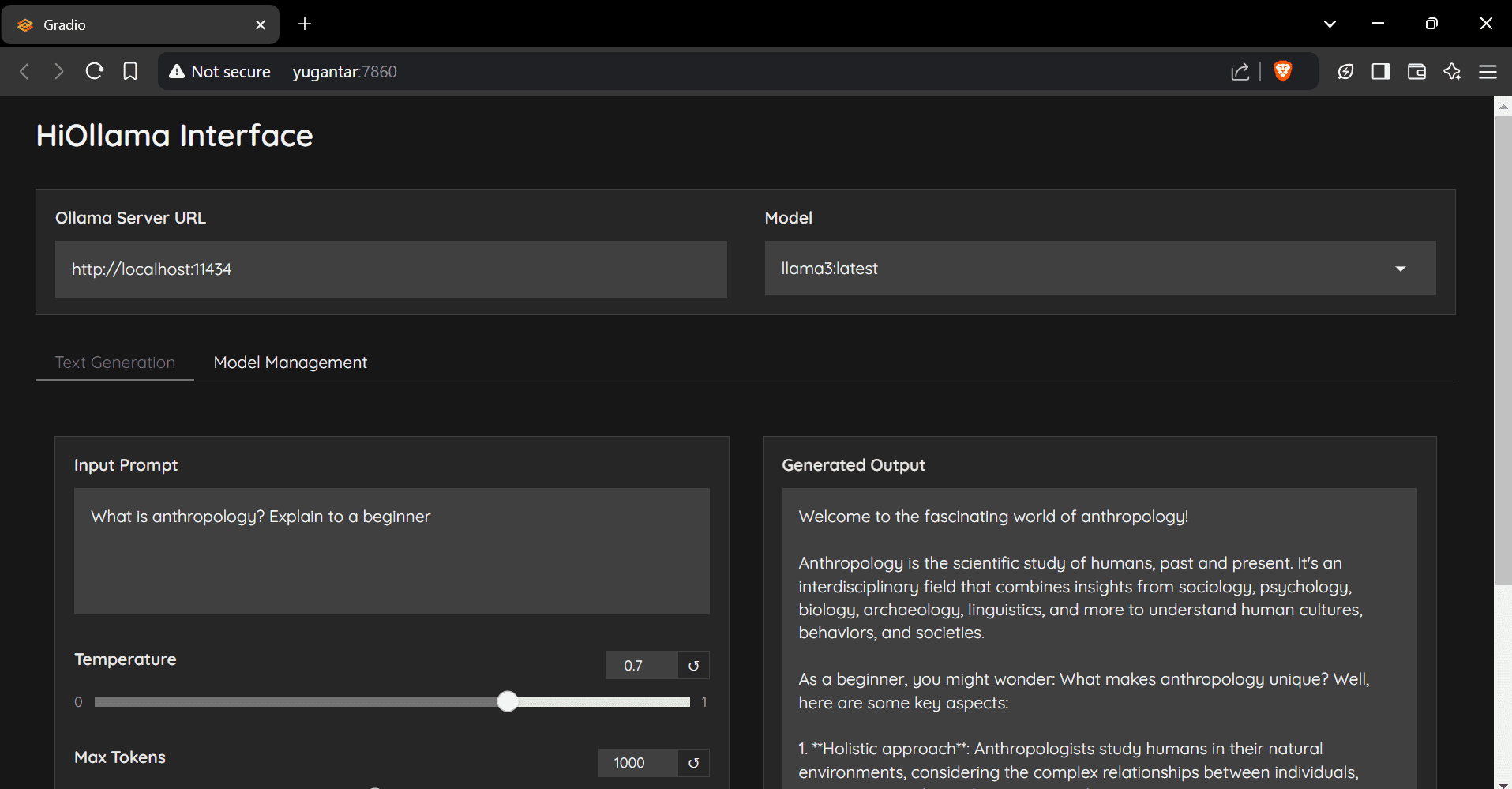

HiOllama: a clean chat interface for interacting with local Ollama models

General Introduction

HiOllama is a user-friendly interface, built with Python and Gradio, for interacting with Ollama models. It provides a simple, intuitive web interface with real-time text generation and model management. Users can adjust parameters such as temperature and the maximum number of tokens, manage multiple Ollama models, and configure a custom server URL.

Recommendation: integrating Ollama with Open WebUI gives a friendlier experience, but its deployment cost is slightly higher.

Features

- Simple and intuitive web interface

- Real-time text generation

- Adjustable parameters (temperature, maximum number of tokens)

- Model management

- Support for multiple Ollama models

- Custom server URL configuration

Usage Guide

Installation steps

- Clone the repository:

    git clone https://github.com/smaranjitghose/HiOllama.git
    cd HiOllama

- Create and activate a virtual environment:

  - Windows:

      python -m venv env
      .\env\Scripts\activate

  - Linux/Mac:

      python3 -m venv env
      source env/bin/activate

- Install the required packages:

    pip install -r requirements.txt

- Install Ollama (if not already installed):

  - Linux/Mac:

      curl -fsSL https://ollama.ai/install.sh | sh

  - Windows: install WSL2 first, then run the command above inside WSL2.
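To confirm that the installation steps took effect, a small check like the following can be run from the activated environment. It is a minimal sketch and not part of HiOllama: the function name check_prerequisites is hypothetical, and it assumes requirements.txt installs Gradio (which HiOllama is built on). It only inspects the local Python environment and PATH; it does not contact the Ollama server.

```python
import importlib.util
import shutil
import sys


def check_prerequisites() -> dict:
    """Report whether the installation steps appear to have taken effect."""
    return {
        # In an activated venv, sys.prefix points into the venv directory
        # while sys.base_prefix still points at the base interpreter.
        "in_virtualenv": sys.prefix != sys.base_prefix,
        # find_spec returns None when the package cannot be imported here
        # (assumption: requirements.txt installs Gradio).
        "gradio_installed": importlib.util.find_spec("gradio") is not None,
        # shutil.which returns None when the executable is not on PATH.
        "ollama_on_path": shutil.which("ollama") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```

On Windows, if Ollama was installed inside WSL2, run the check from the same WSL2 shell; otherwise ollama_on_path will report MISSING.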

How to use

- Start the Ollama service:

    ollama serve

- Run HiOllama:

    python main.py

- Open your browser and navigate to:

    http://localhost:7860
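Before opening the browser, it can help to confirm that the Ollama server started in the first step is answering. The sketch below queries Ollama's /api/tags endpoint, which lists locally pulled models, at the default address http://localhost:11434. The helper name list_local_models is hypothetical and not taken from HiOllama's code; only the Python standard library is used.

```python
import json
import urllib.request


def list_local_models(base_url: str = "http://localhost:11434",
                      timeout: float = 2.0):
    """Return the names of locally pulled models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError):
        # URLError, refused connections and timeouts are all OSError subclasses;
        # ValueError covers a non-JSON reply from some other service on that port.
        return None
    return [m["name"] for m in data.get("models", [])]


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama is not reachable: is `ollama serve` running?")
    else:
        print("Local models:", ", ".join(models) or "(none pulled yet)")
```

A None result corresponds to the connection error described under common problems; an empty list means the server is up but no model has been pulled yet.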

Quick Start Guide

- Select a model from the drop-down menu.

- Enter a prompt in the text area.

- Adjust the temperature and maximum number of tokens as needed.

- Click "Generate" to get the response.

- Use the Model Management option to pull in new models.
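Clicking "Generate" ultimately asks the Ollama server for a completion. Assuming HiOllama forwards the prompt to Ollama's /api/generate endpoint (its actual code may differ, for example by streaming tokens for real-time output), the request looks roughly like this: the temperature control maps to options.temperature and the maximum number of tokens to options.num_predict. The function names and the defaults 0.7 and 256 are illustrative assumptions, not HiOllama's settings, and this sketch uses a single non-streaming request for simplicity.

```python
import json
import urllib.request


def build_generate_payload(model: str, prompt: str,
                           temperature: float = 0.7, max_tokens: int = 256) -> dict:
    """Map the temperature / max-tokens controls onto Ollama's request fields."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON reply instead of streamed chunks
        "options": {
            "temperature": temperature,
            "num_predict": max_tokens,  # Ollama's name for the token limit
        },
    }


def generate(prompt: str, model: str = "llama3",
             base_url: str = "http://localhost:11434",
             temperature: float = 0.7, max_tokens: int = 256,
             timeout: float = 120.0) -> str:
    """Send one non-streaming generation request and return the generated text."""
    body = json.dumps(build_generate_payload(model, prompt, temperature, max_tokens))
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # A non-streaming reply carries the full text in its "response" field.
        return json.load(resp)["response"]
```

For example, generate("Explain WSL2 in one sentence.", model="llama3") returns the reply as a string, provided the model has been pulled and ollama serve is running.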

Configuration

The default settings can be modified in main.py:

DEFAULT_OLLAMA_URL = "http://localhost:11434"

DEFAULT_MODEL_NAME = "llama3"
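When changing DEFAULT_MODEL_NAME, the model must already be pulled, or the "model not found" error listed under common problems appears. Ollama treats a name without a tag, such as llama3, as llama3:latest, so comparing against the names printed by ollama list should normalize the tag first. A minimal sketch; the helper names normalize_model_name and is_model_pulled are hypothetical, not part of HiOllama:

```python
from typing import Iterable

DEFAULT_MODEL_NAME = "llama3"  # same value as the default in main.py


def normalize_model_name(name: str) -> str:
    """Append Ollama's implicit ':latest' tag when no tag is given."""
    # Look for a tag only in the last path segment, so a registry host
    # with a port (e.g. "host:5000/model") is not mistaken for a tag.
    last_segment = name.rsplit("/", 1)[-1]
    return name if ":" in last_segment else f"{name}:latest"


def is_model_pulled(name: str, local_models: Iterable[str]) -> bool:
    """True if `name` matches one of the locally available model names."""
    wanted = normalize_model_name(name)
    return any(normalize_model_name(m) == wanted for m in local_models)


if __name__ == "__main__":
    # Example names as they might appear in the NAME column of `ollama list`.
    pulled = ["llama3:latest", "mistral:7b"]
    print(is_model_pulled(DEFAULT_MODEL_NAME, pulled))  # True
```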

Common problems

- Connection error: make sure Ollama is running (ollama serve), check that the server URL is correct, and make sure port 11434 is accessible.

- Model not found: pull the model first with ollama pull model_name, and check which models are available with ollama list.

- Port conflict: change the port in the app.launch() call in main.py:

    app.launch(server_port=7860)  # change to a different port
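Instead of choosing a replacement port by hand, one option is to probe for a free port and pass it to app.launch. The sketch below tries to bind each port from 7860 upward on 127.0.0.1 and returns the first one that succeeds. find_free_port is a hypothetical helper, not part of HiOllama or Gradio, and a port can still be taken between the probe and the launch, so this is a convenience rather than a guarantee.

```python
import socket


def find_free_port(start: int = 7860, attempts: int = 20,
                   host: str = "127.0.0.1") -> int:
    """Return the first port in [start, start + attempts) that can be bound."""
    end = min(start + attempts, 65536)  # stay within the valid port range
    for port in range(start, end):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            try:
                sock.bind((host, port))
            except OSError:
                continue  # already in use (or not permitted); try the next one
            return port  # the probe socket is closed when the with-block exits
    raise RuntimeError(f"no free port found in {start}-{end - 1}")
```

In main.py this would become app.launch(server_port=find_free_port()). If the app is launched on a host other than 127.0.0.1, pass the same address as host so the probe checks the right interface.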

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
