HealthGPT: A Large Medical Vision-Language Model for Medical Image Analysis and Diagnostic Q&A

General Introduction

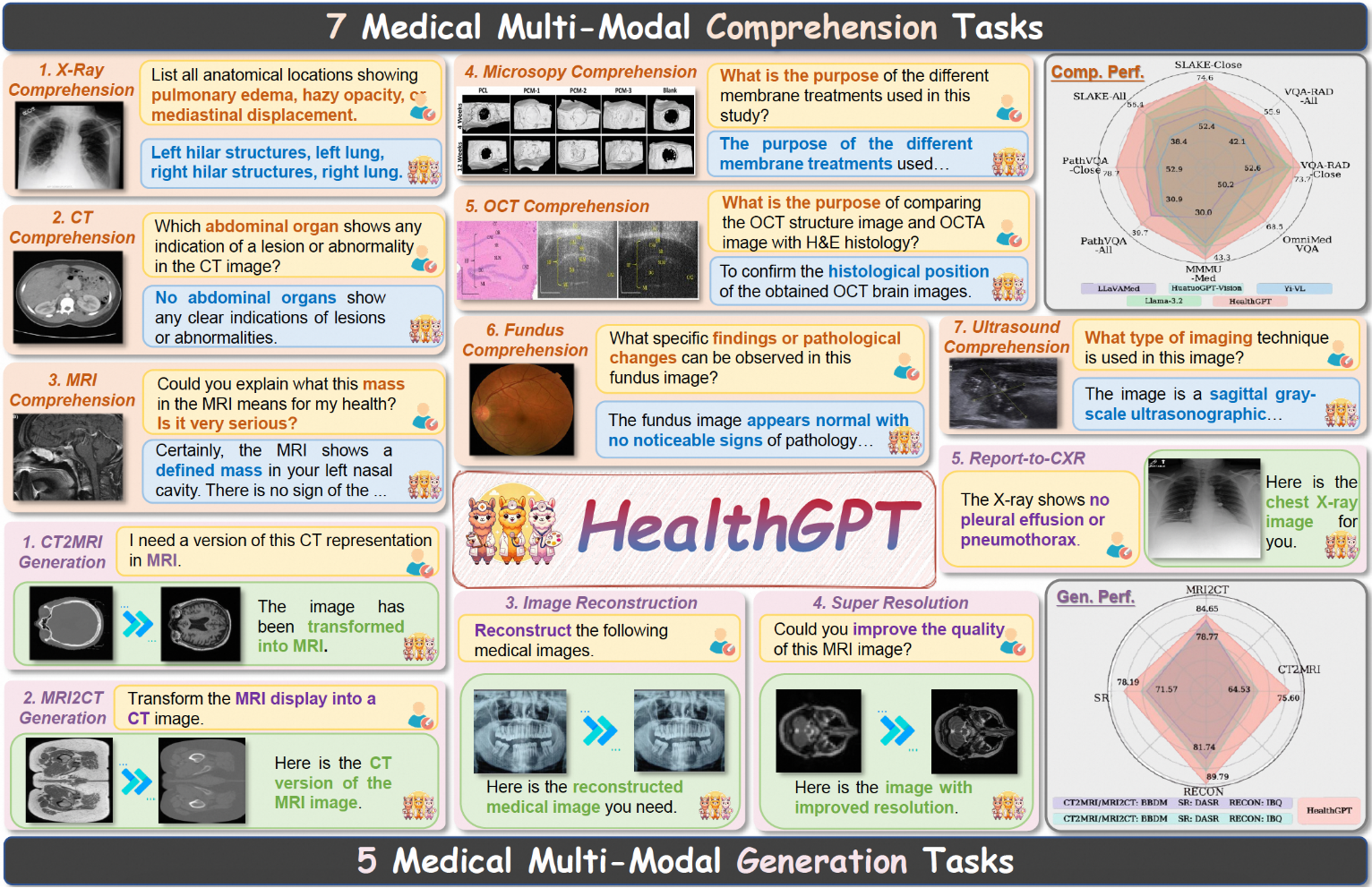

HealthGPT is a state-of-the-art medical large vision-language model. It aims to unify medical visual understanding and generation through heterogeneous knowledge adaptation. The project integrates both capabilities into a single autoregressive framework, with the goal of making medical image processing more efficient and accurate. HealthGPT supports a wide range of medical comprehension and generation tasks and is designed to perform well across many medical imaging scenarios. The project is jointly developed by Zhejiang University, the University of Electronic Science and Technology of China, Alibaba, the Hong Kong University of Science and Technology, the National University of Singapore, and other organizations, and has both research and practical value.

Function List

- Medical Visual Q&A: Supports a wide range of medical image Q&A tasks, answering users' medical questions about images.

- Medical Image Generation: Generates high-quality medical images to assist medical diagnosis and research.

- Task coverage: Supports 7 types of medical understanding tasks and 5 types of medical generation tasks, covering a wide range of medical application scenarios.

- Model architecture: Combines hierarchical visual perception with H-LoRA (heterogeneous low-rank adaptation) plugins, selecting the visual features and H-LoRA plugin suited to each task before generating textual or visual content.

- Multiple model versions: Two configurations, HealthGPT-M3 and HealthGPT-L14, are provided to suit different needs and compute budgets.
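The task-dependent selection described under "Model architecture" can be pictured with a minimal Python sketch. It assumes that understanding tasks draw on deeper, more abstract ViT layers and that generation tasks draw on shallower, more detailed ones. The layer indices, the `route` function, and the plugin names are all hypothetical. They illustrate the idea only and are not HealthGPT's actual code.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical ViT layer indices (0-based, assuming 24 layers as in ViT-L/14):
# shallow layers keep concrete detail, deep layers carry abstract semantics.
SHALLOW_LAYERS: Tuple[int, ...] = (4, 8)
DEEP_LAYERS: Tuple[int, ...] = (20, 23)

# Hypothetical plugin names, echoing the two H-LoRA weight files in this guide.
PLUGINS: Dict[str, str] = {"understanding": "com_hlora", "generation": "gen_hlora"}


def route(task: str, layer_features: Sequence[List[float]]) -> Dict[str, object]:
    """Select visual features and an H-LoRA plugin according to the task type."""
    if task not in PLUGINS:
        raise ValueError(f"unknown task type: {task!r}")
    layers = DEEP_LAYERS if task == "understanding" else SHALLOW_LAYERS
    return {
        "plugin": PLUGINS[task],
        "layers": layers,
        "features": [layer_features[i] for i in layers],
    }


if __name__ == "__main__":
    dummy = [[float(i)] for i in range(24)]  # one toy feature vector per layer
    print(route("understanding", dummy))
```

In the sketch, the plugin and the feature layers are chosen together from the same task label. That mirrors the description's point that both choices depend on the task type.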

Usage Guide

Installation

- Preparing the environment

First, clone the project and create a Python environment:

```bash
git clone https://github.com/DCDmllm/HealthGPT.git
cd HealthGPT
conda create -n HealthGPT python=3.10
conda activate HealthGPT
pip install -r requirements.txt
```
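After activating the environment, you can run a quick check to confirm that the interpreter matches the `python=3.10` requested in the `conda create` command. This is a minimal sketch. `check_python` is a hypothetical helper and is not part of the repository.

```python
import sys

REQUIRED = (3, 10)  # matches `conda create -n HealthGPT python=3.10`


def check_python(version_info=sys.version_info, required=REQUIRED) -> bool:
    """Return True if the interpreter's major.minor version equals `required`."""
    return tuple(version_info[:2]) == required


if __name__ == "__main__":
    if check_python():
        print("Python version OK")
    else:
        print(f"Expected Python {REQUIRED[0]}.{REQUIRED[1]}, got {sys.version.split()[0]}")
```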

- Preparing pre-trained weights

HealthGPT uses `clip-vit-large-patch14-336` as its visual encoder. HealthGPT-M3 and HealthGPT-L14 are built on the pre-trained `Phi-3-mini-4k-instruct` and `phi-4` models, respectively.
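To keep the two variants straight, you can record the mapping in a small lookup table. This is an illustrative sketch, and `VariantConfig`, `VARIANTS` and `get_variant` are hypothetical names. The identifiers `microsoft/Phi-3-mini-4k-instruct` and `openai/clip-vit-large-patch14-336` come from the inference commands in this guide. Using `microsoft/phi-4` as the Hugging Face identifier for the L14 base model is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantConfig:
    base_model: str      # Hugging Face identifier of the pre-trained LLM
    vision_encoder: str  # visual encoder shared by both variants


# "microsoft/phi-4" for HealthGPT-L14 is an assumed identifier.
VARIANTS = {
    "HealthGPT-M3": VariantConfig("microsoft/Phi-3-mini-4k-instruct",
                                  "openai/clip-vit-large-patch14-336"),
    "HealthGPT-L14": VariantConfig("microsoft/phi-4",
                                   "openai/clip-vit-large-patch14-336"),
}


def get_variant(name: str) -> VariantConfig:
    """Look up a variant by name, with a readable error for typos."""
    try:
        return VARIANTS[name]
    except KeyError:
        raise ValueError(f"unknown variant {name!r}; choose from {sorted(VARIANTS)}") from None
```

A frozen dataclass keeps the table read-only. Code that later picks `--model_name_or_path` can then rely on one source of truth.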

Download the required model weights and place them in the appropriate directory:

- ViT model: download link

- HealthGPT-M3 base model: download link

- HealthGPT-L14 base model: download link

- VQGAN model: download link
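Before running inference, it helps to confirm that every download landed where you expect. The guide does not specify a directory layout, so the `weights/...` paths below are hypothetical placeholders. The `missing_weights` helper is also hypothetical.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical local layout: adjust to wherever you placed the downloads.
# If you use HealthGPT-L14, swap in the path of its base model instead.
EXPECTED: Dict[str, Path] = {
    "ViT model": Path("weights/clip-vit-large-patch14-336"),
    "HealthGPT-M3 base model": Path("weights/Phi-3-mini-4k-instruct"),
    "VQGAN model": Path("weights/vqgan"),
}


def missing_weights(expected: Dict[str, Path], root: Path = Path(".")) -> List[str]:
    """Return the names of expected weight locations that do not exist under root."""
    return [name for name, rel in expected.items() if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_weights(EXPECTED)
    print("All weights found" if not missing else f"Missing: {', '.join(missing)}")
```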

- Preparing H-LoRA and Adapter Weights

Download the H-LoRA weights, which provide the model's medical visual understanding and generation capabilities, and place them in the appropriate directory. The full weights will be released soon.
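H-LoRA builds on low-rank adaptation (LoRA). For orientation, the sketch below shows plain single-adapter LoRA. Here a frozen weight `W` is adjusted by a low-rank product `B A`, scaled by `alpha / r`. The sketch does not model H-LoRA's multiple plugins (`--hlora_nums`) or its task routing. Whether H-LoRA uses exactly this scaling is an assumption.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, r: int, alpha: float) -> Vector:
    """Standard LoRA: y = W x + (alpha / r) * B (A x).

    Shapes: W is d_out x d_in (frozen), A is r x d_in, B is d_out x r (trainable).
    """
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / r
    return [y0 + scale * d for y0, d in zip(base, delta)]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(lora_forward(identity, [[1.0, 1.0]], [[1.0], [0.0]], [1.0, 2.0], r=1, alpha=2.0))
```

If the standard `alpha / r` convention applies, both `--hlora_r`/`--hlora_alpha` pairs in this guide give a scale of 2. The understanding command uses 64/128 and the generation command uses 256/512.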

Inference

Medical Visual Q&A

- Download the necessary files

- Update the script paths

In the `llava/demo/com_infer.sh` script, set the following variables to the paths of the downloaded files:

- `MODEL_NAME_OR_PATH`: base model path or identifier

- `VIT_PATH`: path to the Vision Transformer (ViT) weights

- `HLORA_PATH`: path to the H-LoRA weights for visual understanding

- `FUSION_LAYER_PATH`: path to the fusion layer weights

- Running Scripts

```bash
cd llava/demo
bash com_infer.sh
```

You can also run the Python script directly:

```bash
python3 com_infer.py \
  --model_name_or_path "microsoft/Phi-3-mini-4k-instruct" \
  --dtype "FP16" \
  --hlora_r "64" \
  --hlora_alpha "128" \
  --hlora_nums "4" \
  --vq_idx_nums "8192" \
  --instruct_template "phi3_instruct" \
  --vit_path "openai/clip-vit-large-patch14-336/" \
  --hlora_path "path/to/your/local/com_hlora_weights.bin" \
  --fusion_layer_path "path/to/your/local/fusion_layer_weights.bin" \
  --question "Your question" \
  --img_path "path/to/image.jpg"
```
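If you drive inference from Python rather than from the shell script, you can assemble the command above as an argument list. This is a sketch. `build_com_infer_cmd` and `DEFAULTS` are hypothetical names, and the flag names and default values are copied from the command above. The example question is made up.

```python
import shlex
import subprocess
from typing import Dict, List

# Flag names and M3 defaults copied from the com_infer.py command in this guide.
DEFAULTS: Dict[str, str] = {
    "model_name_or_path": "microsoft/Phi-3-mini-4k-instruct",
    "dtype": "FP16",
    "hlora_r": "64",
    "hlora_alpha": "128",
    "hlora_nums": "4",
    "vq_idx_nums": "8192",
    "instruct_template": "phi3_instruct",
    "vit_path": "openai/clip-vit-large-patch14-336/",
}


def build_com_infer_cmd(hlora_path: str, fusion_layer_path: str,
                        question: str, img_path: str, **overrides: str) -> List[str]:
    """Assemble the argv list for com_infer.py; keyword overrides replace defaults."""
    args = {**DEFAULTS, **overrides,
            "hlora_path": hlora_path, "fusion_layer_path": fusion_layer_path,
            "question": question, "img_path": img_path}
    cmd = ["python3", "com_infer.py"]
    for flag, value in args.items():
        cmd += [f"--{flag}", value]
    return cmd


if __name__ == "__main__":
    cmd = build_com_infer_cmd("path/to/your/local/com_hlora_weights.bin",
                              "path/to/your/local/fusion_layer_weights.bin",
                              "What abnormality is visible in this image?",
                              "path/to/image.jpg")
    print(shlex.join(cmd))  # printable form, for inspection only
    subprocess.run(cmd, cwd="llava/demo", check=True)  # run from the repository root
```

Passing the arguments as a list, rather than as one shell string, avoids quoting problems when the question contains spaces or punctuation.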

Image Reconstruction

Set `HLORA_PATH` to the path of the `gen_hlora_weights.bin` file, configure the other model paths, and run:

```bash
cd llava/demo
bash gen_infer.sh
```

You can also run the following Python command directly:

```bash
python3 gen_infer.py \
  --model_name_or_path "microsoft/Phi-3-mini-4k-instruct" \
  --dtype "FP16" \
  --hlora_r "256" \
  --hlora_alpha "512" \
  --hlora_nums "4" \
  --vq_idx_nums "8192" \
  --instruct_template "phi3_instruct" \
  --vit_path "openai/clip-vit-large-patch14-336/" \
  --hlora_path "path/to/your/local/gen_hlora_weights.bin" \
  --fusion_layer_path "path/to/your/local/fusion_layer_weights.bin" \
  --question "Reconstruct the image." \
  --img_path "path/to/image.jpg" \
  --save_path "path/to/save.jpg"
```
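Switching from Q&A to reconstruction changes several settings at once. The dictionary below collects the differences visible in the two commands of this guide. The hypothetical `check_run` helper flags a generation run that still points at the understanding weights or lacks `--save_path`. The exact-filename check is an assumption, so relax it if you rename the weight files.

```python
from pathlib import Path
from typing import Dict, Optional

# Values copied from the com_infer.py and gen_infer.py commands in this guide.
MODES: Dict[str, dict] = {
    "understanding": {"script": "com_infer.py", "hlora_r": 64, "hlora_alpha": 128,
                      "hlora_file": "com_hlora_weights.bin", "needs_save_path": False},
    "generation": {"script": "gen_infer.py", "hlora_r": 256, "hlora_alpha": 512,
                   "hlora_file": "gen_hlora_weights.bin", "needs_save_path": True},
}


def lora_scale(mode: str) -> float:
    """alpha / r, the standard LoRA scaling factor (assumed, not confirmed, for H-LoRA)."""
    cfg = MODES[mode]
    return cfg["hlora_alpha"] / cfg["hlora_r"]


def check_run(mode: str, hlora_path: str, save_path: Optional[str] = None) -> None:
    """Raise ValueError if the weight file or save path does not fit the chosen mode."""
    cfg = MODES[mode]
    name = Path(hlora_path).name
    if name != cfg["hlora_file"]:
        raise ValueError(f"{mode} run expects {cfg['hlora_file']}, got {name}")
    if cfg["needs_save_path"] and not save_path:
        raise ValueError(f"{mode} run needs --save_path")


if __name__ == "__main__":
    check_run("generation", "path/to/your/local/gen_hlora_weights.bin", "path/to/save.jpg")
    print(MODES["generation"]["script"], lora_scale("generation"))
```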

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
