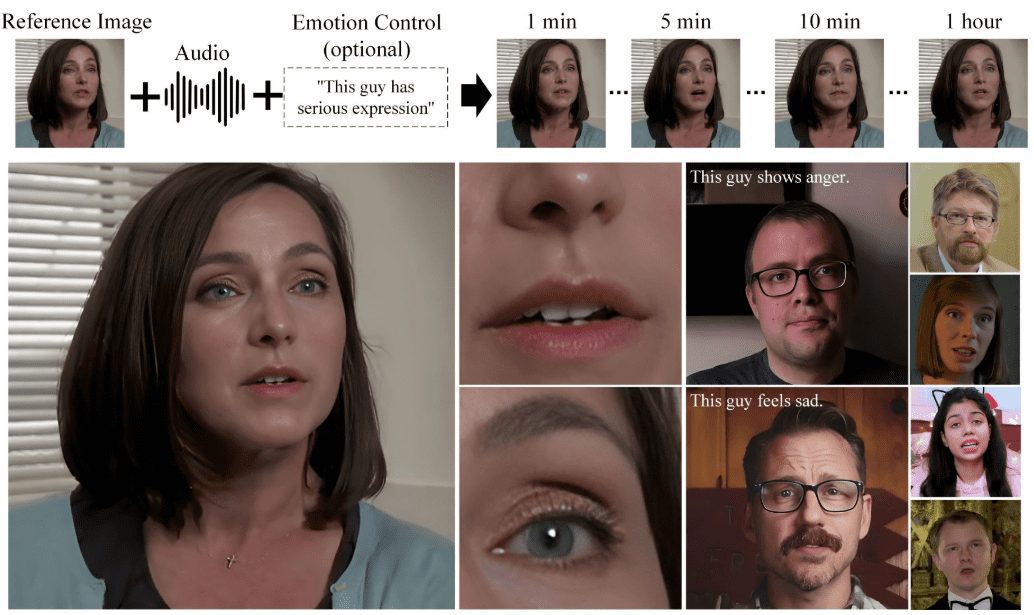

Hallo2: audio-driven generation of lip-synchronized/expression-synchronized portrait videos (Windows one-click installation)

General Introduction

Hallo2 is an open-source project jointly developed by Fudan University and Baidu that generates high-resolution portrait animations driven by audio. The project combines a diffusion-based generative model with temporal alignment techniques to produce video at up to 4K resolution and up to one hour in length. Hallo2 also supports text prompts, which increase the diversity and controllability of the generated content.

Hallo3 has since been released. It improves lip synchronization by introducing a cross-attention mechanism for audio conditioning, which captures the relationship between audio signals and facial expressions.

Note that Hallo3 has the following requirements on the input data for inference:

- Reference image: the reference image must have an aspect ratio of 1:1 or 3:2.

- Driving audio: the driving audio must be in WAV format.

- Audio language: the audio must be in English, as the model's training dataset contains only this language.

- Audio clarity: ensure that the vocals are clear in the audio; background music is acceptable.
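The aspect-ratio and WAV requirements above can be checked before starting a long inference run. The following is a minimal sketch, not part of the Hallo project: the function names `aspect_ratio_ok` and `is_wav` are hypothetical, the ratio is assumed to mean width:height, and the tolerance value is an arbitrary choice. It uses only the Python standard library, so the image dimensions have to be supplied by the caller.

```python
import wave
from fractions import Fraction

# Aspect ratios the article lists as acceptable for the reference image.
# Assumption: the ratio is interpreted as width:height.
ALLOWED_RATIOS = (Fraction(1, 1), Fraction(3, 2))


def aspect_ratio_ok(width: int, height: int, tolerance: float = 0.01) -> bool:
    """Return True if width:height is within `tolerance` of 1:1 or 3:2."""
    if width <= 0 or height <= 0:
        return False
    ratio = width / height
    return any(abs(ratio - float(r)) <= tolerance for r in ALLOWED_RATIOS)


def is_wav(path: str) -> bool:
    """Return True if the standard-library `wave` module can open the file.

    Note: `wave` only reads uncompressed PCM WAV files, so this check is
    stricter than "has a .wav extension".
    """
    try:
        with wave.open(path, "rb") as wav_file:
            return wav_file.getnframes() >= 0
    except (wave.Error, EOFError, OSError):
        return False
```

For example, `aspect_ratio_ok(768, 512)` accepts a 3:2 image, while a 1920x1080 frame would be rejected and should be cropped first.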

Function List

- Audio Driven Animation Generation: Generate corresponding portrait animations from input audio files.

- High Resolution Support: Support for generating videos with 4K resolution to ensure clear picture quality.

- Long video generation: Can generate video content up to 1 hour long.

- Text prompt enhancement: Control the expressions and actions of the generated portrait with semantic text labels.

- Open source: Full source code and pre-trained models are provided to facilitate secondary development.

- Multi-platform support: Supports running on multiple platforms such as Windows, Linux, etc.

Usage Guide

Installation process

- System requirements:

- Operating system: Ubuntu 20.04/22.04

- GPU: Graphics card supporting CUDA 11.8 (e.g. A100)

- Create a virtual environment:

    conda create -n hallo python=3.10
    conda activate hallo

- Install dependencies:

    pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 --index-url https://download.pytorch.org/whl/cu118
    pip install -r requirements.txt
    sudo apt-get install ffmpeg

- Download the pre-trained models:

    git lfs install
    git clone https://huggingface.co/fudan-generative-ai/hallo2 pretrained_models
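Once the installation steps are done, a quick check can confirm that the tools they install are visible from the active environment. This is a minimal sketch using only the standard library; `check_environment` is a hypothetical helper, not something shipped with Hallo2, and it only tests for presence, not for the exact versions pinned above.

```python
import importlib.util
import shutil
import sys


def check_environment() -> dict:
    """Report whether the prerequisites from the installation steps are visible."""
    return {
        # The installation steps create the environment with python=3.10.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # find_spec only checks that the package can be located; it does not import it.
        "torch_installed": importlib.util.find_spec("torch") is not None,
        # ffmpeg and git-lfs are looked up on PATH, as a shell would do.
        "ffmpeg_on_path": shutil.which("ffmpeg") is not None,
        "git_lfs_on_path": shutil.which("git-lfs") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

Any entry reported as missing points back to the corresponding installation step.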

Usage Process

- Prepare the input data:

- Download and prepare the required pre-trained model.

- Prepare the source image and driving audio files.

- Run the inference script:

    python scripts/inference.py --source_image path/to/image --driving_audio path/to/audio

- View the generated results:

- The generated video file will be saved in the specified output directory and can be viewed using any video player.
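When several image and audio pairs need processing, the inference command can be wrapped in a small Python helper. The sketch below assumes the script path and the two flags exactly as quoted in this article (`scripts/inference.py`, `--source_image`, `--driving_audio`); the function names and the `repo_dir` parameter are hypothetical, and the actual repository may expose different options.

```python
import subprocess
import sys


def build_inference_command(source_image: str, driving_audio: str) -> list[str]:
    """Build the argument list for the inference command quoted in the article."""
    return [
        sys.executable,  # use the interpreter of the active environment
        "scripts/inference.py",
        "--source_image", source_image,
        "--driving_audio", driving_audio,
    ]


def run_inference(source_image: str, driving_audio: str, repo_dir: str = ".") -> int:
    """Run the command from the repository root and return its exit code."""
    command = build_inference_command(source_image, driving_audio)
    # check=False: the caller inspects the exit code instead of catching an exception.
    completed = subprocess.run(command, cwd=repo_dir, check=False)
    return completed.returncode
```

Passing the arguments as a list avoids shell quoting problems with paths that contain spaces.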

Detailed steps

- Download the code:

    git clone https://github.com/fudan-generative-vision/hallo2
    cd hallo2

- Create and activate a virtual environment:

    conda create -n hallo python=3.10
    conda activate hallo

- Install the necessary Python packages:

    pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 --index-url https://download.pytorch.org/whl/cu118
    pip install -r requirements.txt

- Install ffmpeg:

    sudo apt-get install ffmpeg

- Download the pre-trained models:

    git lfs install
    git clone https://huggingface.co/fudan-generative-ai/hallo2 pretrained_models

- Run the inference script:

    python scripts/inference.py --source_image path/to/image --driving_audio path/to/audio

- View the generated results:

- The generated video file will be saved in the specified output directory and can be viewed using any video player.
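After a run finishes, the newest video in the output directory is usually the one just generated. The following sketch locates it; `latest_video` is a hypothetical helper, and the `.mp4` suffix is an assumption, since the article does not state the output format.

```python
from pathlib import Path
from typing import Optional


def latest_video(output_dir: str, suffix: str = ".mp4") -> Optional[Path]:
    """Return the most recently modified file with the given suffix, or None."""
    # rglob searches the output directory and all of its subdirectories.
    candidates = [p for p in Path(output_dir).rglob(f"*{suffix}") if p.is_file()]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

The returned path can then be opened in any video player.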

Hallo2: One-Click Installer for Windows

https://pan.quark.cn/s/aa9fc15a786f

Extraction code: 51XY

