Grok 3 Benchmark Data 'Inflated'? OpenAI Employee Says xAI May Be Misrepresenting Performance

The debate over AI benchmarking and how AI labs publish their results is becoming increasingly public. AI performance has long been measured and reported in controversial ways, and now these behind-the-scenes debates are finally coming into the spotlight.

This week, an OpenAI employee publicly accused xAI, the AI company founded by Elon Musk, of publishing misleading benchmark results to promote its latest AI model, Grok 3. Igor Babuschkin, one of xAI's co-founders, responded quickly to the accusation, insisting that there was nothing wrong with xAI's approach. The public dispute has pushed the issue of transparency in AI performance evaluation to the forefront.

But the truth, perhaps, lies somewhere between the two sides. As in many technical disputes, it takes some digging into the details to see where.

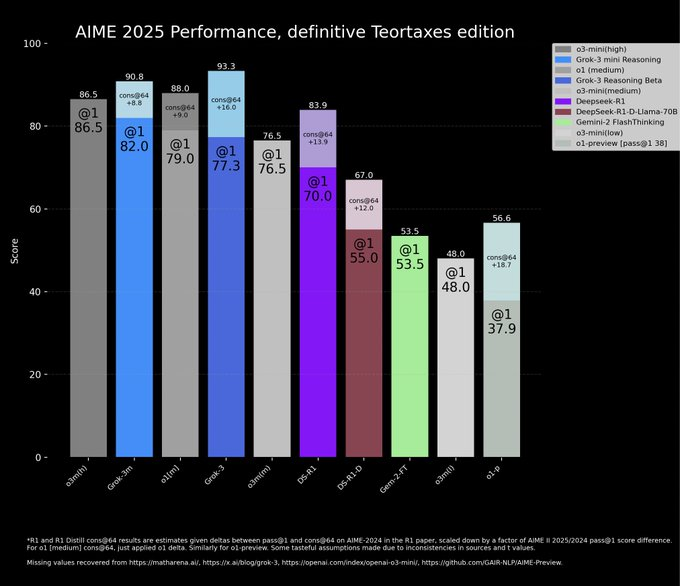

In a post on its official blog, xAI published a chart illustrating Grok 3's performance on the AIME 2025 benchmark. AIME 2025 is a collection of questions from a recent high-stakes, invite-only math exam, and is treated as a litmus test of an AI model's mathematical ability. It is worth noting that some experts have long questioned the validity of AIME as an AI benchmark, which makes xAI's choice of it to showcase its models' capabilities an interesting one. Nonetheless, AIME 2025 and its earlier versions are still widely used to assess the mathematical reasoning capabilities of AI models.

In its chart, xAI claimed that both versions of Grok 3, Grok 3 Reasoning Beta and Grok 3 mini Reasoning, outperformed OpenAI's current state-of-the-art model, o3-mini-high, in the AIME 2025 test. However, OpenAI staff were quick to point out on the X platform that xAI's chart had a critical flaw: it omitted o3-mini-high's AIME 2025 test scores under "cons@64" conditions. This selective presentation of data raises questions about xAI's true intentions.

You may ask, what exactly is "cons@64"? Simply put, it stands for "consensus@64" and is a particular evaluation method. Under this method, the model gets 64 tries at each question in a benchmark, and the answer it produces most often is taken as its final answer. Unsurprisingly, "cons@64" tends to raise a model's benchmark score significantly. By leaving o3-mini-high's "cons@64" score off its chart, xAI may give the impression that Grok 3 outperforms other models when that may not be the case. Is this kind of selective presentation really fair play?
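To make the difference between "@1" and "cons@64" concrete, here is a minimal Python sketch of both scoring rules. It is only an illustration of the majority-vote idea described above, not the evaluation code used by xAI or OpenAI. The function names and the toy answers are hypothetical, and the example uses 4 attempts per question instead of 64 to stay readable.

```python
from collections import Counter
from typing import Sequence


def consensus_answer(samples: Sequence[str]) -> str:
    """Return the most frequent answer among the sampled attempts.

    Ties go to the answer that appeared first, which is how
    Counter.most_common orders elements with equal counts.
    """
    return Counter(samples).most_common(1)[0][0]


def score_at_1(attempts: Sequence[Sequence[str]], truth: Sequence[str]) -> float:
    """Fraction of questions answered correctly on the first attempt."""
    correct = sum(a[0] == t for a, t in zip(attempts, truth))
    return correct / len(truth)


def score_cons_at_k(
    attempts: Sequence[Sequence[str]], truth: Sequence[str], k: int = 64
) -> float:
    """Fraction of questions where the majority answer over k attempts is correct."""
    correct = sum(consensus_answer(a[:k]) == t for a, t in zip(attempts, truth))
    return correct / len(truth)


# Hypothetical toy data: three questions, four sampled answers each.
# These are made-up values, not real model outputs.
truth = ["204", "082", "016"]
attempts = [
    ["204", "113", "204", "204"],  # first attempt right, majority right
    ["070", "082", "082", "082"],  # first attempt wrong, majority right
    ["588", "016", "588", "588"],  # first attempt wrong, majority wrong
]

print(f"@1:     {score_at_1(attempts, truth):.2f}")
print(f"cons@4: {score_cons_at_k(attempts, truth, k=4):.2f}")
```

On this toy data the same set of answers scores one in three at "@1" and two in three under consensus voting, which is why comparing one model's "cons@64" figure against another model's "@1" figure is not a like-for-like comparison.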

In terms of actual data, both Grok 3 Reasoning Beta and Grok 3 mini Reasoning scored lower than o3-mini-high on AIME 2025 in the "@1" condition, i.e., the score a model gets on its first attempt at the benchmark. Grok 3 Reasoning Beta also trails slightly behind OpenAI's o1 model (set to "medium" compute). Yet even with these comparisons on record, xAI is still promoting Grok 3 as "the smartest AI in the world". That claim rests less on rigorous evidence than on a marketing effort to grab the market's attention. At a time when AI technology is changing rapidly, is it more important to make solid technical progress, or to win through exaggerated marketing hype? This may be a question that the entire AI industry should seriously consider.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
