GPT4All: A Large Language Model Client That Runs on CPUs, Emphasizing Local Execution and Data Security

GPT4All: General Introduction

GPT4All is an open source project developed by Nomic that lets users run Large Language Models (LLMs) on local devices. The project emphasizes privacy protection, works without an Internet connection, and is intended for both personal and business users. GPT4All supports a wide range of hardware, including Mac M-series chips and AMD and NVIDIA GPUs, and runs easily on desktop and laptop computers. The project also offers a wide selection of open source models and an active community, allowing users to tailor the chatbot experience to their needs.

GPT4All is an ecosystem for running powerful, customizable large language models locally on consumer-grade CPUs and any GPU. Note that your CPU must support the AVX or AVX2 instruction set.
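On Linux, one way to check whether a CPU advertises AVX or AVX2 is to look at the `flags` line in `/proc/cpuinfo`. The following is a minimal sketch under that assumption: the function name `avx_support` is hypothetical and not part of GPT4All, and the check only covers Linux (macOS and Windows expose CPU features through other tools).

```python
from pathlib import Path


def avx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Report whether 'avx' and 'avx2' appear among the CPU flags.

    Expects text in the format of Linux's /proc/cpuinfo (hypothetical helper).
    """
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a line such as "flags : fpu vme ... avx avx2 ..."
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            flags.update(values.split())
    return {"avx": "avx" in flags, "avx2": "avx2" in flags}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # Linux-only location
    if cpuinfo.exists():
        print(avx_support(cpuinfo.read_text()))
    else:
        print("No /proc/cpuinfo here; check CPU features with your OS tools.")
```

If both values come back `False`, the CPU likely predates the Intel Core i3 2nd Gen / AMD Bulldozer generation named in the installation requirements.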

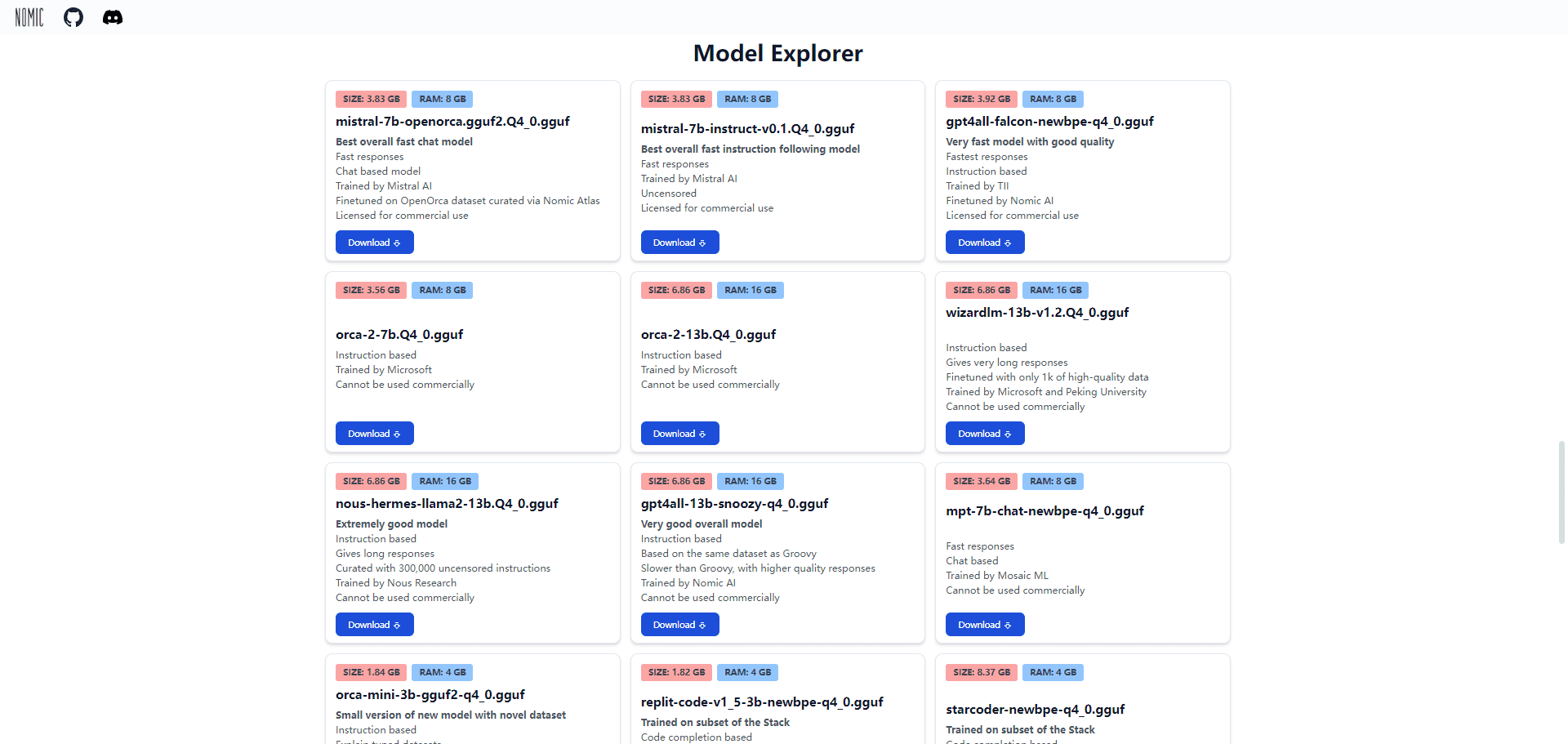

A GPT4All model is a 3GB to 8GB file that you can download and plug into the GPT4All open source ecosystem software. Nomic AI supports and maintains this software ecosystem to ensure quality and security, and is spearheading the effort to make it easy for any individual or enterprise to train and deploy their own large language models on edge devices.
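The 3GB to 8GB range is consistent with a rough rule of thumb for quantized weights: file size ≈ parameter count × bits per weight ÷ 8. The sketch below assumes 4-bit quantization (a common choice for local models, but an assumption here) and ignores metadata, vocabulary tables, and any layers stored at higher precision, so real files tend to be somewhat larger. `approx_size_gb` is a hypothetical helper, not a GPT4All function.

```python
def approx_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough model file size in GB (1 GB = 1e9 bytes): parameters x bits / 8."""
    return n_params * bits_per_weight / 8 / 1e9


for name, params in [("7B", 7e9), ("13B", 13e9)]:
    # 4 bits per weight is an assumed quantization level, not a GPT4All setting
    print(f"{name}: ~{approx_size_gb(params, 4):.1f} GB")
```

This prints about 3.5 GB for a 7B-parameter model and 6.5 GB for a 13B-parameter model, both inside the quoted range.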

GPT4All requires neither a GPU nor an Internet connection, and achieves real-time inference latency on M1 Macs.

Highlights

Your chat is private and never leaves your device!

GPT4All treats privacy and security as its first priority. Use LLMs to work with sensitive local data that never leaves your device.

Running Language Models on Consumer Grade Hardware

GPT4All lets you run LLMs on both CPUs and GPUs, with full support for Mac M-series chips and AMD and NVIDIA GPUs.

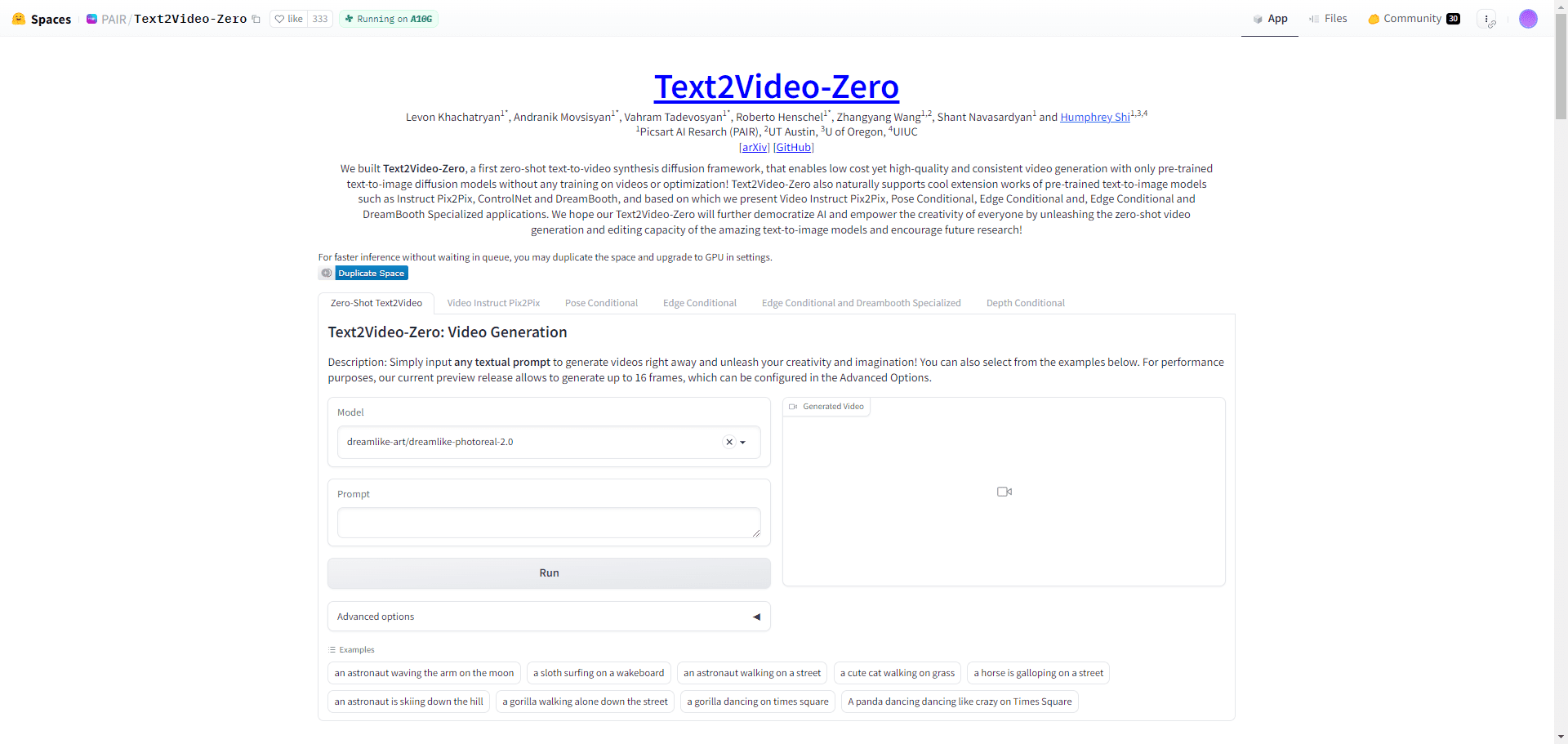

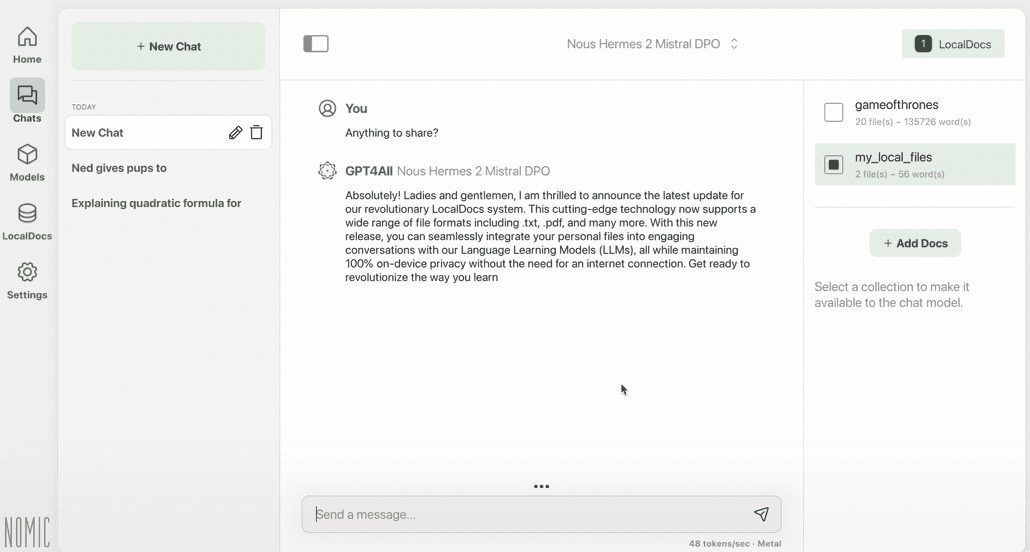

Chat with local files

Use LocalDocs to give your local LLM access to your private and sensitive information. It works offline, and no data leaves your device.

Explore more than 1000 open source language models

GPT4All supports LLaMA, Mistral, Nous-Hermes, and hundreds of other popular models.

Feature List

- Local operation: Run LLMs on a local device without an Internet connection.

- Privacy: All data is stored locally to protect user privacy.

- Multi-hardware support: Compatible with Mac M-series chips and AMD and NVIDIA GPUs.

- Open source community: A wide range of open source models backed by an active community.

- Customization: Users can customize the chatbot's system prompt, temperature, context length, and other parameters.

- Enterprise Edition: Provides enterprise-level support and functionality for large-scale deployments.
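Temperature, one of the parameters listed above, controls how random the model's word choices are. In the common formulation, the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities: lower values concentrate probability on the top candidate, higher values spread it out. The sketch below illustrates that general idea with made-up logits; it is not GPT4All's actual sampler, and real samplers typically combine temperature with other settings such as top-k or top-p.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the first token's probability shrinking as the temperature rises, which is why higher settings produce more varied output.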

User Guide

Installation process

- Download the application:

- Visit the Nomic official website and download the installer for Windows, macOS, or Ubuntu.

- Windows and Linux users should make sure the device has an Intel Core i3 2nd Gen / AMD Bulldozer processor or better.

- macOS users need Monterey 12.6 or later; GPT4All works best on Apple Silicon M-series processors.

- Install the application:

- Windows users: Run the downloaded installer and follow the prompts to complete the installation.

- macOS users: Drag the downloaded application to the Applications folder.

- Ubuntu users: Install the downloaded package file from the command line.

- Launch the application:

- Once installation is complete, open the GPT4All application and follow the prompts for initial setup.

Guidelines for use

- Run a local model:

- After opening the application, select the language model you want, then download and load it.

- Multiple models are supported, including LLaMA, Mistral, Nous-Hermes, and more.

- Customize the chatbot:

- Adjust system prompts, temperature, context length and other parameters in the settings to optimize the chat experience.

- Use the LocalDocs feature to allow local LLMs to access your private data without an Internet connection.

- Enterprise Edition features:

- Enterprise users can contact Nomic for an Enterprise Edition license to enjoy additional support and features.

- The Enterprise Edition is suitable for large-scale deployments and provides enhanced security and support.

- Community support:

- Join the GPT4All community to take part in discussions and contribute code.

- Visit the GitHub repository for the latest updates and documentation.
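Context length, one of the chat settings mentioned above, is the number of tokens the model can attend to at once; once a conversation grows past it, older text has to be dropped or condensed. The sketch below shows one simple policy: keep the system prompt plus as many of the most recent messages as fit. It is a generic illustration, not GPT4All's actual logic, and the hypothetical `fit_to_context` helper counts whitespace-separated words as a stand-in for real tokenizer tokens.

```python
def fit_to_context(system_prompt: str, messages: list[str], n_ctx: int) -> list[str]:
    """Keep the system prompt plus the newest messages whose total size fits in n_ctx.

    Size is approximated by whitespace-separated words (hypothetical simplification;
    real models count tokens with their own tokenizer).
    """
    def count(text: str) -> int:
        return len(text.split())

    budget = n_ctx - count(system_prompt)
    if budget < 0:
        raise ValueError("system prompt alone exceeds the context length")
    kept: list[str] = []
    # Walk backwards from the newest message until the budget runs out
    for msg in reversed(messages):
        cost = count(msg)
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost
    return [system_prompt] + list(reversed(kept))


history = ["hello there", "how can I help", "summarize my notes please"]
print(fit_to_context("You are a helpful assistant.", history, n_ctx=12))
```

With a 12-word budget, the five-word system prompt leaves room only for the newest message, so the older two are dropped. A larger context length keeps more history at the cost of more memory and slower inference.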

Frequently Asked Questions

- How does GPT4All protect data privacy? All data is stored locally on the device, so user data is never exposed to outside services.

- Is an Internet connection required? No. GPT4All can run completely offline on a local device.

- What hardware is supported? GPT4All supports Mac M-series chips, AMD and NVIDIA GPUs, and ordinary desktop and laptop computers.

GPT4All Model Benchmarking

| Model | BoolQ | PIQA | HellaSwag | WinoGrande | ARC-e | ARC-c | OBQA | Avg |
|---|---|---|---|---|---|---|---|---|
| GPT4All-J 6B v1.0 | 73.4 | 74.8 | 63.4 | 64.7 | 54.9 | 36 | 40.2 | 58.2 |
| GPT4All-J v1.1-breezy | 74 | 75.1 | 63.2 | 63.6 | 55.4 | 34.9 | 38.4 | 57.8 |
| GPT4All-J v1.2-jazzy | 74.8 | 74.9 | 63.6 | 63.8 | 56.6 | 35.3 | 41 | 58.6 |
| GPT4All-J v1.3-groovy | 73.6 | 74.3 | 63.8 | 63.5 | 57.7 | 35 | 38.8 | 58.1 |
| GPT4All-J Lora 6B | 68.6 | 75.8 | 66.2 | 63.5 | 56.4 | 35.7 | 40.2 | 58.1 |
| GPT4All LLaMa Lora 7B | 73.1 | 77.6 | 72.1 | 67.8 | 51.1 | 40.4 | 40.2 | 60.3 |
| GPT4All 13B snoozy | 83.3 | 79.2 | 75 | 71.3 | 60.9 | 44.2 | 43.4 | 65.3 |
| GPT4All Falcon | 77.6 | 79.8 | 74.9 | 70.1 | 67.9 | 43.4 | 42.6 | 65.2 |
| Nous-Hermes | 79.5 | 78.9 | 80 | 71.9 | 74.2 | 50.9 | 46.4 | 68.8 |
| Nous-Hermes2 | 83.9 | 80.7 | 80.1 | 71.3 | 75.7 | 52.1 | 46.2 | 70.0 |
| Nous-Puffin | 81.5 | 80.7 | 80.4 | 72.5 | 77.6 | 50.7 | 45.6 | 69.9 |
| Dolly 6B | 68.8 | 77.3 | 67.6 | 63.9 | 62.9 | 38.7 | 41.2 | 60.1 |
| Dolly 12B | 56.7 | 75.4 | 71 | 62.2 | 64.6 | 38.5 | 40.4 | 58.4 |
| Alpaca 7B | 73.9 | 77.2 | 73.9 | 66.1 | 59.8 | 43.3 | 43.4 | 62.5 |
| Alpaca Lora 7B | 74.3 | 79.3 | 74 | 68.8 | 56.6 | 43.9 | 42.6 | 62.8 |
| GPT-J 6.7B | 65.4 | 76.2 | 66.2 | 64.1 | 62.2 | 36.6 | 38.2 | 58.4 |
| LLama 7B | 73.1 | 77.4 | 73 | 66.9 | 52.5 | 41.4 | 42.4 | 61.0 |
| LLama 13B | 68.5 | 79.1 | 76.2 | 70.1 | 60 | 44.6 | 42.2 | 63.0 |
| Pythia 6.7B | 63.5 | 76.3 | 64 | 61.1 | 61.3 | 35.2 | 37.2 | 56.9 |
| Pythia 12B | 67.7 | 76.6 | 67.3 | 63.8 | 63.9 | 34.8 | 38 | 58.9 |
| Fastchat T5 | 81.5 | 64.6 | 46.3 | 61.8 | 49.3 | 33.3 | 39.4 | 53.7 |
| Fastchat Vicuña 7B | 76.6 | 77.2 | 70.7 | 67.3 | 53.5 | 41.2 | 40.8 | 61.0 |
| Fastchat Vicuña 13B | 81.5 | 76.8 | 73.3 | 66.7 | 57.4 | 42.7 | 43.6 | 63.1 |
| StableVicuña RLHF | 82.3 | 78.6 | 74.1 | 70.9 | 61 | 43.5 | 44.4 | 65.0 |
| StableLM Tuned | 62.5 | 71.2 | 53.6 | 54.8 | 52.4 | 31.1 | 33.4 | 51.3 |
| StableLM Base | 60.1 | 67.4 | 41.2 | 50.1 | 44.9 | 27 | 32 | 46.1 |
| Koala 13B | 76.5 | 77.9 | 72.6 | 68.8 | 54.3 | 41 | 42.8 | 62.0 |
| Open Assistant Pythia 12B | 67.9 | 78 | 68.1 | 65 | 64.2 | 40.4 | 43.2 | 61.0 |
| Mosaic MPT7B | 74.8 | 79.3 | 76.3 | 68.6 | 70 | 42.2 | 42.6 | 64.8 |
| Mosaic mpt-instruct | 74.3 | 80.4 | 77.2 | 67.8 | 72.2 | 44.6 | 43 | 65.6 |
| Mosaic mpt-chat | 77.1 | 78.2 | 74.5 | 67.5 | 69.4 | 43.3 | 44.2 | 64.9 |
| Wizard 7B | 78.4 | 77.2 | 69.9 | 66.5 | 56.8 | 40.5 | 42.6 | 61.7 |
| Wizard 7B Uncensored | 77.7 | 74.2 | 68 | 65.2 | 53.5 | 38.7 | 41.6 | 59.8 |
| Wizard 13B Uncensored | 78.4 | 75.5 | 72.1 | 69.5 | 57.5 | 40.4 | 44 | 62.5 |
| GPT4-x-Vicuna-13b | 81.3 | 75 | 75.2 | 65 | 58.7 | 43.9 | 43.6 | 63.2 |
| Falcon 7b | 73.6 | 80.7 | 76.3 | 67.3 | 71 | 43.3 | 44.4 | 65.2 |
| Falcon 7b instruct | 70.9 | 78.6 | 69.8 | 66.7 | 67.9 | 42.7 | 41.2 | 62.5 |
| text-davinci-003 | 88.1 | 83.8 | 83.4 | 75.8 | 83.9 | 63.9 | 51 | 75.7 |
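The Avg column appears to be the unweighted mean of the seven benchmark scores. For example, the GPT4All-J 6B v1.0 row sums to 407.4, and 407.4 / 7 = 58.2. The sketch below recomputes the average for a few rows copied from the table so readers can check or extend it; `ROWS` holds only a subset of the table, and `mean_score` is a hypothetical helper.

```python
BENCHMARKS = ["BoolQ", "PIQA", "HellaSwag", "WinoGrande", "ARC-e", "ARC-c", "OBQA"]

# A few rows copied from the benchmark table: (model, scores in BENCHMARKS order, reported Avg)
ROWS = [
    ("GPT4All-J 6B v1.0", [73.4, 74.8, 63.4, 64.7, 54.9, 36.0, 40.2], 58.2),
    ("GPT4All 13B snoozy", [83.3, 79.2, 75.0, 71.3, 60.9, 44.2, 43.4], 65.3),
    ("Nous-Hermes2", [83.9, 80.7, 80.1, 71.3, 75.7, 52.1, 46.2], 70.0),
    ("text-davinci-003", [88.1, 83.8, 83.4, 75.8, 83.9, 63.9, 51.0], 75.7),
]


def mean_score(scores: list[float]) -> float:
    """Unweighted mean across the benchmark columns."""
    return sum(scores) / len(scores)


for model, scores, reported in ROWS:
    computed = round(mean_score(scores), 1)
    print(f"{model}: computed {computed}, reported {reported}")
```

Because every benchmark counts equally, a model that is strong on one task (Fastchat T5 on BoolQ, for instance) can still have a low average.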

© Copyright notes

Article copyright © AI Sharing Circle. All rights reserved; please do not reproduce without permission.
