gpt-oss: a family of open-source reasoning models from OpenAI

What is gpt-oss

gpt-oss is OpenAI's family of open-source reasoning models, designed to give developers efficient, flexible, and easy-to-deploy AI. The family has two versions: gpt-oss-120B, with 117 billion parameters, which runs on a single 80GB GPU, and gpt-oss-20B, with 21 billion parameters, which runs on ordinary devices with 16GB of RAM. Both are based on the mixture-of-experts (MoE) architecture, support a 128k context length, and offer fast inference, with performance close to that of the closed-source o4-mini and o3-mini. gpt-oss supports tool calling and chain-of-thought reasoning, which makes it suitable for multi-step reasoning tasks, and it provides open weights and an adjustable reasoning effort setting to meet the needs of different scenarios.
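As a rough sanity check on these hardware figures, the sketch below works out how many bits per parameter are available if the weights alone had to fit in the quoted memory. It uses only the parameter counts and memory sizes given above. It assumes decimal gigabytes and ignores activations and the context cache, so it gives an upper bound rather than a description of the actual weight format.

```python
# Back-of-the-envelope weight budget from the figures quoted in the article.
# Assumption: 1 GB = 1e9 bytes, and the whole memory is available for weights.
GB = 1e9


def bits_per_parameter(memory_gb: float, params_billion: float) -> float:
    """Average bits available per parameter if weights filled the memory."""
    total_bits = memory_gb * GB * 8
    return total_bits / (params_billion * 1e9)


def fp16_weight_gb(params_billion: float) -> float:
    """Memory needed to hold the weights at 16 bits (2 bytes) per parameter."""
    return params_billion * 1e9 * 2 / GB


if __name__ == "__main__":
    for name, params_b, mem_gb in [
        ("gpt-oss-120B", 117, 80),
        ("gpt-oss-20B", 21, 16),
    ]:
        budget = bits_per_parameter(mem_gb, params_b)
        fp16 = fp16_weight_gb(params_b)
        print(f"{name}: at most {budget:.1f} bits/param; "
              f"16-bit weights would need {fp16:.0f} GB")
```

In both cases the budget comes out well under 8 bits per parameter, which suggests that the quoted memory sizes depend on the weights being stored in a compact, quantized form.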

Main features of gpt-oss

- Tool use: Supports calling external tools, such as running web searches or executing Python code, to help solve complex tasks.

- Chain-of-thought reasoning: The model breaks complex tasks down into steps and solves them one by one, which suits problems that require multi-step reasoning.

- Low resource requirements: gpt-oss-20B runs on an ordinary device with 16GB of RAM, and gpt-oss-120B runs on a single 80GB GPU, so the family adapts to different hardware environments.

- Rapid inference response: The model is capable of inference speeds of 40-50 tokens/s, and performs well in scenarios that require fast responses.

- Open source and customization: Full model weights and code are provided, so users can fine-tune and customize the models locally to better meet the requirements of specific tasks.

- Adjustable reasoning effort: Low, medium, and high reasoning effort settings are supported, so users can trade latency against performance according to their needs and scenarios.
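The reasoning effort setting can be illustrated with a small helper that builds a chat request. This is a minimal sketch: it assumes the model is served behind a chat-style API that passes the system message through to the model, and that the level is requested with a system line of the form `Reasoning: <level>`. The helper name `build_messages` is hypothetical, and the exact mechanism should be checked against the documentation of whichever runtime is used.

```python
# Sketch: choosing a reasoning effort level when building a chat request.
# Assumption: the serving stack forwards the system message to the model and
# the model reads a "Reasoning: <level>" line from it.
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> list:
    """Return a chat message list that requests the given reasoning effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(
            f"effort must be one of {VALID_EFFORTS}, got {effort!r}"
        )
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Lower effort for quick lookups, higher effort for multi-step problems.
    print(build_messages("What is the capital of France?", effort="low"))
    print(build_messages("Plan a three-step database migration.", effort="high"))
```

Higher effort generally means the model produces more intermediate reasoning before answering, so latency rises with the setting.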

Official links for gpt-oss

- Project website: https://openai.com/zh-Hans-CN/index/introducing-gpt-oss/

- GitHub repository: https://github.com/openai/gpt-oss

- Hugging Face model collection: https://huggingface.co/collections/openai/gpt-oss-68911959590a1634ba11c7a4

- Online demo: https://gpt-oss.com/

Performance of gpt-oss

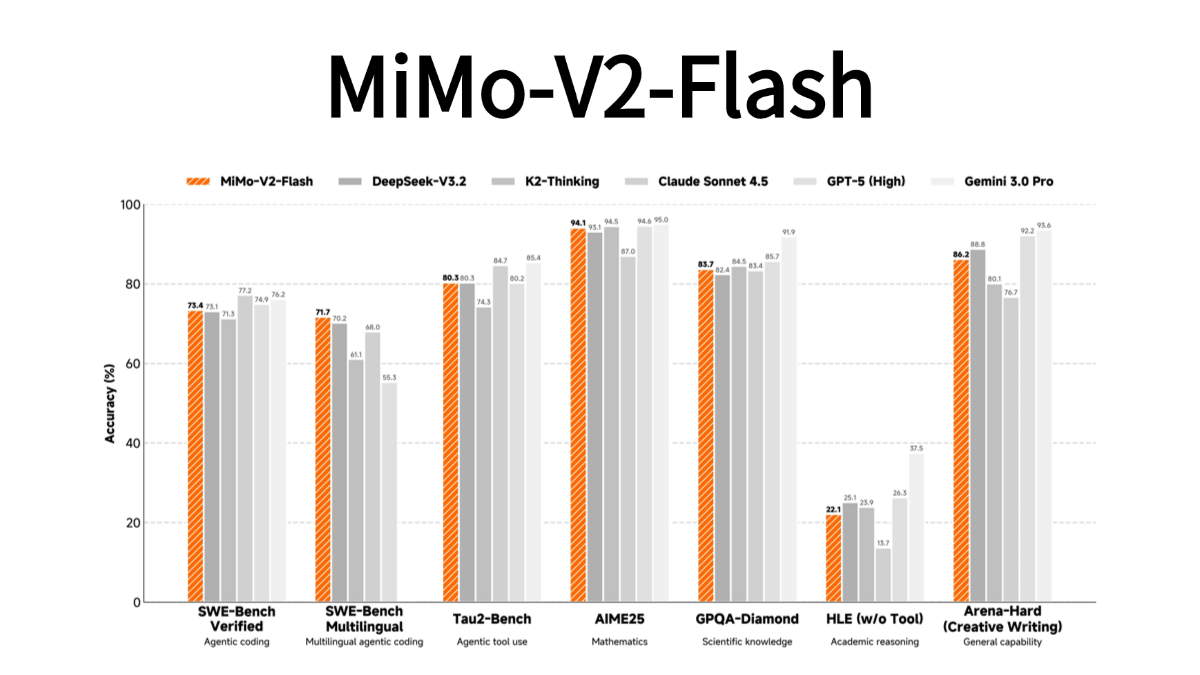

- Competitive programming: On the Codeforces competitive programming test, gpt-oss-120B scored 2622 and gpt-oss-20B scored 2516. Both versions scored better than some other open-source models and slightly worse than the closed-source o3-mini and o4-mini, demonstrating strong programming capabilities.

- General problem solving: On the MMLU (Massive Multitask Language Understanding) and HLE (Humanity's Last Exam) tests, gpt-oss-120B outperforms OpenAI's o3-mini and approaches the level of o4-mini. This shows that gpt-oss is accurate and reasons well on general-purpose problems.

- Tool calling: On the TauBench agentic evaluation suite, both gpt-oss-120B and gpt-oss-20B outperform OpenAI's o3-mini and even match or exceed o4-mini. This shows that gpt-oss calls external tools (e.g., web search or a code interpreter) efficiently and accurately, and can use them to solve complex problems.

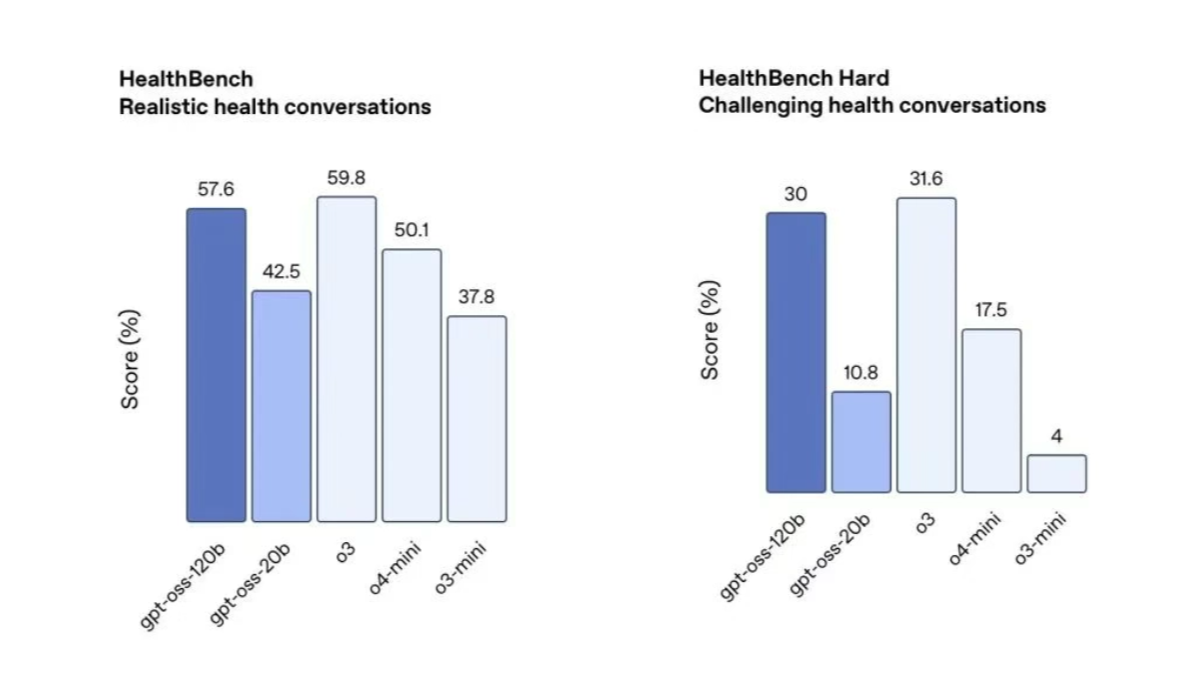

- Health Q&A: On the HealthBench test, gpt-oss-120B outperforms o4-mini, and gpt-oss-20B reaches a level comparable to o3-mini. This shows that gpt-oss handles health-related questions accurately and reliably and can provide users with useful information.
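The tool calling measured in these evaluations generally follows one loop: the model emits a tool name with JSON-encoded arguments, the host program runs the matching function, and the result goes back to the model as a message. The sketch below shows the host side of that loop only. The message shape, the `TOOLS` registry, the `dispatch` helper, and the `add` tool are all hypothetical stand-ins for illustration, not the format gpt-oss itself emits.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the host can run it when the model asks."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """Toy tool standing in for a real one such as web search."""
    return a + b


def dispatch(tool_call: Dict[str, str]) -> Dict[str, str]:
    """Run one tool call and wrap the outcome as a tool message."""
    name = tool_call["name"]
    if name not in TOOLS:
        payload = {"error": f"unknown tool: {name}"}
    else:
        try:
            # Arguments are assumed to arrive as a JSON-encoded object.
            args = json.loads(tool_call["arguments"])
            payload = {"result": TOOLS[name](**args)}
        except (json.JSONDecodeError, TypeError) as exc:
            # Report malformed or mismatched arguments instead of crashing.
            payload = {"error": str(exc)}
    return {"role": "tool", "name": name, "content": json.dumps(payload)}


if __name__ == "__main__":
    print(dispatch({"name": "add", "arguments": '{"a": 2, "b": 3}'}))
    print(dispatch({"name": "search", "arguments": "{}"}))
```

Returning errors as ordinary tool messages lets the model see what went wrong and try again, which matters in multi-step tasks.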

How to use gpt-oss

- Online demo:

  - Address: https://gpt-oss.com/

  - Steps:

    - Open the link above.

    - Enter a question or instruction on the web page.

    - Click "Submit" to get a response from the model.

- Deploying from the GitHub repository:

  - Visit the GitHub repository: https://github.com/openai/gpt-oss

  - Clone the repository:

    git clone https://github.com/openai/gpt-oss.git

    cd gpt-oss

  - Install dependencies:

    pip install -r requirements.txt

  - Download the model weights: Select the weight files for gpt-oss-20b or gpt-oss-120b as required and place them in the specified directory.

  - Run the model: Run the model inference script according to the instructions in the repository. Example (the script name and flags here are illustrative; check the repository README for the actual entry point):

    python run_inference.py --model gpt-oss-20b --input "your input text"

Core benefits of gpt-oss

- Open Source and Flexibility: Full model weights and code are provided to support local fine-tuning and customization to meet specific needs.

- Efficient inference performance: Inference speed up to 40-50 tokens/s, low latency design for fast response scenarios.

- Wide applicability: The models support a range of hardware environments, from ordinary devices with 16GB of RAM to high-performance machines with an 80GB GPU.

- Powerful reasoning: Supports chain-of-thought reasoning and tool calling, enabling step-by-step solutions to complex problems and expanding the range of applications.

- Safety and Reliability: The pre-training phase filters harmful data and performs adversarial fine-tuning to ensure that the model is safe and reliable.
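To make the 40-50 tokens/s figure concrete, the sketch below converts it into approximate generation times for responses of different lengths. It assumes throughput stays constant across the response and ignores prompt processing time. The function name `generation_seconds` and the response lengths are illustrative choices.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a constant decoding throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Throughput range quoted in the article; response lengths are examples.
    for n in (100, 500, 2000):
        fastest = generation_seconds(n, 50)
        slowest = generation_seconds(n, 40)
        print(f"{n} tokens: {fastest:.1f}s to {slowest:.1f}s")
```

Intermediate reasoning counts toward the generated tokens, so a higher reasoning effort setting lengthens the wait even at the same throughput.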

Who gpt-oss is for

- Developers and engineers: Those who need open-source models for project development, rapid prototyping, or customization will benefit from the flexibility and open code the models provide.

- Data scientists and researchers: Those interested in the models' internal mechanisms, and who want to fine-tune, experiment with, or study them, can use the open-source release to explore and optimize the models in depth.

- Business users: Those who need high-performance, low-cost reasoning models for intelligent customer service, data analytics, or automation will find the models' free commercial use and efficient inference a good fit.

- Educators and students: In education, the models can serve as a learning aid that helps students answer questions, gives writing advice, or supports programming exercises.

- Creative professionals: For writers, screenwriters, game developers, and others, the models help generate creative content, spark ideas, and improve creative efficiency.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
