GPT-Crawler: Automatically Crawling Website Content to Generate Knowledge Base Documents

General Introduction

GPT-Crawler is an open-source tool developed by the BuilderIO team and hosted on GitHub. Given one or more website URLs, it crawls the page content and generates a structured knowledge document (output.json) that can be used to create custom GPTs or AI assistants. Users configure crawling rules, such as a starting URL and a content selector, and the tool automatically extracts the text and organizes it into files. It supports local runs, Docker container deployments, and API calls, which makes it well suited to developers who want to quickly build a dedicated AI assistant from website content. It has gained traction in the tech community for its efficiency and open-source nature.

Function List

- Crawls website content from one or more URLs to generate an output.json file.

- Supports custom crawling rules, including starting URLs, link matching patterns, and CSS selectors.

- Ability to handle dynamic web pages and crawl client-side rendered content using a headless browser.

- Provides an API interface to start crawling tasks via POST requests.

- Supports setting the maximum number of pages (maxPagesToCrawl), the file size (maxFileSize), and the number of tokens (maxTokens).

- The generated files can be uploaded directly to OpenAI for creating custom GPTs or AI assistants.

- Can run in a Docker container, making it easy to deploy in different environments.

- Specific resource types (e.g., images, videos, etc.) can be excluded to optimize crawling efficiency.
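The resource exclusion in the last item can be pictured as a filter on request URLs. The following is a minimal sketch, assuming exclusions are given as a list of file extensions. `shouldSkipResource` is a hypothetical helper written for illustration, not part of GPT-Crawler, and the tool's own matching may work differently.

```typescript
// Hypothetical helper: decides whether a resource URL should be skipped
// based on its file extension. Not GPT-Crawler's actual implementation.
function shouldSkipResource(resourceUrl: string, exclusions: string[]): boolean {
  // Drop the query string and fragment so "logo.png?v=2" still matches "png".
  const path = resourceUrl.split(/[?#]/)[0].toLowerCase();
  return exclusions.some((ext) => path.endsWith("." + ext.toLowerCase()));
}

// Example extensions and URLs, chosen only for illustration.
const excludedExtensions = ["png", "jpg", "mp4"];
const requestedUrls = [
  "https://example.com/docs/intro",
  "https://example.com/assets/logo.png?v=2",
  "https://example.com/media/demo.MP4",
];

// Keep only the requests that are not excluded.
const keptUrls = requestedUrls.filter(
  (u) => !shouldSkipResource(u, excludedExtensions)
);
console.log(keptUrls);
```

Skipping such requests means the headless browser does not have to download images or videos that contribute nothing to the extracted text.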

Usage Guide

Installation and operation (local mode)

GPT-Crawler is built on Node.js, which must be installed before the tool can run. Here are the detailed steps:

- Checking the environment

Ensure that your computer has Node.js (version 16 or higher) and npm installed. Run the following commands to confirm:

node -v

npm -v

If either is missing, download and install Node.js from the Node.js website.

- Cloning the project

Enter the command in the terminal to download the project locally:

git clone https://github.com/BuilderIO/gpt-crawler.git

- Entering the project directory

Once the download is complete, go to the project folder:

cd gpt-crawler

- Installing dependencies

Run the following command to install the required packages:

npm install

- Configuring the Crawler

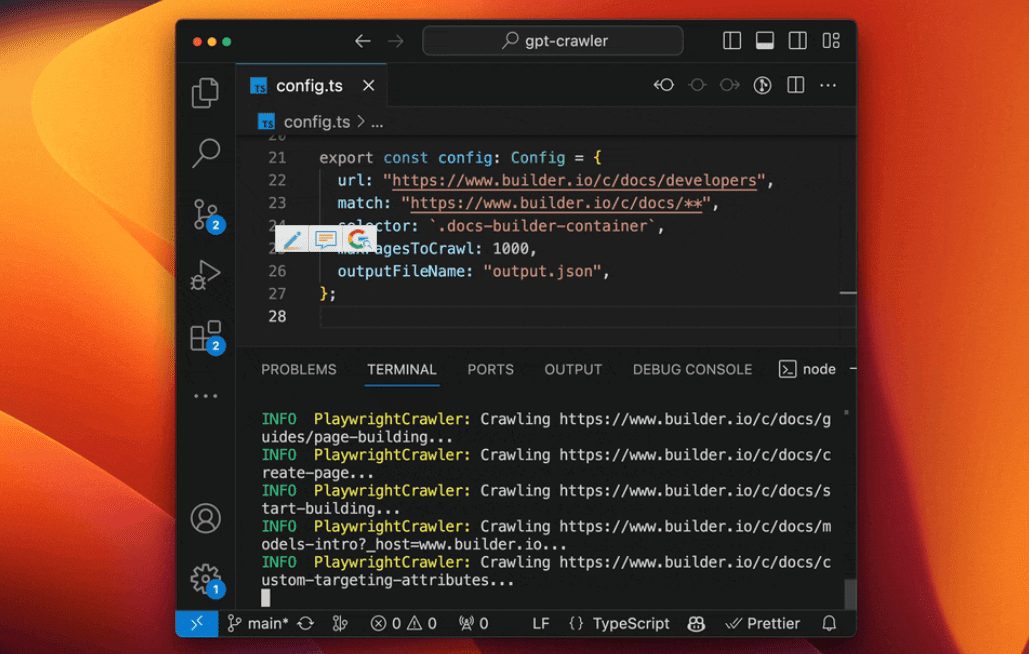

Open the config.ts file and modify the crawl parameters. For example, to crawl the Builder.io documentation:

export const defaultConfig: Config = {
  url: "https://www.builder.io/c/docs/developers",
  match: "https://www.builder.io/c/docs/**",
  selector: ".docs-builder-container",
  maxPagesToCrawl: 50,
  outputFileName: "output.json",
};

- url: The starting address of the crawl.

- match: Link matching pattern, with wildcard support.

- selector: CSS selector for extracting content.

- maxPagesToCrawl: Maximum number of pages to crawl.

- outputFileName: The name of the output file.
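To make the wildcard in match more concrete, here is a minimal sketch of glob-style link matching. It assumes that `**` matches any sequence of characters, including slashes, and that a single `*` stays within one path segment. `globToRegExp` is a hypothetical helper for illustration only; the matching library GPT-Crawler actually uses may treat edge cases differently.

```typescript
// Hypothetical illustration of glob-style link matching.
// Assumption: "**" spans path segments, "*" does not cross a "/".
function globToRegExp(glob: string): RegExp {
  const source = glob
    .split("**")
    .map((part) =>
      part
        // Escape regex metacharacters (everything except "*").
        .replace(/[.+?^${}()|[\]\\]/g, "\\$&")
        // A single "*" matches within one path segment.
        .replace(/\*/g, "[^/]*")
    )
    // "**" may match across several path segments.
    .join(".*");
  return new RegExp("^" + source + "$");
}

const docsPattern = globToRegExp("https://www.builder.io/c/docs/**");
console.log(docsPattern.test("https://www.builder.io/c/docs/developers")); // true
console.log(docsPattern.test("https://www.builder.io/blog/some-post")); // false
```

With the configuration above, only links under https://www.builder.io/c/docs/ would be followed, which keeps the crawl from wandering into unrelated parts of the site.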

- Running the crawler

Once the configuration is complete, run the following command to start the crawl:

npm start

Upon completion, the output.json file is generated in the project root directory.

Alternative modes of operation

Using Docker containers

- Ensure that Docker is installed (it can be downloaded from the Docker website).

- Go into the containerapp folder and edit config.ts.

- Run the following commands to build and start the container:

docker build -t gpt-crawler .

docker run -v $(pwd)/data:/app/data gpt-crawler

- The output file is generated in the data folder.

Running with the API

- After installing the dependencies, start the API service:

npm run start:server

- The service runs by default at http://localhost:3000.

- Send a POST request to /crawl, for example:

curl -X POST http://localhost:3000/crawl -H "Content-Type: application/json" -d '{"url":"https://example.com","match":"https://example.com/**","selector":"body","maxPagesToCrawl":10,"outputFileName":"output.json"}'

- Visit /api-docs to view the API documentation (based on Swagger).
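The same POST request can be issued from code. The sketch below mirrors the curl example and assumes the request body carries exactly the fields shown there. `startCrawl` and `PostFn` are hypothetical names introduced for illustration. The HTTP client is passed in as a parameter, so the example runs without a live server by using a stub.

```typescript
// Shape of the JSON body, limited to the fields used in the curl example.
interface CrawlRequest {
  url: string;
  match: string;
  selector: string;
  maxPagesToCrawl: number;
  outputFileName: string;
}

// Minimal description of an HTTP POST function, so the sketch does not
// depend on any particular HTTP client.
type PostFn = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<unknown>;

// Hypothetical helper: sends the crawl configuration to the /crawl endpoint.
async function startCrawl(
  post: PostFn,
  baseUrl: string,
  config: CrawlRequest
): Promise<unknown> {
  return post(baseUrl + "/crawl", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(config),
  });
}

// Stub that records the request instead of contacting a server.
const recorded: { url: string; body: string }[] = [];
const fakePost: PostFn = (url, init) => {
  recorded.push({ url, body: init.body });
  return Promise.resolve({ status: "stubbed" });
};

startCrawl(fakePost, "http://localhost:3000", {
  url: "https://example.com",
  match: "https://example.com/**",
  selector: "body",
  maxPagesToCrawl: 10,
  outputFileName: "output.json",
}).then(() => console.log("POST sent to", recorded[0].url));
```

In Node.js 18 or later, the global fetch function should fit the PostFn shape and could be passed in place of the stub. The format of the server's response is not described here, so the sketch leaves it untyped.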

Upload to OpenAI

- Creating custom GPTs

- Open ChatGPT.

- Click on your name in the lower left corner and select "My GPTs".

- Click "Create a GPT" > "Configure" > "Knowledge".

- Upload the output.json file.

- If the file is too large, set maxFileSize or maxTokens in config.ts to split the file.

- Creating custom assistants

- Open the OpenAI platform.

- Click "+ Create" > "Upload".

- Upload the output.json file.

Feature details

- Crawl content

Once url and selector are specified, the tool extracts the page text. For example, the selector .docs-builder-container grabs only the content of that region.

- Generate files

The output file format is:

[{"title": "Page title", "url": "https://example.com/page", "html": "Extracted text"}, ...]

- Optimized output

Use resourceExclusions to exclude extraneous resources (e.g. png, jpg), reducing file size.
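The record format listed under "Generate files" can be checked before the file is uploaded. The following is a minimal sketch, assuming every record has exactly the title, url, and html fields shown in that format. `CrawledPage` and `parseCrawlOutput` are hypothetical names, not part of GPT-Crawler.

```typescript
// Assumed record shape, taken from the output format described above.
interface CrawledPage {
  title: string;
  url: string;
  html: string; // holds the extracted text, despite the field name
}

// Hypothetical helper: parses the file contents and validates each record.
function parseCrawlOutput(json: string): CrawledPage[] {
  const data: unknown = JSON.parse(json);
  if (!Array.isArray(data)) {
    throw new Error("expected a JSON array of pages");
  }
  return data.map((item, index) => {
    const record = (item ?? {}) as Record<string, unknown>;
    const { title, url, html } = record;
    if (
      typeof title !== "string" ||
      typeof url !== "string" ||
      typeof html !== "string"
    ) {
      throw new Error(`record ${index} is missing title, url, or html`);
    }
    return { title, url, html };
  });
}

// Inline sample standing in for the contents of output.json.
const sampleOutput =
  '[{"title":"Intro","url":"https://example.com/intro","html":"Welcome"}]';
const parsedPages = parseCrawlOutput(sampleOutput);
console.log(parsedPages.length, parsedPages[0].title);
```

A check like this catches an empty or malformed crawl result early, for example when the selector matched nothing on the target pages.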

Caveats

- An OpenAI paid account is required to create custom GPTs.

- Dynamic web crawling relies on a headless browser, so make sure all dependencies are fully installed.

- Configuration can be adjusted to split the upload when the file is too large.
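The idea behind splitting an oversized result can be sketched as greedy packing: pages are added to the current chunk until the next page would push it over the limit, then a new chunk is started. This is an illustration only. `splitPages` is a hypothetical helper, the limit is measured here in characters of serialized JSON as a rough stand-in for file size, and the way GPT-Crawler itself applies maxFileSize and maxTokens may differ.

```typescript
// Assumed record shape, matching the output format described earlier.
interface PageRecord {
  title: string;
  url: string;
  html: string;
}

// Hypothetical helper: greedily packs pages into chunks whose serialized
// length stays at or below maxChars. A single page that exceeds the limit
// on its own still gets a chunk of its own.
function splitPages(pages: PageRecord[], maxChars: number): PageRecord[][] {
  const chunks: PageRecord[][] = [];
  let current: PageRecord[] = [];
  for (const page of pages) {
    const candidate = [...current, page];
    if (current.length > 0 && JSON.stringify(candidate).length > maxChars) {
      // Adding this page would exceed the limit: close the current chunk.
      chunks.push(current);
      current = [page];
    } else {
      current = candidate;
    }
  }
  if (current.length > 0) chunks.push(current);
  return chunks;
}

// Three identical sample pages and a limit that fits two of them per chunk.
const samplePage: PageRecord = { title: "A", url: "u", html: "x".repeat(50) };
const pageChunks = splitPages([samplePage, samplePage, samplePage], 200);
console.log(pageChunks.map((chunk) => chunk.length));
```

Each chunk could then be written to its own file and uploaded separately.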

Application scenarios

- Technical support assistant

Crawl product documentation websites to generate AI assistants that help users answer technical questions.

- Content organizing tools

Grab articles from blogs or news sites to create a knowledge base or Q&A assistant.

- Education and training assistant

Crawl online course pages to generate learning assistants that provide course-related answers.

FAQ

- Is it possible to crawl multiple websites?

Yes. Just set multiple URLs and match rules in config.ts.

- What if the file is too large to upload?

Set maxFileSize or maxTokens to split the output into multiple smaller files.

- Are Chinese sites supported?

Yes. As long as the site content can be parsed by a headless browser, it can be crawled properly.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
