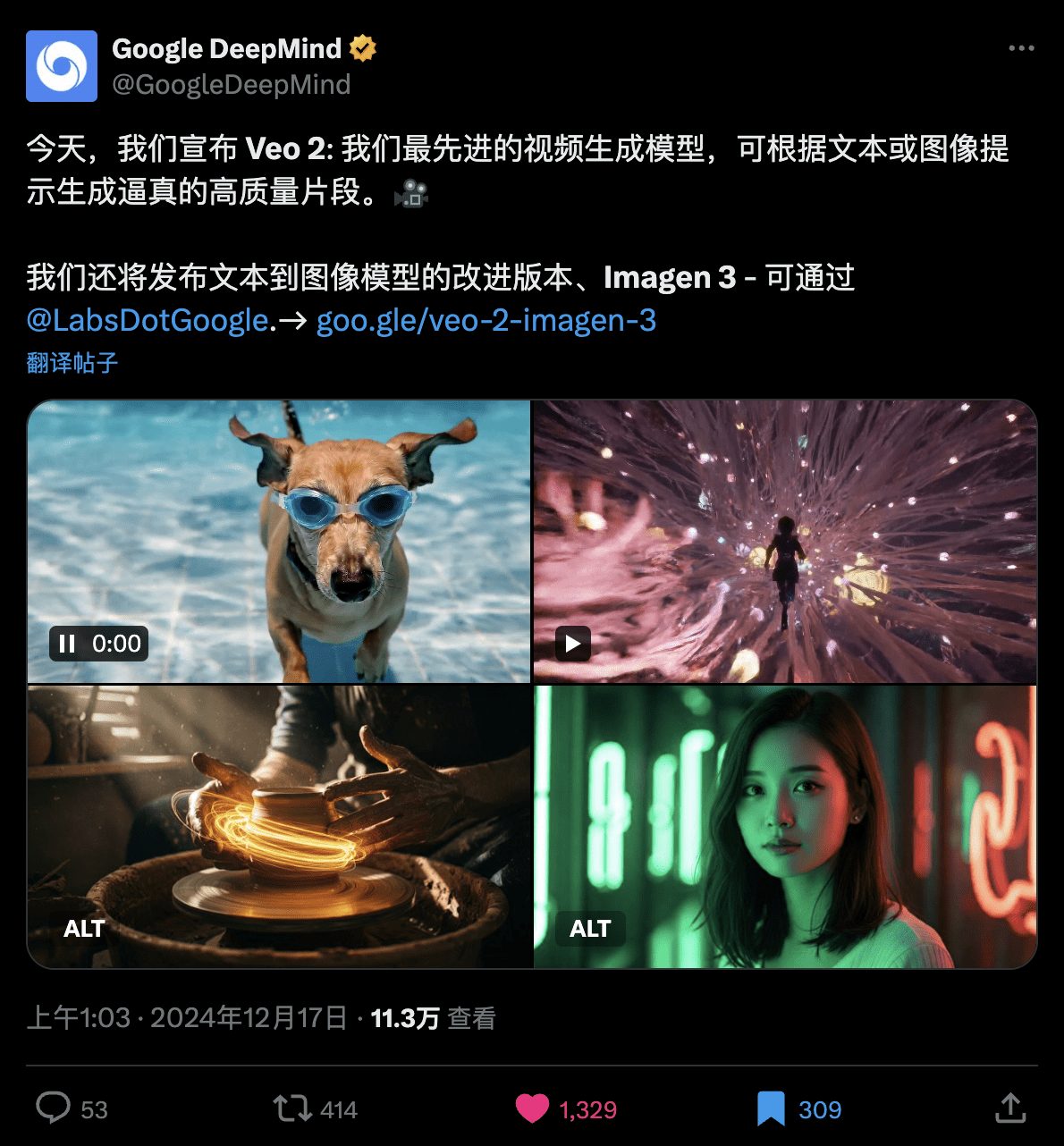

Google Releases AI Video Model Veo 2 and AI Image Model Imagen 3

Earlier this year, Google launched Veo, its video generation model, and Imagen 3, its newest image generation model. Since then, it has been exciting to see people bring their ideas to life with these models: YouTube creators are exploring the creative possibilities of video backgrounds for YouTube Shorts, enterprise customers are enhancing their creative workflows with Vertex AI, and creatives are using VideoFX and ImageFX to tell their stories. Together with partners ranging from filmmakers to businesses, we continue to develop and evolve these technologies.

In the middle of the night, while OpenAI's livestream offered little worth watching beyond a personalized AI search feature, Google quietly posted two big releases on X, with no teasing and no marketing.

Today, Google introduced a new video model, Veo 2, and the latest version of Imagen 3, both of which achieve state-of-the-art results. These models are now available in VideoFX, ImageFX, and our latest experimental project, Whisk.

Veo 2: state-of-the-art video generation technology

Veo 2 creates extremely high-quality videos on a wide range of subjects and styles. In head-to-head comparisons by human reviewers, Veo 2 achieved state-of-the-art results against leading models.

It brings an improved understanding of real-world physics and of the nuances of human movement and expression, which adds to the overall sense of detail and realism. Veo 2 understands the unique language of cinematography: provide a genre, specify a shot, or suggest a cinematic effect, and Veo 2 delivers it, at resolutions of up to 4K and at lengths that can extend to several minutes. Ask for a low-angle tracking shot across a scene, or a close-up of a scientist looking through a microscope, and Veo 2 will create it. Type "18mm lens" into the prompt and Veo 2 knows to capture the wide-angle effect characteristic of that lens; add "shallow depth of field" and it blurs the background to keep the focus on the subject.

Google released Veo 2, currently the most advanced AI video model, and Imagen 3, an improved version of its AI image model. A group of us watched the results together and kept exclaiming at how mind-blowing they were. I almost never use the phrase "mind-blowing," but Veo 2 genuinely made me want to cheer, much like watching Sora on that fateful night of February 16th. Let's go through them one by one.

I. AI Video Veo 2


While video models often "hallucinate" unwanted details, such as extra fingers or unexpected objects, Veo 2 produces these problems less frequently, which makes its output more realistic.

Our commitment to safety and responsible development guided the design of Veo 2. We have been deliberately measured in expanding Veo's availability, so that we can identify, understand, and improve the model's quality and safety as it rolls out gradually through VideoFX, YouTube, and Vertex AI.

As with all of our image and video generation models, the output of Veo 2 contains an invisible SynthID watermark that helps identify it as AI-generated content, reducing the likelihood of misinformation and misattribution.

Today, we're bringing Veo 2's new capabilities to VideoFX, the Google Labs video generation tool, and expanding access to more users. Visit Google Labs to sign up for the waitlist. We also plan to bring Veo 2 to YouTube Shorts and other products next year.

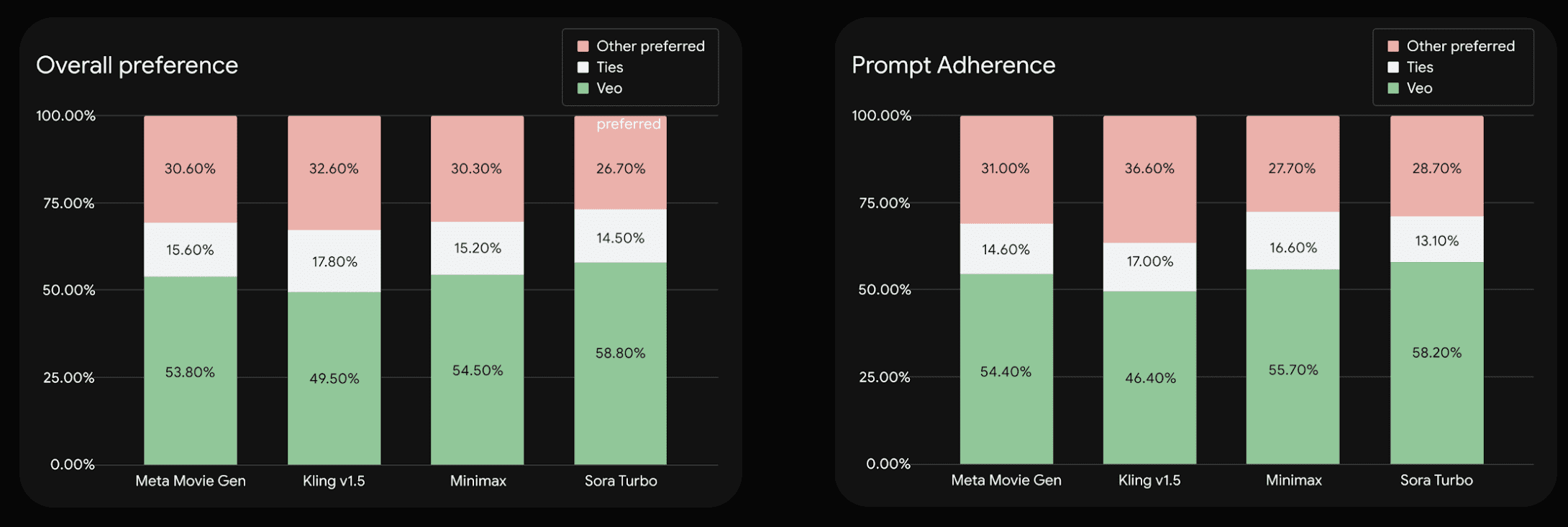

Google ran its own human-rater evaluation using MovieGenBench, a benchmark dataset released by Meta, generating outputs for 1,003 prompts and asking raters to judge blindly which result was better. The final results are as follows.

A quick explanation: there are two charts, one for Overall Preference and one for Prompt Adherence (how closely the video matches the prompt).

The horizontal axis of each chart lists the models being compared: Meta Movie Gen, Kling v1.5, Minimax, and Sora Turbo. Google ran a blind head-to-head comparison of Veo 2 against each of these models.

Honestly, seeing Chinese models such as Kling and Minimax now serve as benchmarks for comparison gave me a real rush of pride.

Each bar is split into three segments, with the color indicating the outcome:

Green (Veo preferred): the share of comparisons in which reviewers preferred the Veo output.

White (Ties): the share of comparisons reviewers judged indistinguishable, i.e., with no clear preference.

Pink (Other preferred): the share of comparisons in which reviewers preferred the other (non-Veo) model.
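To make the three-segment bars concrete, here is a minimal Python sketch of how such shares could be tallied, assuming each blind comparison yields exactly one verdict: Veo preferred, tie, or the other model preferred. The function name `preference_shares` and the vote labels are hypothetical, and the vote counts are made up for illustration; they are not Google's data.

```python
from collections import Counter


def preference_shares(votes):
    """Convert pairwise rater verdicts into percentage shares.

    Each vote is one of the hypothetical labels "veo", "tie", or "other".
    Returns a dict mapping each label to its share of all votes, in percent.
    """
    total = len(votes)
    if total == 0:
        raise ValueError("need at least one vote")
    counts = Counter(votes)  # missing labels count as 0
    return {label: 100.0 * counts[label] / total for label in ("veo", "tie", "other")}


# Made-up verdicts for a single Veo-vs-competitor matchup (illustration only).
votes = ["veo"] * 6 + ["tie"] * 3 + ["other"] * 1
print(preference_shares(votes))  # {'veo': 60.0, 'tie': 30.0, 'other': 10.0}
```

The three shares always add up to 100%, which is why each bar in the charts spans the full height: green is the Veo "win rate", white the ties, and pink the competitor's wins.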

Given Google DeepMind's usual straightforward style, they are unlikely to be faking anything here, and as you can see, Veo 2 comes out ahead in most comparisons.

In Google's evaluation, the strongest of the four competing models is Kling v1.5, which is a pretty interesting result. One more thing to note: Veo 2 can output 4K video directly.

The videos they uploaded to YouTube are also native 4K, which is genuinely impressive. By their own account, the biggest difficulty and limitation at the moment is still motion.

In their own words: "Creating realistic, dynamic, or intricate videos, and maintaining complete consistency throughout complex scenes or scenes with complex motion, remains a challenge."

II. AI Image Generation: Imagen 3

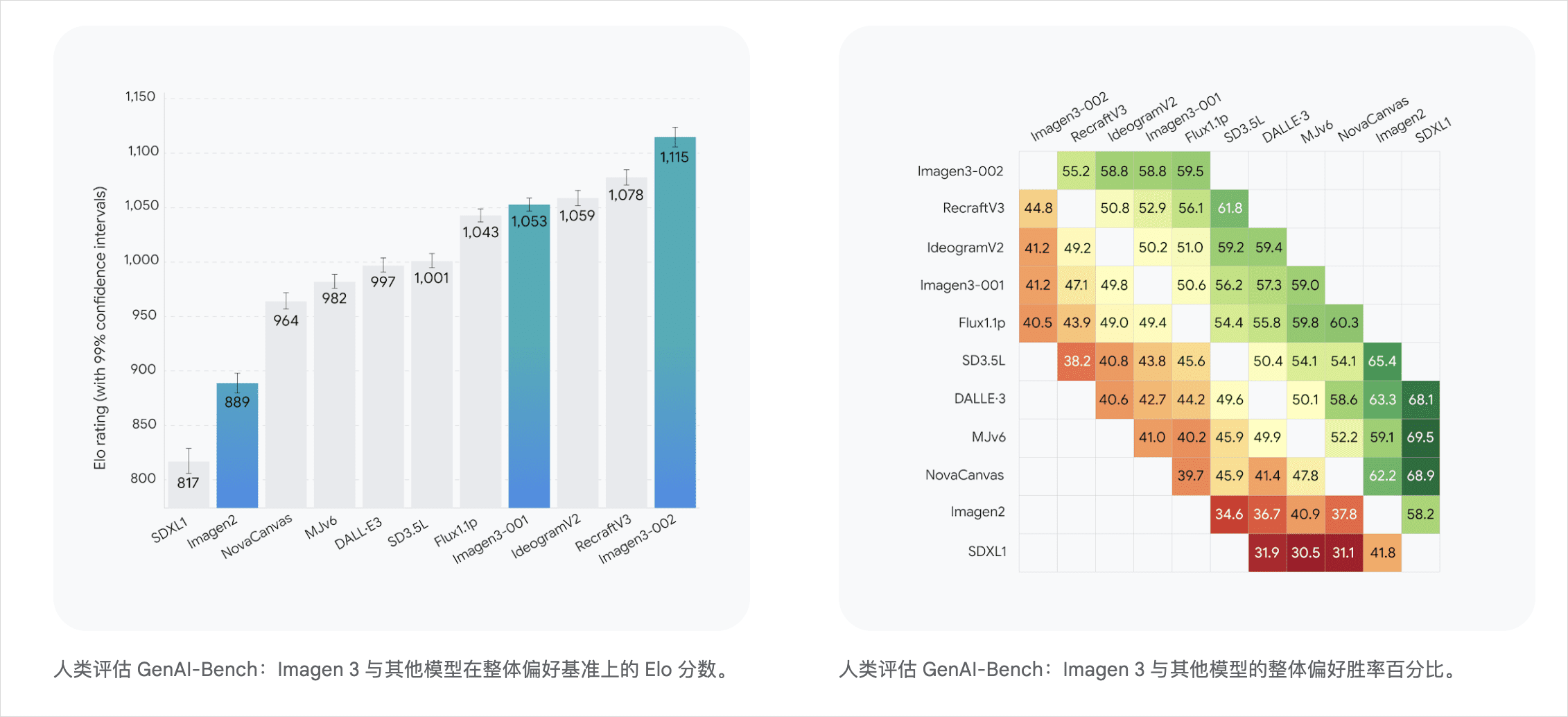

Google has also improved its Imagen 3 image generation model, which now produces brighter, better-composed images. It can render a wider variety of art styles with greater accuracy, from photorealism to impressionism and from abstract art to anime. The upgrade also lets the model follow prompts more faithfully and render richer details and textures. In head-to-head comparisons with leading image generation models judged by human reviewers, Imagen 3 achieved state-of-the-art results.

Starting today, the latest Imagen 3 model will be available globally in ImageFX, Google Labs' image generation tool, in more than 100 countries. Visit ImageFX to get started.

Alongside Veo 2, Google also released an improved version of its Imagen 3 image model. Technically this is the Imagen 3-002 model, the second version of Imagen 3. The first version of Imagen 3 was announced on May 14, 2024, at Google's I/O developer conference. About six months later, Google has significantly evolved Imagen 3 with this second version, and in its own evaluation, it sweeps the leaderboard.

There is currently no waitlist: you can try it right away, and it's free.

Just type a prompt into the input box to get started.

The prompt design is also interesting. You can enter all sorts of long and unusual prompts, and it automatically breaks them into key phrases, turning some of those phrases into drop-down menus that suggest several alternative options. It is a bit like the "Big Bang" text-splitting feature that Luo Yonghao (Lao Luo) shipped on Smartisan phones back in the day.

Here are some of the officially released sample images.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
