GLM Edge: Zhipu AI Releases On-Device Large Language Models and Multimodal Understanding Models for Mobile, Car and PC Platforms

General Introduction

GLM-Edge is a series of large language models and multimodal understanding models from Zhipu AI and Tsinghua University (THUDM), designed for on-device (end-side) deployment. The series comprises GLM-Edge-1.5B-Chat, GLM-Edge-4B-Chat, GLM-Edge-V-2B, and GLM-Edge-V-5B, which target mobile, car, and PC platforms. The models focus on ease of practical deployment and inference speed while maintaining high performance, and perform especially well on Qualcomm Snapdragon and Intel platforms. They can be downloaded from Hugging Face, ModelScope, and other hubs, and run with several inference backends (e.g., transformers, OpenVINO, vLLM).

GLM Edge on-device text models

GLM Edge on-device vision models

Feature List

- Multiple model options: chat models and multimodal understanding models at different parameter scales, covering a wide range of on-device hardware.

- Efficient inference: fast inference on Qualcomm Snapdragon and Intel platforms, with support for hybrid quantization schemes.

- Multi-platform support: the models are available on Hugging Face, ModelScope, and other platforms, and support a wide range of inference backends.

- Easy to deploy: detailed installation and usage guides help users get started quickly.

- Fine-tuning support: fine-tuning tutorials and configuration files let users adapt the models to their specific needs.

Usage Guide

Installing Dependencies

Make sure you are using Python 3.10 or higher, then install the dependencies:

pip install -r requirements.txt
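Before running pip, a quick interpreter check can save a confusing install failure. This is a minimal sketch; the helper name `python_is_supported` is hypothetical and not part of the GLM-Edge repository.

```python
import sys

# Minimum interpreter version stated in the installation guide.
MIN_PYTHON = (3, 10)


def python_is_supported(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor) part of version_info is at least MIN_PYTHON."""
    return tuple(version_info[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    if python_is_supported():
        print(f"Python {current} OK; next: pip install -r requirements.txt")
    else:
        print(f"Python {current} is too old; GLM-Edge needs Python 3.10+")
```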

Model Inference

Three inference backends are supported: transformers, vLLM, and OpenVINO. Run the model with one of the following commands:

python cli_demo.py --backend transformers --model_path THUDM/glm-edge-1.5b-chat --precision bfloat16

python cli_demo.py --backend vllm --model_path THUDM/glm-edge-1.5b-chat --precision bfloat16

python cli_demo.py --backend ov --model_path THUDM/glm-edge-1.5b-chat-ov --precision int4
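For readers who would rather call the model from their own code, the transformers backend with bfloat16 precision can be sketched roughly as follows. This is an illustrative sketch, not the repository's cli_demo.py: it assumes a transformers release recent enough to load GLM-Edge, that the tokenizer ships a chat template, and that accelerate is installed for `device_map="auto"`. The helper names `build_messages` and `chat` are hypothetical.

```python
# Sketch of transformers-based inference; heavy imports happen inside chat()
# so build_messages() can be used without torch/transformers installed.

MODEL_PATH = "THUDM/glm-edge-1.5b-chat"


def build_messages(prompt: str, history=None) -> list:
    """Turn a prompt plus optional (user, assistant) pairs into chat messages."""
    messages = []
    for user_turn, assistant_turn in history or []:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": prompt})
    return messages


def chat(prompt: str, history=None, max_new_tokens: int = 256) -> str:
    """Load the model in bfloat16 and generate one reply (downloads weights on first use)."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_PATH, torch_dtype=torch.bfloat16, device_map="auto"  # needs accelerate
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt, history),
        add_generation_prompt=True,  # append the assistant prefix so the model answers
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `chat("Hello")` returns the decoded reply; passing `history=[("Hi", "Hello!")]` prepends earlier turns to the conversation.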

Note: models for the OpenVINO backend must be converted first. Run the conversion scripts from the relevant page (convert_chat.py for the chat models, convert.py for the vision models):

python convert_chat.py --model_path THUDM/glm-edge-1.5b-chat --precision int4

python convert.py --model_path THUDM/glm-edge-v-2b --precision int4

Fine-Tuning the Model

Code for fine-tuning the models is provided; see the fine-tuning tutorial for details. The basic steps are:

- Prepare the dataset and configure the training parameters.

- Run the fine-tuning script:

OMP_NUM_THREADS=1 torchrun --standalone --nnodes=1 --nproc_per_node=8 finetune.py data/AdvertiseGen/ THUDM/glm-edge-4b-chat configs/lora.yaml

- To resume fine-tuning from a saved checkpoint, add a fourth argument:

python finetune.py data/AdvertiseGen/ THUDM/glm-edge-4b-chat configs/lora.yaml yes
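The dataset-preparation step depends on the format the fine-tuning script expects, which the tutorial documents. As a sketch under an assumption: if it expects the conversation-style `messages` JSON-lines format used by THUDM's GLM-4 fine-tuning demo, raw AdvertiseGen records (`{"content": ..., "summary": ...}`) could be converted as below. The helper names and file paths are hypothetical.

```python
import json
from pathlib import Path


def to_messages(record: dict) -> dict:
    """Map one AdvertiseGen record ({"content", "summary"}) to a two-turn chat sample."""
    return {
        "messages": [
            {"role": "user", "content": record["content"]},
            {"role": "assistant", "content": record["summary"]},
        ]
    }


def convert_file(src: Path, dst: Path) -> int:
    """Convert a JSON-lines file record by record; return the number of samples written."""
    count = 0
    dst.parent.mkdir(parents=True, exist_ok=True)
    with src.open(encoding="utf-8") as fin, dst.open("w", encoding="utf-8") as fout:
        for line in fin:
            if not line.strip():
                continue  # skip blank lines
            sample = to_messages(json.loads(line))
            # ensure_ascii=False keeps Chinese text readable in the output file
            fout.write(json.dumps(sample, ensure_ascii=False) + "\n")
            count += 1
    return count
```

For example, `convert_file(Path("AdvertiseGen/train.json"), Path("data/AdvertiseGen/train.jsonl"))` writes one chat sample per input line; check the tutorial for the exact file names the config expects.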

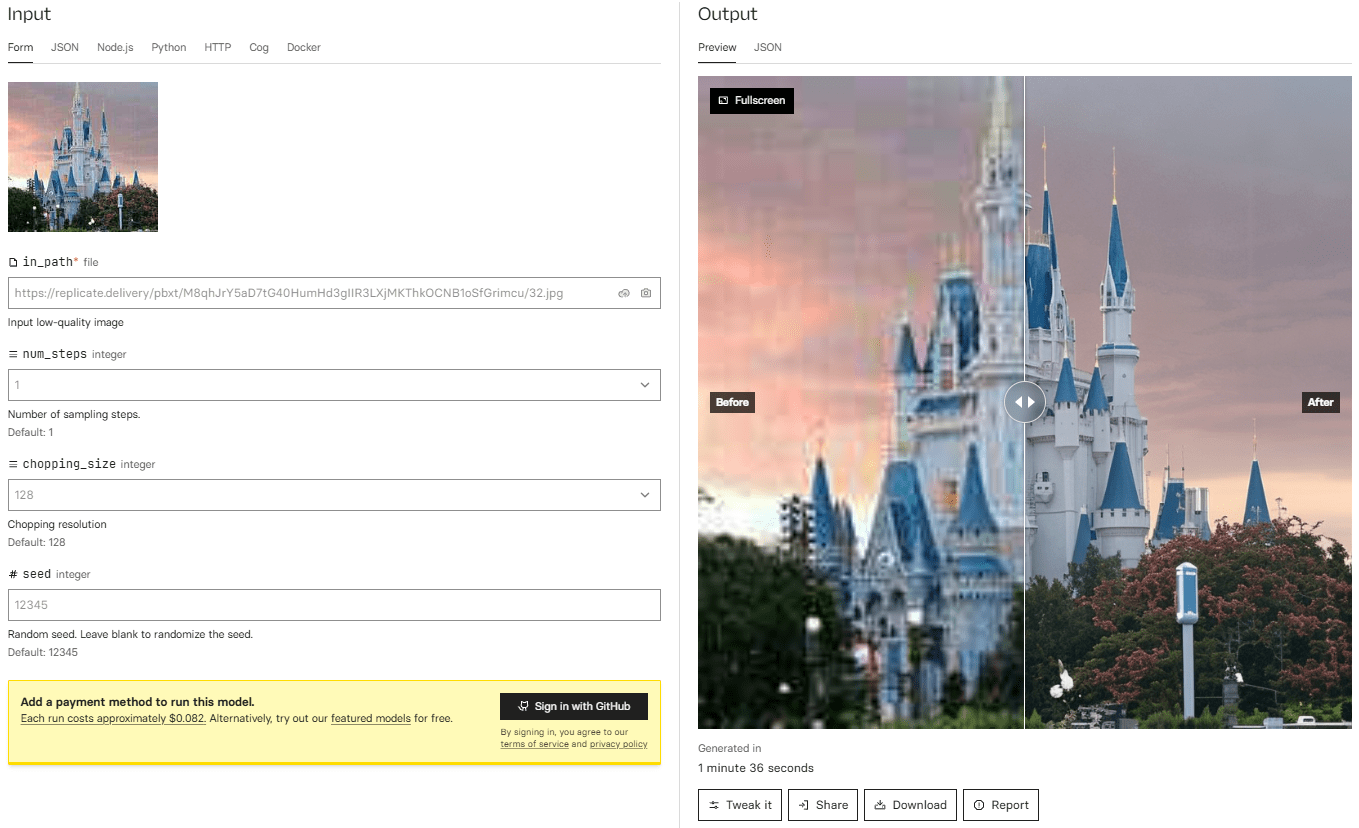

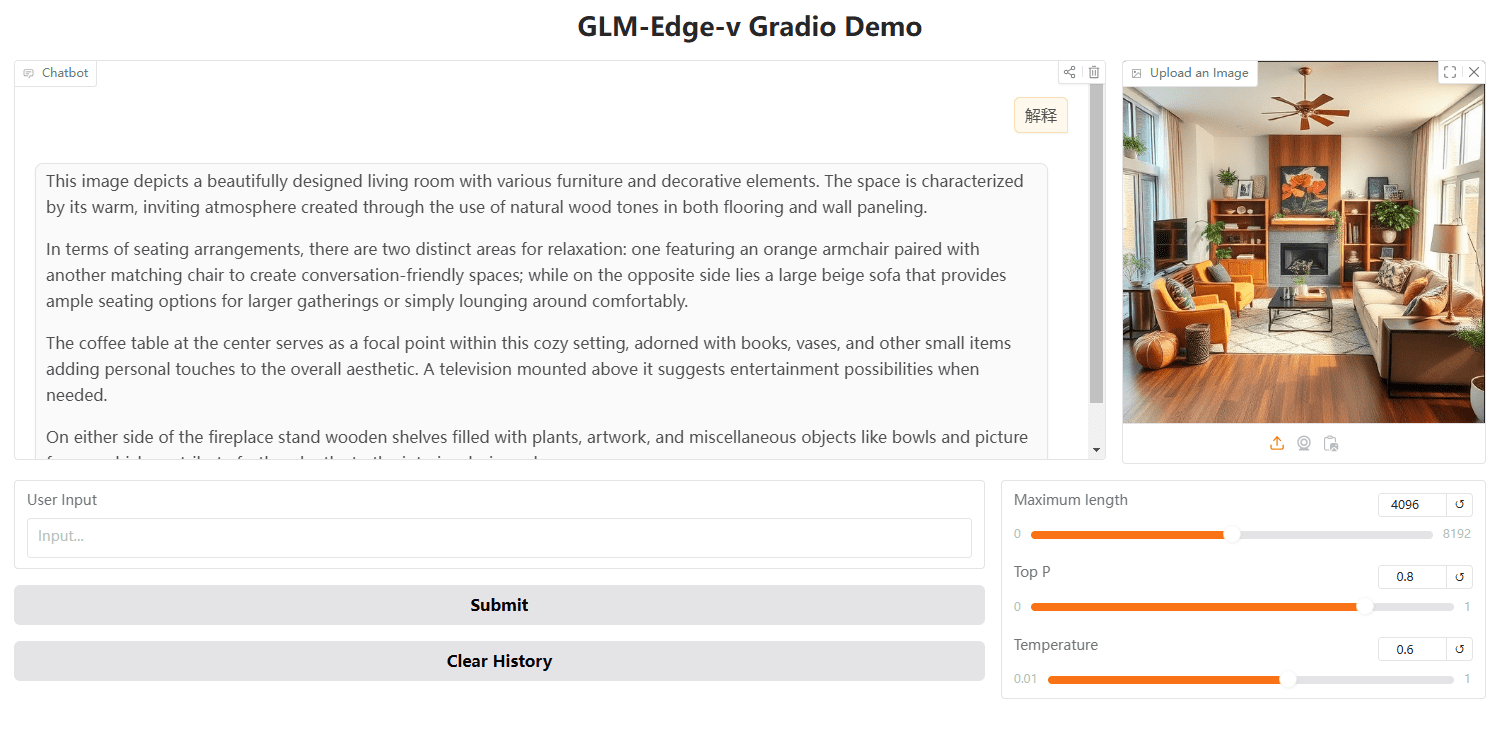

Launching the WebUI with Gradio

You can also launch a WebUI with Gradio:

python gradio_demo.py --backend transformers --model_path THUDM/glm-edge-1.5b-chat --precision bfloat16

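Inference with the OpenAI API

When GLM-Edge-V is served through Xinference, which exposes an OpenAI-compatible API, it can be queried with the openai Python client: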

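import openai

# Point the client at the Xinference server's OpenAI-compatible endpoint.
client = openai.Client(api_key="your_api_key", base_url="http://<XINFERENCE_HOST>:<XINFERENCE_PORT>/v1")

output = client.chat.completions.create(
    model="glm-edge-v",
    messages=[
        {
            # Text and image go in the same user message as separate content parts.
            "role": "user",
            "content": [
                {"type": "text", "text": "describe this image"},
                {"type": "image_url", "image_url": {"url": "img.png"}},
            ],
        }
    ],
    max_tokens=512,
    temperature=0.7,
)

print(output.choices[0].message.content)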
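The request passes a bare file name ("img.png") as the image URL. OpenAI-compatible vision endpoints generally expect an http(s) URL or a base64 data URL, so a local image can be inlined as sketched below. This assumes the Xinference server accepts data URLs; the helper names `image_to_data_url` and `image_message` are hypothetical.

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(path: str) -> str:
    """Read a local image and return it as a base64 data URL."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot infer an image MIME type for {path!r}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def image_message(prompt: str, path: str) -> dict:
    """Build one user message pairing a text prompt with a local image."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_to_data_url(path)}},
        ],
    }
```

The resulting dict can be used directly in the request, e.g. `messages=[image_message("describe this image", "img.png")]`.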

© AI Sharing Circle. All rights reserved; please do not reproduce without permission.
