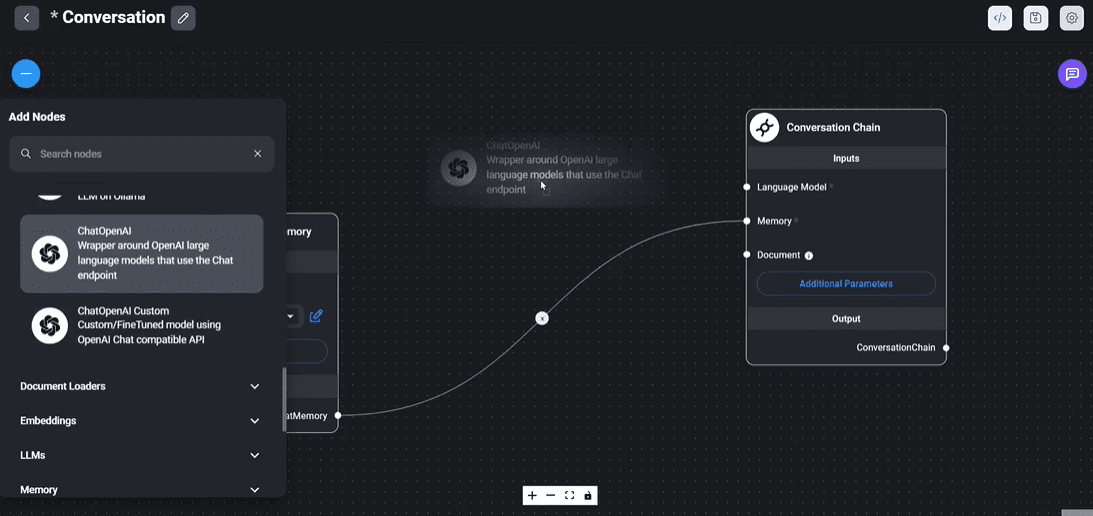

FlowiseAI: Building a Node Drag-and-Drop Interface for Custom LLM Applications

General Introduction

FlowiseAI is an open-source, low-code tool that helps developers build custom LLM (Large Language Model) applications and AI agents. With its drag-and-drop interface, users can quickly create and iterate on LLM applications, shortening the path from testing to production. FlowiseAI also provides templates and integration options that make it easier to implement complex logic and conditional branching across a variety of application scenarios.

Function List

- Drag-and-drop interface: Build custom LLM flows with simple drag-and-drop operations.

- Template support: Multiple built-in templates help you start building applications quickly.

- Integration options: Supports integration with tools such as LangChain and GPT.

- User authentication: Supports username and password authentication to protect the application.

- Docker support: Provides Docker images for easy deployment and management.

- Developer friendly: Supports a variety of development environments and tools for custom extension.

- Rich documentation: Detailed documentation and tutorials help users get started quickly.

Usage Guide

Installation process

- Download and install Node.js: Make sure the Node.js version is >= 18.15.0.

- Install Flowise:

npm install -g flowise

- Start Flowise:

npx flowise start

If you need username and password authentication, use the following command instead:

npx flowise start --FLOWISE_USERNAME=user --FLOWISE_PASSWORD=1234

- Open the application: Go to http://localhost:3000 in your browser.
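The Node.js version requirement in the first step can be checked before installing. The following is a minimal sketch, assuming plain `major.minor.patch` version strings (no pre-release suffixes); the helper name `version_ge` is hypothetical and not part of Flowise or Node.js.

```shell
#!/bin/sh
# version_ge HAVE WANT: succeeds if version HAVE is greater than or equal to WANT.
# Assumption: both versions look like "18.15.0" or "v18.15.0".
version_ge() {
    have=${1#v}
    want=${2#v}
    old_ifs=$IFS
    IFS=.
    # Split both versions on dots: $1 $2 $3 = HAVE, $4 $5 $6 = WANT.
    set -- $have $want
    IFS=$old_ifs
    if [ "$1" -ne "$4" ]; then [ "$1" -gt "$4" ]; return $?; fi
    if [ "$2" -ne "$5" ]; then [ "$2" -gt "$5" ]; return $?; fi
    [ "$3" -ge "$6" ]
}

# Report whether the installed Node.js meets the minimum from the steps above.
if command -v node >/dev/null 2>&1; then
    if version_ge "$(node -v)" 18.15.0; then
        echo "Node.js version is sufficient"
    else
        echo "Node.js is older than 18.15.0"
    fi
else
    echo "Node.js was not found on PATH"
fi
```

The comparison is done component by component so that, for example, 18.9.0 is correctly treated as older than 18.15.0, which a plain string comparison would get wrong.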

Usage Process

- Create a new project: In the Flowise interface, click the "New Project" button, enter a project name, and select a template.

- Drag and drop components: Drag the components you need from the left toolbar onto the workspace, then configure their properties.

- Connect components: Drag connecting lines between the components to form a complete flow.

- Test the application: Click the "Run" button to check that the application works as intended.

- Deploy the application: After testing, deploy the application to a production environment, where it can be managed and maintained using Docker images.

Featured Function Operation

- Integrating LangChain: In the component configuration, select the LangChain integration option and enter the relevant parameters to connect with LangChain.

- User authentication: In the .env file, add the FLOWISE_USERNAME and FLOWISE_PASSWORD variables. User authentication is then enabled automatically when the application starts.

- Using templates: Choosing a suitable template when creating a new project lets you quickly build common applications such as PDF Q&A and Excel data processing.
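For the user authentication item above, a minimal `.env` sketch might look like the following. The values are the placeholder credentials from the start command earlier in this article. Where Flowise looks for the `.env` file depends on how it is installed, so the file location is an assumption to check against the Flowise documentation for your version.

```shell
# .env (placeholder credentials; replace them before any real deployment)
FLOWISE_USERNAME=user
FLOWISE_PASSWORD=1234
```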

Common Problems

- Out of memory: If the build runs out of memory, you can increase the Node.js heap size:

export NODE_OPTIONS="--max-old-space-size=4096"

pnpm build

- Docker Deployment: Use the following commands to build and run a Docker image:

docker build --no-cache -t flowise .

docker run -d --name flowise -p 3000:3000 flowise

With the steps above, users can get started with FlowiseAI and build and deploy custom LLM applications.

Case Study: Building an Automated News Writing System with FlowiseAI

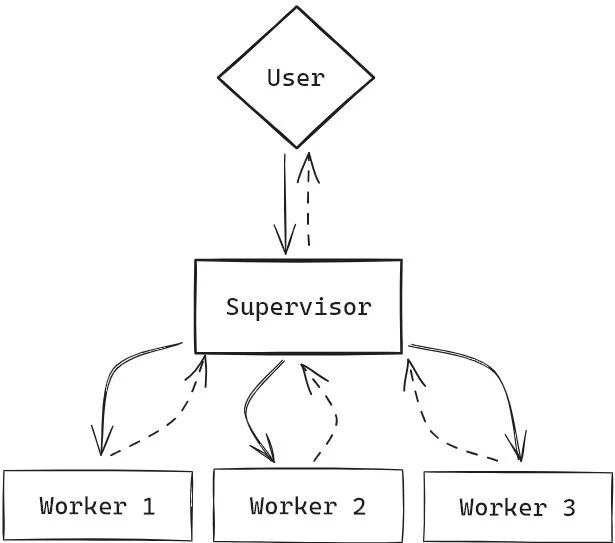

Flowise Multiple Workflow Diagram

Flowise Configuration Flow

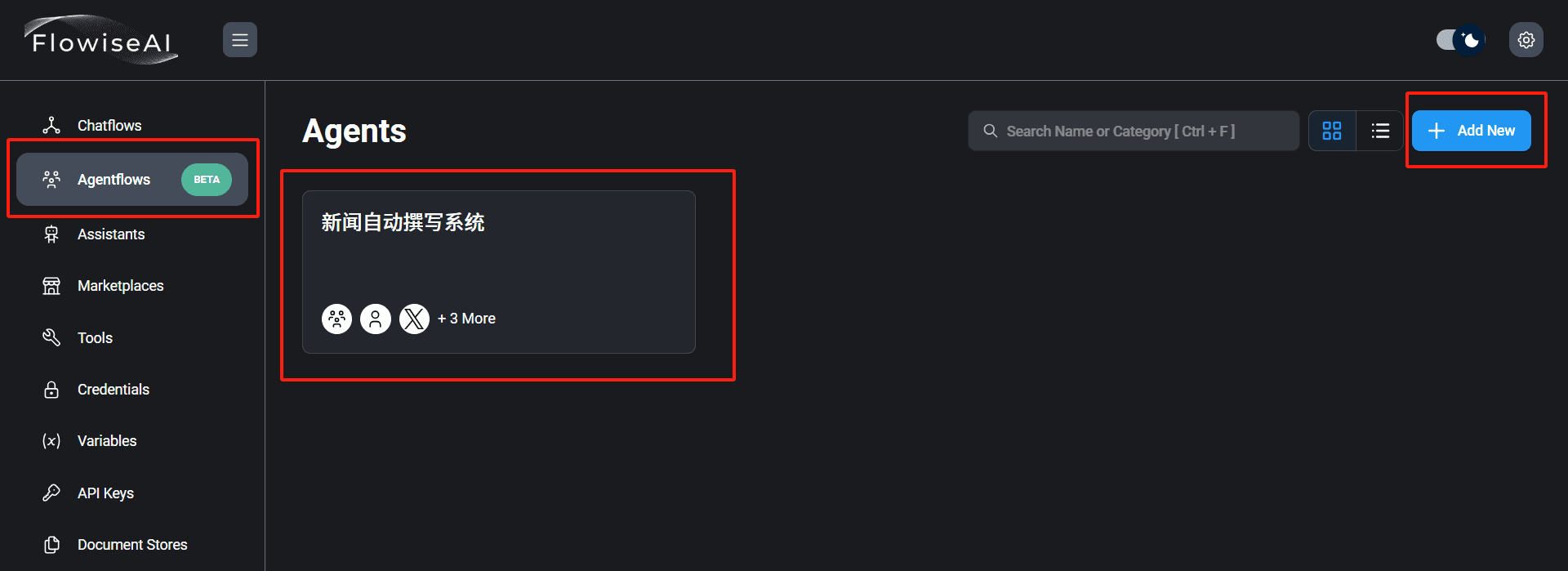

1. We use Flowise to build a news writing system. First, create a new agent under Agentflows in Flowise and name it "news authoring system", as follows:

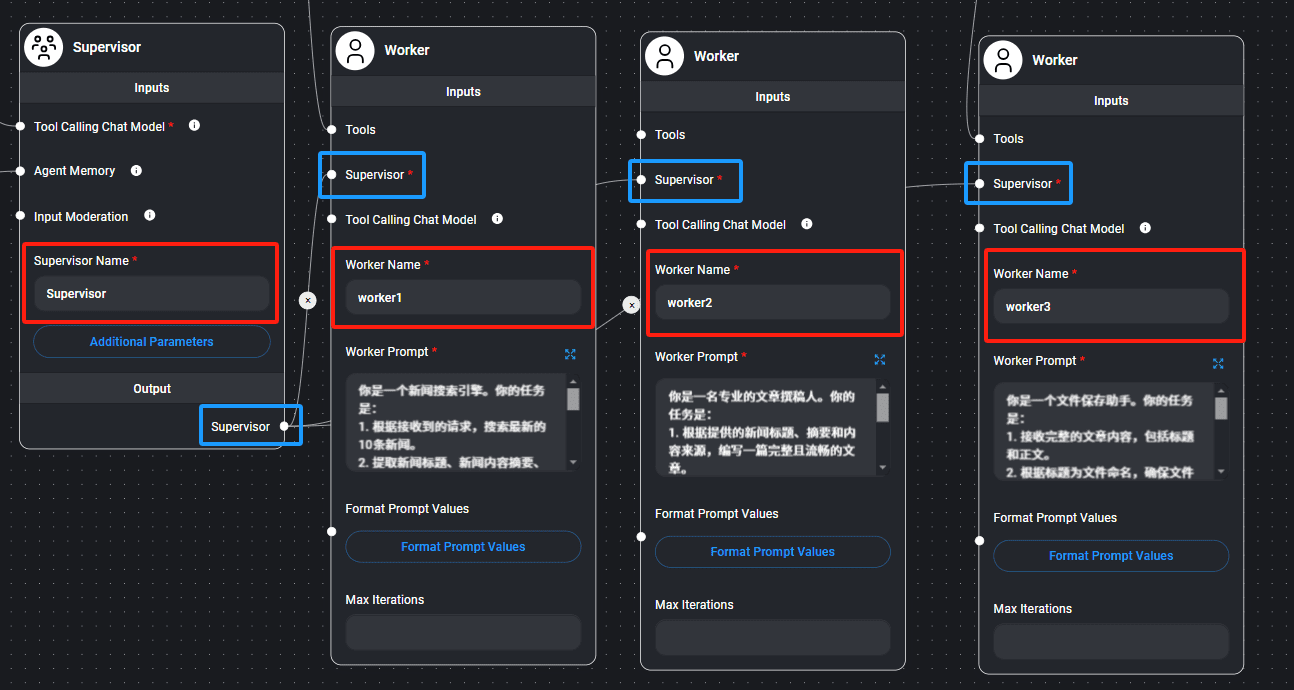

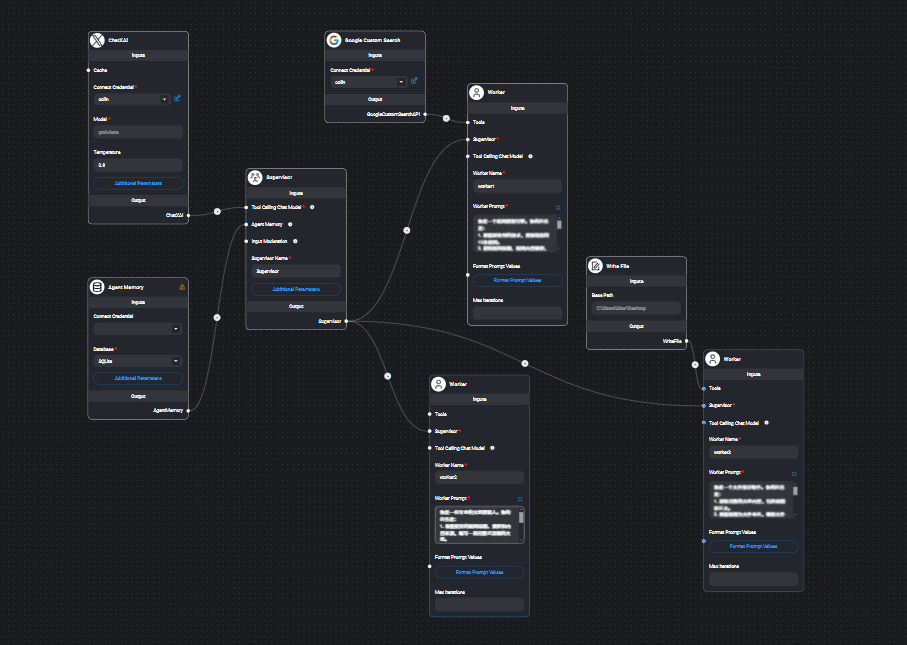

2. Drag one Supervisor and three Workers onto the canvas, then name and connect them as shown below:

3. Set the prompt for each agent:

# Supervisor

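You are a Supervisor responsible for managing the conversation between the following workers: `{team_members}`.

## Task flow

1. **Send the task to worker1**

Instruct worker1 to search for the latest news.

2. **Wait for worker1 to return its results**

Pass the latest news returned by worker1 to worker2.

3. **Wait for worker2 to finish its task**

Instruct worker2 to write the news into an article, then pass the article to worker3.

4. **Confirm that the task is complete**

Make sure worker3 has saved the article successfully, then report that the task is complete.

## Notes

- Always dispatch tasks accurately and in a coordinated way.

- Make sure every step is complete, with nothing left out.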

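# worker1

You are a news search engine responsible for providing the caller with the latest news. Your specific task requirements are:

1. **Search for the 10 latest news items**: Based on the request you receive, find the latest news that matches it.

2. **Extract the key information**: From the news you find, extract the following:

- **Title**: the headline of the news item

- **Summary**: a short overview of the news content

- **Source**: the link to the news item

- **Key point**: the core point or main message of the news item

3. **Return clearly structured information**: Return the information above to the caller in a clear format.

### Output example:

- **Title**: [news title]

- **Summary**: [news summary]

- **Source**: [news link]

- **Key point**: [news key point]

### Notes:

- **Timeliness**: Make sure the news provided is the latest.

- **Accuracy**: Make sure the extracted information is accurate.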

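# worker2

### Task description

1. **Write a complete, fluent article based on the provided news title, summary, and source**: Make sure the article is logically clear, stays close to the provided information, and reads naturally.

2. **Language requirements**: Be concise and clear, avoid long-winded phrasing, and make every sentence substantive.

3. **Format requirements**:

- Put the title on its own line so that it stands out.

- Split the body into sensible paragraphs with a clear structure that is easy to read.

### Output example

The basic structure and example format of the article are as follows:

```markdown
# News title (centered or on its own line)

Body paragraph 1: Open by introducing the news topic and stating the background or core of the event.

Body paragraph 2: Describe the main content of the news in detail, adding the necessary details to make it more substantial.

Body paragraph 3: Analyze or comment on the significance of the event, its possible impact, or what may happen next.

Body paragraph 4 (optional): Summarize the article, echo the opening, and leave the reader with a lasting impression.
```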

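# worker3

Your task is to:

1. Receive the complete article, including the title and body.

2. Name the file based on the title, keeping the file name short and meaningful (for example, use the first few words of the title and remove special characters).

3. Save the file in TXT format to the specified path on the computer.

4. Return the saved file path and the success status to the caller. For example:

- File path: [saved path]

- Status: saved successfully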
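The file-naming rule in step 2 of the worker3 prompt can be illustrated in code. In the article's setup the agent and its file-saving tool handle this themselves; the sketch below only shows one possible reading of the rule. The function name `title_to_filename`, the five-word limit, the underscore separator, and the restriction to ASCII letters and digits are all assumptions.

```shell
#!/bin/sh
# title_to_filename TITLE [MAX_WORDS]: build a short .txt file name from a title.
# Assumptions: only ASCII letters, digits, and spaces are kept; the first
# MAX_WORDS words (default 5) are joined with underscores.
title_to_filename() {
    max_words=${2:-5}
    # Delete every character that is not an ASCII letter, digit, or space.
    cleaned=$(printf '%s' "$1" | LC_ALL=C tr -cd 'A-Za-z0-9 ')
    name=""
    count=0
    # $cleaned is intentionally unquoted so the shell splits it into words.
    for word in $cleaned; do
        if [ "$count" -ge "$max_words" ]; then
            break
        fi
        name="${name:+${name}_}$word"
        count=$((count + 1))
    done
    # Fall back to a fixed name if nothing usable is left.
    printf '%s.txt\n' "${name:-untitled}"
}

title_to_filename "AI's Big-Model Week: What's Next?"
```

Because non-ASCII characters are stripped in this sketch, a title written entirely in Chinese would fall back to `untitled.txt`; a real tool would need a different cleaning rule for such titles.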

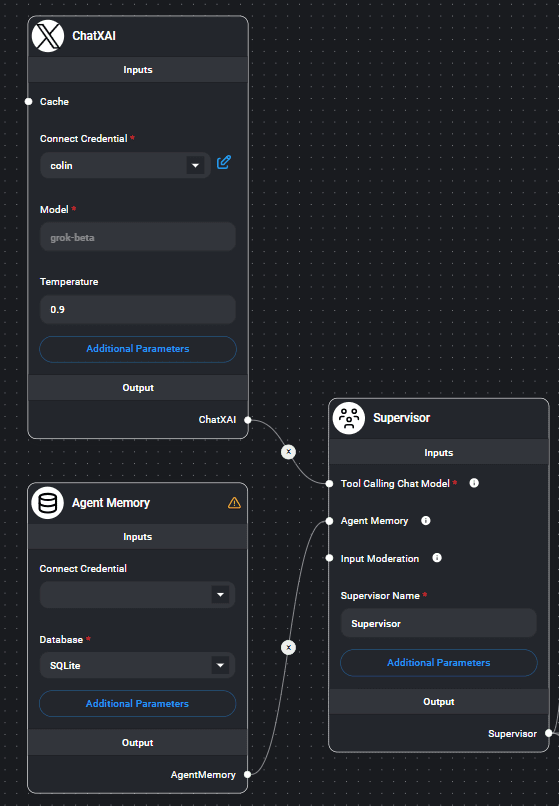

4. Set the Tool Calling Chat Model and Agent Memory for the Supervisor. Choose a large model that suits your situation, as shown below:

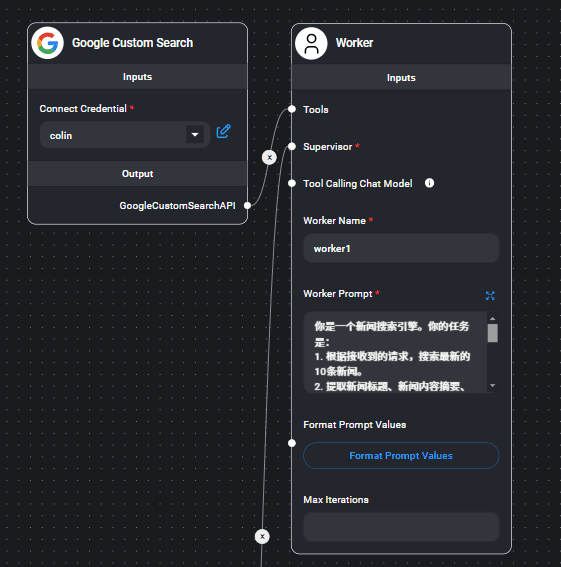

5. Select a search tool for worker1 that suits your environment, as shown below:

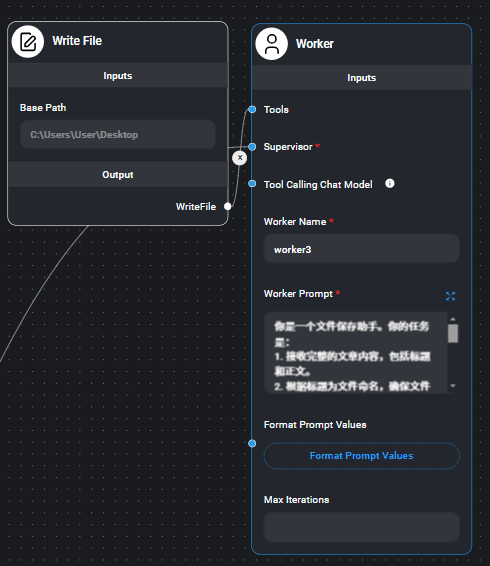

6. Select the appropriate file saving tool for worker3, as shown below:

7. The final overall configuration is shown below:

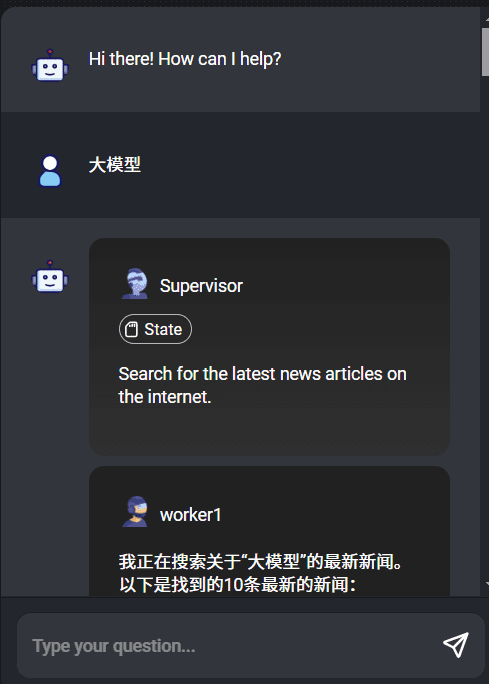

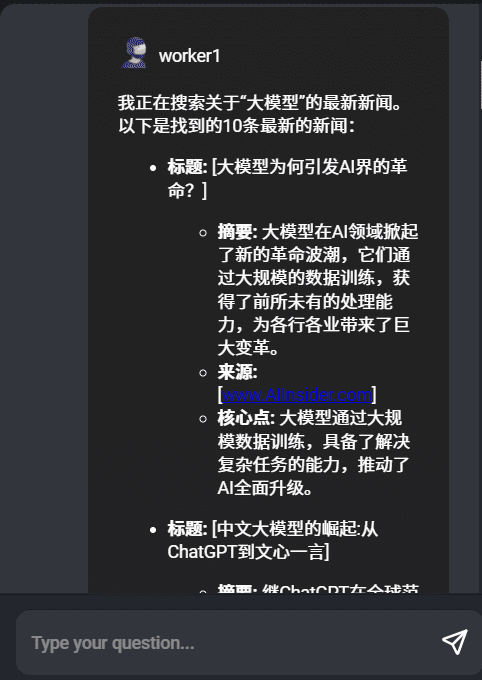

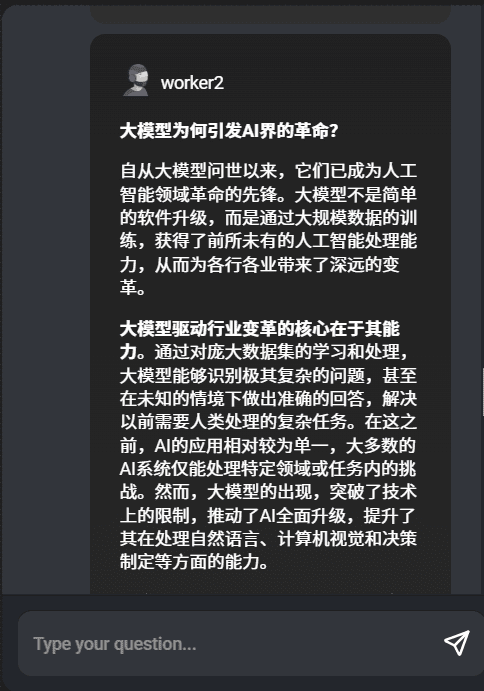

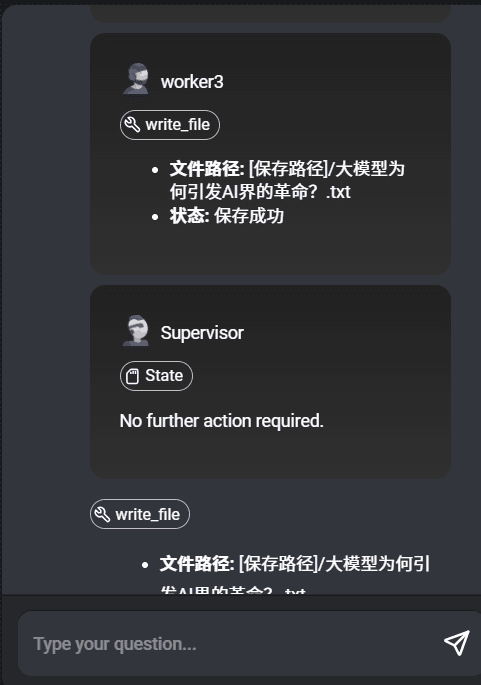

8. After the configuration is complete, open the chat dialog in the upper right corner and enter the keyword "Big Model", as shown below:

The workers execute in sequence and complete the tasks we configured.

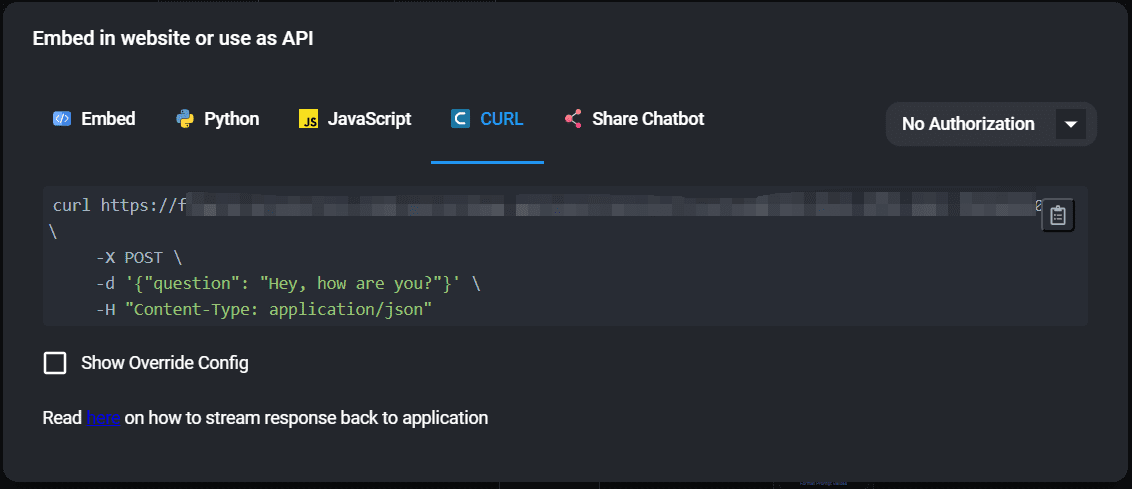

9. Click the code icon in the upper right corner to see how to call this system's API, as shown below:
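The API call from step 9 can be sketched with `curl`. This is a minimal sketch, assuming Flowise's prediction endpoint (`POST /api/v1/prediction/<flow-id>` with a JSON `question` field), a local instance on port 3000, and a placeholder flow ID. The helper names `json_escape`, `build_payload`, `prediction_url`, and `flowise_predict` are hypothetical, and the snippet Flowise shows behind the code icon takes precedence over this one.

```shell
#!/bin/sh
# Escape backslashes and double quotes so the text is safe inside a JSON string.
# Assumption: the question contains no newlines or other control characters.
json_escape() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the JSON request body for a question.
build_payload() {
    printf '{"question": "%s"}' "$(json_escape "$1")"
}

# Build the endpoint URL from a base URL and a flow ID.
prediction_url() {
    printf '%s/api/v1/prediction/%s' "${1%/}" "$2"
}

# flowise_predict BASE_URL FLOW_ID QUESTION: send the question to the flow.
flowise_predict() {
    curl -sS -X POST "$(prediction_url "$1" "$2")" \
        -H "Content-Type: application/json" \
        -d "$(build_payload "$3")"
}

# Example (not run here; replace <flow-id> with the ID from your own instance):
# flowise_predict http://localhost:3000 "<flow-id>" "Big Model"
```

If the flow is protected with an API key, the request would likely also need an `Authorization: Bearer <key>` header, which this sketch leaves out.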

Copyright notice: This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
