FireRedASR: An Open Source Model for Multilingual High-Precision Speech Recognition

General Introduction

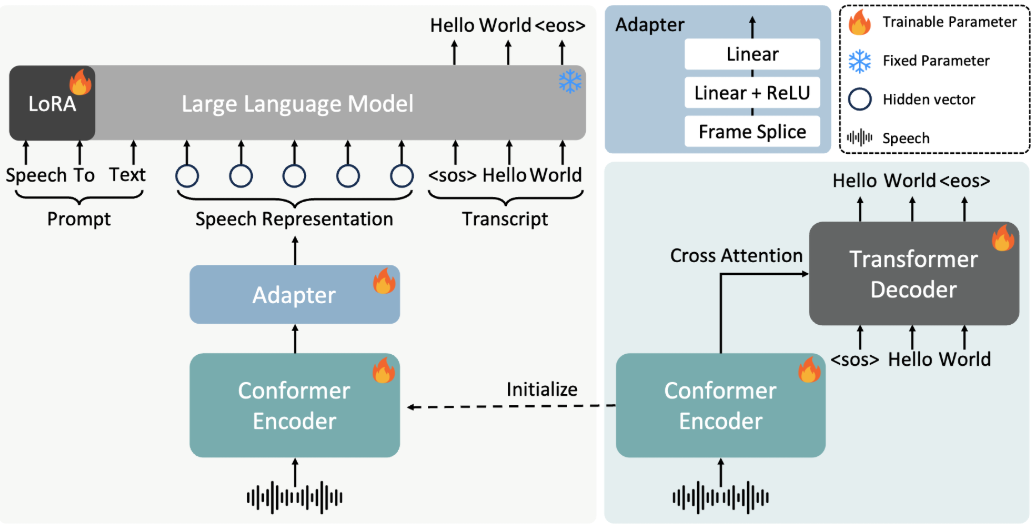

FireRedASR is a speech recognition model developed and open-sourced by the FireRed team at Xiaohongshu (Little Red Book). It provides high-precision, multilingual automatic speech recognition (ASR). Hosted on GitHub and aimed at developers and researchers, the project has an industrial-grade design and supports Mandarin, Chinese dialects, English, and lyrics recognition. FireRedASR comes in two versions: FireRedASR-LLM targets maximum accuracy for demanding professional use, while FireRedASR-AED balances accuracy and efficiency for real-time applications. As of 2025, the model holds a state-of-the-art result on public Mandarin benchmarks, with an average character error rate (CER) of 3.05%, and performs well across multiple test scenarios. This makes it suitable for intelligent assistants, video subtitle generation, and similar applications.
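The 3.05% figure is a character error rate: the number of character substitutions S, deletions D, and insertions I needed to turn the model's output into the reference transcript, divided by the number of reference characters N, i.e. CER = (S + D + I) / N. The minimal Python sketch below computes it with a standard Levenshtein edit distance. The function names are hypothetical, and real benchmark scoring usually also normalizes text (for example, stripping punctuation) and sums edits over the whole test set before dividing, which this sketch does not do.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum substitutions + deletions + insertions."""
    # prev[j] holds the distance between the previous prefix of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (free if characters match)
            )
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate = (S + D + I) / N, with N = len(ref)."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


# One wrong character in a six-character reference
print(f"{cer('今天天气很好', '今天天汽很好'):.4f}")  # → 0.1667
```

Read this way, a CER of 3.05% means roughly three character errors per hundred reference characters.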

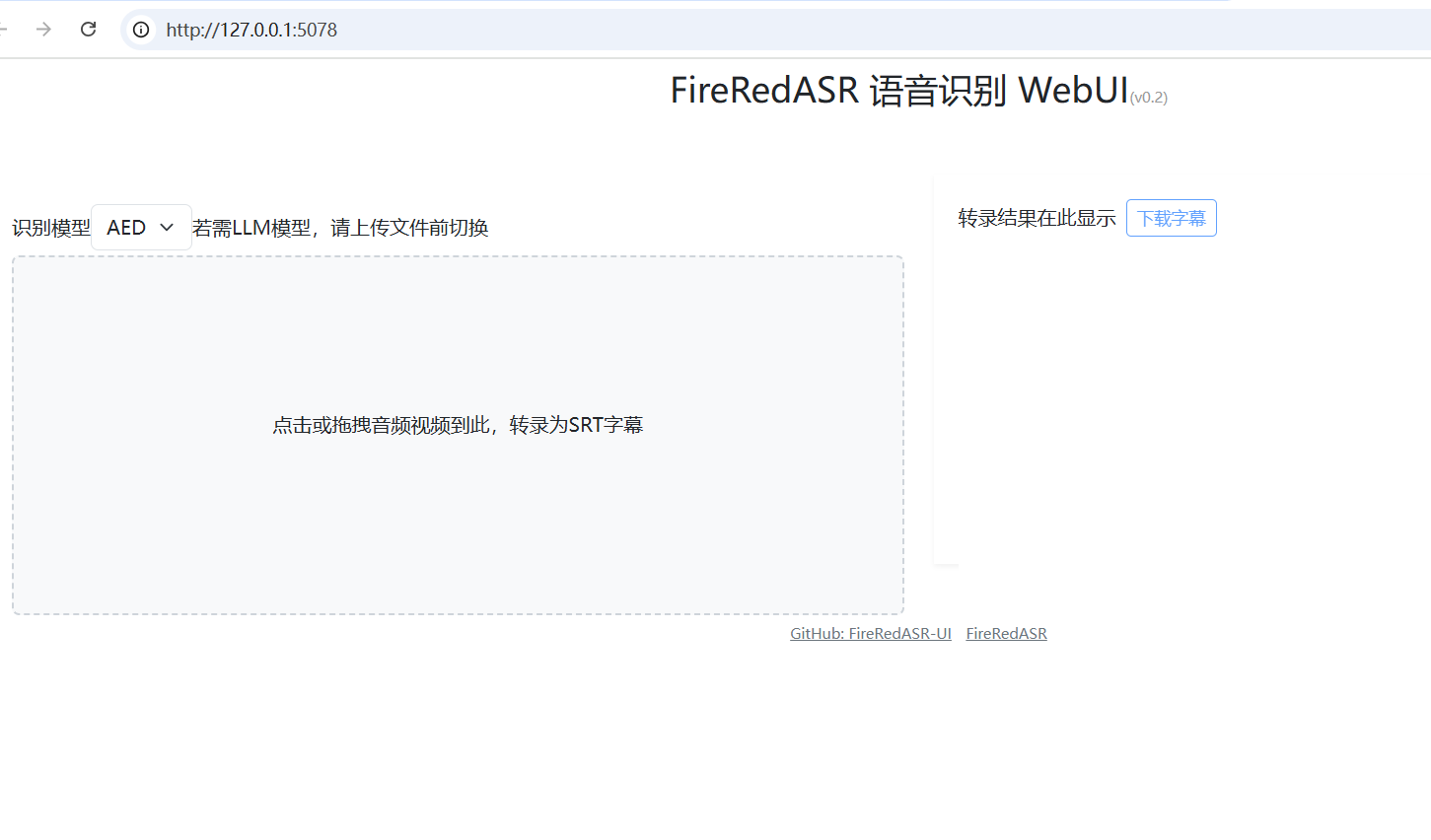

A one-click WebUI installer for FireRedASR is available at: https://github.com/jianchang512/fireredasr-ui

Function List

- Supports speech-to-text in Mandarin, Chinese dialects and English, with industry-leading recognition accuracy.

- Provides lyrics recognition, especially suitable for multimedia content processing.

- Two versions, FireRedASR-LLM and FireRedASR-AED, are included to fulfill the needs of high-precision and high-efficiency inference, respectively.

- Open-source model weights and inference code support secondary development by the community and custom applications.

- Handles a wide range of audio input scenarios, such as short videos, live streaming, and voice input.

- Supports batch audio processing, suitable for large-scale data transcription tasks.

User Guide

Installation process

FireRedASR requires a configured development environment. The installation steps are as follows:

1. Clone the Project Repository

Open a terminal and enter the following command to clone the FireRedASR project locally:

git clone https://github.com/FireRedTeam/FireRedASR.git

When finished, change into the project directory:

cd FireRedASR

2. Create a Python Environment

It is recommended to create a separate Python environment using Conda to ensure dependency isolation. Run the following command:

conda create --name fireredasr python=3.10

Activate the environment:

conda activate fireredasr

3. Install Dependencies

The project provides a requirements.txt file that lists all required dependencies. Install them with:

pip install -r requirements.txt

Wait for the installation to finish. A stable network connection is required; depending on your region, you may need a proxy or a package mirror to speed up downloads.

4. Download the Pre-trained Models

- FireRedASR-AED-L: Download the pre-trained model from GitHub or Hugging Face and place it in the pretrained_models/FireRedASR-AED-L folder.

- FireRedASR-LLM-L: In addition to downloading the model into pretrained_models/FireRedASR-LLM-L, download the Qwen2-7B-Instruct model into the pretrained_models folder. Then, from inside the FireRedASR-LLM-L directory, create a soft link:

ln -s ../Qwen2-7B-Instruct

5. Verify the Installation

Run the following command to check if the installation was successful:

python speech2text.py --help

If a help message is displayed, the environment is configured correctly.
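Because FireRedASR-LLM-L depends on the Qwen2-7B-Instruct soft link, it can help to check the folder layout before the first run. The following is a minimal sketch, assuming the directory names used in this guide; `check_model_layout` is a hypothetical helper, not part of FireRedASR, and it only checks that the folders and the link exist, not that the model files inside them are complete.

```python
from pathlib import Path


def check_model_layout(root: str = "pretrained_models", asr_types: tuple = ("aed",)) -> list:
    """Return a list of layout problems under `root`; an empty list means OK."""
    base = Path(root)
    problems = []
    if "aed" in asr_types:
        aed_dir = base / "FireRedASR-AED-L"
        if not aed_dir.is_dir():
            problems.append(f"missing directory: {aed_dir}")
    if "llm" in asr_types:
        llm_dir = base / "FireRedASR-LLM-L"
        qwen_dir = base / "Qwen2-7B-Instruct"
        link = llm_dir / "Qwen2-7B-Instruct"
        for d in (llm_dir, qwen_dir):
            if not d.is_dir():
                problems.append(f"missing directory: {d}")
        # Created by running `ln -s ../Qwen2-7B-Instruct` inside FireRedASR-LLM-L
        if not link.is_symlink():
            problems.append(f"missing soft link: {link}")
        elif not link.resolve().is_dir():
            problems.append(f"broken soft link: {link}")
    return problems
```

Running `check_model_layout(asr_types=("aed", "llm"))` from the project root returns an empty list when both models and the soft link are in place; otherwise each entry names the missing piece.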

How to use

FireRedASR can be used in two ways: from the command line or through the Python API. The main workflows are described below.

Command-line usage

- Single file transcription (AED model)

Use FireRedASR-AED-L to process audio files (up to 60 seconds):

python speech2text.py --wav_path examples/wav/BAC009S0764W0121.wav --asr_type "aed" --model_dir pretrained_models/FireRedASR-AED-L

- --wav_path: the path to the audio file.

- --asr_type: the model type, in this case "aed".

- --model_dir: the model folder.

The transcribed text is printed to the terminal.

- Single file transcription (LLM model)

Use FireRedASR-LLM-L to process audio (up to 30 seconds):

python speech2text.py --wav_path examples/wav/BAC009S0764W0121.wav --asr_type "llm" --model_dir pretrained_models/FireRedASR-LLM-L

The parameters have the same meaning as above, and the output is the transcribed text.
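To script the command-line calls above, for example from a larger Python pipeline, the commands can be built as argument lists and run with `subprocess`. This is a sketch under stated assumptions: `build_command`, `run_transcription`, and `MODEL_DIRS` are hypothetical names, the sketch uses only the three flags shown above, and it assumes `speech2text.py` is run from the same working directory as in those commands. The exact format of the printed output is not documented here, so the sketch returns the raw stdout.

```python
import subprocess
import sys

# Hypothetical mapping; folder names follow the commands above.
MODEL_DIRS = {
    "aed": "pretrained_models/FireRedASR-AED-L",
    "llm": "pretrained_models/FireRedASR-LLM-L",
}


def build_command(wav_path: str, asr_type: str, python: str = sys.executable) -> list:
    """Build the speech2text.py argument list for one audio file."""
    if asr_type not in MODEL_DIRS:
        raise ValueError(f"asr_type must be one of {sorted(MODEL_DIRS)}, got {asr_type!r}")
    return [
        python, "speech2text.py",
        "--wav_path", wav_path,
        "--asr_type", asr_type,
        "--model_dir", MODEL_DIRS[asr_type],
    ]


def run_transcription(wav_path: str, asr_type: str = "aed") -> str:
    """Run speech2text.py and return its raw stdout (raises on a non-zero exit)."""
    completed = subprocess.run(
        build_command(wav_path, asr_type),
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout
```

For example, `run_transcription("examples/wav/BAC009S0764W0121.wav", "llm")` would run the LLM command shown above. Passing a list instead of a single shell string avoids quoting problems with paths that contain spaces.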

Python API Operations

- Load model and transcribe

Call the FireRedASR model in a Python script:

```python
from fireredasr.models.fireredasr import FireRedAsr

# Initialize the AED model
model = FireRedAsr.from_pretrained("aed", "pretrained_models/FireRedASR-AED-L")

# One utterance ID per audio file, in the same order
batch_uttid = ["BAC009S0764W0121"]
batch_wav_path = ["examples/wav/BAC009S0764W0121.wav"]

# Decoding options: GPU on, beam width 3, return the 1-best hypothesis
results = model.transcribe(
    batch_uttid,
    batch_wav_path,
    {"use_gpu": 1, "beam_size": 3, "nbest": 1, "decode_max_len": 0},
)
print(results)
```

- from_pretrained: loads the specified model type ("aed" or "llm") from the given model directory.

- transcribe: runs transcription on a batch of utterances and returns a list of results, one per input file.

- Adjusting parameters to optimize results

- use_gpu: Set to 1 to use GPU acceleration, 0 to use CPU.

- beam_size: the beam width used in beam search; larger values can improve accuracy but take longer. The example above uses 3.

- nbest: the number of best hypotheses to return; default 1.
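The API takes parallel lists of utterance IDs and audio paths. For batch transcription of a whole folder, a sketch like the following can build those lists in fixed-size chunks. It assumes, as in the example above, that the utterance ID is simply the file name without its extension; `collect_batches` is a hypothetical helper rather than part of FireRedASR.

```python
from pathlib import Path


def collect_batches(wav_dir: str, batch_size: int = 8) -> list:
    """Split every .wav file in wav_dir into (batch_uttid, batch_wav_path) chunks."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    wav_paths = sorted(Path(wav_dir).glob("*.wav"))
    batches = []
    for start in range(0, len(wav_paths), batch_size):
        chunk = wav_paths[start:start + batch_size]
        # Utterance ID = file name without extension, as in the example above
        batches.append(([p.stem for p in chunk], [str(p) for p in chunk]))
    return batches
```

Each `(batch_uttid, batch_wav_path)` pair can then be passed to `model.transcribe` with the same options dictionary as above.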

Featured Functions

- Lyrics recognition

FireRedASR-LLM excels at lyrics recognition. Feed in the song audio (keep it to 30 seconds or less) and run:

python speech2text.py --wav_path your_song.wav --asr_type "llm" --model_dir pretrained_models/FireRedASR-LLM-L

The output is the song's lyrics as text, with industry-leading recognition accuracy.

- Multi-language support

For dialect or English audio, use the same commands or API directly; the model handles these inputs without extra configuration. For example, to process English audio:

```python
from fireredasr.models.fireredasr import FireRedAsr

model = FireRedAsr.from_pretrained("llm", "pretrained_models/FireRedASR-LLM-L")
results = model.transcribe(["english_audio"], ["path/to/english.wav"], {"use_gpu": 1})
print(results)
```
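Since the lyrics example, like all FireRedASR-LLM input, must stay within 30 seconds, a full-length song has to be cut into shorter pieces first. The sketch below does this with Python's standard `wave` module, assuming an uncompressed PCM WAV input. `split_wav` is a hypothetical helper, and its fixed-length cuts can split a word or lyric line across two segments, so a silence-based splitter would likely give better results.

```python
import wave
from pathlib import Path


def split_wav(wav_path: str, out_dir: str, max_seconds: float = 30.0) -> list:
    """Cut a PCM WAV file into consecutive segments of at most max_seconds.

    Segments keep the source's channels, sample width, and sample rate and are
    written as <stem>_000.wav, <stem>_001.wav, ... in out_dir.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    stem = Path(wav_path).stem
    segment_paths = []
    with wave.open(str(wav_path), "rb") as src:
        params = src.getparams()
        frames_per_segment = int(max_seconds * params.framerate)
        if frames_per_segment < 1:
            raise ValueError("max_seconds is too small for this sample rate")
        index = 0
        while True:
            frames = src.readframes(frames_per_segment)
            if not frames:  # end of file
                break
            seg_path = out / f"{stem}_{index:03d}.wav"
            with wave.open(str(seg_path), "wb") as dst:
                # Copy the format; the frame count in the header is
                # rewritten to match the data actually written.
                dst.setparams(params)
                dst.writeframes(frames)
            segment_paths.append(str(seg_path))
            index += 1
    return segment_paths
```

Each resulting segment can then be transcribed with the lyrics command above, or passed as a batch to `model.transcribe`.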

Caveats

- Audio length limits: FireRedASR-AED supports audio of up to 60 seconds; longer input may cause hallucinations. FireRedASR-LLM supports up to 30 seconds; its behavior on longer input is undefined.

- Batch processing: keep the audio lengths within a batch similar to avoid degraded results.

- Hardware requirements: a GPU is recommended for running the large models; CPU inference may be considerably slower.
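The first two caveats can be enforced before transcription. The minimal sketch below assumes PCM WAV input readable by Python's `wave` module. It measures each file's duration, sets aside files over the limit for the chosen model (60 s for AED, 30 s for LLM, as stated above), and groups the remaining files into batches of similar length by sorting on duration. `MAX_SECONDS`, `wav_duration`, and `length_sorted_batches` are hypothetical names.

```python
import wave

# Length limits from the caveats above, in seconds.
MAX_SECONDS = {"aed": 60.0, "llm": 30.0}


def wav_duration(path: str) -> float:
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as w:
        return w.getnframes() / w.getframerate()


def length_sorted_batches(paths: list, asr_type: str, batch_size: int = 8):
    """Split paths into (batches, too_long).

    too_long: files longer than the model's limit, in input order.
    batches: the remaining files sorted by duration and cut into
    consecutive groups of at most batch_size, so each batch holds
    files of similar length.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    limit = MAX_SECONDS[asr_type]
    durations = {p: wav_duration(p) for p in paths}
    too_long = [p for p in paths if durations[p] > limit]
    fits = sorted((p for p in paths if durations[p] <= limit), key=durations.get)
    batches = [fits[i:i + batch_size] for i in range(0, len(fits), batch_size)]
    return batches, too_long
```

Sorting by duration before slicing keeps the lengths within each batch close together. Files in `too_long` can be split into shorter segments first, or transcribed with the AED model if they fit its 60-second limit.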

By following the steps above, users can quickly get started with FireRedASR and go from installation to transcription across a variety of speech recognition scenarios.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
