Deploying a Visual Chat Interface for Ollama with FastAPI

I. Directory structure

Layout of the `C6` folder in the repository's `notebook` directory:

```
fastapi_chat_app/
│
├── app.py
├── websocket_handler.py
├── static/
│   └── index.html
└── requirements.txt
```

- `app.py`: main setup and routing of the FastAPI application.
- `websocket_handler.py`: handles WebSocket connections and the message flow.
- `static/index.html`: the HTML front-end page.
- `requirements.txt`: required dependencies, installed via `pip install -r requirements.txt`.

II. Cloning the repository

git clone https://github.com/AXYZdong/handy-ollama

III. Installing dependencies

pip install -r requirements.txt

IV. Core code

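The core code in `app.py` is shown below, together with the `FastAPI()` instance and route decorator it needs to run on its own. The `/ws` path is an assumption and must match the URL that `static/index.html` connects to.

```python
import ollama
from fastapi import FastAPI, WebSocket

app = FastAPI()  # the object that `uvicorn app:app` loads


@app.websocket("/ws")  # route path assumed; it must match the URL used by static/index.html
async def websocket_endpoint(websocket: WebSocket):
    await websocket.accept()  # Accept the WebSocket connection
    user_input = await websocket.receive_text()  # Receive the user's text message

    stream = ollama.chat(  # Chat with the specified model through the ollama library
        model='llama3.1',  # Use the llama3.1 model
        messages=[{'role': 'user', 'content': user_input}],  # Pass in the user's message
        stream=True,  # Enable streaming
    )

    try:
        for chunk in stream:  # Iterate over the streamed results
            model_output = chunk['message']['content']  # Extract the model's output text
            await websocket.send_text(model_output)  # Send the output over the WebSocket
    except Exception as e:  # Catch any exception
        await websocket.send_text(f"Error: {e}")  # Send the error message over the WebSocket
    finally:
        await websocket.close()  # Close the WebSocket connection
```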

Accept the WebSocket connection:

`await websocket.accept()`: the function first accepts the client's WebSocket connection request, establishing a communication channel with it.

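Receive user input:

`user_input = await websocket.receive_text()`: receives a text message from the client over the WebSocket; this is the user's input. Only one message is read per connection, because the connection is closed once the reply has been sent.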

Start the chat stream:

`stream = ollama.chat(...)`: calls the `chat` function of the ollama library with the `llama3.1` model, passing the user's input as a message. `stream=True` enables streaming, so the reply is fetched incrementally as the model generates it rather than all at once. The model must already be available to the local Ollama server (for example via `ollama pull llama3.1`).
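With `stream=True`, the call yields a sequence of partial responses instead of one finished reply. The sketch below uses hand-written stand-in chunks (not real model output), shaped the way the handler indexes them (`chunk['message']['content']`), to show how the fragments add up to the full reply. `collect_reply` is a hypothetical helper, not part of the ollama library.

```python
from typing import Any, Iterable, Mapping


def collect_reply(stream: Iterable[Mapping[str, Any]]) -> str:
    """Concatenate the text fragments carried by streamed chat chunks.

    Hypothetical helper: each chunk is assumed to expose its text at
    chunk['message']['content'], the same access pattern used in app.py.
    """
    parts = []
    for chunk in stream:
        parts.append(chunk['message']['content'])  # one text fragment per chunk
    return ''.join(parts)


# Stand-in chunks for illustration only; a real stream comes from ollama.chat(..., stream=True).
fake_stream = [
    {'message': {'role': 'assistant', 'content': 'Hello'}},
    {'message': {'role': 'assistant', 'content': ', '}},
    {'message': {'role': 'assistant', 'content': 'world!'}},
]

print(collect_reply(fake_stream))  # prints "Hello, world!"
```

The WebSocket handler does not join the fragments itself; it forwards each one as it arrives, and the browser page is left to append them.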

Process the model output:

- `for chunk in stream`: iterate over the chunks streamed back from the model.
- `model_output = chunk['message']['content']`: extract the generated text from each chunk.
- `await websocket.send_text(model_output)`: send each piece back to the client over the WebSocket as soon as it arrives, so the reply appears in real time.
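One caveat with this loop: `ollama.chat` is synchronous, so iterating its stream inside an `async def` endpoint blocks the event loop while each chunk is produced, which can stall other connections. The ollama Python package also offers an `AsyncClient` for async code. As a standard-library alternative, the sketch below pulls each item from a blocking iterator in a worker thread with `asyncio.to_thread` (Python 3.9+). The helper name `iterate_in_thread` is hypothetical, and a plain list stands in for the model stream.

```python
import asyncio
from typing import AsyncIterator, Iterable, TypeVar

T = TypeVar("T")
_DONE = object()  # sentinel returned by next() once the iterator is exhausted


async def iterate_in_thread(sync_iterable: Iterable[T]) -> AsyncIterator[T]:
    """Yield items from a blocking iterator without blocking the event loop.

    Each next() call runs in a worker thread via asyncio.to_thread, so other
    coroutines (e.g. other WebSocket sessions) keep running while we wait.
    """
    iterator = iter(sync_iterable)
    while True:
        item = await asyncio.to_thread(next, iterator, _DONE)
        if item is _DONE:
            break
        yield item


async def demo() -> list:
    # Stand-in for ollama.chat(..., stream=True); real chunks come from the model.
    fake_stream = [{'message': {'content': c}} for c in ("Hi", " there")]
    received = []
    async for chunk in iterate_in_thread(fake_stream):
        received.append(chunk['message']['content'])  # app.py would call send_text here
    return received


print(asyncio.run(demo()))  # prints ['Hi', ' there']
```

In the endpoint, the loop would become `async for chunk in iterate_in_thread(stream): ...`; an exception raised by the stream still propagates out of `await asyncio.to_thread(...)`, so the existing `except` and `finally` blocks keep working.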

Handle exceptions:

`except Exception as e`: if any exception occurs during processing (for example a network problem or a model error), it is caught and an error message is sent over the WebSocket to inform the client.

Close the WebSocket connection:

`finally`: whether or not an exception occurred, the WebSocket connection is always closed, freeing resources and ending the session.
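Because the handler only touches four WebSocket methods (`accept`, `receive_text`, `send_text`, `close`) and one chat call, its control flow can be checked without FastAPI or a running Ollama server. The sketch below is a hypothetical test harness, not code from the repository: `handle_chat` follows the same steps as `websocket_endpoint` but takes the chat function as a parameter, and `FakeWebSocket`, `fake_chat`, and `broken_chat` are stand-ins invented for illustration.

```python
import asyncio
from typing import Any, Callable, Iterable, List


class FakeWebSocket:
    """Hypothetical stand-in that records what the handler does."""

    def __init__(self, incoming: str) -> None:
        self.incoming = incoming
        self.sent: List[str] = []
        self.accepted = False
        self.closed = False

    async def accept(self) -> None:
        self.accepted = True

    async def receive_text(self) -> str:
        return self.incoming

    async def send_text(self, text: str) -> None:
        self.sent.append(text)

    async def close(self) -> None:
        self.closed = True


async def handle_chat(websocket: Any, chat: Callable[..., Iterable[dict]]) -> None:
    """Same steps as websocket_endpoint, with the chat function injected."""
    await websocket.accept()
    user_input = await websocket.receive_text()
    try:
        stream = chat(model='llama3.1',
                      messages=[{'role': 'user', 'content': user_input}],
                      stream=True)
        for chunk in stream:
            await websocket.send_text(chunk['message']['content'])
    except Exception as e:
        await websocket.send_text(f"Error: {e}")
    finally:
        await websocket.close()  # runs on both the success and the error path


def fake_chat(model: str, messages: list, stream: bool):
    # Echoes the user's message back in two chunks.
    yield {'message': {'content': 'You said: '}}
    yield {'message': {'content': messages[-1]['content']}}


def broken_chat(model: str, messages: list, stream: bool):
    # Simulates a failure partway through the stream.
    yield {'message': {'content': 'partial'}}
    raise RuntimeError("model not found")


ok = FakeWebSocket("hi")
asyncio.run(handle_chat(ok, fake_chat))
print(ok.sent, ok.closed)    # prints ['You said: ', 'hi'] True

bad = FakeWebSocket("hi")
asyncio.run(handle_chat(bad, broken_chat))
print(bad.sent, bad.closed)  # prints ['partial', 'Error: model not found'] True
```

The second run shows that a client may receive part of a reply before the error message, and that the connection is closed either way.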

V. Running the app

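- Change into the project directory (`fastapi_chat_app/`).
- Start the application defined in `app.py` with Uvicorn (`--reload` restarts the server automatically when the code changes):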

uvicorn app:app --reload

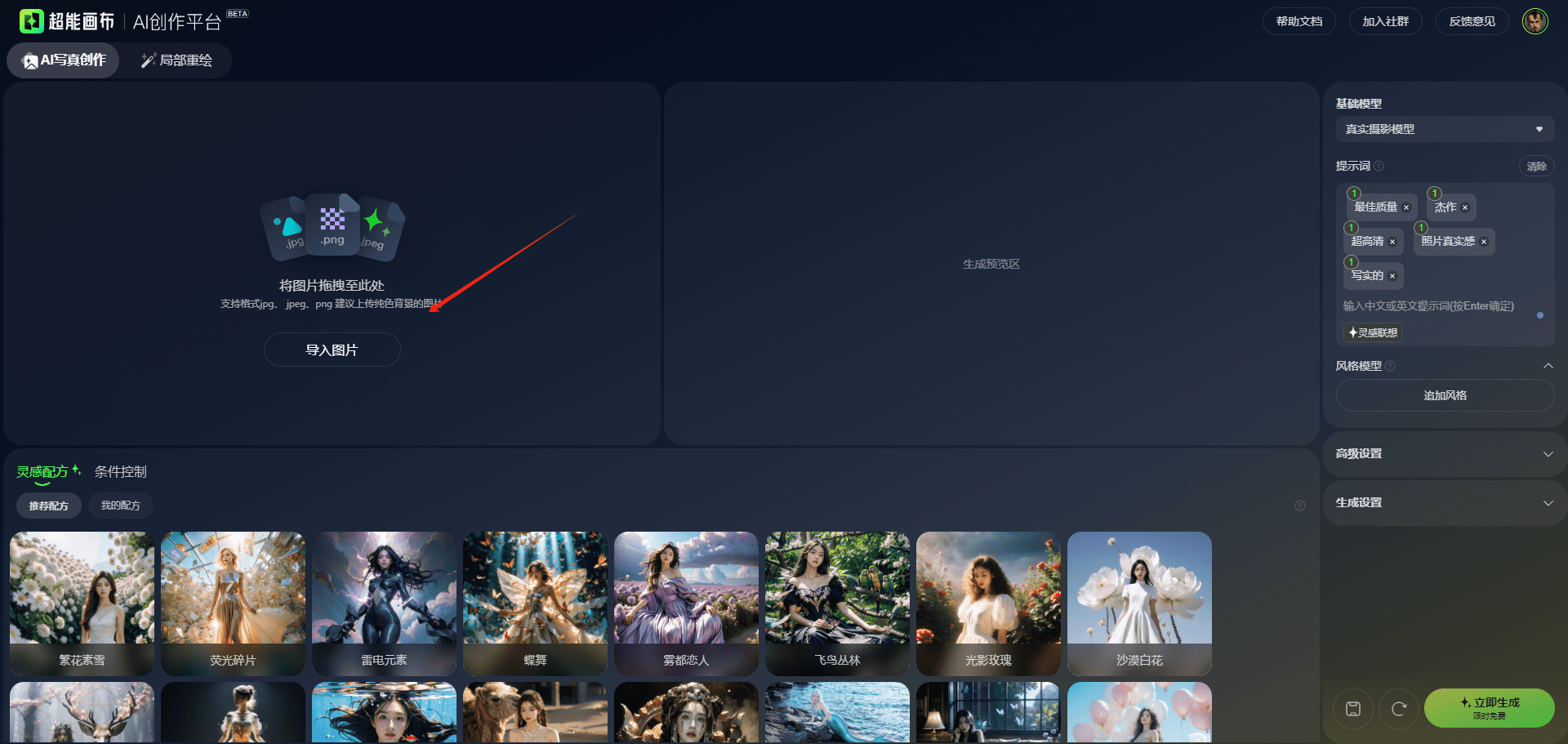

Open the page in a browser.

The server console shows normal output.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce without permission.
