EmotiVoice: Text-to-Speech Engine with Multi-Voice and Emotional Cueing Controls

General Introduction

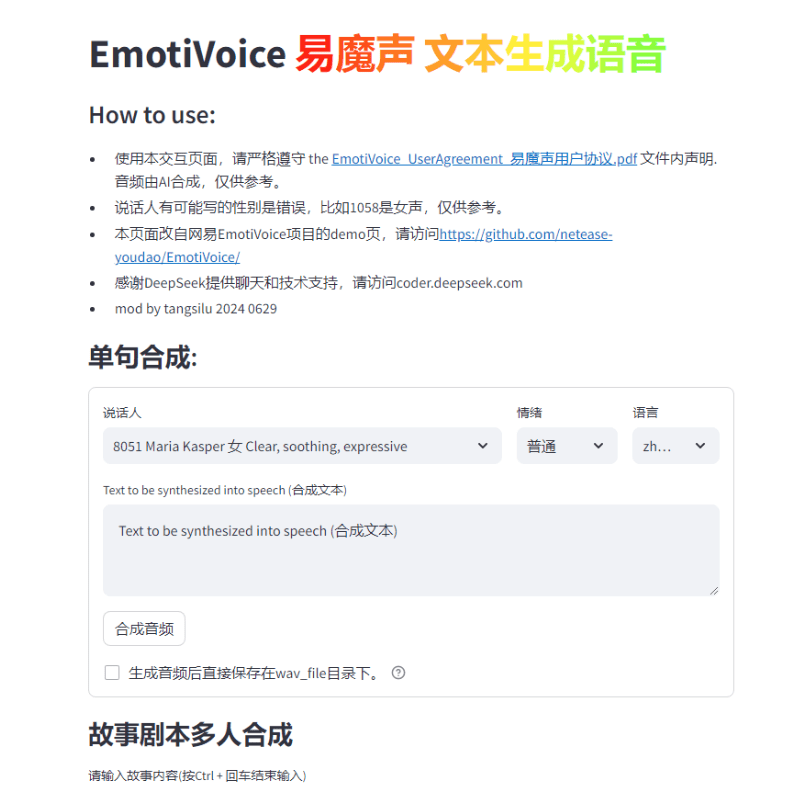

EmotiVoice is a text-to-speech (TTS) engine developed by NetEase Youdao that offers multiple voices and emotion-prompt control. This open-source engine supports English and Chinese, provides more than 2,000 different voices, and can synthesize speech in a range of emotions such as happy, excited, sad, and angry. It offers an easy-to-use web interface as well as a script interface for generating results in batches.

A demo is hosted on Replicate.

Feature List

Web interface and script interface for batch processing

Support for emotion synthesis

Multiple voice options

Support for Chinese and English synthesis

Usage and Help

Check out the GitHub repository for installation and usage instructions

Run a Docker image to try out EmotiVoice

Refer to the Wiki page to download additional resources such as pre-trained models

Join the WeChat group to share feedback

In response to community demand, we are happy to release the voice cloning feature, along with two example tutorials!

Caution:

- This feature requires at least one NVIDIA GPU.

- The target voice data is critical! Detailed requirements are given in the next section.

- Currently, this feature supports only Chinese and English: you can train with either Chinese or English data and obtain a model of that timbre that can speak both languages.

- Although EmotiVoice supports emotion control, your training data must also be emotional if you want the cloned voice to convey emotion.

- Training on your data alone alters EmotiVoice's original voices, meaning the new model is fully customized to your data. If you want to keep using EmotiVoice's 2,000+ original voices, use the original pre-trained model.

Detailed requirements for training data

- The audio must be high quality: clear, distortion-free recordings of a single speaker. There is currently no hard requirement on duration or number of sentences; a few sentences will work, but more than 100 sentences is recommended.

- The text for each audio clip must exactly match what is spoken. Before training, the raw text is converted to phonemes using G2P (grapheme-to-phoneme conversion). Pay close attention to the pauses (sp*) and to how polyphonic characters are converted, because both significantly affect training quality.

- If you want your voice to convey emotion, the training data must be emotional as well. In addition, modify the **'prompt' label** for each audio clip. The prompt can be any textual description of emotion, speech rate, and speaking style.

- The result is a data directory containing two subdirectories, `train` and `valid`. Each subdirectory has a `datalist.jsonl` file in which every line has the following format: `{"key": "LJ002-0020", "wav_path": "data/LJspeech/wavs/LJ002-0020.wav", "speaker": "LJ", "text": ["<sos/eos>", "[IH0]", "[N]", "engsp1", "[EY0]", "[T]", "[IY1]", "[N]", "engsp1", "[TH]", "[ER1]", "[T]", "[IY1]", "[N]", ".", "<sos/eos>"], "original_text": "In 1813", "prompt": "common"}`
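Before running `prepare_for_training.py`, it can help to check a data directory against the layout and line format described above. The following is a minimal Python sketch, not part of EmotiVoice: `validate_data_dir` and `check_entry` are hypothetical helper names, and treating tokens that end in `sp` plus digits (such as `engsp1`) as pause tokens is an assumption based on the example line. It also tallies those pause tokens so you can eyeball how G2P placed the pauses.

```python
import json
import re
from collections import Counter
from pathlib import Path

# Fields present in the example datalist.jsonl line above.
REQUIRED_KEYS = {"key", "wav_path", "speaker", "text", "original_text", "prompt"}
# Assumption: pause tokens end in "sp" plus digits, e.g. "engsp1".
PAUSE_RE = re.compile(r"sp\d+$")


def check_entry(entry):
    """Return a list of problems found in one parsed datalist entry."""
    if not isinstance(entry, dict):
        return ["not a JSON object"]
    problems = []
    missing = REQUIRED_KEYS - set(entry)
    if missing:
        problems.append(f"missing keys: {sorted(missing)}")
    text = entry.get("text")
    if not isinstance(text, list) or len(text) < 2:
        problems.append("'text' must be a list of phoneme tokens")
    elif text[0] != "<sos/eos>" or text[-1] != "<sos/eos>":
        problems.append("'text' should start and end with '<sos/eos>'")
    return problems


def validate_data_dir(data_dir):
    """Check <data_dir>/{train,valid}/datalist.jsonl and tally pause tokens."""
    report = {}
    for split in ("train", "valid"):
        path = Path(data_dir) / split / "datalist.jsonl"
        problems, pauses = [], Counter()
        report[split] = {"problems": problems, "pauses": pauses}
        if not path.is_file():
            problems.append(f"{path} not found")
            continue
        with path.open(encoding="utf-8") as f:
            for lineno, line in enumerate(f, start=1):
                if not line.strip():
                    continue  # ignore blank lines
                try:
                    entry = json.loads(line)
                except json.JSONDecodeError as exc:
                    problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                    continue
                problems.extend(f"line {lineno}: {p}" for p in check_entry(entry))
                tokens = entry.get("text") if isinstance(entry, dict) else None
                if isinstance(tokens, list):
                    # count pause-like tokens so their placement can be reviewed
                    pauses.update(t for t in tokens
                                  if isinstance(t, str) and PAUSE_RE.search(t))
    return report
```

For example, `validate_data_dir("<data directory>")` returns, for each split, the problems found and a count of pause tokens; an empty problem list for both splits suggests the format matches the example line.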
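If you relabel the prompts of emotional data, editing `datalist.jsonl` by hand gets tedious. This hypothetical helper (`set_prompts` is not an EmotiVoice function) copies a `datalist.jsonl` file and replaces the `prompt` field using a mapping from each entry's `key` to the prompt text you supply; the file names and prompt strings in the usage line are made-up examples.

```python
import json


def set_prompts(src_path, dst_path, prompts, default=None):
    """Copy a datalist.jsonl file, replacing each entry's 'prompt'.

    `prompts` maps an entry's 'key' to its new prompt text. Entries whose key
    is not in `prompts` get `default` when it is given; otherwise they are
    copied unchanged. Returns the number of entries whose prompt changed.
    """
    changed = 0
    with open(src_path, encoding="utf-8") as src, \
            open(dst_path, "w", encoding="utf-8") as dst:
        for line in src:
            if not line.strip():
                continue  # drop blank lines
            entry = json.loads(line)
            new_prompt = prompts.get(entry["key"], default)
            if new_prompt is not None and new_prompt != entry.get("prompt"):
                entry["prompt"] = new_prompt
                changed += 1
            # ensure_ascii=False keeps Chinese text readable in the output
            dst.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return changed
```

For instance, `set_prompts("train/datalist.jsonl", "train/datalist.relabeled.jsonl", {"LJ002-0020": "Happy, speaking quickly"})` writes a relabeled copy and leaves the original file untouched, so you can diff the two before training.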
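To check the audio side of these requirements, this sketch reads every `wav_path` in a `datalist.jsonl` file and reports missing files, the distinct (sample rate, channels) combinations, the total duration, and whether the clip count exceeds the recommended 100. It assumes uncompressed PCM WAV files (Python's standard `wave` module cannot read other encodings) and that each `wav_path` is relative to `base_dir`; `audit_wavs` is a hypothetical name. It cannot judge clarity or confirm that only one speaker is present, so you still need to listen to the clips.

```python
import json
import wave
from pathlib import Path

RECOMMENDED_MIN_CLIPS = 100  # the text recommends more than 100 sentences


def audit_wavs(datalist_path, base_dir="."):
    """Summarize the WAV files referenced by a datalist.jsonl file.

    Assumes uncompressed PCM WAV (the only kind the stdlib `wave` module
    reads) and that each wav_path is relative to base_dir.
    """
    clips, missing, formats, seconds = 0, [], set(), 0.0
    with open(datalist_path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue
            wav_path = Path(base_dir) / json.loads(line)["wav_path"]
            if not wav_path.is_file():
                missing.append(str(wav_path))
                continue
            with wave.open(str(wav_path), "rb") as w:
                # (sample rate, channels); mixed values hint at inconsistent recordings
                formats.add((w.getframerate(), w.getnchannels()))
                seconds += w.getnframes() / w.getframerate()
            clips += 1
    return {
        "clips": clips,
        "missing": missing,
        "formats": sorted(formats),
        "minutes": round(seconds / 60, 2),
        "meets_recommendation": clips > RECOMMENDED_MIN_CLIPS,
    }
```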

Step-by-step training

For Chinese, refer to the DataBaker Recipe; for English, refer to the LJSpeech Recipe. A summary follows:

- Prepare the training environment (this only needs to be done once):

```bash
# create conda environment
conda create -n EmotiVoice python=3.8 -y
conda activate EmotiVoice
# then run:
pip install EmotiVoice[train]
# or install from source:
git clone https://github.com/netease-youdao/EmotiVoice
cd EmotiVoice
pip install -e .[train]
```

- Prepare the data according to the detailed requirements for training data above. We recommend following the methods and scripts in the DataBaker Recipe and LJSpeech Recipe examples.

- Next, run the following command to create a directory for training:

```bash
python prepare_for_training.py --data_dir <data directory> --exp_dir <experiment directory>
```

Replace `<data directory>` with the actual path to the prepared data directory, and `<experiment directory>` with the path where you want the experiment directory to be created.

- Modify `<experiment directory>/config/config.py` as needed for your server configuration and data. After making the changes, start training with:

```bash
torchrun --nproc_per_node=1 --master_port 8018 train_am_vocoder_joint.py --config_folder <experiment directory>/config --load_pretrained_model True
```

This command starts training with the specified configuration folder and loads the pre-trained model. It currently works on Linux; Windows may run into problems!

- After some training steps, select a checkpoint and run the following command to check that the results are as expected:

```bash
python inference_am_vocoder_exp.py --config_folder exp/DataBaker/config --checkpoint g_00010000 --test_file data/inference/text
```

Don't forget to change the speaker field in `data/inference/text`. If you are satisfied with the result, feel free to use it! A modified demo page, `demo_page_databaker.py`, is provided so you can try out the voice cloned from DataBaker.

- If the results are not satisfactory, you can continue training or check your data and environment. Of course, you are also welcome to discuss it in the community or submit an issue!
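When choosing a checkpoint for `inference_am_vocoder_exp.py`, it helps to list what has been saved so far. This is a minimal sketch, assuming generator checkpoints are files named `g_` followed by a zero-padded step count, as in `g_00010000` above; the checkpoint directory location and the helper names `list_checkpoints` and `latest_checkpoint` are assumptions, not part of EmotiVoice.

```python
import re
from pathlib import Path

# Assumption: generator checkpoints are named "g_" + zero-padded step count,
# e.g. "g_00010000" as used in the inference command above.
CKPT_RE = re.compile(r"^g_(\d+)$")


def list_checkpoints(names):
    """Return (step, name) pairs for names like 'g_00010000', sorted by step."""
    found = []
    for name in names:
        match = CKPT_RE.match(name)
        if match:
            found.append((int(match.group(1)), name))
    return sorted(found)


def latest_checkpoint(ckpt_dir):
    """Name of the highest-step checkpoint in ckpt_dir, or None if none exist."""
    ckpts = list_checkpoints(p.name for p in Path(ckpt_dir).iterdir())
    return ckpts[-1][1] if ckpts else None
```

The most recent checkpoint is not necessarily the best-sounding one, so listing all of them with `list_checkpoints` makes it easy to compare a few different steps.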
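Changing the speaker field in `data/inference/text` can also be scripted. This sketch assumes each line is `|`-separated with the speaker ID as the first field (check your file before relying on this), and that the ID to use is the `speaker` value from your training `datalist.jsonl`, such as `LJ`. `set_speaker` and `rewrite_inference_file` are hypothetical helpers; the second one overwrites the file in place.

```python
def set_speaker(lines, speaker):
    """Replace the first '|'-separated field (assumed to be the speaker ID)
    of each non-empty line; all other fields are left untouched."""
    out = []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            out.append(line)  # keep blank lines as they are
            continue
        fields = line.split("|")
        fields[0] = speaker
        out.append("|".join(fields))
    return out


def rewrite_inference_file(path, speaker):
    """Rewrite the speaker field of every line in `path`, in place."""
    with open(path, encoding="utf-8") as f:
        lines = set_speaker(f, speaker)
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")
```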

Runtime reference information

We provide the following runtime information and hardware configuration information for your reference:

- Software versions: Python 3.8.18, torch 1.13.1, CUDA 11.7

- GPU models: NVIDIA GeForce RTX 3090, NVIDIA A40

- Training time: about 1-2 hours per 10,000 steps.
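To compare your own machine with the reference setup above, the sketch below collects the Python, torch, and CUDA versions plus the names of visible GPUs. It relies only on the standard `platform` module and public torch attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.get_device_name`), still runs if torch is not installed yet, and treats the listed versions as a point of comparison rather than a requirement. The function names are hypothetical.

```python
import platform

# Reference setup quoted above; a point of comparison, not a requirement.
REFERENCE = {"python": "3.8.18", "torch": "1.13.1", "cuda": "11.7"}


def environment_report():
    """Collect Python/torch/CUDA versions and the names of visible GPUs."""
    report = {"python": platform.python_version(), "torch": None,
              "cuda": None, "gpus": []}
    try:
        import torch
    except ImportError:
        return report  # torch is not installed yet
    report["torch"] = torch.__version__
    report["cuda"] = torch.version.cuda  # None for CPU-only torch builds
    if torch.cuda.is_available():
        report["gpus"] = [torch.cuda.get_device_name(i)
                          for i in range(torch.cuda.device_count())]
    return report


def compare_to_reference(report):
    """Yield one message per difference from the reference setup."""
    for key, expected in REFERENCE.items():
        actual = report.get(key)
        # prefix match so that e.g. "1.13.1+cu117" counts as 1.13.1
        if actual is None or not str(actual).startswith(expected):
            yield f"{key}: found {actual}, reference setup used {expected}"
    if not report.get("gpus"):
        yield "no CUDA GPU visible; voice-clone training needs an NVIDIA GPU"


if __name__ == "__main__":
    for message in compare_to_reference(environment_report()):
        print(message)
```

Different versions do not necessarily break training; the messages simply point out where your setup departs from the one the timings above were measured on.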

Training without a GPU, using only the CPU, is also on the way. Stay tuned for the good news!

One-click installation package download

Windows: Xunlei (Thunder) cloud drive

The zip extraction password is jian27 or jian27.com

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
