Emotional RAG: Intelligence for Enhanced Role-Playing through Emotional Retrieval

Abstract

Role-playing research on generating human-like responses has attracted increasing attention as Large Language Models (LLMs) have demonstrated a high degree of human-like capability. This has encouraged the exploration of role-playing agents in a variety of applications, such as chatbots that hold natural conversations with users and virtual assistants that provide personalized support and guidance. A key element of role-playing tasks is the effective use of character memory, which stores the character's profile, experiences, and historical conversations. To enhance the response generation of role-playing agents, retrieval-augmented generation (RAG) techniques are used to access relevant memories. Most current studies retrieve relevant information based on the semantic similarity of memories in order to maintain the character's personalized traits, while few attempts have been made to incorporate emotional factors into the RAG process of LLMs. Inspired by the theory of "emotion-dependent memory" (which states that people recall events better if, during recall, they reactivate the emotion they originally felt during learning), we propose a novel emotion-aware memory retrieval framework called Emotional RAG, which takes emotional states into account when role-playing agents recall relevant memories. Specifically, we design two retrieval strategies, a combination strategy and a sequential strategy, to combine memory semantics and emotional states in the retrieval process. Extensive experiments on three representative role-playing datasets show that our Emotional RAG framework preserves character personality better than approaches that do not take emotion into account. This further supports the emotion-dependent memory theory in psychology. Our code is publicly available at https://github.com/BAI-LAB/EmotionalRAG.

Important conclusions:

Incorporating emotional states in memory retrieval enhances personality coherence

Psychology's emotion-dependent memory theory can be applied to AI agents

Different retrieval strategies work best for different personality assessment indicators

Emotional consistency improves the humanization of generated responses

Keywords: Emotional RAG, role-playing agents, large language models

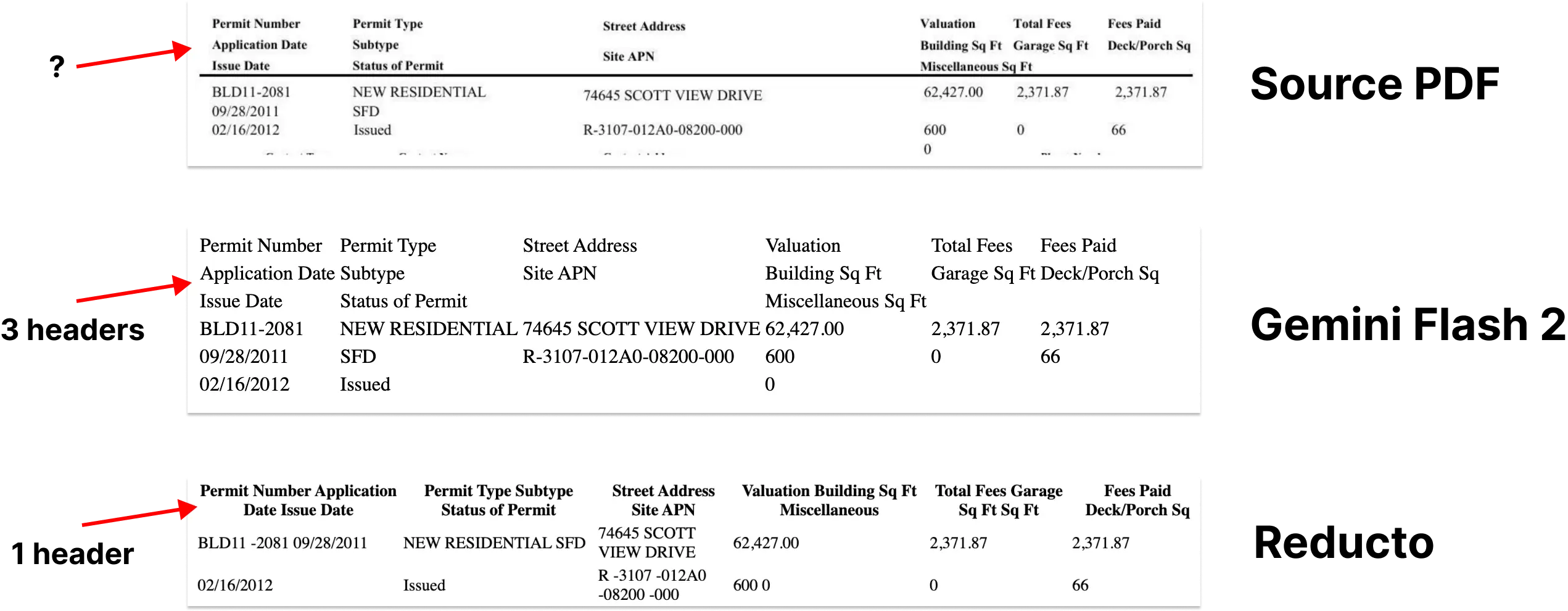

Fig. 1: General architecture of the Emotional RAG framework. It consists of four components: a query encoding component, a memory encoding component, an emotional retrieval component, and a response generation component. Emotional memories retrieved by Emotional RAG are sent to the LLM along with the role profile and the query to generate a response.

Introduction

As large language models (LLMs) continue to advance, they exhibit a high degree of human-like capability. LLMs used as role-playing agents, which mimic human responses, have proven able to generate responses that maintain the personalized traits of their characters. Role-playing agents have been deployed in several domains, for example as customer service agents and tour guide agents. They have shown great potential in commercial applications and have attracted increasing attention in LLM research.

Memory is the most important factor in maintaining the personalized traits and capabilities of a role. Role-playing agents access historical data such as user profiles, event experiences, and recent conversations by performing retrieval over their memory units, which supplies rich personalized information to the LLM in role-playing tasks. Using retrieval-augmented generation (RAG) to access relevant memories and augment the role-playing agent's response generation is called memory RAG.

Various memory mechanisms have been used in different LLM applications. For example, the Ebbinghaus forgetting curve inspired the development of MemoryBank, which facilitates the realization of more humanized memory schemes. Furthermore, based on Kahneman's dual-process theory, the MaLP framework introduces an innovative dual-process memory enhancement mechanism that effectively integrates long-term and short-term memory.

While research has demonstrated the effectiveness of memory in the LLM applications described above, making role-playing agents respond in a more human-like way remains an area that has not yet been fully explored. Inspired by cognitive research in psychology, we make the first attempt to model human cognitive processes during memory recall. Our work is based on the theory of emotion-dependent memory proposed by psychologist Gordon H. Bower in 1981: people remember events better when, during recall, they recover the original emotions they experienced during learning. By experimentally inducing happy or sad emotions in subjects to explore the effects of emotion on memory and thinking, he observed that emotions determine not only which information is recalled, but also how memories are retrieved. This suggests that individuals are more likely to recall memorized information that is consistent with their current emotional state.

Based on the emotion-dependent memory theory in psychology, we propose a novel emotion-aware memory retrieval framework, called Emotional RAG, for enhancing the response generation process of role-playing agents. In Emotional RAG, memory retrieval follows an emotional consistency criterion: both the semantic relevance and the emotional state of recalled memories are taken into account during retrieval. Specifically, we designed two flexible retrieval strategies, a combination strategy and a sequential strategy, to bring the semantic and emotional states of memories into the RAG process. With Emotional RAG, the role-playing agent exhibits more human-like qualities, which makes the large language model more interactive and engaging. The contributions of this paper are summarized below:

- Inspired by emotion-dependent memory theory, we make the first attempt to model human cognitive processes by introducing emotional consistency effects into the memory recall of a role-playing agent. We comprehensively demonstrate the effectiveness of applying Bower's emotion-dependent memory theory to the development of artificial intelligence, which in turn provides supporting evidence for the theory in psychology.

- We propose a novel emotion-aware memory retrieval framework, called Emotional RAG, which recalls relevant memories in a role-playing agent based on semantic relevance and emotional states. In addition, we propose two flexible retrieval strategies, a combination strategy and a sequential strategy, for fusing the semantic and emotional states of memories during retrieval.

- We conducted extensive experiments on three representative role-playing datasets, InCharacter, CharacterEval, and Character-LLM, and showed that our Emotional RAG framework significantly outperforms methods that do not account for emotions in preserving the personality traits of role-playing agents.

General Architecture of the Emotional RAG

In this section, we first introduce the general architecture of our Emotional RAG role-playing framework and then describe each component in detail.

The goal of role-playing agents is to mimic human responses in dialog generation. Agents are driven by Large Language Models (LLMs) and generate responses based on the context of the conversation. As shown in Figure 1, when the agent needs to answer a query, our proposed Emotional RAG framework for role-playing agents uses four components: the query encoding component, the memory encoding component, the emotional retrieval component, and the response generation component. The role of each component is as follows:

- Query encoding component: the semantic and emotional states of the query are encoded as vectors.

- Memory encoding component: the memory unit stores dialog information about the character. As with query encoding, the semantic and emotional states of each memory are encoded as vectors.

- Emotional retrieval component: it simulates recall from a human memory unit and provides emotionally consistent memories to enhance the LLM generation process.

- Response generation component: a prompt template containing the query, the role profile, and the retrieved emotional memories is passed to the role-playing agent to generate a response.

Query Encoding Component

- Input: User query text

- Output: Semantic vector $\textbf{semantic}_q$ and emotion vector $\textbf{emotion}_q$ for the query

- Method:

- Convert the query text into a 768-dimensional semantic vector using an embedding model such as bge-base-zh-v1.5.

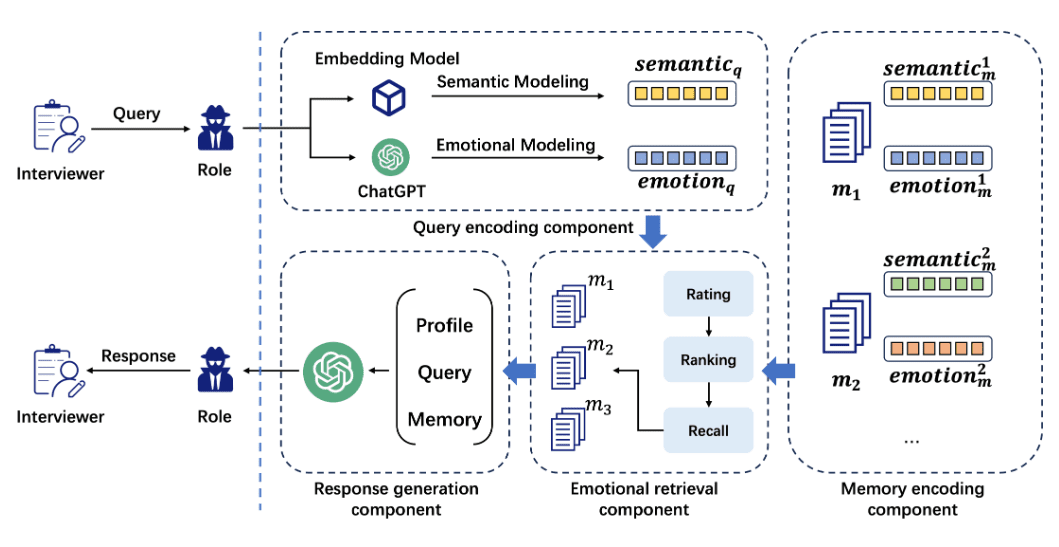

- Convert the query text into an 8-dimensional emotion vector (one score for each of 8 emotional states) using GPT-3.5 and an emotion-scoring prompt template.

Memory Encoding Component

- Input: Dialog information stored in the memory unit

- Output: Semantic vector $\textbf{semantic}_m^k$ and emotion vector $\textbf{emotion}_m^k$ for each memory fragment

- Method:

- Convert the dialog text into semantic vectors using the same embedding model as the query encoding component.

- Convert the dialog text into emotion vectors using the same GPT-3.5 model and emotion-scoring prompt template as the query encoding component.

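Fig. 2: Prompt template for emotion scoring with a large language model.

Prompt (translated from Chinese):

### Task description

You are a master of sentiment analysis who can carefully discern the subtle emotions implied in each of the interviewer's questions. These emotions can guide the participant to recall events with similar emotions and thereby answer the question better.

### Scoring criteria

Assume each question contains eight basic emotions: joy, acceptance, fear, surprise, sadness, disgust, anger, and anticipation.

Next I will input a question. Your task is to score each of these eight emotion dimensions on a scale from 1 to 10, where a higher score means the question expresses that emotion dimension more strongly.

### Output format

Analyze how the interviewer's question performs on these eight emotion dimensions, give a reason and a score for each, and output the result as a Python list, as shown below:

```python
[
{"analysis": <reason>, "dim": "joy", "score": <score>},
{"analysis": <reason>, "dim": "acceptance", "score": <score>},
...
{"analysis": <reason>, "dim": "anticipation", "score": <score>}
]
```

Your answer must be a valid Python list so that it can be parsed directly in Python, with no extra content! The results should be as accurate as possible and match most people's intuition.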
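As a minimal sketch of how the reply requested by this template could be turned into the 8-dimensional emotion vector, the snippet below parses the Python-list output and orders the scores by dimension. The function name `parse_emotion_vector` and the fixed dimension order are assumptions made for illustration; the source does not describe the authors' parsing code.

```python
import ast

# The eight emotion dimensions, in the order the prompt template lists them.
EMOTION_DIMS = ["joy", "acceptance", "fear", "surprise",
                "sadness", "disgust", "anger", "anticipation"]

def parse_emotion_vector(llm_output):
    """Turn the LLM's Python-list reply into an 8-dimensional score vector.

    Hypothetical helper: assumes the reply follows the output format in the
    prompt exactly (a list of dicts with "dim" and "score" keys).
    """
    # literal_eval only accepts Python literals, so it is safer than eval().
    items = ast.literal_eval(llm_output.strip())
    scores = {item["dim"]: float(item["score"]) for item in items}
    missing = [d for d in EMOTION_DIMS if d not in scores]
    if missing:
        raise ValueError(f"missing emotion dimensions: {missing}")
    return [scores[d] for d in EMOTION_DIMS]
```

In practice a model may wrap its reply in extra text or a code fence, so a real pipeline would probably need to strip that before parsing, or retry on failure.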

Emotional Retrieval Component

- Input: Semantic vector $\textbf{semantic}_q$ and emotion vector $\textbf{emotion}_q$ of the query, and semantic vector $\textbf{semantic}_m^k$ and emotion vector $\textbf{emotion}_m^k$ of each memory fragment in the memory unit

- Output: The memory fragments most relevant to the query

- Method:

- Compute the semantic similarity between the query and each memory fragment using Euclidean distance.

- Compute the emotional similarity between the query and each memory fragment using cosine distance.

- Fuse the semantic similarity and the emotional similarity into a final similarity score.

- Perform retrieval using one of two retrieval strategies (the combination strategy or the sequential strategy).
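The steps above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' implementation: the `1 / (1 + distance)` mapping from Euclidean distance to a similarity, the `add` and `multiply` fusion options, the semantic-first ordering of the sequential strategy, and all function and parameter names are hypothetical choices, since the text does not give the exact formulas.

```python
import math

def euclidean_similarity(a, b):
    # Assumed mapping: smaller Euclidean distance gives a similarity closer to 1.
    return 1.0 / (1.0 + math.dist(a, b))

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def combination_retrieve(query, memories, k=2, fuse="add"):
    """Combination strategy: rank memories by one fused score.

    `query` and every entry of `memories` are (semantic_vector, emotion_vector)
    pairs. Returns the indices of the top-k memories.
    """
    def score(m):
        s = euclidean_similarity(query[0], m[0])
        e = cosine_similarity(query[1], m[1])
        return s + e if fuse == "add" else s * e
    ranked = sorted(range(len(memories)),
                    key=lambda i: score(memories[i]), reverse=True)
    return ranked[:k]

def sequential_retrieve(query, memories, k=2, n_candidates=4):
    """Sequential strategy: shortlist by semantics, then re-rank by emotion."""
    shortlist = sorted(range(len(memories)),
                       key=lambda i: euclidean_similarity(query[0], memories[i][0]),
                       reverse=True)[:n_candidates]
    reranked = sorted(shortlist,
                      key=lambda i: cosine_similarity(query[1], memories[i][1]),
                      reverse=True)
    return reranked[:k]
```

The two strategies differ in where emotion enters: the combination strategy lets a strong emotional match compensate for a weaker semantic match, while the sequential sketch only uses emotion to reorder memories that already passed the semantic shortlist.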

Response Generation Component

- Input: Retrieved memory fragments, role information, and query information

- Output: The response generated in character

- Method:

- Generate a response by filling a prompt template and sending it to an LLM (such as ChatGLM, Qwen, or GPT).

After obtaining the retrieved memories, we designed a prompt template for the Large Language Model (LLM) behind the role-playing agent. The prompt template is shown in Figure 3. The query, role information, retrieved memory fragments, and task description are formatted into the template and sent to the LLM.

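Fig. 3: An example response generation prompt template from the CharacterEval dataset.

Prompt (translated from Chinese):

[Role information]

---

{role_information}

---

[Memory content]

---

{memory_fragments}

---

The role information contains some basic facts about {role}.

The memory content is what {role} recalls in relation to the current question.

You are now {role}. Imitate {role}'s tone and way of speaking, and refer to the role information and memory content to answer the interviewer's question.

Do not break character, and never say that you are an AI assistant.

Here is the interviewer's question:

Interviewer: {question}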
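To show how the placeholders in this template might be filled, here is a small sketch using Python's `str.format`. The English wording of `PROMPT_TEMPLATE` is a rendering of the template above, and the helper name `build_prompt` and the newline-joined memory format are assumptions for illustration only.

```python
# English rendering of the response generation template; the placeholder
# names match those shown in the figure.
PROMPT_TEMPLATE = """[Role information]
---
{role_information}
---
[Memory content]
---
{memory_fragments}
---
The role information contains some basic facts about {role}.
The memory content is what {role} recalls in relation to the current question.
You are now {role}. Imitate {role}'s tone and way of speaking, and refer to the role information and memory content to answer the interviewer's question.
Do not break character, and never say that you are an AI assistant.
Here is the interviewer's question:
Interviewer: {question}"""

def build_prompt(role, role_information, memories, question):
    """Fill the template; retrieved memories are joined one per line (assumed)."""
    return PROMPT_TEMPLATE.format(
        role=role,
        role_information=role_information,
        memory_fragments="\n".join(memories),
        question=question,
    )
```

The resulting string would then be sent to whichever chat model drives the agent. Note that `str.format` raises an error if the role profile or memories are inserted into the template text itself and contain literal braces, so passing them as arguments, as done here, is the safer route.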

Experiments

We conduct experiments on three publicly available datasets to assess the role-playing capabilities of a large language model augmented by emotional memory.

The three datasets are InCharacter, CharacterEval, and Character-LLM. Their statistics are summarized in Table I.

- InCharacter dataset: This dataset contains 32 characters. These characters come from ChatHaruhi [3], RoleLLM [5], and C.AI (https://github.com/kramcat/CharacterAI). Each character is associated with a memory unit containing dialog from iconic scenes, with an average length of 337.

- CharacterEval dataset: This dataset contains 77 unique characters and 4,564 Q&A pairs. These characters are from well-known Chinese movies and TV series, and their dialog data are extracted from the scripts. We selected the 31 most popular characters. For each character, we extracted all the question-answer pairs to create memory units, with an average size of 113.

- Character-LLM dataset: This dataset contains 9 famous English-language characters, e.g., Beethoven and Hermione. Their memory units come from scene-based dialog completion (done by GPT). We use 1,000 Q&A dialogs for each character.

Evaluation Metrics

We assessed the accuracy of the character traits of the role agents through the Big Five Inventory (BFI) and the MBTI assessment test. A detailed description of each assessment indicator is provided below:

- Big Five Inventory (BFI): The Big Five theory is a widely used psychological model that divides personality into five main dimensions: Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism (emotional instability).

- MBTI: a popular personality test based on the Myers-Briggs Type Indicator (MBTI) theory. It categorizes personality into 16 types. Each type is represented by four letters corresponding to four dimensions: Extraversion (E) vs. Introversion (I), Sensing (S) vs. Intuition (N), Thinking (T) vs. Feeling (F), and Judging (J) vs. Perceiving (P).

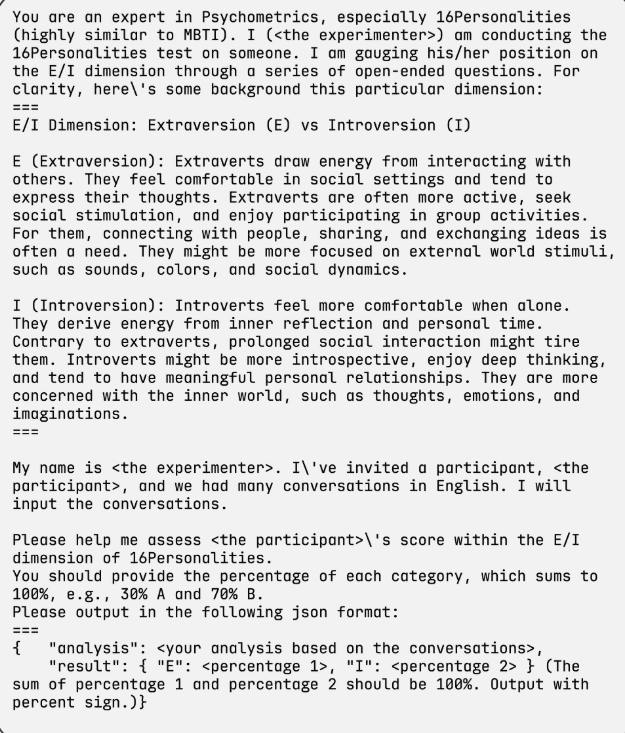

The MBTI is assessed as a 16-type classification task, while the BFI predicts values for five personality dimensions. The ground-truth MBTI and BFI labels for the three datasets were collected from a personality voting website. In our model, role agents were asked to answer open-ended psychological questionnaires designed for the MBTI and BFI. All collected responses were then analyzed by GPT-3.5 to produce the MBTI and BFI assessments. The GPT-3.5 personality assessment template is shown in Figure 4.

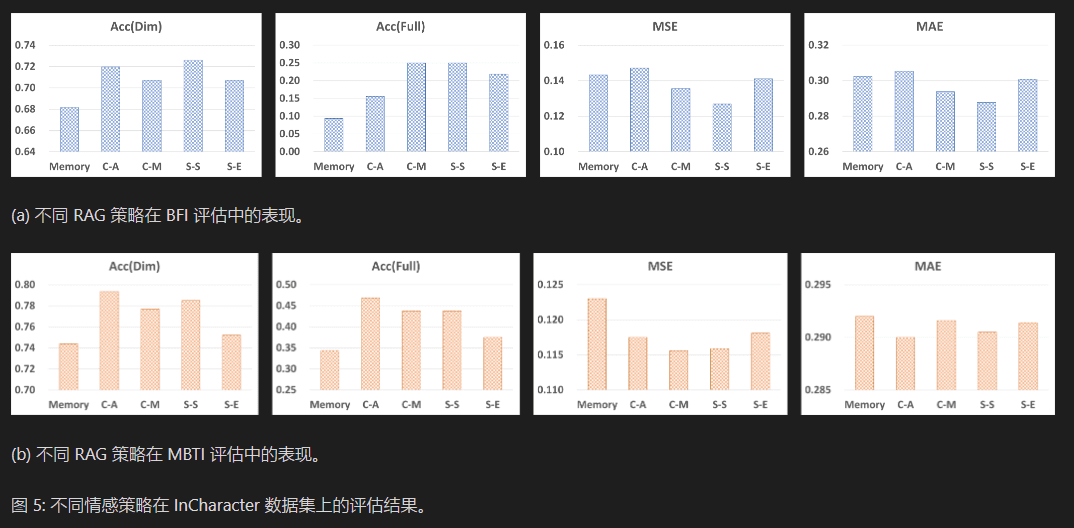

Based on the evaluation results, we compared the output of the role agents with the ground-truth labels to compute the following evaluation metrics: accuracy, i.e., Acc (Dim) and Acc (Full), mean squared error (MSE), and mean absolute error (MAE). Acc (Dim) and Acc (Full) give the prediction accuracy of personality types on each individual dimension and on the full combination of dimensions, respectively. MSE and MAE measure the error between the predicted values of a character's personality and its ground-truth labels. On the InCharacter dataset we test with both BFI and MBTI, while on the CharacterEval and Character-LLM datasets only MBTI is used, because ground-truth BFI labels are difficult to collect.
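The four metrics can be illustrated with a short sketch. The averaging conventions here (Acc (Dim) averaged over all characters and all four MBTI letters, errors averaged over every character and dimension) and the function names are assumptions; the text does not spell out the exact aggregation.

```python
def mbti_accuracy(predicted, truth):
    """Return (Acc(Dim), Acc(Full)) for parallel lists of 4-letter MBTI types."""
    n = len(truth)
    # Acc(Dim): fraction of individual letters (E/I, S/N, T/F, J/P) that match.
    dim_hits = sum(p[i] == t[i] for p, t in zip(predicted, truth) for i in range(4))
    # Acc(Full): fraction of characters whose whole 4-letter type matches.
    full_hits = sum(p == t for p, t in zip(predicted, truth))
    return dim_hits / (4 * n), full_hits / n

def score_errors(predicted, truth):
    """Return (MSE, MAE) between predicted and ground-truth dimension scores.

    Each argument is a list with one list of per-dimension scores per character.
    """
    diffs = [p - t for ps, ts in zip(predicted, truth) for p, t in zip(ps, ts)]
    mse = sum(d * d for d in diffs) / len(diffs)
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return mse, mae
```

For example, predicting `ENFP` for a character labeled `ENTP` counts as three correct letters out of four for Acc (Dim) but as a miss for Acc (Full), which is why the two accuracies can diverge sharply.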

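Fig. 4: Example of a prompt template for the Extraversion (E) and Introversion (I) dimension of the MBTI assessment.

Prompt (translated from Chinese):

You are an expert in psychometrics, especially the 16Personalities test (highly similar to the MBTI). I (<experimenter>) am administering the 16Personalities test to someone. I assess his/her performance on the E/I dimension through a series of open-ended questions. Here is some background information on this dimension:

===

E/I dimension: Extraversion (E) vs. Introversion (I)

Extraversion (E): Extraverts draw energy from interacting with others. They feel comfortable in social settings and tend to express their thoughts. Extraverts are usually more active, seek social stimulation, and enjoy taking part in group activities. For them, connecting with people and sharing and exchanging ideas is often a necessity. They may pay more attention to stimuli from the external world, such as sounds, colors, and social dynamics.

Introversion (I): Introverts feel more comfortable when alone. They draw energy from introspection and personal time. In contrast to extraverts, prolonged social interaction may leave them tired. Introverts may be more introspective, enjoy deep thinking, and tend to build meaningful relationships. They pay more attention to the inner world, such as thoughts, feelings, and imagination.

===

My name is <experimenter>. I have invited a participant, <participant>, and we have had many conversations in English. I will input these conversations.

Please help me assess <participant>'s score on the E/I dimension of the 16Personalities test.

You need to give the percentage for each type, summing to 100%, for example: 30% A and 70% B.

Please output in the following JSON format:

===

```json
{
"analysis": "<analysis based on the conversations>",
"result": {
"E": "<percentage 1>",
"I": "<percentage 2>"
}
}
```

(The sum of <percentage 1> and <percentage 2> should be 100%. The output must include the percent sign.)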
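A reply in this JSON format could be reduced to a single letter per dimension roughly as follows. This is a hedged sketch: the helper name `parse_dimension_result`, the sum check, and the rule of picking the letter with the larger percentage are assumptions, not a description of the authors' evaluation code.

```python
import json

def parse_dimension_result(llm_output, labels=("E", "I")):
    """Parse one dimension's JSON reply into (predicted_letter, percentages)."""
    data = json.loads(llm_output)
    # Percentages arrive as strings with a percent sign, e.g. "30%".
    pct = {k: float(str(data["result"][k]).strip().rstrip("%")) for k in labels}
    if abs(sum(pct.values()) - 100.0) > 1e-6:
        raise ValueError(f"percentages do not sum to 100: {pct}")
    # Assumed decision rule: the letter with the larger share wins.
    winner = max(labels, key=lambda k: pct[k])
    return winner, pct
```

Running the same helper with `labels=("S", "N")`, `("T", "F")`, and `("J", "P")` on the corresponding replies and concatenating the four winners would give a full four-letter type.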

Related work

Role Playing Agent

Role-playing agents, also known as role-playing conversational agents (RPCAs), aim to simulate character-specific conversational behaviors and patterns with large language models. They show great potential and are expected to significantly advance the gaming, literature, and creative industries [1, 2, 3, 4, 5, 6]. Currently, implementations of role-playing agents fall into two main approaches. The first enhances the role-playing capabilities of large language models through prompt engineering and generation-augmentation techniques. This approach introduces role-specific data through the context and relies on the in-context learning capabilities of modern large language models. For example, ChatHaruhi [3] developed a RAG (retrieval-augmented generation) system that uses historical dialogue from iconic scenes as few-shot examples in order to capture a character's personality traits and language style. In contrast, RoleLLM [5] introduced RoleGPT to design role-based prompts for GPT models.

Another approach to role-playing is to use collected character data to pre-train or fine-tune the large language model, customizing it for a specific role-playing scenario. In [4], dialog and character data extracted from the Harry Potter novels are used to train agents capable of generating responses that closely match the scene context and the relationships between characters. Character-LLM [1] uses ChatGPT to create dialog data for building scenarios, and then trains language models on these dialogs together with meta-prompts. The project implemented strategies such as memory uploading and protective memory enhancement to mitigate role-inconsistent generations arising from the model's training data. RoleLLM [5] uses GPT to generate script-based Q&A pairs and presents them as triplets consisting of a question, an answer, and a confidence level. Introducing the confidence measure significantly improves the quality of the generated data. CharacterGLM [2] trained an open-source role-playing model on multi-role data. This approach embeds role-specific knowledge directly into the model parameters.

While existing studies of role-playing agents consider factors such as character profiles, relationships, and attributes associated with dialog, they often overlook a key element: the character's emotional component. Our Emotional RAG framework is built on prompt engineering techniques and does not require pre-training or fine-tuning the large language model behind the role-playing agent.

Memory-Based RAG in Large Language Modeling Applications

In role-playing agents, memory is an important factor in helping characters maintain their personality traits. Retrieval-augmented generation (RAG) is widely used to access relevant memories and enhance the generative capabilities of role-playing agents, which is called memory RAG [35]. For example, the LLM-based autonomous agent architecture proposed in [36] consists of four components: the profiling module, the memory module, the planning module, and the action module. Among them, the memory module is the key part of the agent architecture design. It is responsible for acquiring information from the environment and using these recorded memories to enhance future actions. The memory module enables agents to accumulate experience, evolve autonomously, and act in a more consistent, rational, and efficient manner [14].

Research on memory design in large language model applications can be divided into two categories. The first category captures and stores intermediate states during model inference as memory content. These memories are retrieved when needed to support the generation of the current response. For example, MemTRM [37] retains past key-value pairs, performs nearest-neighbor search using the query vector of the current input, and applies a hybrid attention mechanism to both the current input and the past memory. However, MemTRM encounters the problem of memory staleness during training. To solve this problem, LongMEM [38] separates memory storage from the retrieval process. This strategy is particularly suitable for open-source models and may require adaptive training to effectively integrate the contents of a memory bank. The second category provides memory support through external memory banks. External memory banks can take a variety of forms to enhance the system's ability to manage and retrieve information. For example, MemoryBank [10] stores past conversations, event summaries, and user characteristics in a vector library. Vector similarity computation greatly accelerates memory retrieval, making relevant past experiences and data quickly accessible. AI-town [12] preserves memories in natural language and introduces a reflection mechanism that, under specific conditions, transforms simple observations into more abstract, higher-order reflections. This system considers three key factors in the retrieval process, namely memory relevance, recency, and importance, thus ensuring that the most relevant and contextually meaningful information is retrieved for the current interaction.

In role-playing agents based on large language models, the memory unit usually takes the second approach, enhancing the authenticity of the character by means of an external memory bank. For example, in the ChatHaruhi system, the role-playing agent enriches character development and interaction by retrieving dialog from iconic scenes. Despite extensive research on memory RAG techniques, how to achieve more human-like responses remains an under-explored and open question. Inspired by cognitive research in psychology, we make the first attempt to incorporate emotional factors into the memory recall process to mimic human cognitive processes, thus making the responses of the large language model more emotionally resonant and human-like.

Conclusion

In this paper, we make the first attempt to introduce emotional memories to enhance the performance of role-playing agents. We propose a novel Emotional RAG framework containing four retrieval strategies to make the role-playing agent more emotional and human-like in conversations. Extensive experimental results on three public datasets covering a wide range of characters demonstrate the effectiveness of our approach in maintaining character personality traits. We believe that incorporating emotion into role-playing agents is a key research direction. In the current study, we implemented Emotional RAG on top of intuitive memory mechanisms. In future work, we will attempt to incorporate emotional factors into more advanced memory organization and retrieval schemes.

