dsRAG: A Retrieval Engine for Unstructured Data and Complex Queries

General Introduction

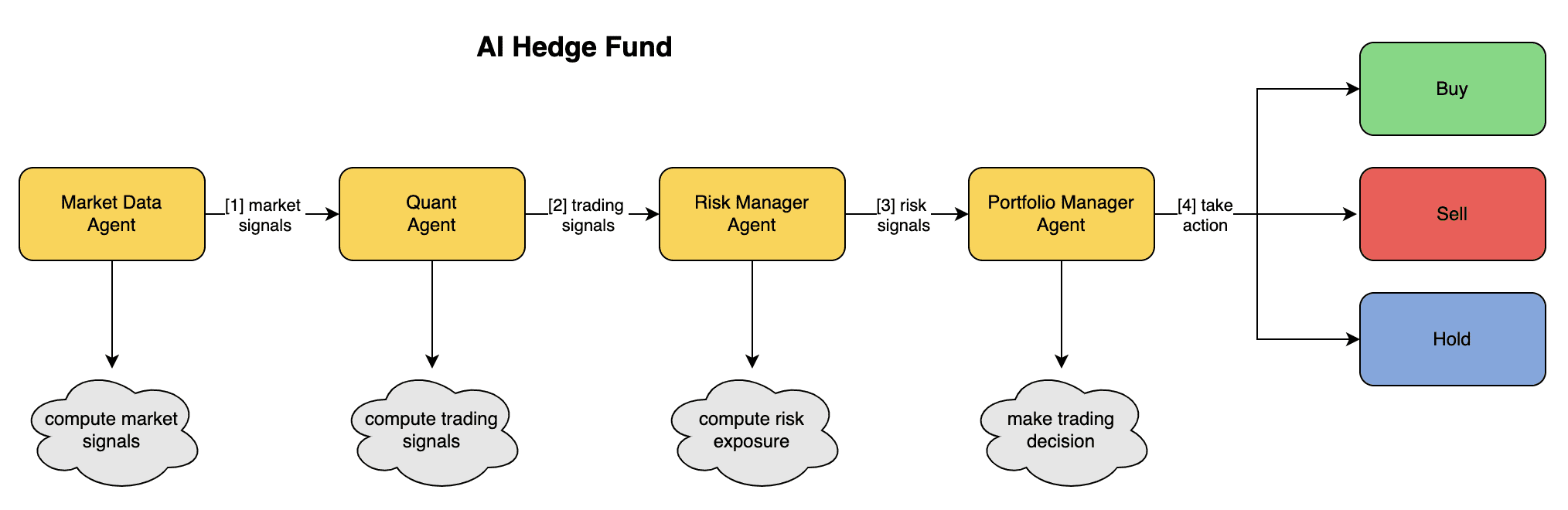

dsRAG is a high-performance retrieval engine designed to handle complex queries over unstructured data. It performs particularly well on challenging queries over dense text such as financial reports, legal documents, and academic papers. dsRAG uses three key methods to improve performance: semantic segmentation, automatic context generation, and relevant segment extraction. These methods let dsRAG achieve significantly higher accuracy than a traditional RAG baseline on complex open-book question answering tasks. In addition, dsRAG supports a wide range of configuration options that can be customized to user needs. Its modular design lets users swap in different components, such as vector databases, embedding models, and rerankers, to get the best retrieval results.

On the FinanceBench benchmark, for example, dsRAG achieves 96.6% accuracy, compared with 32% for a traditional RAG (Retrieval-Augmented Generation) baseline. The improvement comes from its three key methods: semantic segmentation, automatic context generation, and relevant segment extraction.

Feature List

- Semantic segmentation: Uses an LLM to segment documents, improving retrieval accuracy.

- Automatic context generation: Generates chunk headers containing document-level and paragraph-level context to improve embedding quality.

- Relevant segment extraction: Intelligently combines related chunks of text at query time into longer segments.

- Multiple vector database support: e.g., BasicVectorDB, WeaviateVectorDB, ChromaDB.

- Multiple embedding model support: e.g., OpenAIEmbedding, CohereEmbedding.

- Multiple reranker support: e.g., CohereReranker, VoyageReranker.

- Persistent knowledge base: Knowledge base objects can be persisted to disk for later loading and querying.

- Multiple document format support: Parses and processes PDF, Markdown, and other document formats.
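To make the automatic context generation idea more concrete, here is a minimal sketch of prepending a context header to a chunk before it is embedded. This is not dsRAG's implementation: the `Chunk` type, the `build_chunk_header` and `text_to_embed` helpers, and the header wording are all hypothetical, and the titles are passed in by hand here rather than generated by an LLM.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """A piece of document text plus the section it came from (hypothetical type)."""
    text: str
    section_title: str


def build_chunk_header(doc_title: str, section_title: str) -> str:
    """Build a short header carrying document- and section-level context."""
    return f"Document: {doc_title}\nSection: {section_title}"


def text_to_embed(doc_title: str, chunk: Chunk) -> str:
    """Return the string that would be sent to the embedding model."""
    header = build_chunk_header(doc_title, chunk.section_title)
    return f"{header}\n\n{chunk.text}"


# Illustrative values only.
chunk = Chunk(text="Operating costs fell during the period.", section_title="Results of Operations")
print(text_to_embed("Example Corp Annual Report", chunk))
```

The point of the header is that a chunk which is ambiguous on its own ("costs fell during the period") is embedded together with the context that says which document and section it belongs to.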

Usage Guide

Installation

To install the Python package for dsRAG, you can run the following command:

pip install dsrag

Make sure you have the API keys for OpenAI and Cohere and set them as environment variables.
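A quick way to confirm the keys are visible to Python before building a knowledge base is sketched below. The variable names `OPENAI_API_KEY` and `CO_API_KEY` are assumptions based on the usual conventions of the OpenAI and Cohere SDKs, and `missing_keys` is a hypothetical helper; check the dsRAG documentation for the names it actually reads.

```python
import os

# Assumed variable names; confirm them against the dsRAG and provider docs.
REQUIRED_KEYS = ("OPENAI_API_KEY", "CO_API_KEY")


def missing_keys(env=None, required=REQUIRED_KEYS):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


missing = missing_keys()
if missing:
    print("Missing API keys:", ", ".join(missing))
else:
    print("All API keys are set.")
```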

Quick Start

You can use the `create_kb_from_file` function to create a new knowledge base directly from a file:

from dsrag.create_kb import create_kb_from_file

file_path = "dsRAG/tests/data/levels_of_agi.pdf"
kb_id = "levels_of_agi"
kb = create_kb_from_file(kb_id, file_path)

Knowledge base objects are automatically persisted to disk, so they do not need to be saved explicitly.

Now you can load the knowledge base by its `kb_id` and use the `query` method to query it:

from dsrag.knowledge_base import KnowledgeBase

# Load the persisted knowledge base by its ID
kb = KnowledgeBase("levels_of_agi")

search_queries = ["What are the levels of AGI?", "What is the highest level of AGI?"]
results = kb.query(search_queries)

# Print each returned segment
for segment in results:
    print(segment)
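If the segments are going to be passed to an LLM, they usually need to be joined into one context string. The sketch below shows one way to do that. The helper `build_context` is hypothetical, and the `text` key is an assumption about the result format rather than something this article confirms, so the helper falls back to the plain string form of each segment when the key is absent.

```python
def build_context(segments, text_key="text", separator="\n\n---\n\n"):
    """Join the text of each segment into a single context string.

    `text_key` is an assumption about the result format; adjust it to
    whatever field your dsRAG version uses for the segment text.
    """
    parts = []
    for segment in segments:
        # Fall back to the string form if the segment is not a dict
        # or does not carry the expected key.
        if isinstance(segment, dict) and text_key in segment:
            parts.append(str(segment[text_key]))
        else:
            parts.append(str(segment))
    return separator.join(parts)


# Stand-in data shaped like the assumed result format.
example_results = [
    {"doc_id": "levels_of_agi", "text": "First segment."},
    {"doc_id": "levels_of_agi", "text": "Second segment."},
]
print(build_context(example_results))
```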

Basic customization

You can customize the configuration of the knowledge base, for example by using only OpenAI:

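from dsrag.knowledge_base import KnowledgeBase
from dsrag.llm import OpenAIChatAPI
from dsrag.reranker import NoReranker

# Use an OpenAI model for automatic context generation and skip reranking
llm = OpenAIChatAPI(model='gpt-4o-mini')
reranker = NoReranker()

kb = KnowledgeBase(kb_id="levels_of_agi", reranker=reranker, auto_context_model=llm)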

Then use the `add_document` method to add a document:

file_path = "dsRAG/tests/data/levels_of_agi.pdf"
kb.add_document(doc_id=file_path, file_path=file_path)
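The same call can be repeated to load a whole folder. The following is a minimal sketch: `add_directory` is a hypothetical helper, not part of dsRAG, and it relies only on the `add_document(doc_id=..., file_path=...)` call shown above. The default extensions are an assumption; adjust them to the formats your installation can parse.

```python
from pathlib import Path


def add_directory(kb, directory, extensions=(".pdf", ".md")):
    """Add every matching file under `directory` to the knowledge base.

    `kb` is any object with an `add_document(doc_id=..., file_path=...)`
    method, such as a dsRAG KnowledgeBase.
    """
    added = []
    for path in sorted(Path(directory).rglob("*")):
        if path.is_file() and path.suffix.lower() in extensions:
            # Use the path itself as the document ID.
            kb.add_document(doc_id=str(path), file_path=str(path))
            added.append(str(path))
    return added
```

Calling `add_directory(kb, "my_docs")` returns the list of paths that were added, which is handy for logging or for retrying files that fail.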

Architecture

The knowledge base object accepts documents (as raw text) and performs chunking, embedding, and other preprocessing on them. When a query is submitted, the system runs a vector database search, reranking, and relevant segment extraction, and then returns the results.
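The relevant segment extraction step can be illustrated with a small sketch. This is an assumption about how such a step might work, not dsRAG's actual algorithm: each chunk gets a value equal to its relevance score minus a fixed penalty, and the contiguous run of chunks with the highest total value is returned. The function name `best_segment` and the `penalty` and `max_length` parameters are hypothetical.

```python
def best_segment(relevance, penalty=0.2, max_length=None):
    """Find the contiguous run of chunks with the highest total value.

    Each chunk's value is its relevance score minus `penalty`, so
    irrelevant chunks count against a segment. Returns (start, end, value)
    with `end` exclusive, or None if no segment has positive value.
    """
    values = [score - penalty for score in relevance]
    best = None
    for start in range(len(values)):
        total = 0.0
        stop = len(values) if max_length is None else min(len(values), start + max_length)
        for end in range(start, stop):
            total += values[end]
            if total > 0 and (best is None or total > best[2]):
                best = (start, end + 1, total)
    return best


# Hypothetical per-chunk relevance scores for one document, in document order.
scores = [0.1, 0.8, 0.3, 0.9, 0.05, 0.0]
start, end, value = best_segment(scores)
print(f"chunks {start}..{end - 1}, value {value:.2f}")
```

Note that a moderately relevant chunk sitting between two highly relevant ones is kept in the segment, which is what lets this kind of approach return longer, more coherent passages than top-k chunk retrieval alone.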

Knowledge base objects are persistent by default and their full configuration is saved as a JSON file for easy rebuilding and updating.
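As a rough illustration of what persisting a configuration as JSON involves, the sketch below writes a configuration dictionary to disk and reads it back. The helpers `save_config` and `load_config`, the file name, and the configuration keys are all hypothetical and do not reflect dsRAG's real schema or storage location.

```python
import json
import tempfile
from pathlib import Path


def save_config(config: dict, path: Path) -> None:
    """Write the configuration as pretty-printed JSON."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


def load_config(path: Path) -> dict:
    """Read a configuration previously written by save_config."""
    return json.loads(path.read_text(encoding="utf-8"))


# Hypothetical configuration; these keys are illustrative, not dsRAG's schema.
config = {
    "kb_id": "levels_of_agi",
    "reranker": {"class": "NoReranker"},
    "auto_context_model": {"class": "OpenAIChatAPI", "model": "gpt-4o-mini"},
}

with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "levels_of_agi.json"
    save_config(config, config_path)
    restored = load_config(config_path)
    print(restored == config)
```

Storing component class names alongside their parameters is what makes it possible to rebuild the same knowledge base object later without repeating the setup code.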

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
