Deploying a personal chat tool for "small" models on low-cost computers

Why deploy a private chat tool for "small" models?

Many people have used ChatGPT, Zhipu, Doubao, Claude, and other excellent large language models, and those with heavier needs also buy third-party paid services; after all, their performance is outstanding. For example, my main work is writing articles, so I choose Claude.

Although I love using Claude, do I really use it heavily every day? Of course not!

Barriers such as usage limits, price, and network issues naturally make me use it less whenever it isn't essential. If a tool can't be "picked up and used" in any environment, it isn't doing its job.

In this situation, a "small" model may be the better choice. Why?

"Small" model characteristics

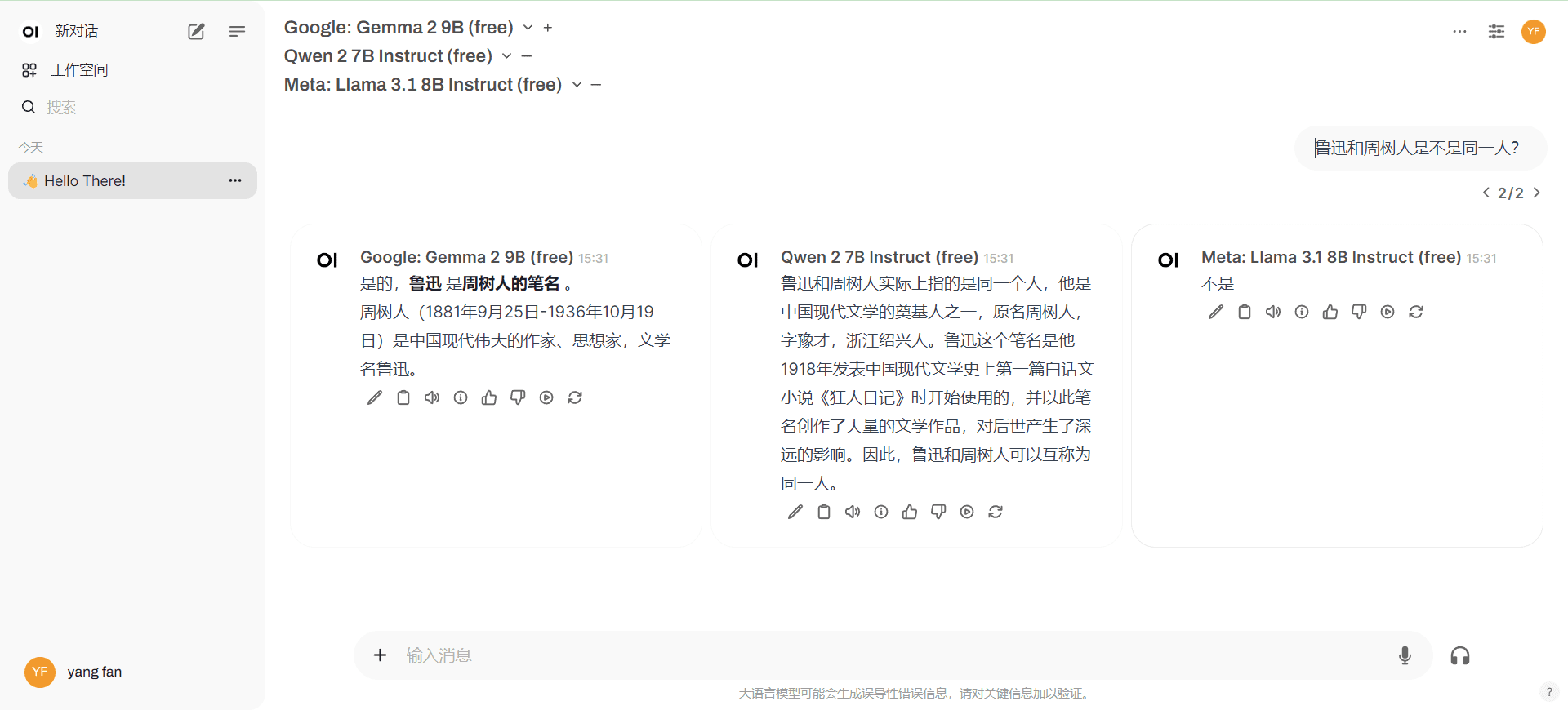

Gemma2, llama3.1:8b, and qwen2:7b are small enough for daily use. They offer long context windows for input and output (llama3.1:8b, for example, supports up to 128k tokens), follow most instructions, express themselves well in Chinese, and answer questions well overall. There is also no restriction like Wenxin Yiyan (ERNIE Bot)'s 2,000-character input limit. They are sufficient for daily use; for special tasks, a specialized model can be considered. Small models have the following advantages:

- Context windows no smaller than (sometimes even larger than) those of large models

- Decent output quality for everyday writing tasks

- Unlimited use

- Multiple small models can answer at the same time, making comparison easy

- Faster responses
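Comparing several small models at once can be sketched with a thread pool. This is a minimal illustration, not how any particular chat UI implements it: `ask` is a hypothetical function you would replace with a real API call, and the model names are simply the ones mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def compare_models(ask: Callable[[str, str], str], models: List[str], prompt: str) -> Dict[str, str]:
    """Send one prompt to several models in parallel; return {model: answer}."""
    if not models:
        return {}
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        # One job per model; each job calls ask(model, prompt).
        futures = {model: pool.submit(ask, model, prompt) for model in models}
        # result() waits for that model's answer (and re-raises its error, if any).
        return {model: future.result() for model, future in futures.items()}


def fake_ask(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real API call, so the sketch runs offline."""
    return f"{model}: {prompt.upper()}"


answers = compare_models(fake_ask, ["gemma2", "llama3.1:8b", "qwen2:7b"], "hello")
for name, text in answers.items():
    print(name, "->", text)
```

Threads fit here because each call spends almost all its time waiting on the network, so the total wait is roughly that of the slowest model rather than the sum.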

What is private deployment?

A private chat web interface that is easy to customize and gives free access to "small" models.

The classic solution is to deploy Ollama + Open WebUI locally: the former runs the small models on your computer, and the latter hosts the chat interface. To use it from the external network anytime, anywhere, you can map the address to the internet with Cloudflare or cpolar (search for a tutorial yourself).
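To make the division of labor concrete: Ollama serves the models through a local HTTP API, and a front end such as Open WebUI sends requests to it. A minimal sketch of such a request, assuming Ollama's default port (11434) and its `/api/chat` endpoint; the request is only built here, not sent, and `build_ollama_request` is a hypothetical helper.

```python
import json
import urllib.request

# Ollama listens on port 11434 by default; /api/chat is its chat endpoint.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for a local Ollama model."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON reply instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_ollama_request("llama3.1:8b", "Summarize this paragraph in one sentence.")
# With Ollama running and the model pulled (`ollama pull llama3.1:8b`), send it:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["message"]["content"])
```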

Advantages

- Chat data stays local and private

- Flexibility to customize local models

Disadvantages

- Hard to keep running continuously (you have to shut your computer down sometimes, right?) and hard to publish to the external network

- High hardware requirements

Problems to solve

These shortcomings are exactly what we want to address:

1. The AI chat interface must be published to the external network with a stable URL so it can be used anytime, anywhere.

2. The hardware barrier comes mainly from running models locally with Ollama. Switching to the API services of well-known vendors removes it, with relatively good privacy protection and at no cost. (The small models an ordinary computer can run locally are generally also available online through free APIs.)

Optimal options

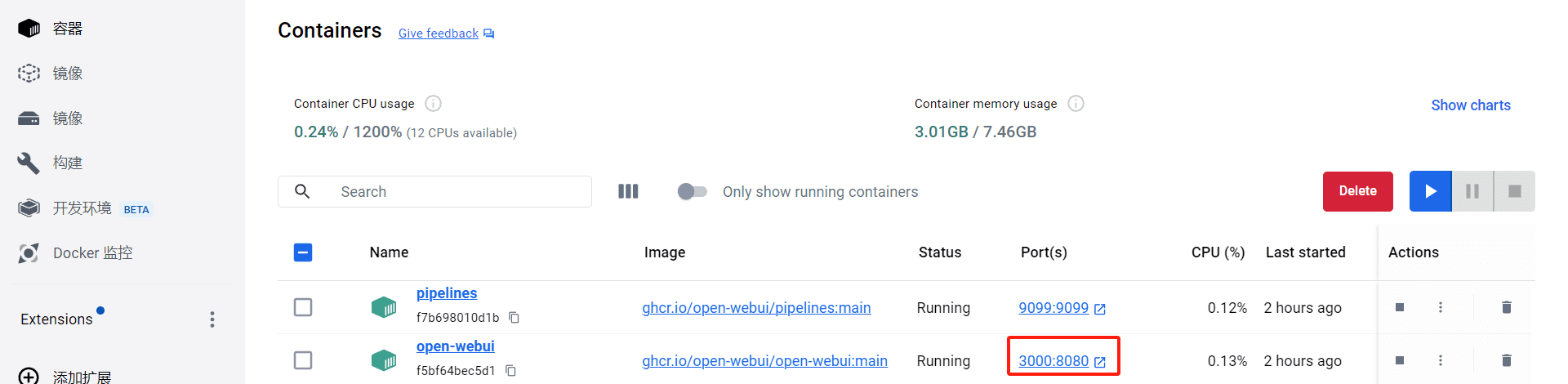

1. Deploy Open WebUI with Docker (locally or on a free cloud service) and connect it to "small" model APIs

For local-only use, the computer only needs to be able to run Docker.

2. Self-deploy NextChat (or use a third-party instance) and connect it to "small" model APIs

Self-deploying NextChat requires your own domain name, while using a third-party NextChat instance carries the risk of leaking your keys.

These deployment options are intended for experienced users. Inexperienced beginners are better off using mature products; losing time to unexpected problems is not worth it.

Optimal deployment option 1

1. Deploy Docker

Local: search for a local Docker installation tutorial yourself.

Cloud: search for free cloud Docker resources yourself; here I use Koyeb. (It is not directly accessible from within China and requires a proxy.)

2. Deploy Open WebUI in Docker

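Local: see the Open WebUI documentation for details. Install it first with the `docker run` command given there (it serves the interface on port 3000). The following command then updates an existing `open-webui` container to the latest image; run it periodically to stay up to date:

```bash
docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui
```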

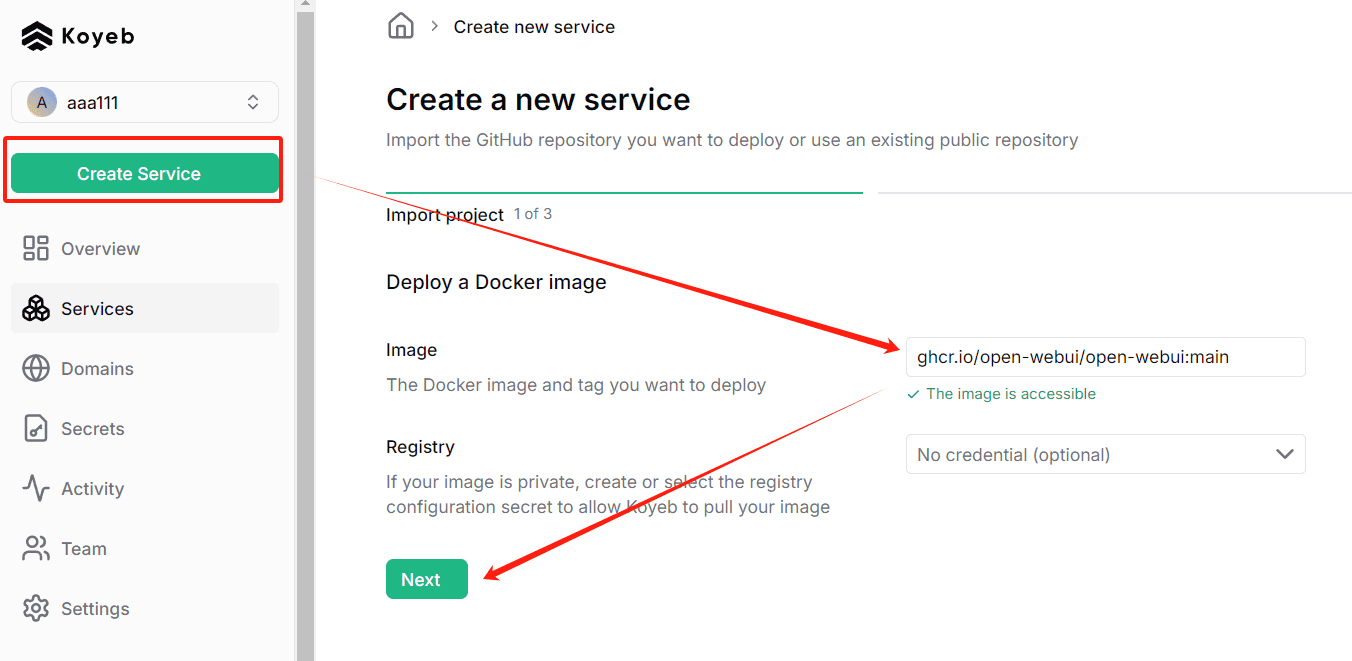

Cloud: after registering with Koyeb, click Create Service and enter the following Docker image:

```
ghcr.io/open-webui/open-webui:main
```

3. Start Open WebUI

Local startup: accessible by default at http://localhost:3000/

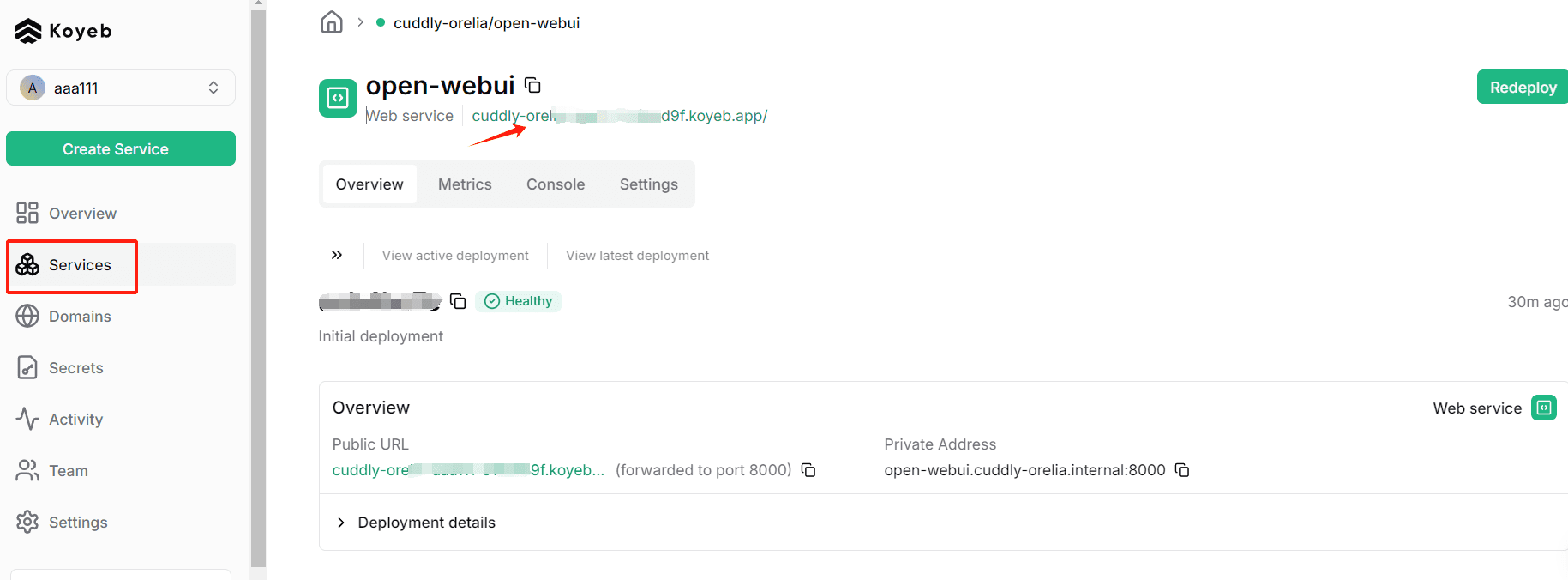
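If the page does not load, it helps to check whether anything is answering on the port at all. A small standard-library sketch; `is_up` is a hypothetical helper, and port 3000 assumes the default mapping mentioned above.

```python
import urllib.error
import urllib.request

# An opener that ignores proxy environment variables, since the target is usually local.
_DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if something at `url` answers HTTP at all."""
    try:
        with _DIRECT.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, only with an error status (e.g. 404): it is running.
        return True
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, DNS failure, or a malformed URL.
        return False


print(is_up("http://localhost:3000/"))
```

`False` usually means the container is not running or the port mapping differs; `True` with a blank page points to a browser or network issue instead.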

Cloud startup: once the Koyeb deployment completes, click here to open it. (The downside is that this domain cannot be accessed directly from within China, and binding your own domain requires a paid account.)

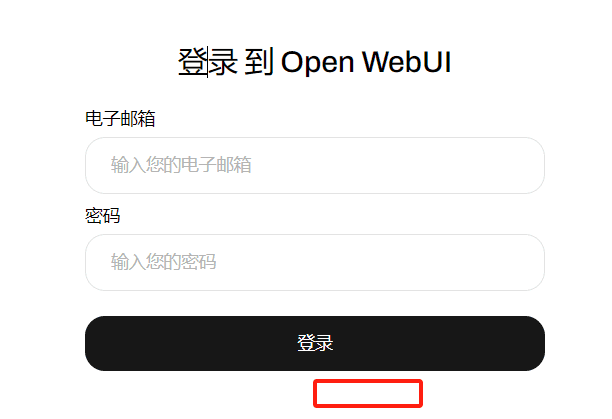

After startup, register an account; by default, the first registered account becomes the administrator. I have already registered, so only the login page is shown here; on your first visit you will see a "Sign up" link.

4. Apply for free "small" model APIs

I recommend OpenRouter; I have been using its free models to write novels for a year. Here is how to obtain OpenRouter's model API.

PS: A domestic vendor offering free small-model APIs: SiliconFlow

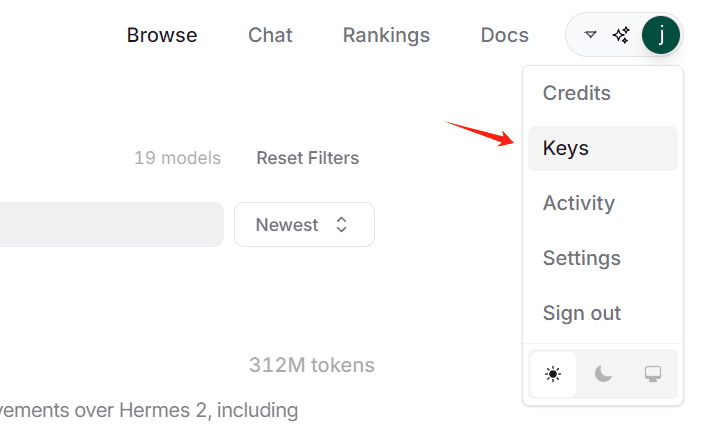

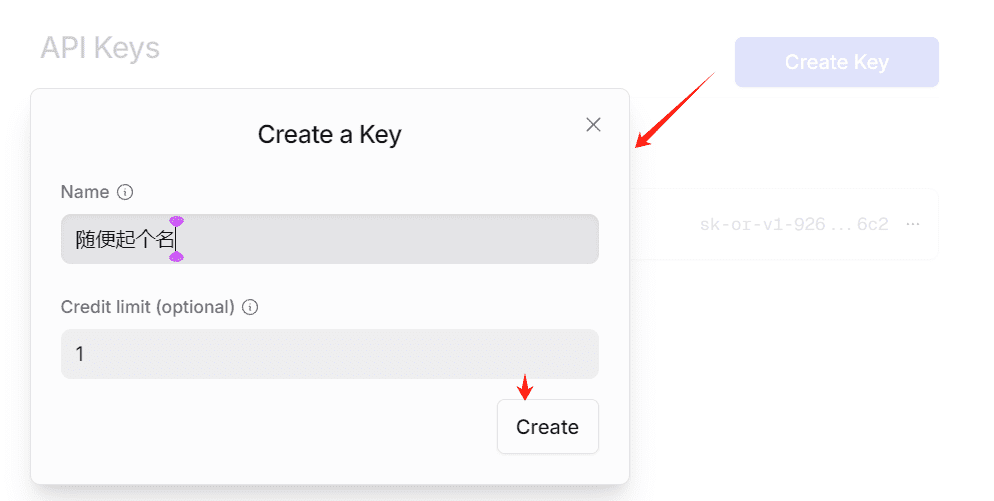

4.1 Create a key

You will get a string starting with sk-; this is your key. Copy it and save it locally, because it cannot be copied again once the page is closed.

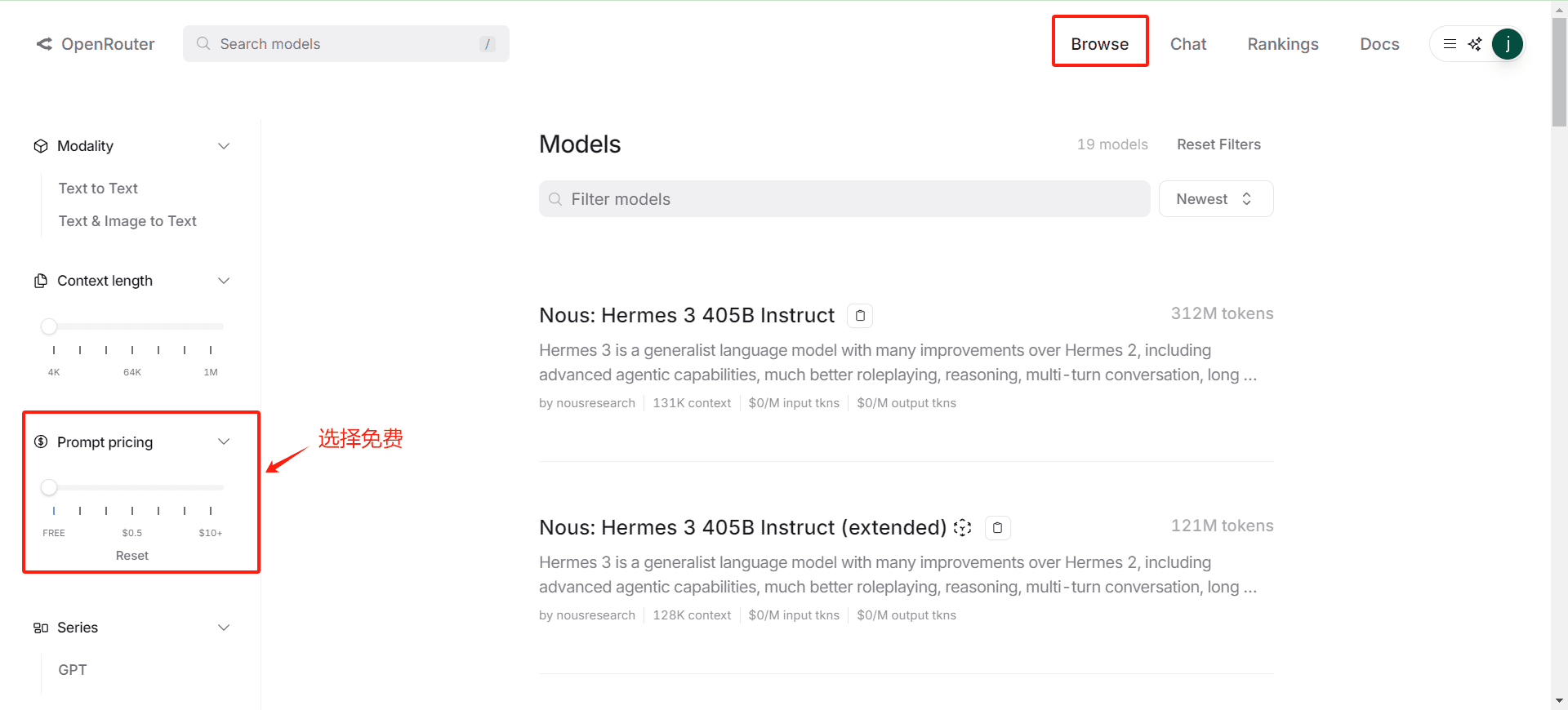
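Since the key is shown only once, one common way to "save it locally" for scripts is an environment variable rather than a value pasted into code. A sketch; the variable name `OPENROUTER_API_KEY` and the helper `load_openrouter_key` are assumptions of this example, not something OpenRouter requires.

```python
import os


def load_openrouter_key(var: str = "OPENROUTER_API_KEY") -> str:
    """Read the API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(var, "").strip()
    # Keys from the page above start with "sk-"; anything else is probably a paste error.
    if not key.startswith("sk-"):
        raise ValueError(f"{var} is not set or does not look like an 'sk-...' key")
    return key


# Usage (after e.g. `export OPENROUTER_API_KEY=sk-...` in your shell):
# api_key = load_openrouter_key()
```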

4.2 Check the list of free models

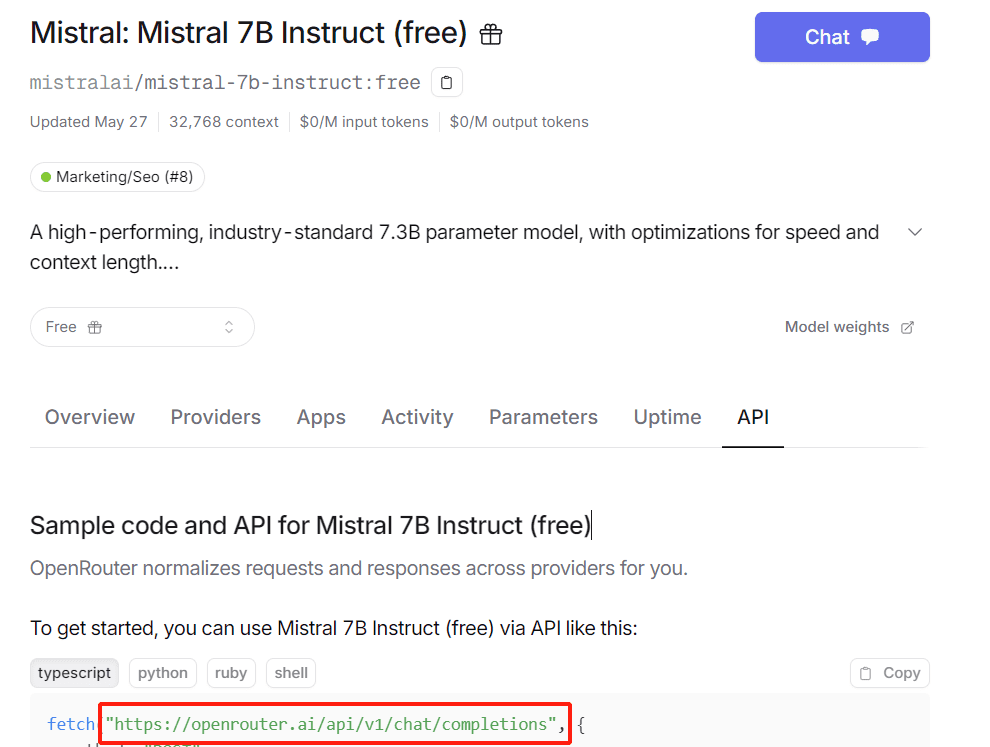
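The free-model list can also be pulled from the API instead of read off the page. A sketch, assuming OpenRouter's `GET /api/v1/models` endpoint returns a `data` array whose entries have an `id` and a `pricing` object with string `prompt`/`completion` prices; check the current API reference, since fields can change.

```python
import json
import urllib.request

MODELS_URL = "https://openrouter.ai/api/v1/models"


def free_model_ids(payload: dict) -> list:
    """Return sorted IDs of models whose prompt and completion prices are both zero."""
    free = []
    for model in payload.get("data", []):
        pricing = model.get("pricing", {})
        # Prices arrive as strings (USD per token); a missing price counts as paid.
        prompt_price = float(pricing.get("prompt", "1"))
        completion_price = float(pricing.get("completion", "1"))
        if prompt_price == 0 and completion_price == 0:
            free.append(model["id"])
    return sorted(free)


def fetch_models(timeout: float = 10.0) -> dict:
    """Download the model list (needs network access)."""
    with urllib.request.urlopen(MODELS_URL, timeout=timeout) as resp:
        return json.load(resp)


# Usage:
# for model_id in free_model_ids(fetch_models()):
#     print(model_id)  # free variants typically end in ":free"
```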

4.3 Get the API request URL

Open any model's page to see it; it is generally: https://openrouter.ai/api/v1/chat/completions

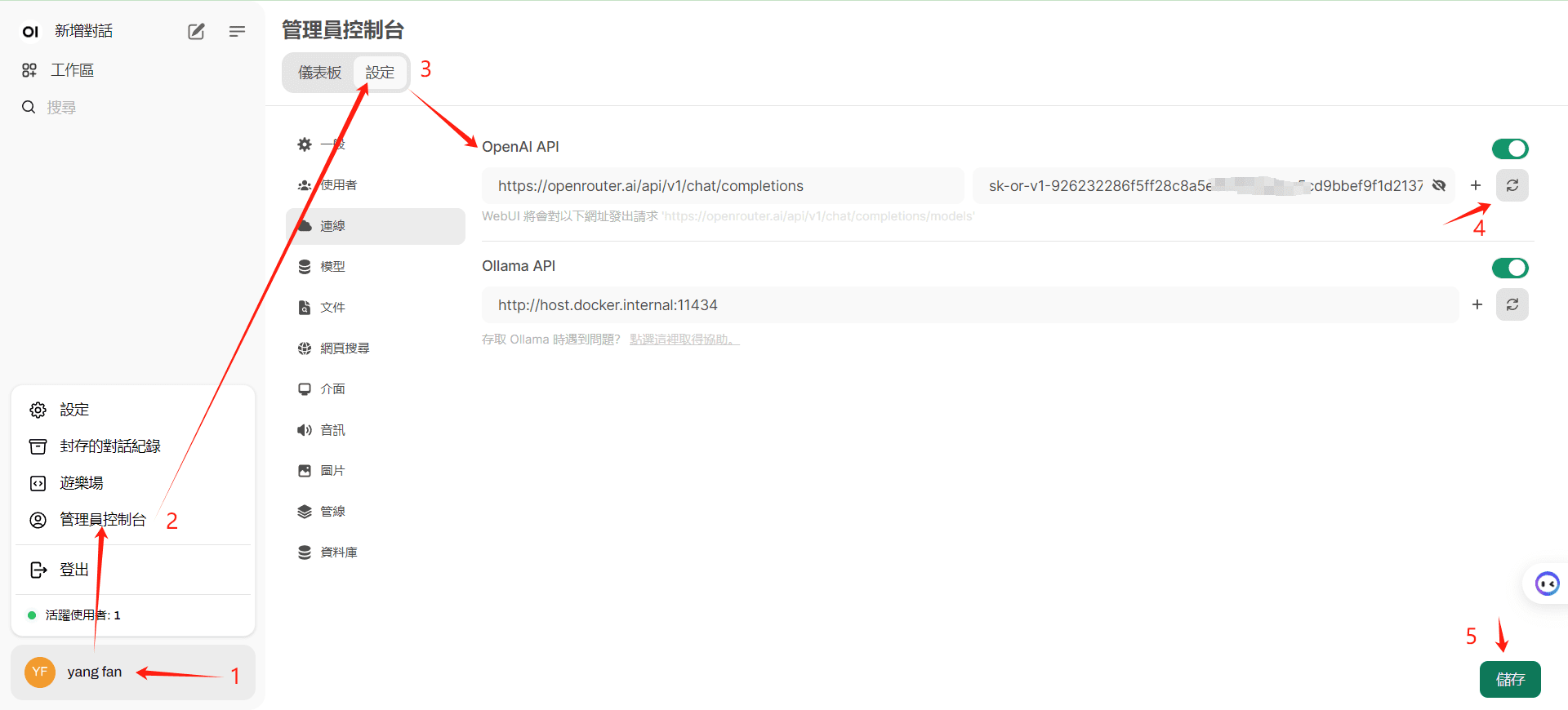
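Because this URL follows the OpenAI chat-completions format, any OpenAI-compatible client can use it. A minimal standard-library sketch, assuming the usual `Authorization: Bearer` header and the `choices[0].message.content` reply shape; the helper names are hypothetical, and the model ID in the usage comment is only an example that may no longer be free.

```python
import json
import urllib.request

CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for OpenRouter (not sent yet)."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the sk-... key from step 4.1
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the first answer's text (needs network access)."""
    with urllib.request.urlopen(build_chat_request(api_key, model, prompt), timeout=60) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


# Usage (model ID is an example; pick one from the free list in 4.2):
# print(ask(api_key, "meta-llama/llama-3.1-8b-instruct:free", "Hello!"))
```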

5. Configure the model in Open WebUI

Note: before clicking Save, click "4" to confirm that the API can be reached.

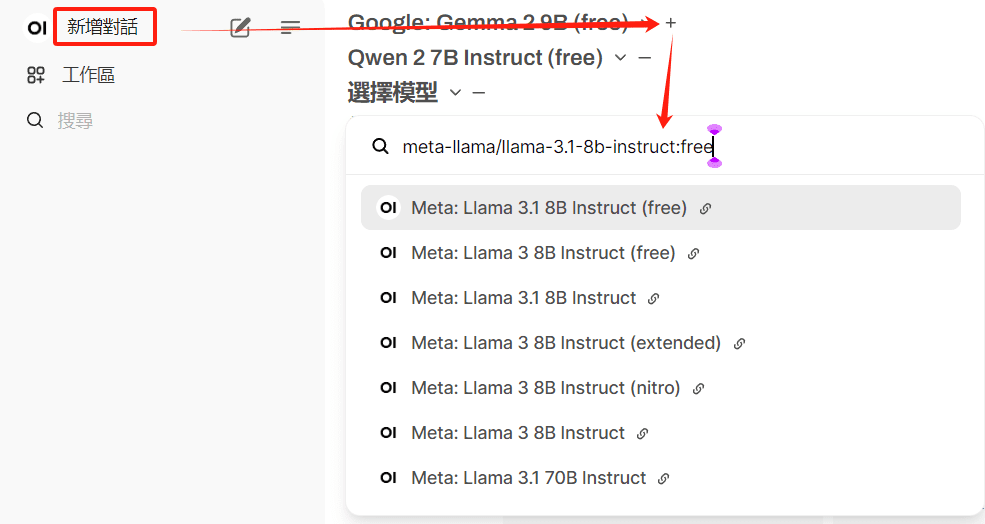

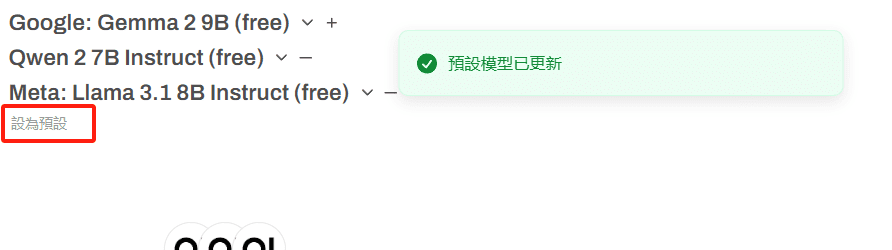
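One detail worth checking here: OpenAI-compatible connections, as in Open WebUI, usually take a base URL rather than the full chat-completions URL from step 4.3, and the client then appends paths such as `/models` (presumably what the connection check queries to list models) and `/chat/completions`. This follows the OpenAI API convention and is an assumption; if the check fails, compare with the screenshot. A tiny hypothetical helper to derive the base URL:

```python
def base_url_from_endpoint(endpoint: str, suffix: str = "/chat/completions") -> str:
    """Strip the chat-completions path to get the base URL a client expects."""
    endpoint = endpoint.rstrip("/")
    if not endpoint.endswith(suffix):
        raise ValueError(f"expected a URL ending in {suffix!r}: {endpoint}")
    return endpoint[: -len(suffix)]


base = base_url_from_endpoint("https://openrouter.ai/api/v1/chat/completions")
models_url = base + "/models"  # the list an OpenAI-compatible client reads to show models
print(base)        # https://openrouter.ai/api/v1
print(models_url)  # https://openrouter.ai/api/v1/models
```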

6. Configure the default model

Multiple free models can be selected

Use of paid models will result in account deactivation

Click Presets to save frequently used models

7. Try a first conversation

Optimal deployment option 2

1. Deploy NextChat in the cloud

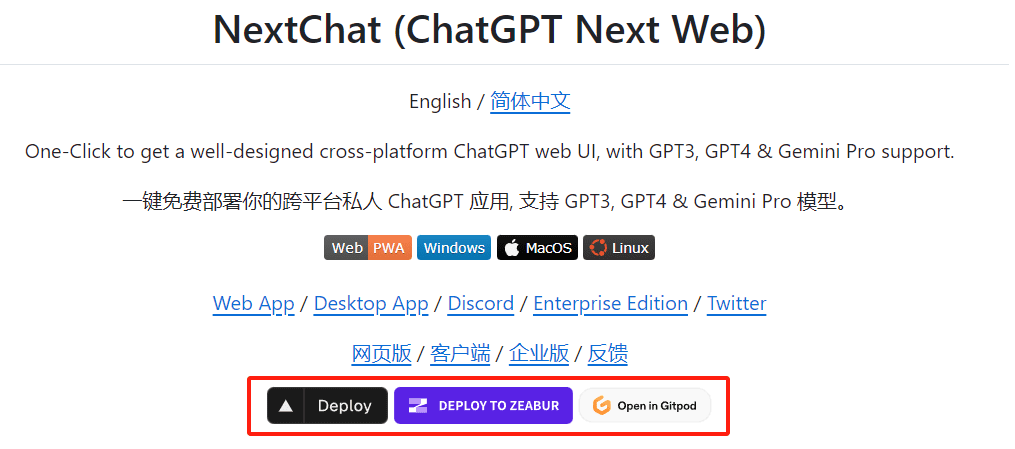

It offers free one-click cloud deployment; see the documentation for details: https://github.com/ChatGPTNextWeb/ChatGPT-Next-Web

2. Here I use the first option, Deploy (Vercel).

Just follow the process, keeping three things in mind:

- Read the documentation carefully and follow its tutorial to set up automatic updates for your project.

- The key variables and the access password are configured during the Vercel installation, so prepare them in advance.

- Binding your own domain name allows direct access from within China.

3. Configure the variables

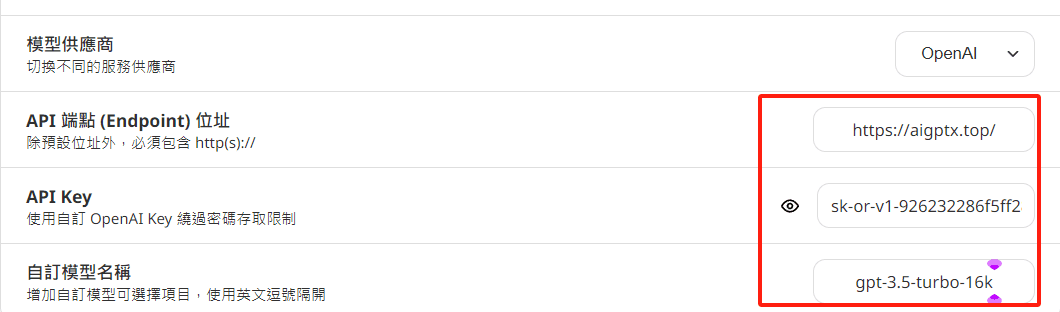

Unlike option 1, NextChat cannot read the model list automatically, so you must define your own list of free models. Note that the API address differs from the one used in option 1.

BASE_URL or OpenAI Endpoint: Set this to https://openrouter.ai/api

OPENAI_API_KEY or OpenAI API Key: Enter your OpenRouter API key here.

CUSTOM_MODELS or Custom Models: Specify the model name as it is listed within OpenRouter.

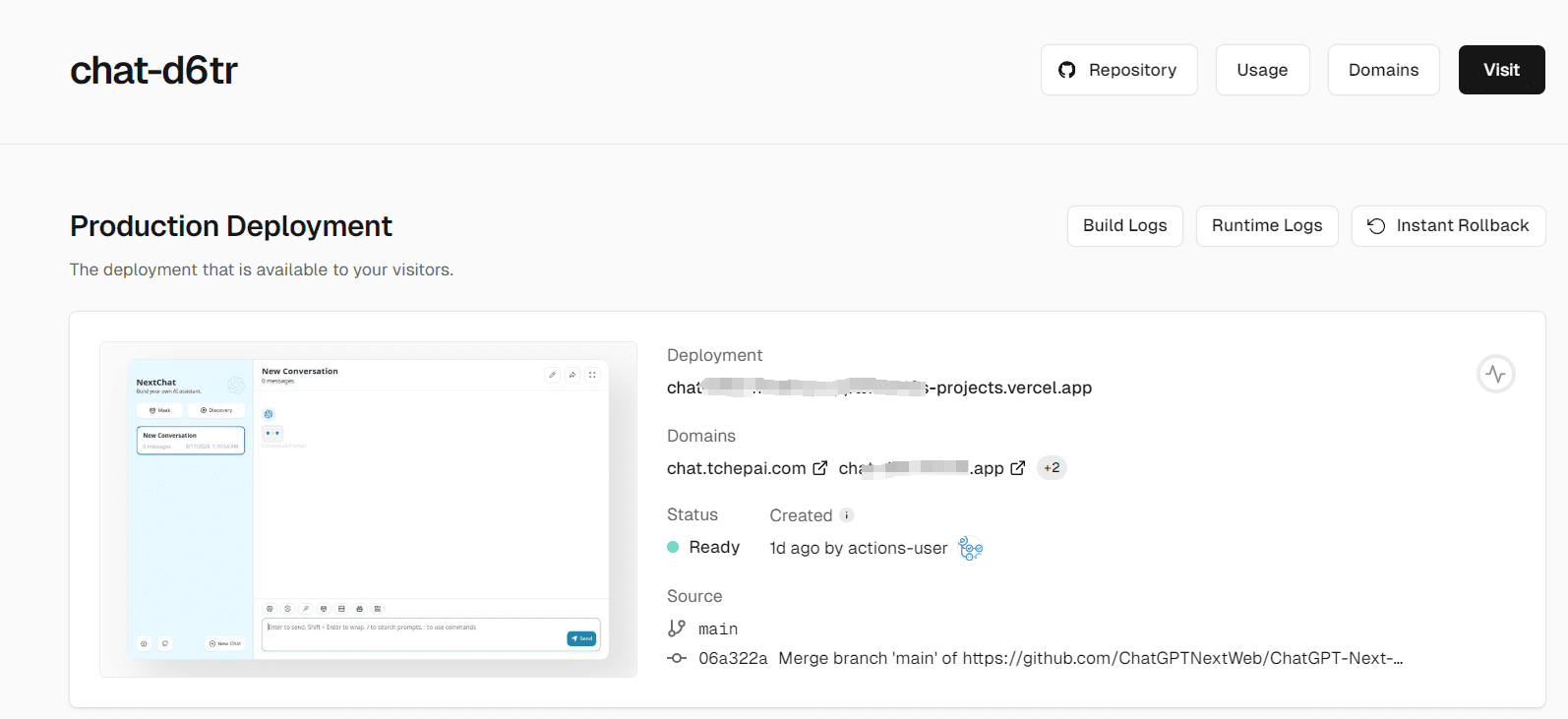
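The variables can be generated in one place before pasting them into Vercel. A sketch under these assumptions: NextChat's `CUSTOM_MODELS` syntax (`-all` hides the built-in models, `+name` adds one, entries comma-separated) and its `CODE` variable for the access password, as described in its README; and NextChat appending the `/v1/chat/completions` path to `BASE_URL` itself, which would explain why the address here stops at `/api`. `nextchat_env` is a hypothetical helper and the model IDs are examples that may change.

```python
def nextchat_env(api_key: str, models: list, access_code: str = "") -> dict:
    """Environment variables for a NextChat deployment that talks to OpenRouter."""
    env = {
        # Assumed: NextChat adds the /v1/... path itself, so the base stops at /api.
        "BASE_URL": "https://openrouter.ai/api",
        "OPENAI_API_KEY": api_key,
        # "-all" hides NextChat's built-in model list; "+id" adds each OpenRouter model.
        "CUSTOM_MODELS": ",".join(["-all"] + [f"+{m}" for m in models]),
    }
    if access_code:
        env["CODE"] = access_code  # access password for the deployed site
    return env


example = nextchat_env(
    "sk-or-v1-REPLACE_ME",
    ["meta-llama/llama-3.1-8b-instruct:free", "google/gemma-2-9b-it:free"],
    access_code="change-me",
)
for key, value in example.items():
    print(f"{key}={value}")
```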

4. Deployment completion screen

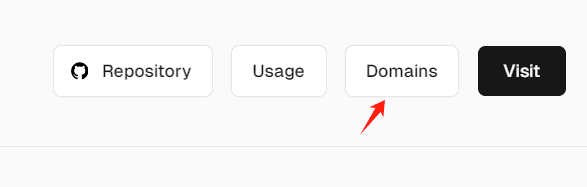

5. Bind a domain name

This solves the problem of access from within China.

6. You can also configure an API key for a model separately in the settings

For example, you can configure an OhMyGPT key, which provides a small amount of free GPT-4 credit per day. (Another address offering stable API keys is hidden here to prevent abuse.)

Another free API key project: https://github.com/chatanywhere/GPT_API_free

My deployed NextChat instance (do not enter sensitive information; it works once you enter your own API key): https://chat.tchepai.com/

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
