Diffbot GraphRAG LLM: an LLM inference service grounded in external real-time knowledge graph data

Overview

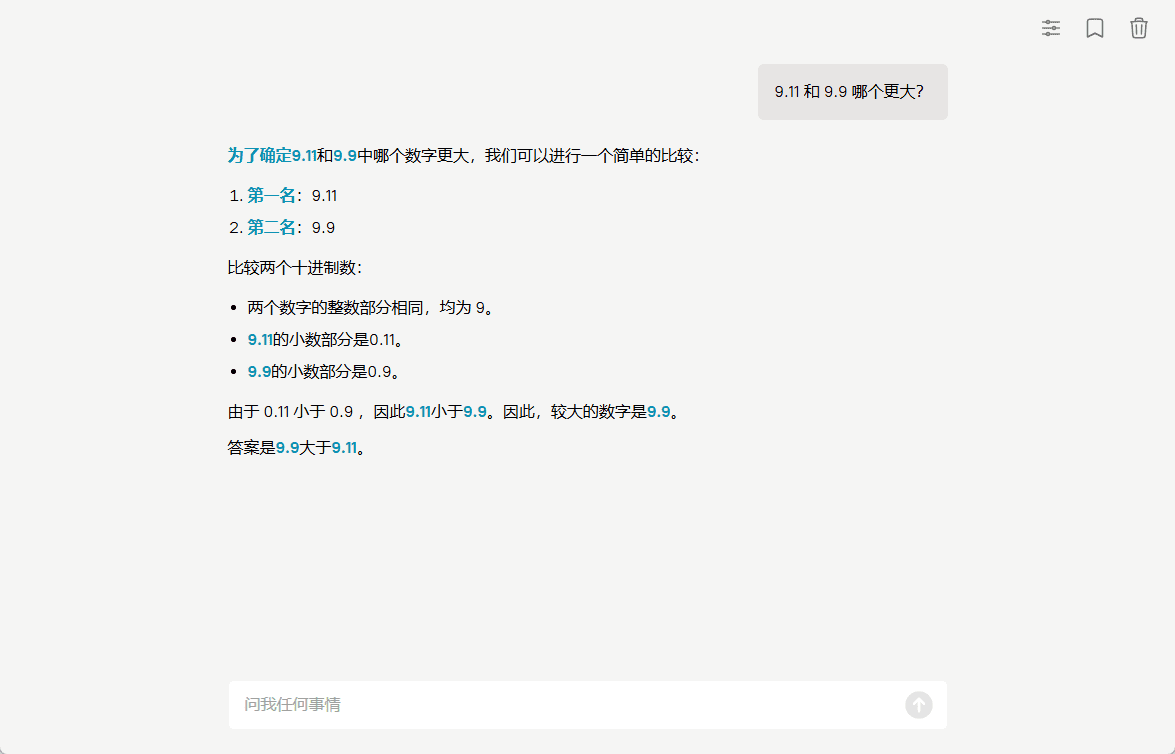

The Diffbot LLM Inference Server is a large language model system built on the Llama architecture with additional optimizations. Its defining feature is the combination of a real-time Knowledge Graph with Retrieval-Augmented Generation (RAG) to form a GraphRAG system. It can extract and process web page content in real time, and it retrieves knowledge with accurate, cited facts. Two models are available: an 8B-parameter model based on Llama 3.1 (diffbot-small) and a 70B-parameter model based on Llama 3.3 (diffbot-small-xl). On benchmarks such as MMLU-Pro the system performs strongly, and on tasks involving real-time data it is reported to outperform mainstream models including GPT-4 and ChatGPT. The project is fully open source and supports both local deployment and cloud API access, giving developers flexibility in how they use it.

Try it online: https://diffy.chat/

Features

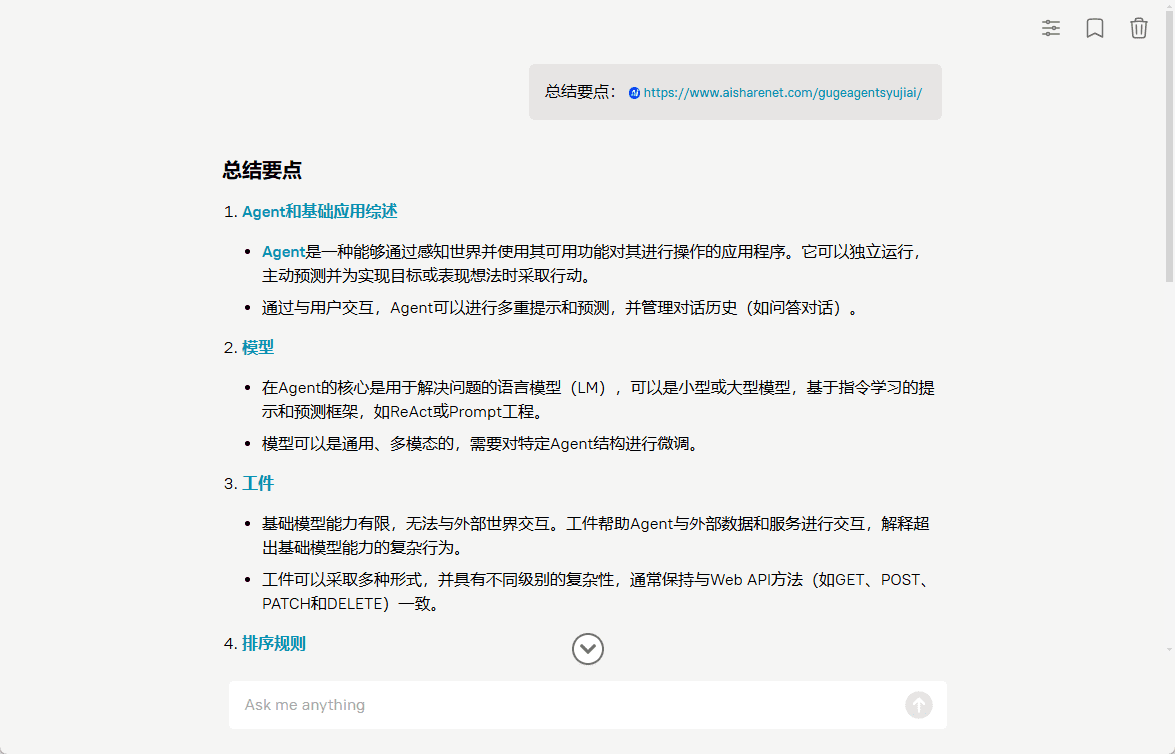

- Real-time extraction and summarization of web page content from a URL

- Accurate, knowledge-graph-based fact retrieval and citation

- Support for the Diffbot Knowledge Graph Query Language (DQL)

- Image understanding and description

- Built-in JavaScript interpreter for running code and doing arithmetic

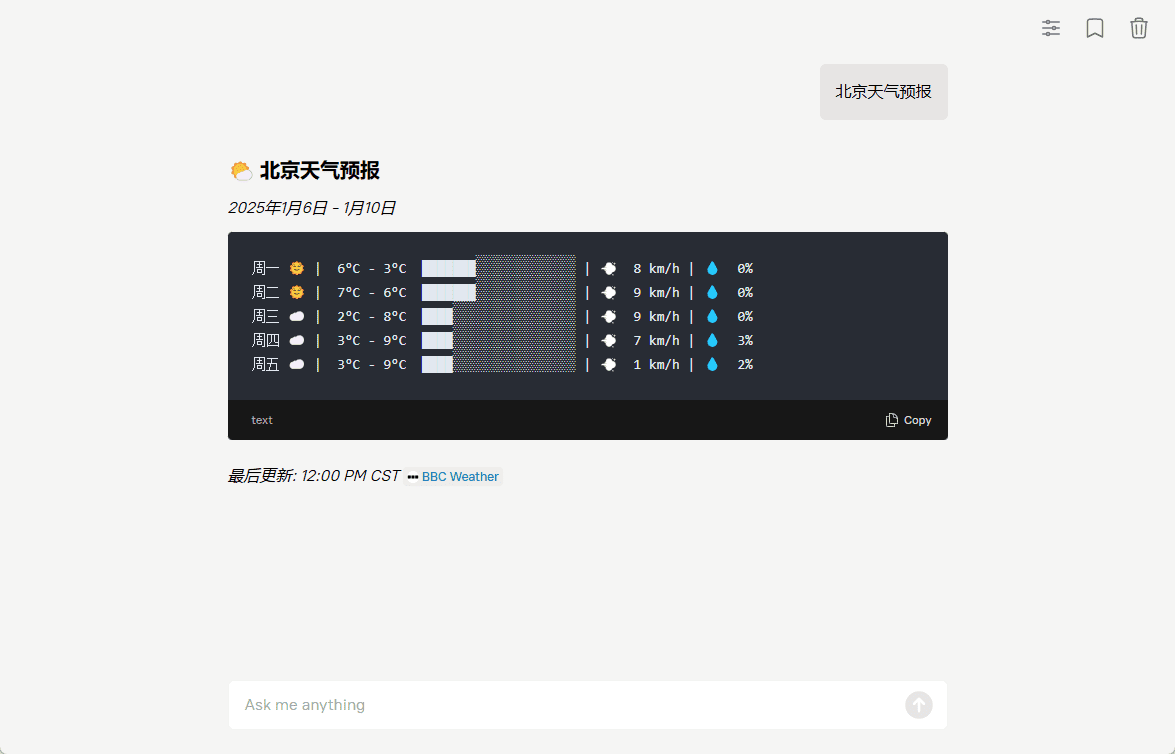

- ASCII-art weather forecast generation

- Docker containerized deployment

- REST API service

- Custom tool extensions

- Deployment options for multiple hardware configurations

Usage Guide

1. Deployment options

The system can be used in two ways: local deployment or cloud API calls.

Local deployment:

- Confirm the hardware requirements:

  - diffbot-small: at least one Nvidia A100 40G GPU

  - diffbot-small-xl: at least two Nvidia H100 80G GPUs (FP8 format)

- Docker deployment steps:

# 1. Pull the Docker image
docker pull docker.io/diffbot/diffbot-llm-inference:latest

# 2. Run the Docker container (the model is downloaded automatically from HuggingFace)
docker run --runtime nvidia --gpus all -p 8001:8001 --ipc=host \
  -e VLLM_OPTIONS="--model diffbot/Llama-3.1-Diffbot-Small-2412 --served-model-name diffbot-small --enable-prefix-caching" \
  docker.io/diffbot/diffbot-llm-inference:latest
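Once the container is running, it can presumably be queried in the same way as the cloud service. The sketch below is only an illustration under stated assumptions: it assumes the local server exposes an OpenAI-compatible endpoint under `/rag/v1` on the mapped port 8001 and that it accepts the served model name `diffbot-small`. The URL path, the placeholder API key, and the helper names `build_request` and `ask_local` are not confirmed by this article. It uses only the Python standard library.

```python
import json
import urllib.request

# Assumption: the container serves the same /rag/v1 path as the cloud API.
LOCAL_BASE_URL = "http://localhost:8001/rag/v1"


def build_request(question, model="diffbot-small", base_url=LOCAL_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "temperature": 0,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Placeholder key; whether a local server checks it is an assumption.
            "Authorization": "Bearer EMPTY",
        },
        method="POST",
    )


def ask_local(question):
    """Send the request to the local server and return the first answer's text."""
    with urllib.request.urlopen(build_request(question), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask_local("What is the Diffbot Knowledge Graph?")` would send the request. Nothing is sent when the functions are merely defined, so the request can be inspected first with `build_request(...)`.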

Cloud API calls:

- Get access credentials:

- Register at https://app.diffbot.com/get-started to get a free developer token.

- Python code example:

from openai import OpenAI

# Point the OpenAI client at the Diffbot endpoint
client = OpenAI(
    base_url="https://llm.diffbot.com/rag/v1",
    api_key="<your_diffbot_token>",
)

# Create a chat completion request
completion = client.chat.completions.create(
    model="diffbot-small-xl",
    temperature=0,
    messages=[
        {
            "role": "user",
            "content": "Your question",
        }
    ],
)

print(completion)
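The example above prints the whole response object. In practice one usually wants only the answer text, and it is safer not to hard-code the token. The following is a minimal sketch of both ideas. The environment variable name `DIFFBOT_TOKEN` and the helper names are hypothetical, and the attribute layout (`choices[0].message.content`) is the one the OpenAI Python client uses for chat completions.

```python
import os
from types import SimpleNamespace


def get_token(env_var="DIFFBOT_TOKEN"):
    """Read the API token from the environment (variable name is hypothetical)."""
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return token


def answer_text(completion):
    """Return the text of the first choice of a chat completion object."""
    return completion.choices[0].message.content


# Stand-in object with the same attribute layout as the client's response,
# so the helper can be tried without a network call.
fake = SimpleNamespace(
    choices=[SimpleNamespace(message=SimpleNamespace(content="Hello from Diffbot"))]
)
print(answer_text(fake))  # prints: Hello from Diffbot
```

With a real client, `api_key=get_token()` would replace the literal token, and `print(answer_text(completion))` would replace `print(completion)`.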

2. Core features

- Web page content extraction:

  - Processes any web page URL in real time

  - Automatically extracts key information and generates summaries

  - Preserves citations to the original sources

- Knowledge Graph queries:

  - Precise search with the Diffbot Query Language (DQL)

  - Support for queries over complex knowledge relationships

  - Access to a knowledge base that is updated in real time

- Image processing:

  - Image understanding and description

  - Image analysis that can be combined with text prompts

- Code interpretation:

  - Built-in JavaScript interpreter

  - Real-time math calculations

  - Handling of simple program logic
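The capabilities listed above are all reached through ordinary chat messages. As a rough illustration, the sketch below builds one request message per capability. The prompts, URLs, and helper names are made up for this example, and the image message uses the OpenAI-style content-part layout on the assumption that the service accepts it; the article does not confirm the exact format.

```python
def text_message(prompt):
    """Plain-text user message."""
    return [{"role": "user", "content": prompt}]


def summarize_url_message(url):
    """Ask for a summary of a web page, with sources cited."""
    return text_message(f"Summarize the key points of {url} and cite your sources.")


def image_message(prompt, image_url):
    """Text plus image in the OpenAI-style content-part layout (assumed supported)."""
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }]


# One illustrative message list per capability described in the text.
examples = {
    "web_extraction": summarize_url_message("https://www.diffbot.com/"),
    "knowledge_graph": text_message("Who is the CEO of Diffbot? Cite the source."),
    "image": image_message("Describe this image.", "https://example.com/photo.png"),
    "code_interpreter": text_message("What is 1234 * 5678? Use code to compute it."),
}

for name, messages in examples.items():
    print(name, "->", messages[0]["role"], type(messages[0]["content"]).__name__)
```

Any of these lists can be passed as the `messages` argument of `client.chat.completions.create(...)`. Which internal tool the model uses for a given prompt (web extraction, DQL, or the JavaScript interpreter) is decided by the service, not by the caller.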

3. Adding custom tools

To add new functionality, refer to the add_tool_to_diffbot_llm_inference.md document in the project and follow its steps to add a custom tool.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
