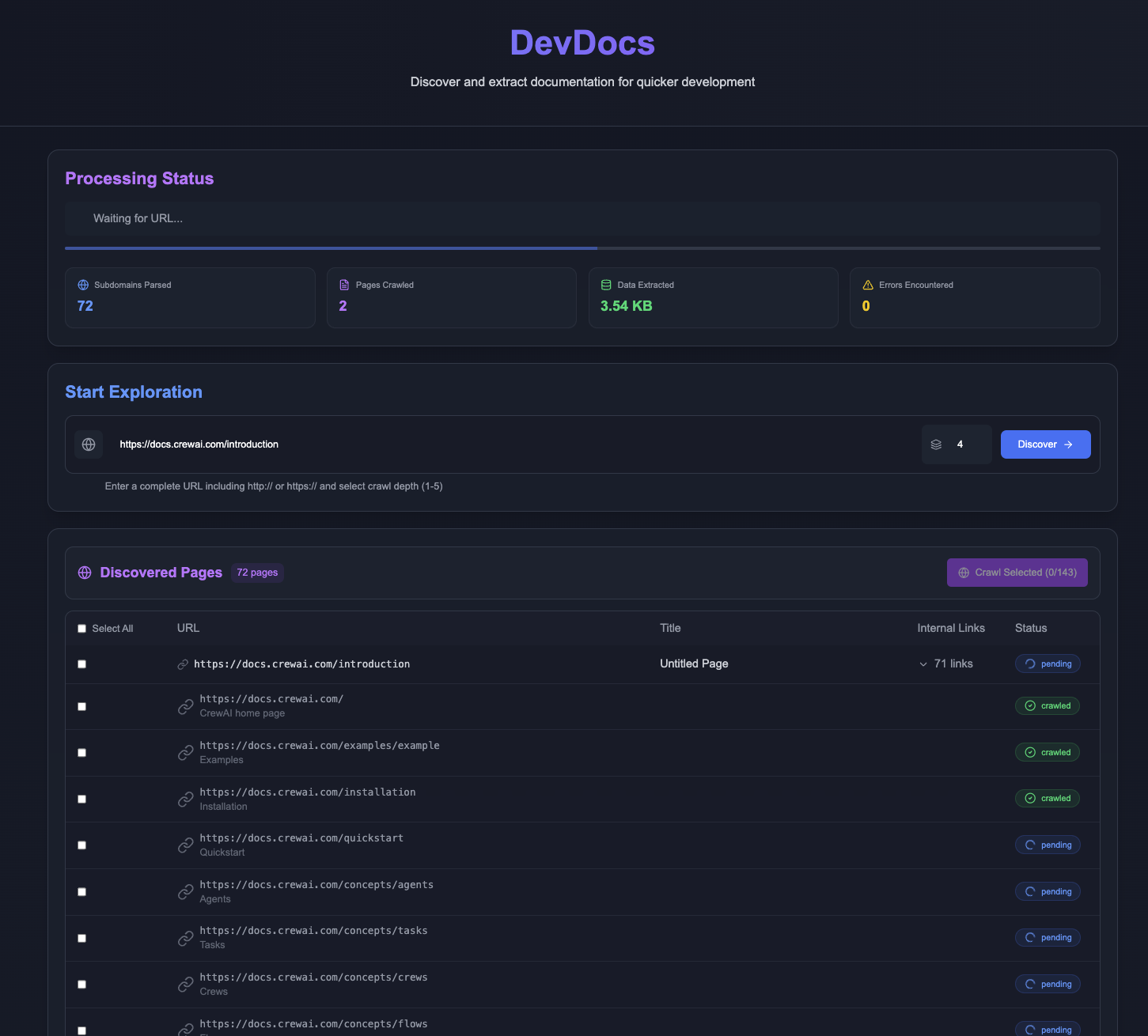

DevDocs: an MCP service for quickly crawling and organizing technical documentation

General Introduction

DevDocs is a completely free and open source tool developed by the CyberAGI team and hosted on GitHub. Designed for programmers and software developers, it starts from the URL of a technical document, automatically crawls the related pages, and organizes them into concise Markdown or JSON files. It has a built-in MCP server that integrates with Claude and other large models, so users can query document content in natural language. DevDocs' goal is to reduce weeks of documentation research to hours and help developers get up to speed quickly with new technologies. It is suitable for individual developers, teams, and enterprise users, and it is currently free, so anyone can download and use it.

Function List

- Intelligent document crawling: Input a URL and automatically crawl the relevant pages, supporting 1-5 levels of depth.

- Organize into multiple formats: Convert captured content into Markdown or JSON files for easy reading and further processing.

- MCP server integration: A built-in MCP server works with large models such as Claude so you can query documents in natural language.

- Automatic link discovery: Identify and categorize sub-links on a page to ensure complete content.

- Parallel processing acceleration: Crawl multiple pages in multiple threads to increase efficiency.

- Selective crawling: Users can specify what to extract to avoid irrelevant information.

- Error recovery mechanism: Automatically retry when crawling fails to ensure stability.

- Complete logging: Record every step of the operation for easy troubleshooting.

Usage Guide

Installation process

DevDocs runs with Docker and is easy to install. Here are the detailed steps:

- Preparing the environment

- Git and Docker need to be installed:

- Git: Download and install it from git-scm.com.

- Docker: Download Docker Desktop from docker.com and install it.

- To check that Docker is installed correctly, type the following in the terminal:

docker --version

If you see the version number, the installation succeeded.
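
The prerequisite check can also be scripted. The following is a minimal sketch, assuming a POSIX shell; `missing_tools` is a hypothetical helper name used only for illustration and is not part of DevDocs.

```shell
# Print the name of every listed tool that cannot be found on PATH.
# missing_tools is a hypothetical helper, not something DevDocs ships.
missing_tools() {
  for tool in "$@"; do
    # command -v succeeds only when the shell can resolve the name
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing=$(missing_tools git docker)
if [ -n "$missing" ]; then
  echo "Install before continuing:" $missing
else
  echo "git and docker found"
fi
```

This only confirms that the binaries exist. Running `docker info` additionally fails when the Docker daemon is not running, so it is a useful second check.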

- Cloning Code

- Open a terminal (CMD or PowerShell for Windows, Terminal for Mac/Linux).

- Enter the command to download DevDocs:

git clone https://github.com/cyberagiinc/DevDocs.git

- Go to the project directory:

cd DevDocs

- Starting services

- Run the startup script according to the operating system:

- Mac/Linux:

./docker-start.sh

- Windows:

docker-start.bat

- Windows users can manually set folder permissions if they run into permission problems:

icacls logs /grant Everyone:F /T
icacls storage /grant Everyone:F /T
icacls crawl_results /grant Everyone:F /T

- After starting, wait a few seconds until the terminal shows that the services are running.

- Accessing the tool

- Open your browser and go to

http://localhost:3001

to access the DevDocs front-end interface.

- Other service addresses:

- Backend API:

http://localhost:24125

- Crawl4AI service:

http://localhost:11235
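
Because the services take a few seconds to come up, it can help to poll the addresses above before opening the browser. The following is a rough sketch, assuming `curl` is installed; `probe` and `wait_for` are hypothetical helper names, and treating any HTTP response as "up" is an assumption rather than a documented DevDocs health check.

```shell
# Return success if the URL answers with any HTTP response within 2 seconds.
# probe and wait_for are hypothetical helpers, not part of DevDocs.
probe() {
  curl -s -o /dev/null --max-time 2 "$1"
}

# Poll a URL once per second, up to a number of attempts (default 30).
wait_for() {
  url=$1
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    if probe "$url"; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "down: $url"
  return 1
}

# Example, after running the startup script:
#   wait_for http://localhost:3001
#   wait_for http://localhost:24125
#   wait_for http://localhost:11235
```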

Main Functions

1. Crawl technical documentation

- Steps:

- Paste the target URL in the interface input box, such as

https://docs.example.com

- Select the crawl depth (1-5 levels, default 5).

- Click "Start Crawling".

- Wait for it to finish; the interface will show the list of crawled pages.

- Notes:

- Crawling is fast, processing up to 1000 pages per minute.

- The results are saved in the <project directory>/crawl_results folder.

- Advanced options:

- Selective crawling is available: check the content you want to extract.
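
To confirm that a crawl produced output, you can count the files in the results folder from the project directory. This is only a sketch: `count_files` is a hypothetical helper, and the `.md` and `.json` extensions are assumptions based on the Markdown and JSON output formats described in this article.

```shell
# Count files with a given extension anywhere under a directory.
# count_files is a hypothetical helper; the extensions used below are assumptions.
count_files() {
  dir=$1
  ext=$2
  # tr strips the padding some wc implementations add around the number
  find "$dir" -type f -name "*.$ext" | wc -l | tr -d ' '
}

if [ -d crawl_results ]; then
  echo "Markdown files: $(count_files crawl_results md)"
  echo "JSON files: $(count_files crawl_results json)"
fi
```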

2. Organize the content of the document

- Operation:

- After crawling, the content is automatically organized into Markdown or JSON files.

- View the files in <project directory>/crawl_results; they are in Markdown format by default.

- Switching format:

- Select "Export to JSON" in the interface settings to get output suitable for large model fine-tuning.

3. Using the MCP server with large models

- Preparation:

- Download and install the Claude Desktop App (anthropic.com).

- DevDocs' MCP server runs locally by default and requires no additional configuration.

- Steps:

- Open the Claude App.

- Enter a question, such as "What does this document say".

- Claude will read the data from the MCP server and answer.

- Extended usage:

- Place local documents in the <project directory>/storage folder so that Claude can access them directly.

- Complex questions are supported, such as "How is this technology implemented?".

4. Logging and monitoring

- Viewing logs:

- Log files are in <project directory>/logs, including:

- frontend.log: Front-end logs.

- backend.log: Back-end logs.

- mcp.log: MCP server logs.

- View live logs with Docker:

docker logs -f devdocs-backend

- Stopping the services:

- Press Ctrl+C in the terminal to shut down all services.
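
When troubleshooting, it is often quicker to pull only the error lines out of the three log files. The following is a minimal sketch: `recent_errors` is a hypothetical helper, and matching on the word "error" is an assumption about how DevDocs words its log messages.

```shell
# Show the last N lines mentioning "error" (case-insensitive) in each log file.
# recent_errors is a hypothetical helper; the "error" keyword is an assumption.
recent_errors() {
  dir=$1
  n=${2:-20}
  for f in "$dir"/frontend.log "$dir"/backend.log "$dir"/mcp.log; do
    # Skip logs that have not been created yet
    [ -f "$f" ] || continue
    echo "== $f"
    # grep exits non-zero when nothing matches; that is not a failure here
    grep -i 'error' "$f" | tail -n "$n" || true
  done
}

if [ -d logs ]; then
  recent_errors logs 5
fi
```

Run it from the project directory so that the relative `logs` path resolves.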

5. Utility scripts

- The project offers a variety of scripts located in <project directory>/scripts:

- check_mcp_health.sh: Check the MCP server status.

- debug_crawl4ai.sh: Debug the crawling service.

- view_result.sh: View crawl results.

- How to run:

- Go to the scripts directory in the terminal and run ./<script name>.

Summary of the operation process

- Install Docker and Git and download the code.

- Run the startup script to access the interface.

- Enter the URL, crawl and organize the document.

- Query content with Claude for efficiency.

Application Scenarios

- Rapid learning of new technologies

Enter a technical documentation URL and DevDocs crawls and organizes all the pages. You can read them directly, or use Claude to ask questions about specific uses, and get up to speed with the new technology in a few days.

- Teamwork

Crawl internal company documents and generate Markdown files. Team members query through the MCP server to quickly share knowledge.

- Development of large model applications

Use DevDocs to collect technical information and output JSON files. Accelerate AI application development by combining MCP servers and large models.

- Individual project development

Independent developers crawl documentation with DevDocs, work with VSCode and Claude, and prototype products in days.

QA

- Is there a fee for DevDocs?

No charge. It is an open source tool, free to use, and future API features are planned.

- Is programming experience required?

Not required. Installation takes a few commands, and after that everything is done through the interface.

- What if the crawl fails?

Check the network, or view the logs in <project directory>/logs. A common problem is insufficient permissions; follow the installation steps to adjust them.

- Are private sites supported?

Yes, as long as the website is accessible. For internal websites, make sure there is network connectivity.

- How does it differ from FireCrawl?

DevDocs is free, crawls fast (up to 1000 pages/minute), and supports 5 levels of depth and MCP servers, while FireCrawl is paid and has limited features.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
