Behind the DeepSeek Storm: Andrew Ng Warns That Open-Model Competition Will Reshape AI Values in China and the U.S.

Dear friends,

The buzz over DeepSeek this week made several important trends clear to many people: (i) China is catching up with the U.S. in generative AI, with significant implications for the AI supply chain; (ii) open-weight models are commoditizing the foundation-model layer, which creates opportunities for application developers; and (iii) scaling up is not the only path to AI progress. Despite the industry's heavy focus on, and hype around, compute, algorithmic innovations are rapidly driving down training costs.

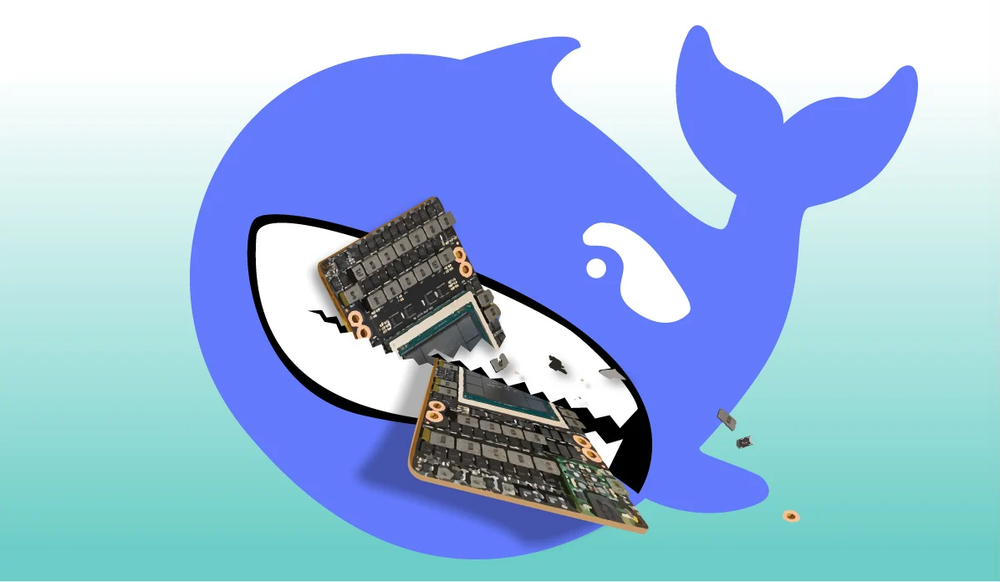

About a week ago, the Chinese company DeepSeek released DeepSeek-R1, a model whose benchmark performance is comparable to OpenAI's o1 and whose weights are openly available under the MIT license. In Davos last week, many business leaders from non-technical backgrounds asked me about it. On Monday, the stock market saw a "DeepSeek sell-off": shares of a number of U.S. tech companies, including NVIDIA, tumbled (they had partially recovered at the time of writing).

I think DeepSeek drives home the following points:

China is closing the gap with the U.S. in generative AI. When ChatGPT was released in November 2022, the U.S. was significantly ahead of China in generative AI. Perceptions shift slowly, so even recently I heard friends in both China and the U.S. say they thought China was behind. In reality, the gap has narrowed rapidly over the past two years. With Chinese models such as Qwen (which my team has been using for months), Kimi, InternVL, and DeepSeek, it is clear that China has been closing the gap, and in areas such as video generation it has at times even seemed to be in the lead.

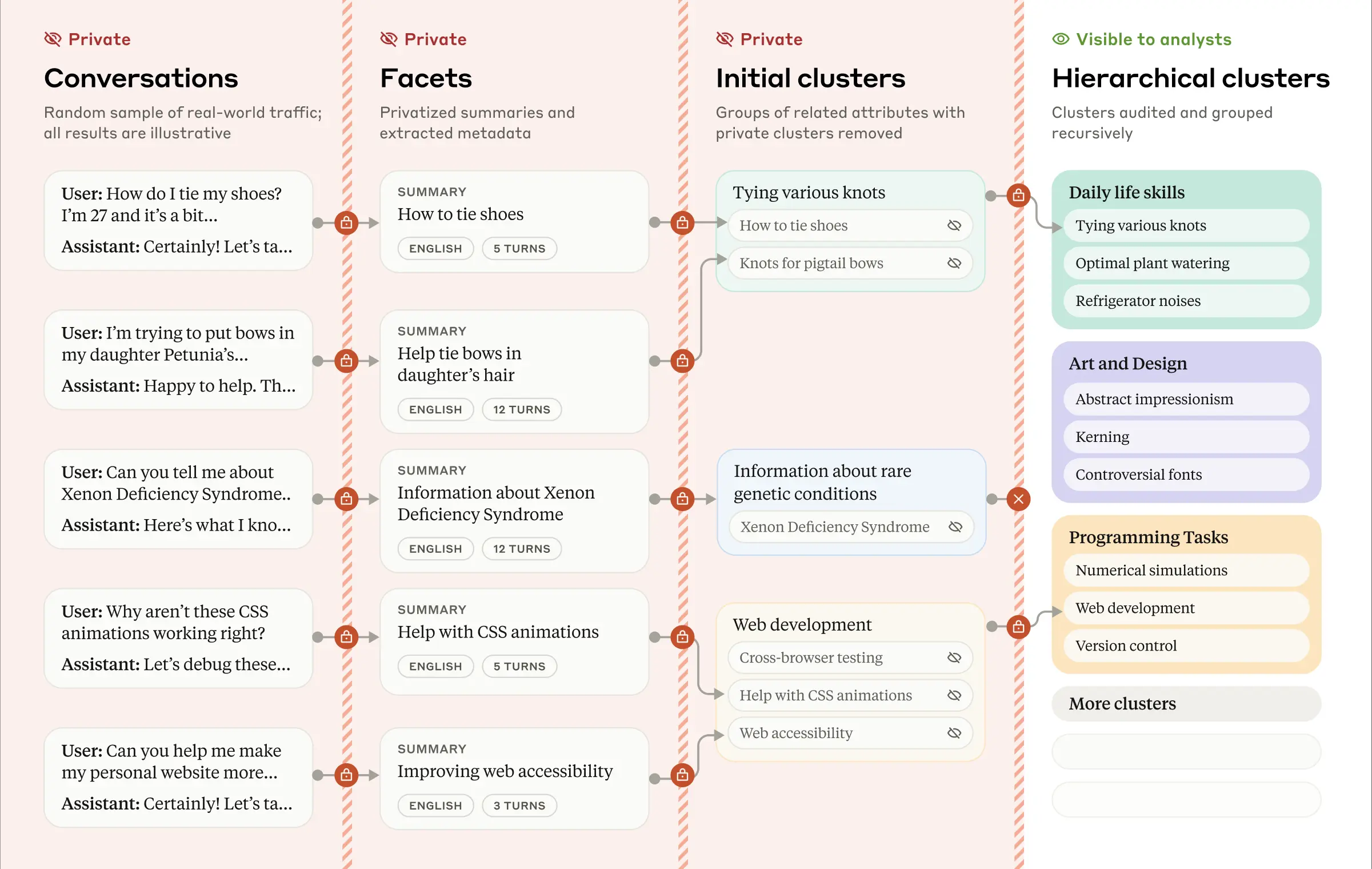

I am heartened that DeepSeek-R1 was released as an open-weight model with a detailed technical report. In contrast, several U.S. companies have pushed for regulation that would restrict open source by hyping hypothetical risks such as AI-driven human extinction. It is now clear that open-source and open-weight models are a key part of the AI supply chain: many companies will use them. If the U.S. continues to stymie open source, China will come to dominate this part of the supply chain, and most companies will end up using models that reflect China's values more than America's.

Open-weight models are commoditizing the foundation-model layer. As I have written before, large language model token prices are falling rapidly, and open weights accelerate this trend while giving developers more choice. OpenAI's o1 costs $60 per million output tokens, while DeepSeek R1 costs only $2.19. This nearly 30-fold difference brought the trend of falling prices to the attention of many people.
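To make the price comparison concrete, here is a small sketch that works out the ratio from the two prices quoted above. The monthly workload of 50 million output tokens is a hypothetical figure chosen only for illustration, and the helper names are my own, not part of any vendor's API.

```python
# Prices per million output tokens, as quoted in the text (USD).
O1_PRICE_PER_M = 60.00
R1_PRICE_PER_M = 2.19


def price_ratio(expensive: float, cheap: float) -> float:
    """Return how many times higher the first price is than the second."""
    return expensive / cheap


def output_cost(tokens: int, price_per_million: float) -> float:
    """Return the cost in USD of generating `tokens` output tokens."""
    return tokens / 1_000_000 * price_per_million


ratio = price_ratio(O1_PRICE_PER_M, R1_PRICE_PER_M)
print(f"o1 costs about {ratio:.1f}x as much as R1 per output token")

# Hypothetical workload (an assumption, not a figure from the article).
MONTHLY_TOKENS = 50_000_000
print(f"o1: ${output_cost(MONTHLY_TOKENS, O1_PRICE_PER_M):,.2f} per month")
print(f"R1: ${output_cost(MONTHLY_TOKENS, R1_PRICE_PER_M):,.2f} per month")
```

The exact ratio is a little over 27, which is what "nearly 30-fold" rounds up from. Input-token prices are not given in the text, so a real bill would differ from this output-only estimate.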

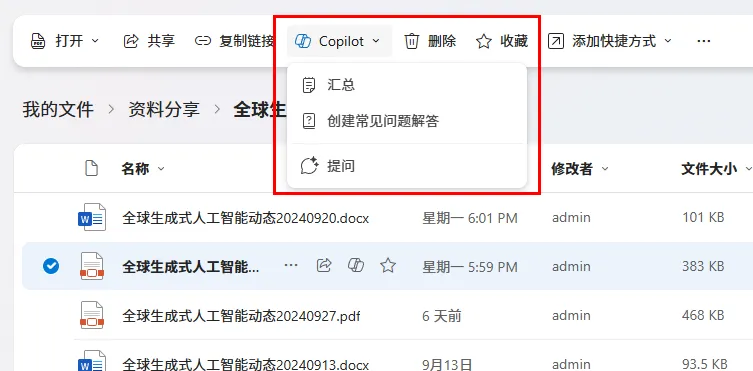

The business of training foundation models and selling API access is tough. Many companies in this space are still looking for a way to recoup the high cost of training. The article "AI's $600B Question" lays out this challenge well (to be clear, I think the foundation model companies are doing great work, and I hope they succeed). In contrast, building applications on top of foundation models presents a huge business opportunity. Now that others have spent billions training such models, you can access them for a few dollars to build customer service chatbots, email summarizers, AI doctors, legal document assistants, and much more.

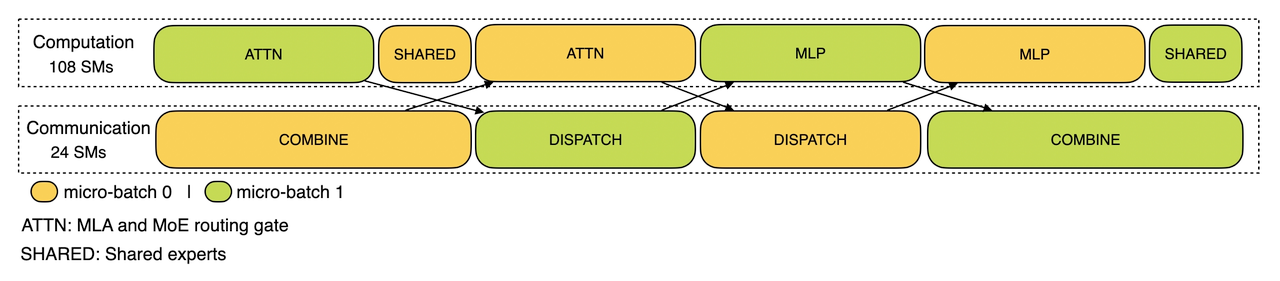

Scaling up is not the only path to AI progress. There has been a lot of hype around scaling up models as the way to drive progress. To be fair, I was an early proponent of scaling up. A number of companies raised billions of dollars by promoting the narrative that, with more capital, they could (i) scale up and (ii) predictably improve performance. This has led to an outsized focus on scaling up at the expense of the many other ways to make progress. Driven in part by the U.S. AI chip export restrictions, the DeepSeek team had to develop many optimizations to train on less capable H800 GPUs, ultimately keeping the model's training cost (excluding research costs) under $6 million.

It remains to be seen whether this will actually reduce demand for compute. Sometimes making each unit of a good cheaper results in more total spending on that good. I think that, in the long run, human demand for intelligence and compute has practically no ceiling, so I expect humanity to use more intelligence even as it gets cheaper.

I saw many different interpretations of DeepSeek's progress on social media, as if it were a Rorschach test onto which people projected their own positions. I think the geopolitical implications of DeepSeek-R1 have yet to be worked out, but it is great news for AI application developers. My team has already been brainstorming ideas that are possible only because we now have easy access to an open, advanced reasoning model. This continues to be a great time to build AI applications!

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
