DeepSeek API large-scale application of hard disk caching technology: a key step in the civilianization of large models

-- Partial Discussion of the Deeper Logic of Big Model API Price Wars, User Experience Optimization, and Technology Inclusion

Image credit: DeepSeek official documentation

At a time when competition in the field of big AI models is heating up, DeepSeek recently announced that its API service innovatively uses theHard Disk Cache TechnologyDeepSeek's price adjustment is a shocking one - the price of the cache hit portion is directly reduced to one tenth of the previous price, once again refreshing the bottom line of the industry price. As a third-party reviewer who has long been concerned about big model technology and industry trends, I believe that DeepSeek's move is not only a cost revolution driven by technological innovation, but also an important step to optimize the depth of the big model API user experience and accelerate the generalization of big model technology.

Technological Innovations: Hard Disk Cache Subtleties and Performance Jumps

DeepSeek recognizes that the high cost and latency of large model APIs has long been a constraint to their widespread adoption, and DeepSeek is deeply aware that the contextual repetition that is prevalent in user requests is a key contributor to these problems. For example, in multi-round conversations, each round needs to repeat the input of the previous conversation history; in long text processing tasks, Prompt often contains repeated references. Duplicate Computing Token It wastes arithmetic and increases latency.

To address this pain point, DeepSeek creatively introduces contextual hard disk caching technology. The core principle is to intelligently cache contextual content that is predicted to be reused in the future, such as conversation history, system presets, Few-shot examples, etc., into a distributed hard disk array. When a user initiates a new API request, the system automatically detects whether the prefix part of the input matches the cached content (note: the prefix must be identical to hit the cache). If it does, the system reads the duplicate directly from the high-speed drive cache without recalculating, optimizing both latency and cost.

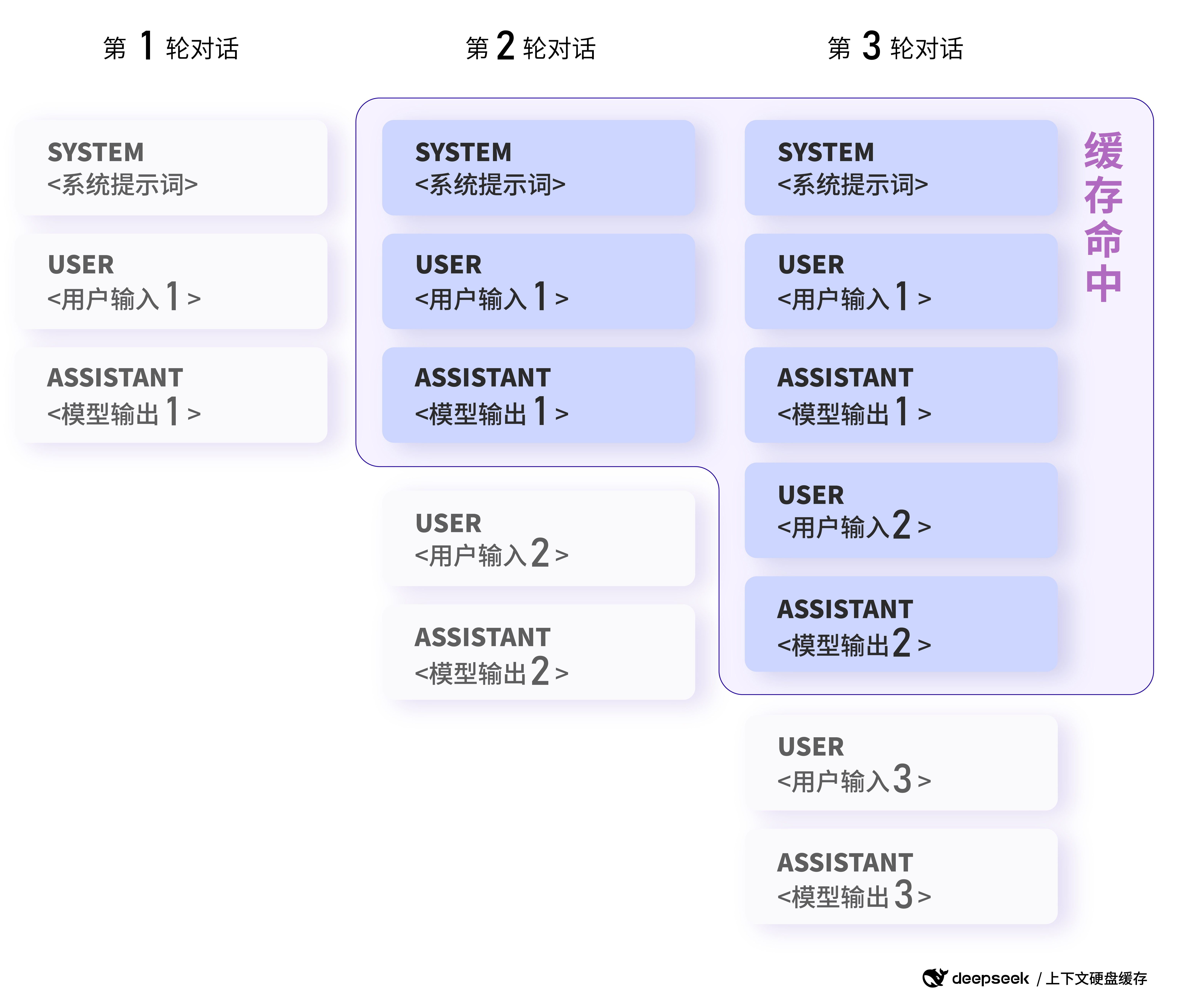

To get a clearer understanding of how caching works, let's look at a couple of DeepSeek Official example provided:

Example 1: Multi-Round Dialogue Scenario

Core feature: subsequent dialog rounds automatically hit the context cache of the previous round.

In multi-round conversations, where users typically ask consecutive questions around a single topic, DeepSeek's hard disk caching can effectively reuse the conversation context. For example, in the following dialog scenario:

First request:

messages: [

{"role": "system", "content": "你是一位乐于助人的助手"},

{"role": "user", "content": "中国的首都是哪里?"}

]

Second request:

messages: [

{"role": "system", "content": "你是一位乐于助人的助手"},

{"role": "user", "content": "中国的首都是哪里?"},

{"role": "assistant", "content": "中国的首都是北京。"},

{"role": "user", "content": "美国的首都是哪里?"}

]

Image credit: DeepSeek official documentation

Example of a cache hit in a multi-round dialog scenario: subsequent dialog rounds automatically hit the context cache of the previous round.

On the second request, since the prefix part (system message + first user message) is exactly the same as the first request, this part will be hit by the cache without having to repeat the computation, thus reducing latency and cost.

According to DeepSeek's official data, for extreme scenarios with 128K inputs and most repetitions, the measured latency of the first token plummeted from 13 seconds to 500 milliseconds, which is an amazing performance improvement. Even in non-extreme scenarios, it can effectively reduce latency and improve user experience.

What's more, DeepSeek's hard disk caching service is fully automated and user-independent. You can enjoy the performance and price advantages of caching without having to change any code or API interface. The new prompt_cache_hit_tokens (cache hits) in the usage field returned by the API lets you see how many cache hits you've received. tokens (number of tokens) and prompt_cache_miss_tokens (number of missed tokens) fields to monitor cache hits in real time to better evaluate and optimize caching performance.

DeepSeek is able to take the lead in applying hard disk caching technology on a large scale because of its advanced model architecture. The MLA structure (Multi-head Latent Attention) proposed by DeepSeek V2 significantly compresses the size of the contextual KV Cache while guaranteeing the performance of the model, making it possible to store the KV Cache on a low-cost hard disk, which lays the foundation for the realization of hard disk caching technology. This makes it possible to store the KV Cache on a low-cost hard disk, laying the foundation for the realization of hard disk caching technology.

understandings DeepSeek-R1 API Cache Hit vs. Price:Using the DeepSeek-R1 API Frequently Asked Questions

Application scenarios: from long text Q&A to code analysis, the boundaries are infinitely expandable!

Hard disk caching technology has a wide range of scenarios that can benefit almost any large model application involving contextual input. The original article lists the following typical scenarios and provides more specific examples:

- A quiz assistant with long preset prompt words:

- Core feature: Fixed System Prompts can be cached, reducing cost per request.

- Example:

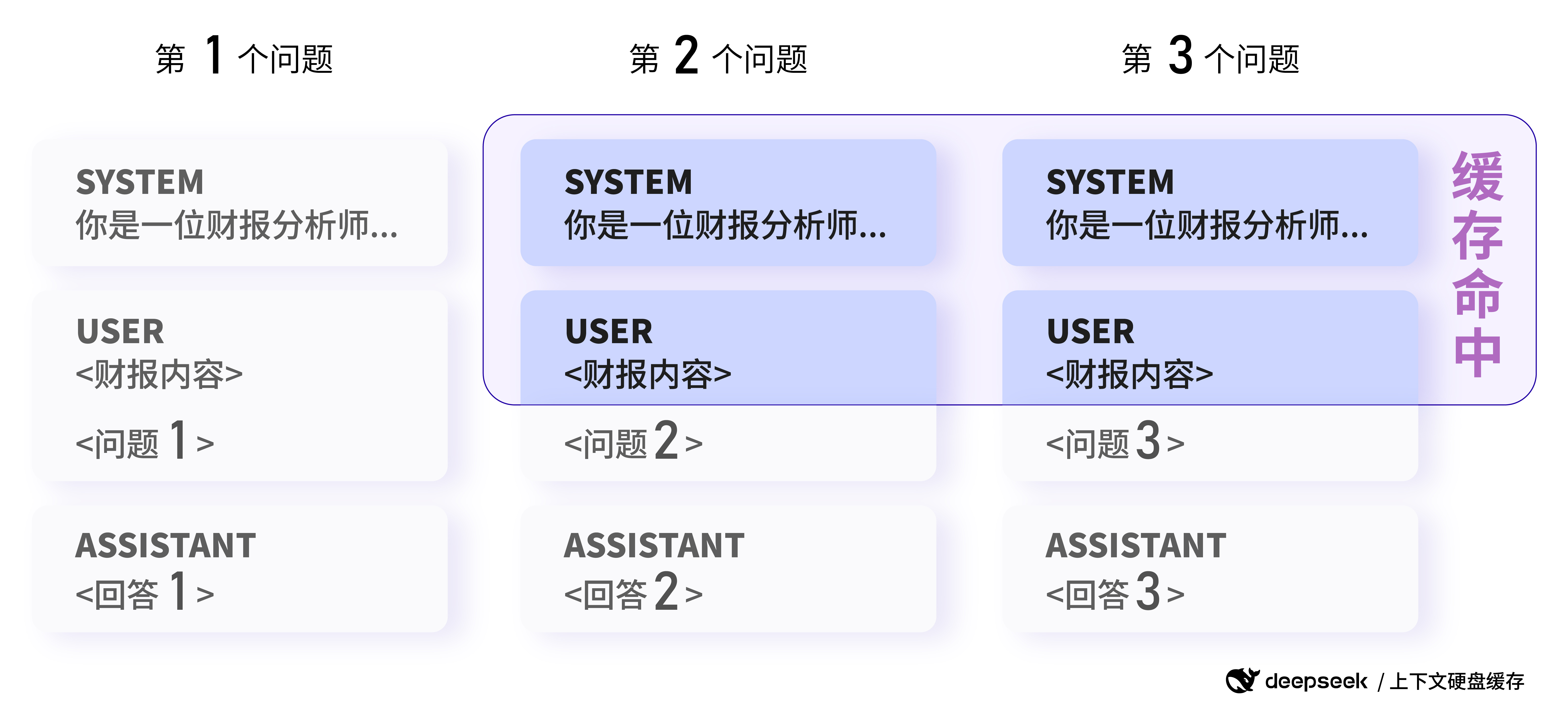

Example 2: Long Text Q&A Scenario

Core feature: multiple analysis of the same document can hit the cache.

Users need to analyze the same earnings report and ask different questions:

First request:

messages: [

{"role": "system", "content": "你是一位资深的财报分析师..."},

{"role": "user", "content": "<财报内容>\n\n请总结一下这份财报的关键信息。"}

]

Second request:

messages: [

{"role": "system", "content": "你是一位资深的财报分析师..."},

{"role": "user", "content": "<财报内容>\n\n请分析一下这份财报的盈利情况。"}

]

In the second request, since the part of the system message and user message has the same prefix as the first request, this part can be hit by the cache, saving computing resources.

- Role-playing app with multiple rounds of dialog:

- Core features: high reuse of dialog history and significant caching.

- (Example 1 has been shown in detail)

- Data analysis for fixed text collections:

- Core features: multiple analyses and quizzes on the same document with high prefix repetition.

- For example, analyzing and quizzing the same financial report or legal document multiple times. (Example 2 has been shown in detail)

- Code analysis and troubleshooting tools at the code repository level:

- Core feature: Code analysis tasks often involve a large number of contexts, and caching can effectively reduce costs.

- Few-shot learning:

- Core feature: Few-shot examples prefixed with Prompt can be cached, reducing the cost of multiple Few-shot calls.

- Example:

Example 3: Few-shot Learning Scenario

Core feature: the same Few-shot example can be hit cached as a prefix.

Users use Few-shot learning to improve the model's effectiveness in historical knowledge quizzing:

First request:

messages: [

{"role": "system", "content": "你是一位历史学专家,用户将提供一系列问题,你的回答应当简明扼要,并以`Answer:`开头"},

{"role": "user", "content": "请问秦始皇统一六国是在哪一年?"},

{"role": "assistant", "content": "Answer:公元前221年"},

{"role": "user", "content": "请问汉朝的建立者是谁?"},

{"role": "assistant", "content": "Answer:刘邦"},

{"role": "user", "content": "请问唐朝最后一任皇帝是谁"},

{"role": "assistant", "content": "Answer:李柷"},

{"role": "user", "content": "请问明朝的开国皇帝是谁?"},

{"role": "assistant", "content": "Answer:朱元璋"},

{"role": "user", "content": "请问清朝的开国皇帝是谁?"}

]

Second request:

messages: [

{"role": "system", "content": "你是一位历史学专家,用户将提供一系列问题,你的回答应当简明扼要,并以`Answer:`开头"},

{"role": "user", "content": "请问秦始皇统一六国是在哪一年?"},

{"role": "assistant", "content": "Answer:公元前221年"},

{"role": "user", "content": "请问汉朝的建立者是谁?"},

{"role": "assistant", "content": "Answer:刘邦"},

{"role": "user", "content": "请问唐朝最后一任皇帝是谁"},

{"role": "assistant", "content": "Answer:李柷"},

{"role": "user", "content": "请问明朝的开国皇帝是谁?"},

{"role": "assistant", "content": "Answer:朱元璋"},

{"role": "user", "content": "请问商朝是什么时候灭亡的"},

]

On the second request, since the same 4-shot example is used as a prefix, this portion can be hit by the cache and only the last question needs to be recomputed, thus significantly reducing the cost of Few-shot learning.

Image credit: DeepSeek official documentation

Example of a cache hit in a data analytics scenario: requests with the same prefix can hit the cache (note: the image here follows the original data analytics example and focuses more on the concept of prefix duplication, which can be interpreted in the same way as the Few-shot example scenario).

These scenarios are just the tip of the iceberg. The application of hard disk caching technology really opens up the imagination for the application of long context large model APIs. For example, we can build more powerful long text authoring tools, handle more complex knowledge-intensive tasks, and develop conversational AI applications with more depth and memory.

Ref: Inspired by Claude

Claude Innovates API Long Text Cache to Dramatically Improve Processing Efficiency and Reduce Costs

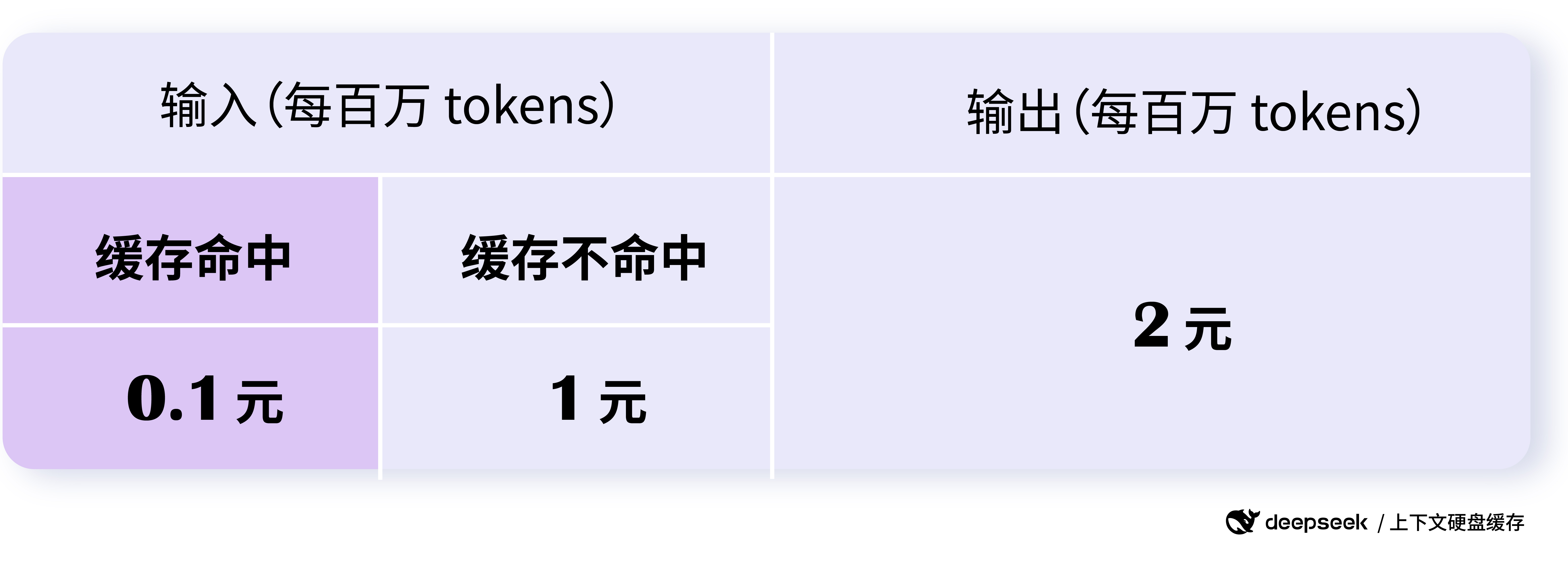

Cost Advantage: Price straight down by orders of magnitude, universal large model ecology

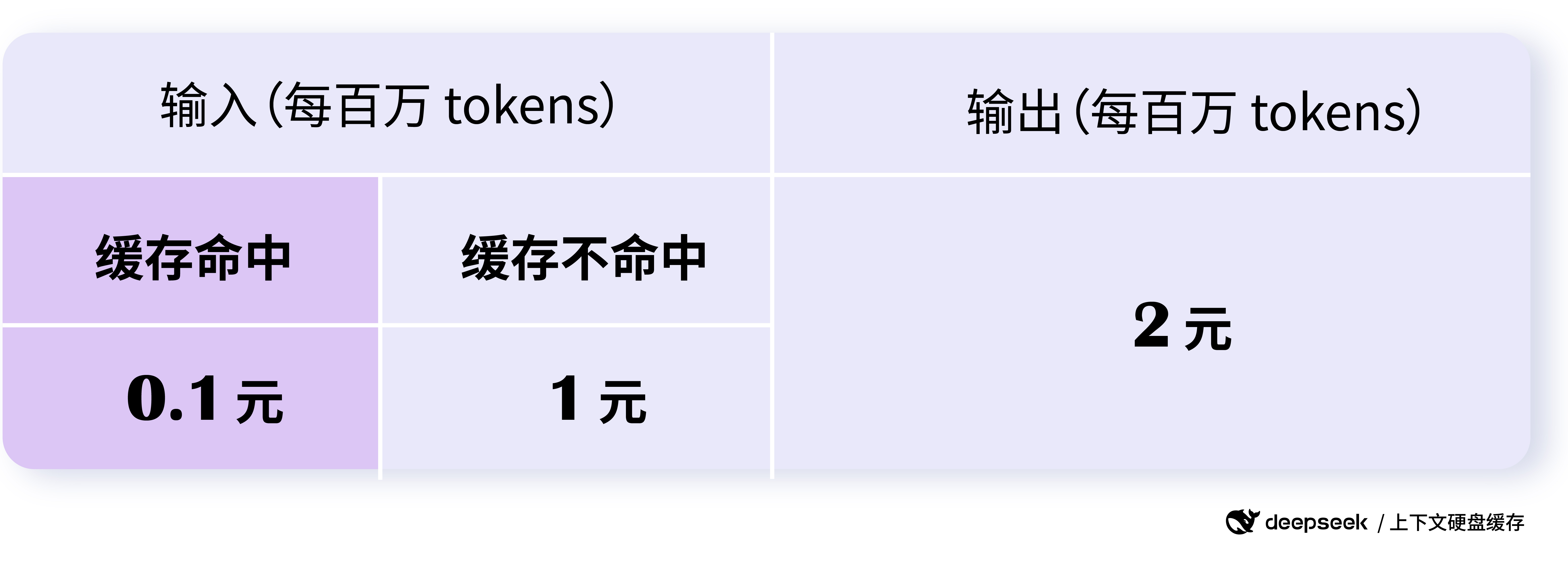

The price adjustment for the DeepSeek API is "epic", with the cache hit portion of the API priced at only $0.10/million tokens and the hit portion at $1/million tokens. The price of the cache hit portion is only $0.1 per million tokens, and the hit portion is only $1 per million tokens, an order of magnitude lower than the previous price.

According to the official data of DeepSeek, it can save up to 90%, and even without any optimization, users can save more than 50% overall. This cost advantage is of great significance in lowering the threshold of large model application and accelerating the popularization of large models.

More noteworthy is DeepSeek's pricing strategy. Cache hits and misses are priced in steps, encouraging users to utilize the cache as much as possible, optimizing the Prompt design, increasing the cache hit rate, and thus further reducing costs. At the same time, neither the cache service itself nor the cache storage space charges any additional fees, which is truly user-friendly.

Image credit: DeepSeek official documentation

DeepSeek API prices have been dramatically reduced, with cache hits costing as little as $0.1 per million tokens

DeepSeek API's significant price reduction will undoubtedly accelerate the evolution of the big model price war. However, unlike the previous simple price competition, DeepSeek's price reduction is a rational price reduction based on technological innovation and cost optimization, which is more sustainable and industry-driven. This healthy price competition will ultimately benefit the entire big model ecosystem, so that more developers and enterprises can enjoy advanced big model technology at a lower cost.

Unlimited streaming and concurrency, secure and reliable caching services

In addition to performance and price advantages, DeepSeek API's stability and security are also trustworthy. DeepSeek API service is designed according to the capacity of 1 trillion tokens per day, with unlimited streaming and concurrency for all users, which ensures the quality of service under high load conditions.

DeepSeek has also given full consideration to data security. Each user's cache is separate and logically isolated from each other, ensuring the security and privacy of user data. Caches that have not been used for a long time will be automatically emptied (usually a few hours to a few days) and will not be kept for a long time or used for other purposes, further reducing potential security risks.

It should be noted that the cache system uses 64 tokens as a storage unit, and anything less than 64 tokens will not be cached. Also, the cache system is "best effort" and does not guarantee 100% cache hits. In addition, cache construction takes seconds, but for long context scenarios, this is perfectly acceptable.

Model Upgrade and Future Outlook

It is worth mentioning that along with the launch of the hard disk caching technology, DeepSeek also announced that the deepseek-chat model has been upgraded to DeepSeek-V3, and the deepseek-reasoner model is the new model DeepSeek-R1. The new models offer improved performance and capabilities at a significantly lower price, further improving the the competitiveness of the DeepSeek API.

According to the official pricing information, DeepSeek-V3 API (deepseek-chat) enjoys a discounted price until 24:00, February 8, 2025, with the price of $0.1/million tokens for cache hit input, $1/million tokens for hit input, and $2/million tokens for output. The DeepSeek-R1 API (deepseek-reasoner) is positioned as an inference model with 32K thought chain length and 8K maximum output length, with the price of $1/million tokens for input (cache hit). DeepSeek-R1 API (deepseek-reasoner) is positioned as an inference model with 32K thought chain lengths and 8K maximum output lengths, with an input price of $1/million tokens for cached hits, $4/million tokens for missed inputs, and $16/million tokens for outputs (all the tokens for the thought chain and the final answer). and all tokens of the final answer).

DeepSeek's series of innovative initiatives demonstrates its continuous technology investment and user-oriented philosophy. We have every reason to believe that with DeepSeek and more innovative forces, big model technology will accelerate its maturity, become universal, and bring more far-reaching changes to all industries.

concluding remarks

DeepSeek API's innovative use of hard disk caching technology and significant price reduction is a milestone breakthrough. It not only solves the long-standing cost and latency pain points of big model APIs, but also brings users better quality and more inclusive big model services through technological innovation.DeepSeek's step may redefine the competition pattern of big model APIs, accelerate the popularization and application of AI technology, and ultimately build a more prosperous, open, and inclusive ecosystem of big models.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...