DeepClaude: A Chat Interface Fusing DeepSeek R1 Chain-of-Thought Reasoning and Claude Creativity

General Introduction

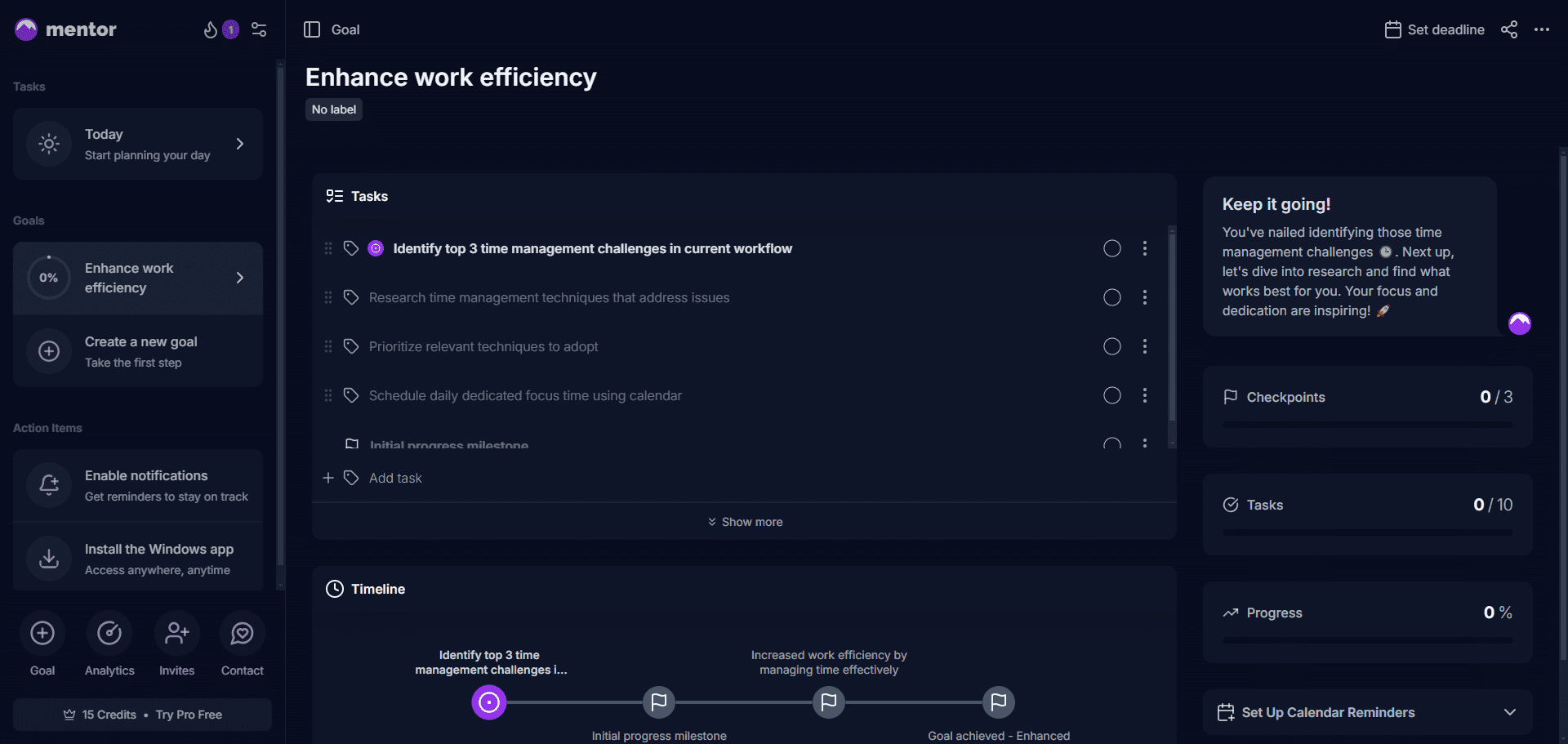

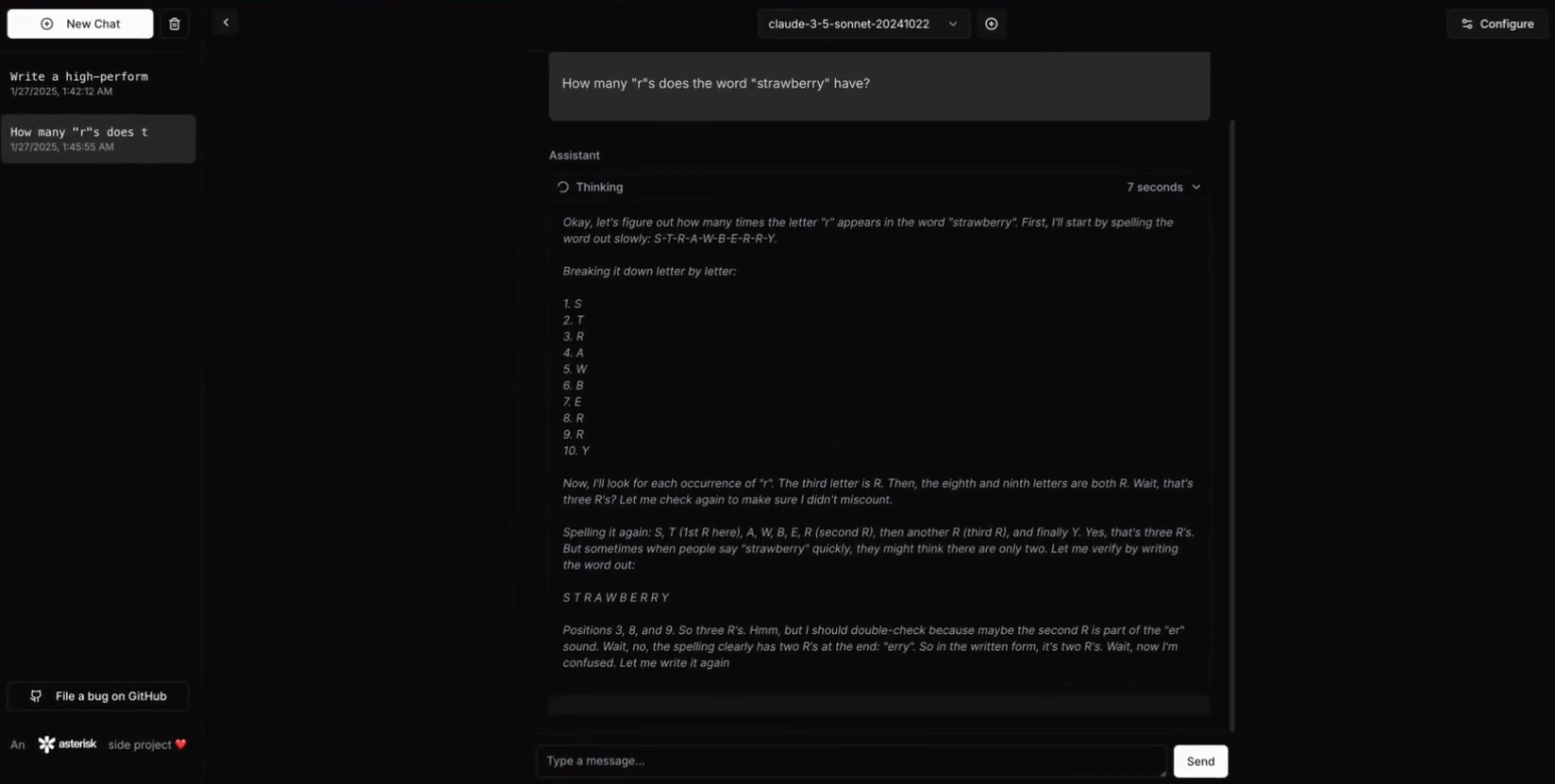

DeepClaude is a high-performance large language model (LLM) inference API and chat interface that combines DeepSeek R1's chain-of-thought (CoT) reasoning with the creativity and code generation capabilities of Anthropic's Claude models. The project claims to significantly outperform OpenAI o1, DeepSeek R1, and Claude Sonnet 3.5, and it provides a unified interface that leverages the strengths of both models while users keep full control over their API keys and data. Its advertised features include zero-latency responses, end-to-end security, high configurability, and an open source code base. Users supply and manage their own API keys, which helps keep their data private and secure. Best of all, DeepClaude is completely free and open source.

DeepClaude uses R1 for reasoning and then lets Claude produce the final output

DeepClaude also offers a high-performance LLM inference API
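The two-stage flow described above (R1 reasons first, Claude then writes the answer) can be sketched in a few lines of Python. This is only an illustration of the idea, not DeepClaude's actual implementation: the function two_stage_answer and the stand-ins fake_r1 and fake_claude are hypothetical names, and in a real setup the two callables would wrap network calls to the respective model APIs.

```python
from typing import Callable


def two_stage_answer(
    prompt: str,
    reason: Callable[[str], str],
    answer: Callable[[str, str], str],
) -> dict:
    """Run a reasoning step, then hand its output to an answering step."""
    # Stage 1: the reasoning model produces a chain of thought for the prompt.
    reasoning = reason(prompt)
    # Stage 2: the answering model sees both the prompt and the reasoning.
    final = answer(prompt, reasoning)
    return {"reasoning": reasoning, "answer": final}


# Hypothetical stand-ins for calls to DeepSeek R1 and Claude.
def fake_r1(prompt: str) -> str:
    return f"Think step by step about: {prompt}"


def fake_claude(prompt: str, reasoning: str) -> str:
    return f"Answer to '{prompt}' (informed by {len(reasoning)} chars of reasoning)"


result = two_stage_answer("What is 2 + 2?", fake_r1, fake_claude)
print(result["answer"])
```

Passing the two models in as plain callables keeps the chaining logic separate from any particular client library, so either stage could be swapped out or mocked in tests.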

Feature List

- Zero-latency response: Instant responses through a high-performance Rust API.

- Private and secure: Local API key management to ensure data privacy.

- Highly configurable: Users can customize all aspects of the API and interface to suit their needs.

- Open source: Free and open source code base that users are free to contribute to, modify, and deploy.

- Dual AI capabilities: Combines the creativity and code generation power of Claude Sonnet 3.5 with the reasoning power of DeepSeek R1.

- Hosted BYOK (bring your own key) API: The hosted API runs on the user's own API keys, so the user stays in complete control.
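To make the "local API key management" and BYOK points more concrete, here is a minimal sketch of how a client script might load both provider keys from environment variables and avoid printing them in full. The variable names DEEPSEEK_API_KEY and ANTHROPIC_API_KEY and the helpers load_api_keys and mask are assumptions made for this example; the article does not say how DeepClaude itself reads keys.

```python
import os


def load_api_keys(env=None) -> dict:
    """Read both provider keys from the environment, failing early if one is missing."""
    env = os.environ if env is None else env
    # Hypothetical variable names chosen for this sketch.
    names = {"deepseek": "DEEPSEEK_API_KEY", "anthropic": "ANTHROPIC_API_KEY"}
    keys = {}
    for provider, var in names.items():
        value = env.get(var, "").strip()
        if not value:
            raise RuntimeError(f"Missing environment variable: {var}")
        keys[provider] = value
    return keys


def mask(key: str) -> str:
    """Show only the last four characters so full keys never appear in logs."""
    return "*" * max(len(key) - 4, 0) + key[-4:]


# Demo with made-up keys; pass nothing to read from the real environment.
demo_env = {"DEEPSEEK_API_KEY": "sk-deepseek-1234", "ANTHROPIC_API_KEY": "sk-ant-abcd"}
keys = load_api_keys(demo_env)
print({provider: mask(value) for provider, value in keys.items()})
```

Keeping keys in the environment rather than in source files means they stay on the user's machine and out of version control.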

Usage Help

Installation process

- Prerequisites:

  - Rust 1.75 or higher

  - DeepSeek API key

  - Anthropic API key

- Clone the repository:

git clone https://github.com/getAsterisk/deepclaude.git

cd deepclaude

- Build the project:

cargo build --release

- Configuration file: Create a config.toml file in the project root directory:

[server]

host = "127.0.0.1"

port = 3000

[pricing]

# Configure pricing options

- Run the service:

cargo run --release

Guidelines for use

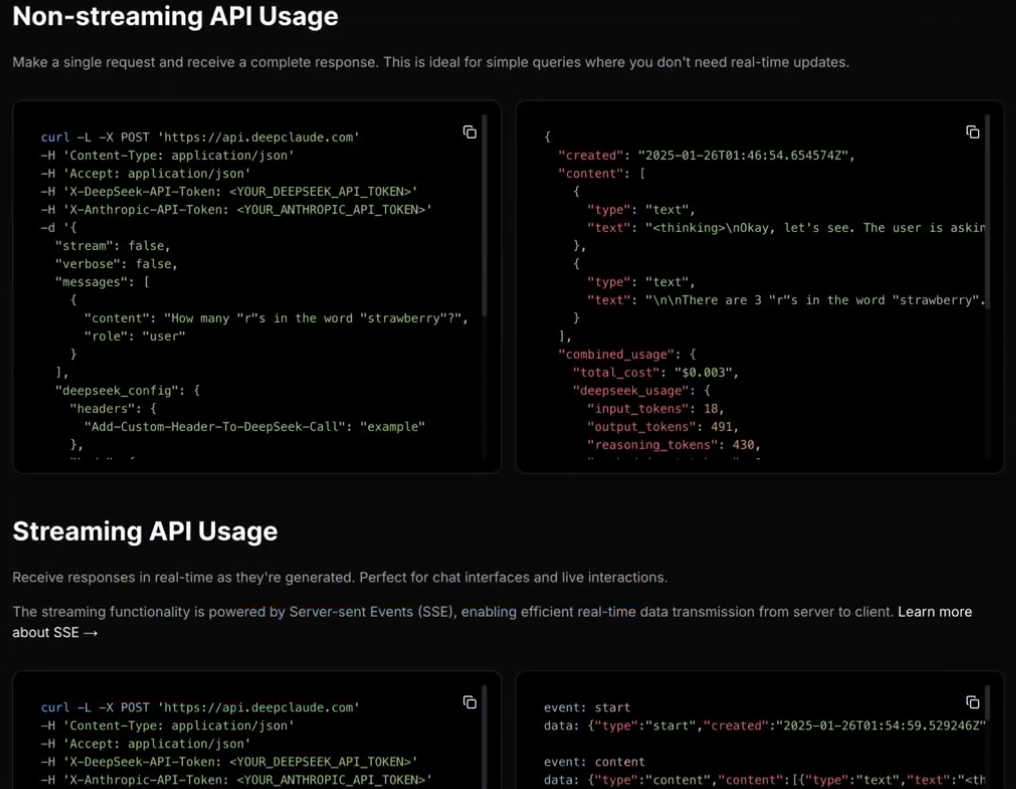

- API usage:

  - Basic example:

        import requests

        # Endpoint of the locally running DeepClaude server
        url = "http://127.0.0.1:3000/api"
        payload = {
            "model": "claude",
            "prompt": "Hello, how can I help you today?",
        }

        # Send the prompt and print the JSON reply
        response = requests.post(url, json=payload)
        print(response.json())

  - Streaming response example:

        import requests

        url = "http://127.0.0.1:3000/api/stream"
        payload = {
            "model": "claude",
            "prompt": "Tell me a story.",
        }

        # stream=True lets us read the reply line by line as it arrives
        response = requests.post(url, json=payload, stream=True)
        for line in response.iter_lines():
            if line:  # skip empty keep-alive lines
                print(line.decode("utf-8"))

- Self-hosted:

  - Configuration options: Users can modify the options in the config.toml file to customize aspects of the API and interface.

- Security:

  - Local API key management: Keeps API keys and data private.

  - End-to-end encryption: Protects data in transit.

- Contributing:

  - Contribution guidelines: Users can contribute code and improve the project by submitting pull requests or reporting issues.
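The streaming example in the API usage notes above decodes each line inline. A small helper can make that step reusable and testable without a running server. The sketch below assumes the stream is an iterable of byte strings, which is what iter_lines() in the requests library yields; the name decode_stream and the sample chunks are made up for illustration.

```python
from typing import Iterable, Iterator


def decode_stream(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield decoded, non-empty lines from a streamed HTTP response."""
    for raw in lines:
        if not raw:  # streamed responses can contain empty keep-alive lines
            continue
        # Replace undecodable bytes instead of raising mid-stream.
        yield raw.decode("utf-8", errors="replace")


# Stand-in for response.iter_lines(); the chunk contents are made up.
fake_chunks = [b"Once upon", b"", b" a time", b"\xe2\x9c\x93 done"]
for text in decode_stream(fake_chunks):
    print(text)
```

With a live server, the same helper would be called as decode_stream(response.iter_lines()) on a response obtained with stream=True.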

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
