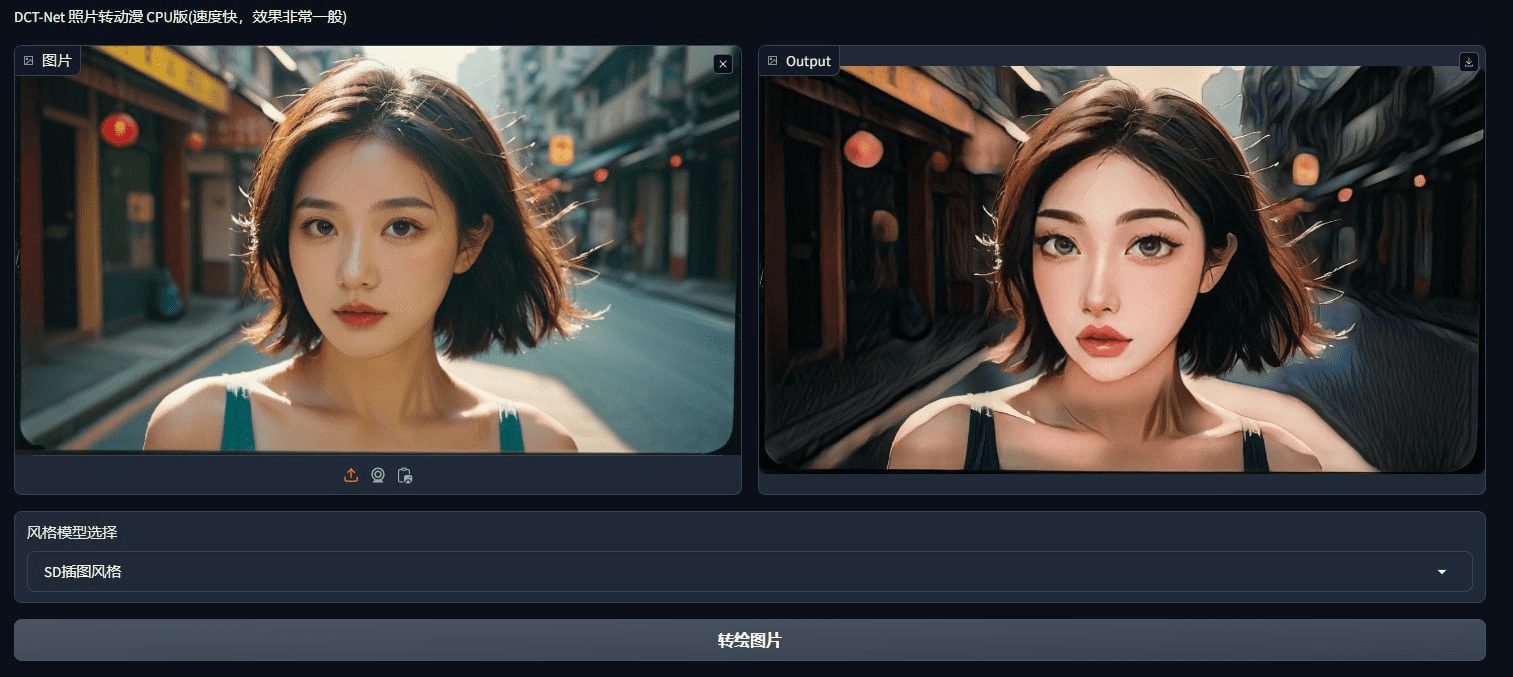

DCT-Net: An Open-Source Tool for Converting Photos and Videos to Anime Style

General Introduction

DCT-Net is an open-source project developed by DAMO Academy and Wang Xuan Institute of Computer Technology of Peking University that converts portrait images into animation-style artwork. The project uses a deep learning technique called Domain-Calibrated Translation to convert natural photographs into art styles such as anime, 3D, hand-drawn, and sketch. DCT-Net provides a variety of pre-trained models and supports training on customized style data. It is aimed at personal entertainment, creative design, and the movie and game industries.

Function List

- Provides a variety of pre-trained models covering a wide range of artistic styles

- Supports training with customized style data

- Offers an online trial that requires no local environment configuration

- Runs in both CPU and GPU environments

- Converts the style of both images and videos

Usage Guide

Installation and Configuration

- Install dependencies: First install the modelscope library with the following command:

      pip install "modelscope[cv]" -f https://modelscope.oss-cn-beijing.aliyuncs.com/releases/repo.html

- Download the pre-trained model: The first time the code runs, the pre-trained model files are downloaded automatically.
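Before running the examples, it can help to confirm that the library is importable in the current environment. The following is a minimal sketch using only the Python standard library; the helper name `is_installed` is hypothetical and not part of modelscope.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if the import system can locate the top-level `package`."""
    return importlib.util.find_spec(package) is not None


if __name__ == "__main__":
    if is_installed("modelscope"):
        print("modelscope is available")
    else:
        print('modelscope was not found; install it with pip install "modelscope[cv]"')
```

If the check fails inside a notebook, the package was probably installed into a different Python environment than the one the kernel uses.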

Image Style Conversion

- Define the models: The DCT-Net models cover five face styles, defined in a dictionary:

      model_dict = {
          "anime": "damo/cv_unet_person-image-cartoon_compound-models",
          "3d": "damo/cv_unet_person-image-cartoon-3d_compound-models",
          "handdrawn": "damo/cv_unet_person-image-cartoon-handdrawn_compound-models",
          "sketch": "damo/cv_unet_person-image-cartoon-sketch_compound-models",
          "art": "damo/cv_unet_person-image-cartoon-artstyle_compound-models"
      }

- Load an image and convert it:

      import os

      import cv2
      from IPython.display import Image, display, clear_output
      from modelscope.outputs import OutputKeys
      from modelscope.pipelines import pipeline
      from modelscope.utils.constant import Tasks

      style = "anime"  # options: "anime", "3d", "handdrawn", "sketch", "art"
      filename = "4.jpg"
      img_path = 'picture/' + filename

      # Build the stylization pipeline for the selected style
      img_cartoon = pipeline(Tasks.image_portrait_stylization, model=model_dict[style])
      result = img_cartoon(img_path)

      # cv2.imwrite does not create missing directories, so create it first
      out_dir = 'picture/images/'
      os.makedirs(out_dir, exist_ok=True)
      save_name = out_dir + os.path.splitext(filename)[0] + '_' + style + '.jpg'
      cv2.imwrite(save_name, result[OutputKeys.OUTPUT_IMG])

      # Display the result (in a Jupyter notebook)
      clear_output()
      display(Image(save_name))
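Because the style name selects both the model and the output file name, it may be convenient to resolve the two together and to reject unknown styles early. The sketch below assumes the naming scheme used in the conversion code above (`<name>_<style>.jpg`); the helper `resolve_style` and its `out_dir` parameter are hypothetical, and the model IDs are copied from the article's dictionary.

```python
import os

# Style-to-model mapping, copied from the article.
MODEL_DICT = {
    "anime": "damo/cv_unet_person-image-cartoon_compound-models",
    "3d": "damo/cv_unet_person-image-cartoon-3d_compound-models",
    "handdrawn": "damo/cv_unet_person-image-cartoon-handdrawn_compound-models",
    "sketch": "damo/cv_unet_person-image-cartoon-sketch_compound-models",
    "art": "damo/cv_unet_person-image-cartoon-artstyle_compound-models",
}


def resolve_style(style, filename, out_dir="picture/images"):
    """Return (model_id, output_path) for a style, or raise on an unknown style.

    Hypothetical helper: it only builds strings and does not load any model.
    """
    if style not in MODEL_DICT:
        known = ", ".join(sorted(MODEL_DICT))
        raise ValueError(f"unknown style {style!r}; expected one of: {known}")
    stem = os.path.splitext(os.path.basename(filename))[0]
    return MODEL_DICT[style], os.path.join(out_dir, f"{stem}_{style}.jpg")
```

The returned model ID can then be passed as the `model` argument of `pipeline(Tasks.image_portrait_stylization, ...)`, and the returned path to `cv2.imwrite`.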

Video Style Conversion

- Extract video frames:

      import os

      import cv2

      video = 'sample_video.mp4'
      video_file = 'movie/' + video
      image_dir = 'movie/images/'
      os.makedirs(image_dir, exist_ok=True)  # cv2.imwrite needs the directory to exist

      vc = cv2.VideoCapture(video_file)
      i = 0
      rval = False  # stays False if the video cannot be opened
      if vc.isOpened():
          rval, frame = vc.read()
      while rval:
          # Save each frame as 0.jpg, 1.jpg, ...
          cv2.imwrite(image_dir + str(i) + '.jpg', frame)
          i += 1
          rval, frame = vc.read()
      vc.release()

- Convert video frames: Stylize each frame using the same method as for image conversion, then merge the converted frames into a video.
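The article does not show the merge step, so the following is only a sketch of one way to do it with OpenCV's `cv2.VideoWriter`. It assumes the stylized frames are saved with the same numeric names as the extracted ones (`0.jpg`, `1.jpg`, ...). The helper names, the `mp4v` codec, and the default of 25 frames per second are assumptions. Frames are sorted numerically because a plain alphabetical sort would place `10.jpg` before `2.jpg`.

```python
import os
import re


def sorted_frame_paths(image_dir, ext=".jpg"):
    """Return frame paths ordered by numeric file stem (0.jpg, 1.jpg, ..., 10.jpg)."""
    pattern = re.compile(r"^(\d+)" + re.escape(ext) + "$")
    numbered = []
    for name in os.listdir(image_dir):
        match = pattern.match(name)
        if match:  # skip files that are not numbered frames
            numbered.append((int(match.group(1)), os.path.join(image_dir, name)))
    return [path for _, path in sorted(numbered)]


def frames_to_video(frame_paths, out_file, fps=25.0):
    """Write frames to a video file and return the number of frames written."""
    import cv2  # imported lazily so the sorting helper works without OpenCV

    if not frame_paths:
        raise ValueError("no frames to write")
    first = cv2.imread(frame_paths[0])
    if first is None:
        raise ValueError(f"cannot read frame: {frame_paths[0]}")
    height, width = first.shape[:2]

    fourcc = cv2.VideoWriter_fourcc(*"mp4v")  # assumed codec; availability varies
    writer = cv2.VideoWriter(out_file, fourcc, fps, (width, height))
    written = 0
    try:
        for path in frame_paths:
            frame = cv2.imread(path)
            if frame is None:
                continue  # skip unreadable files
            if frame.shape[:2] != (height, width):
                # VideoWriter expects every frame to match the declared size
                frame = cv2.resize(frame, (width, height))
            writer.write(frame)
            written += 1
    finally:
        writer.release()
    return written
```

To keep the original playback speed, the source frame rate can be read with `vc.get(cv2.CAP_PROP_FPS)` before the capture is released and passed as `fps`.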

One-Click Installer Download

Lite (CPU version, only manga style retained)

https://drive.uc.cn/s/eab2a6fad2dd4 Password: XTQi

Full Version:

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
