Dharma Institute's "Searchlight" Video Creation Platform Full Review

Received earlier today"search for light"A notification that the internal test application has been approved, and a brief review posted before bedtime.

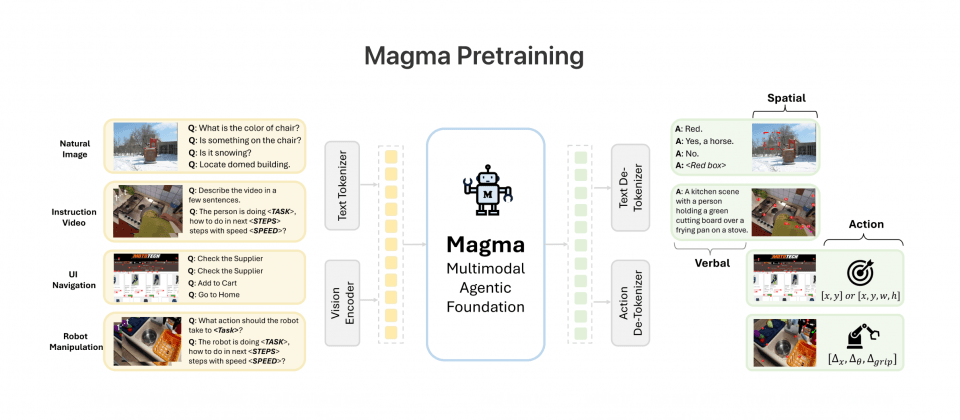

The platform is positioned as the "vision technology capability application platform" of Dharma Institute, and there are fewer applications at present (compared with the launch), and we expect to gradually open up more vision applications.

The light search is divided into two addresses:

https://xunguang.damo-vision.com/ (Light-seeking AI)

https://damo-vision.com/ (looks like a platform for testing less mature features, put it at the end of the explanation, here we call it "Seek Light AI small feature test")

Light-seeking AI

All the features list, a screen simply can not put, looks like he wants to apply all kinds of the latest technology to cover all kinds ofvisual editorScene. It looks like Seeking Light is going to be the country's RunwayML The

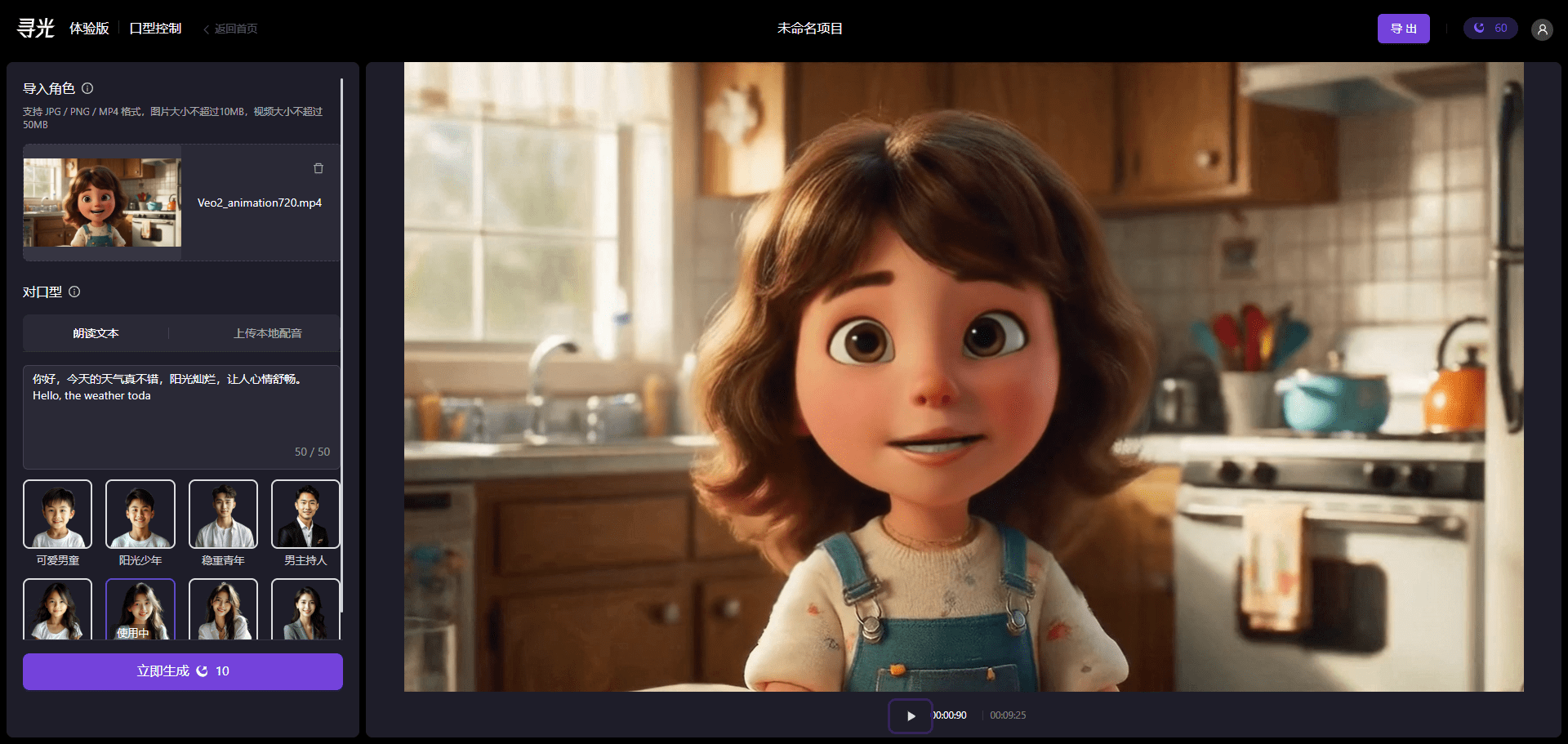

Mouth Control

We viewed the effect of mouth synchronization using real people, anime images, pictures, and videos, respectively, for each of the four types of materials.

Note: The total number of pixels (length*width) of the current test video/image clip resolution is required (256*256 ~ 2048*2048).

Generally using anime images, small looping videos generate the best results, start here:

Test effect: (compressed about 3 times)

Use the image to generate it again:

Problems with the lighting of anime characters' faces, unnatural facial lighting after mouth synchronization.

Generate it again using live action images (without trying live action video, the overall result will be a bit better than a single character image):

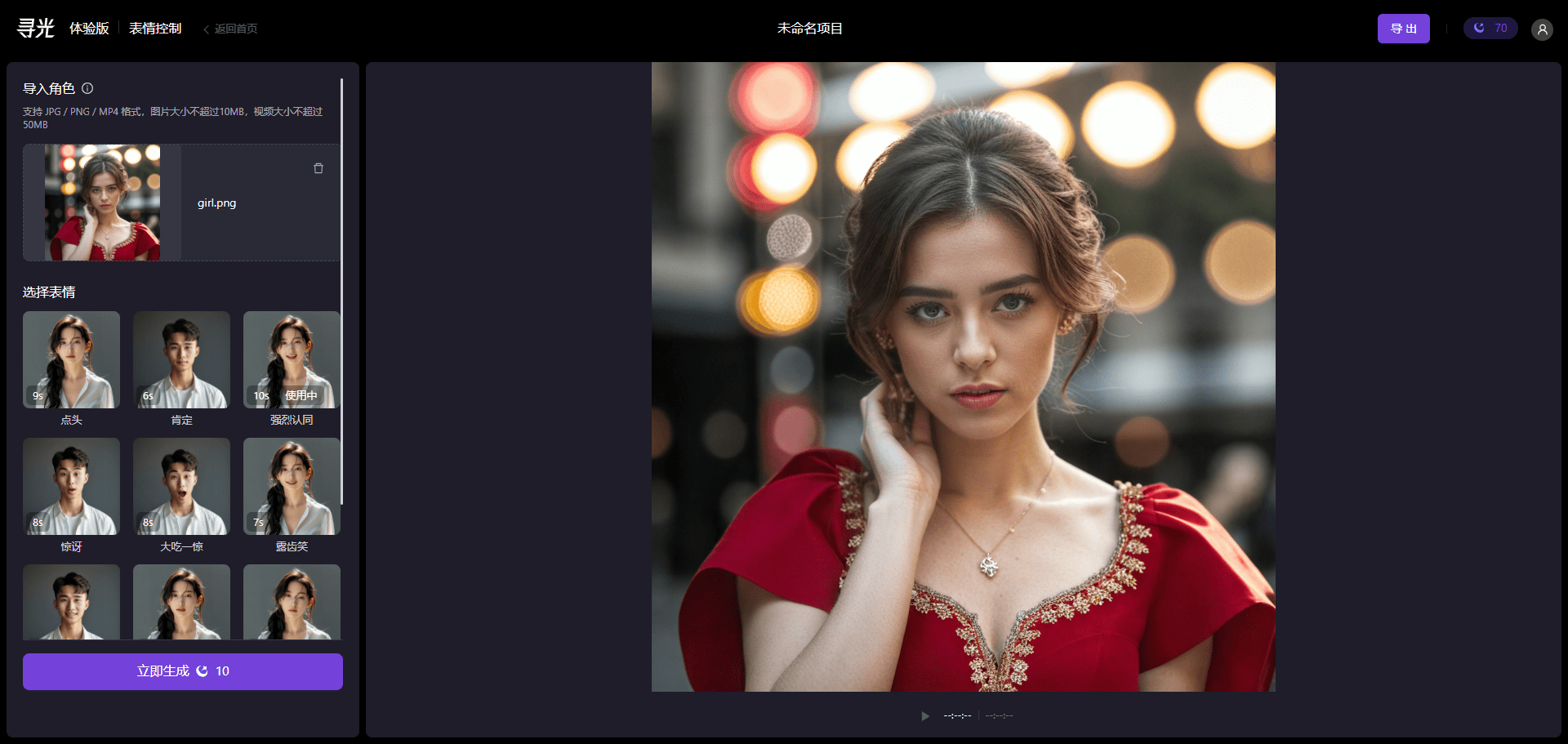

Expression control

Accurately control the facial expressions of people in an image or video to generate a video that matches the expression template.

Test effects:

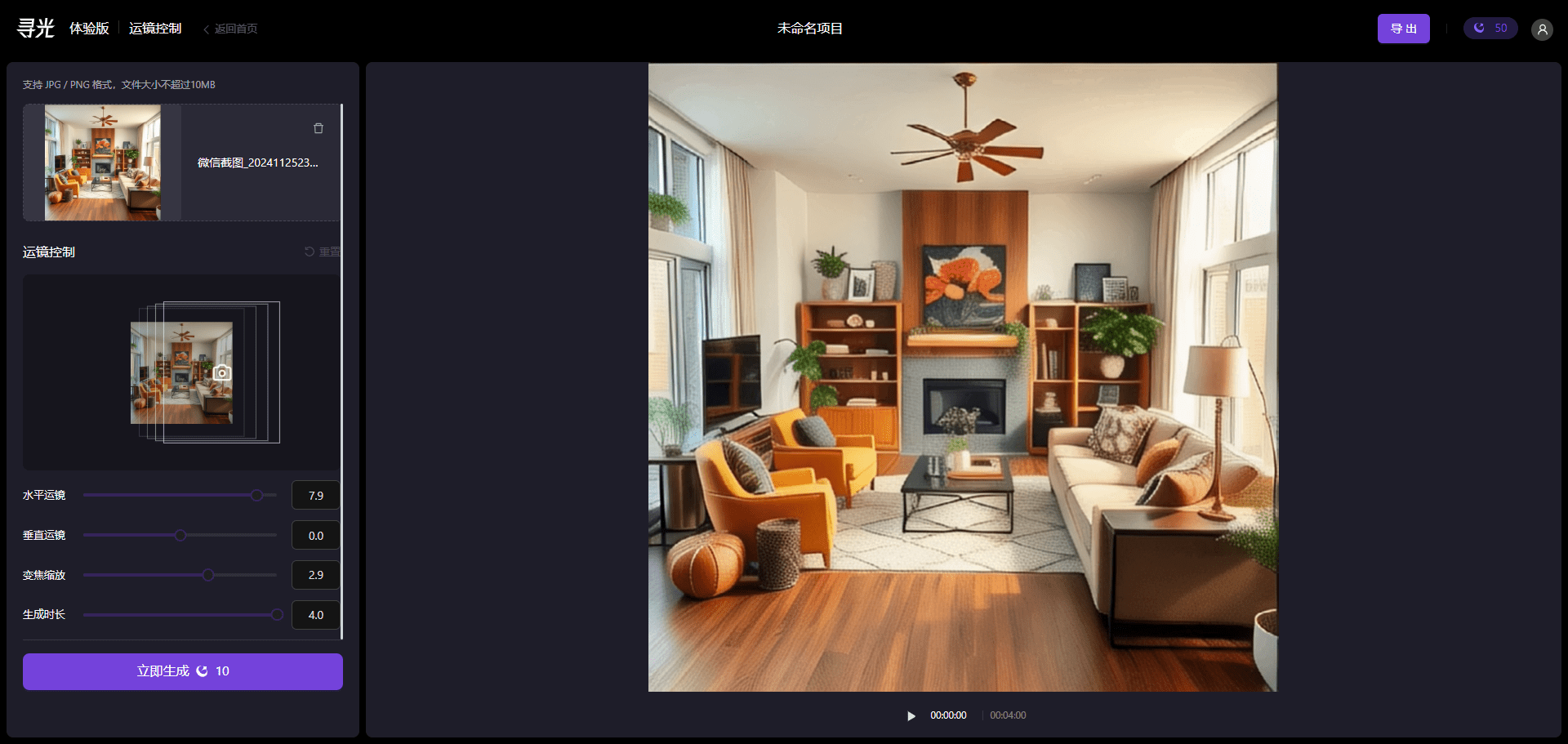

mirror control

Test effects:

The motion is a bit large and you can see that the last few frames of the captured image are poorly rendered:

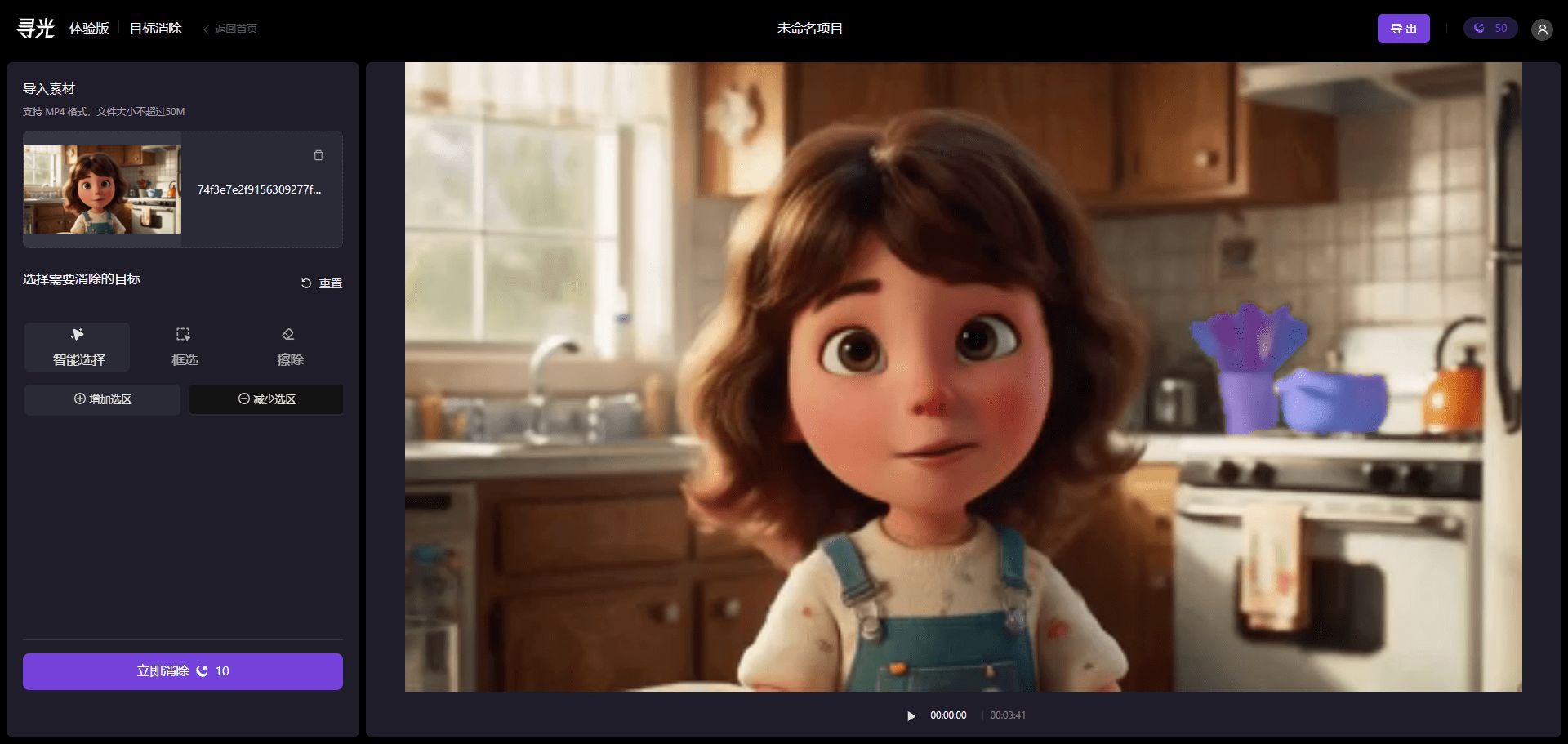

Target elimination

Clip elimination in video, offering smart selection, framing and erasing. Here an attempt is made to eliminate static targets, but static targets have slightly more complex backgrounds.

You can try to eliminate elements that are moving dramatically on your own, such as speeding cars, walking pedestrians, and still objects in mirrors.

test effect

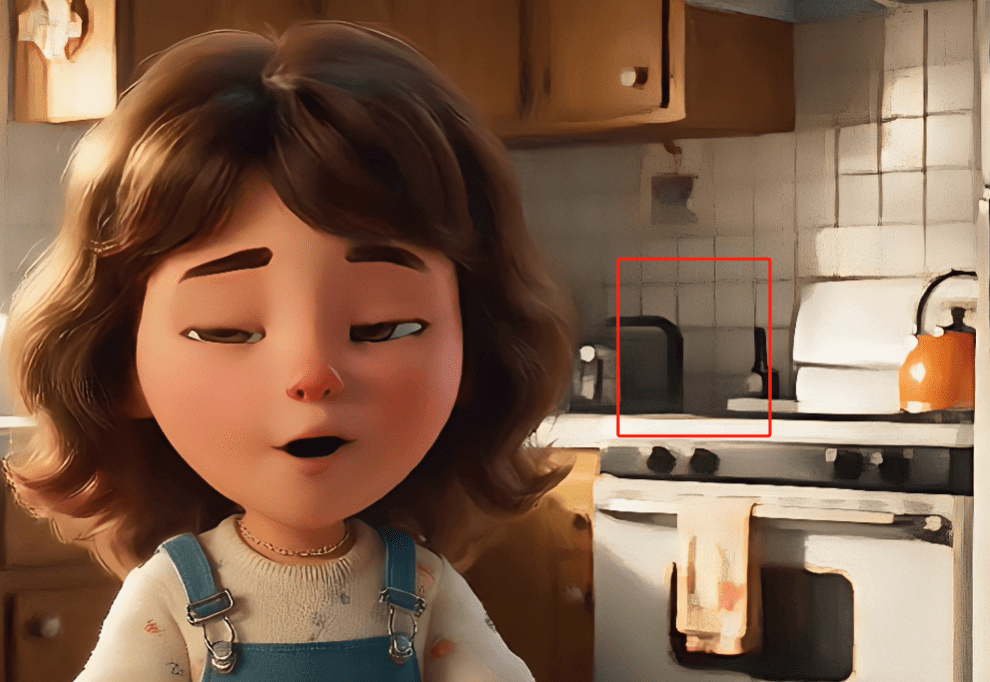

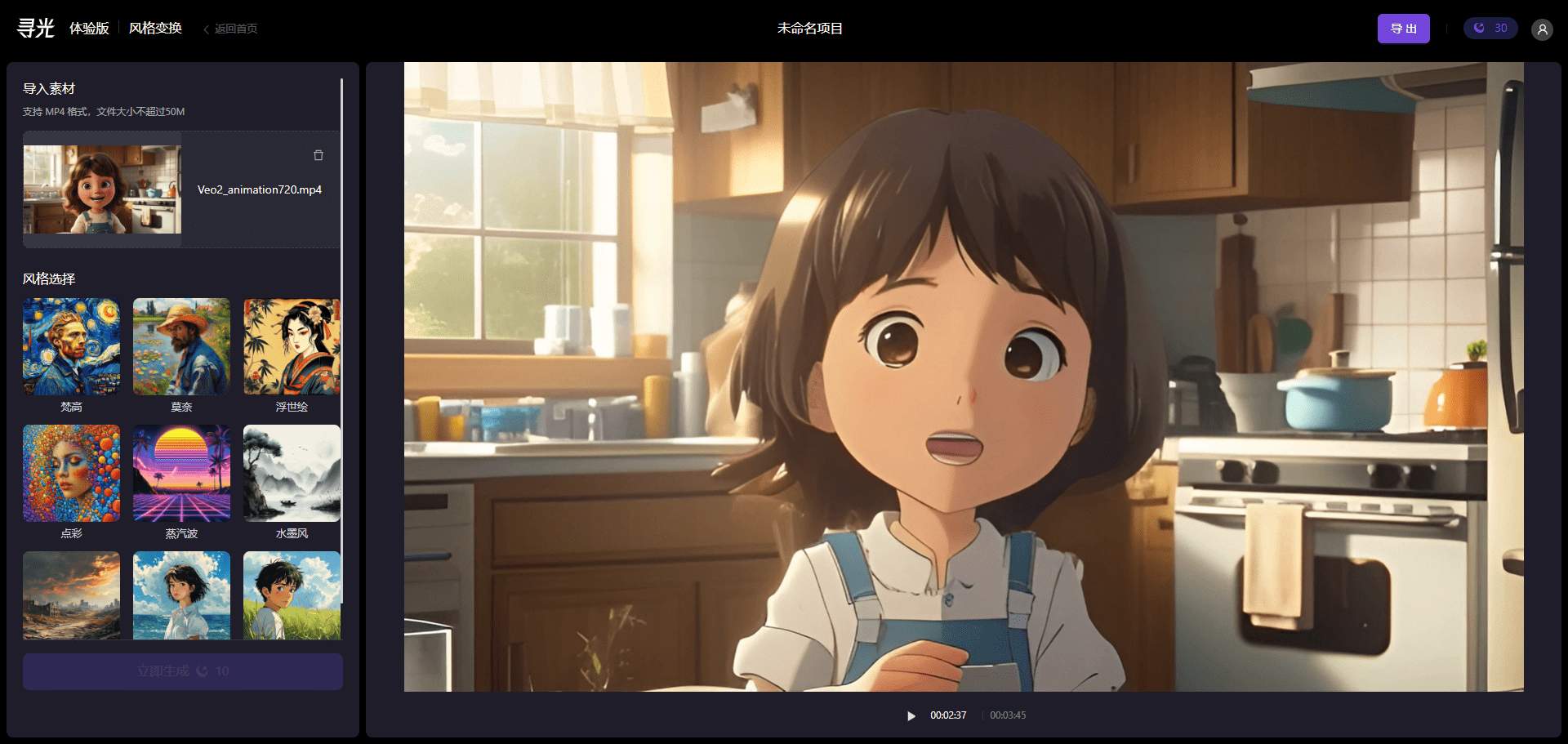

change of style

Without putting up a video, the results are slightly worse, with a flickering screen, so here's an intercept of a slightly better image.

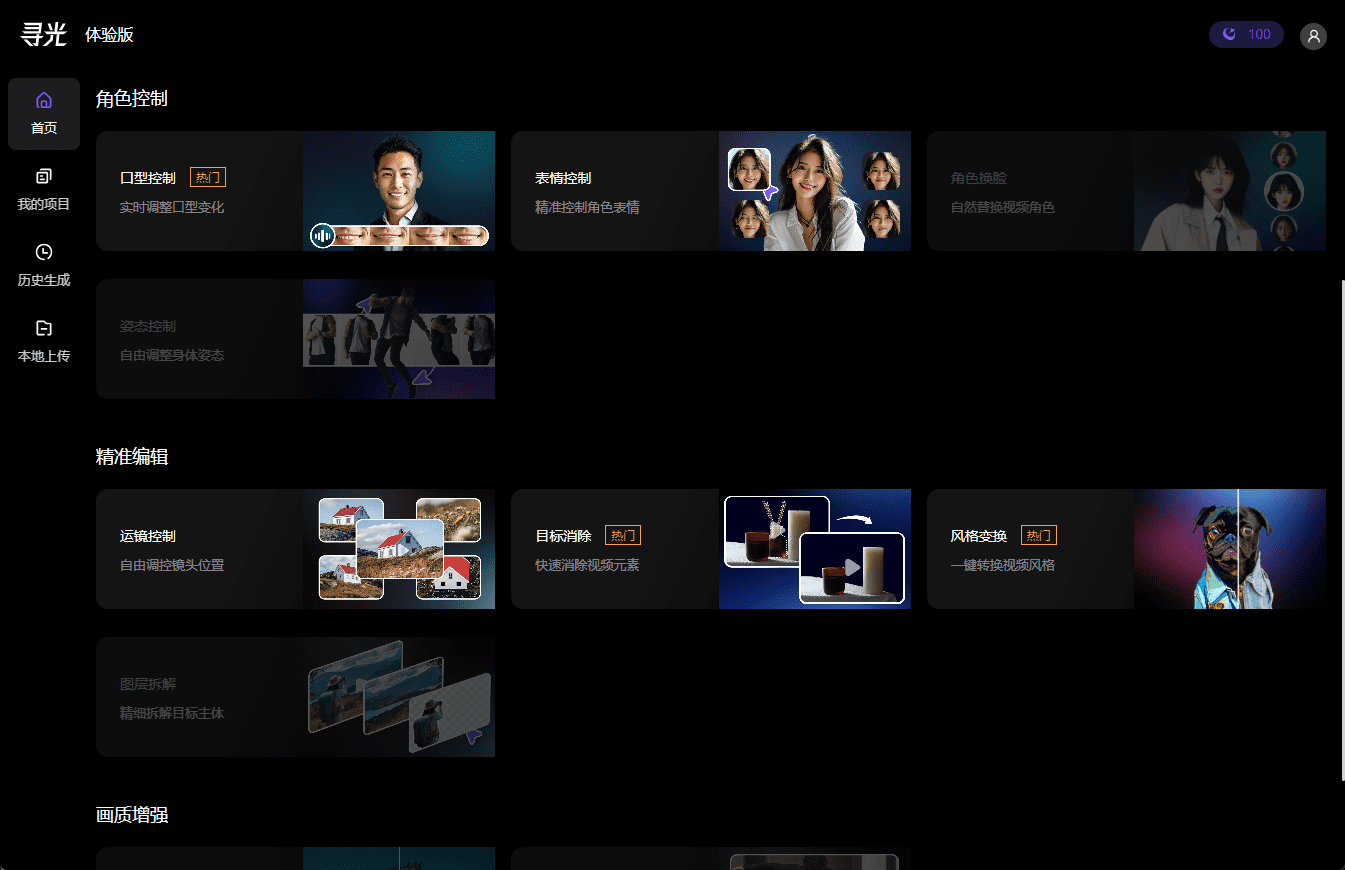

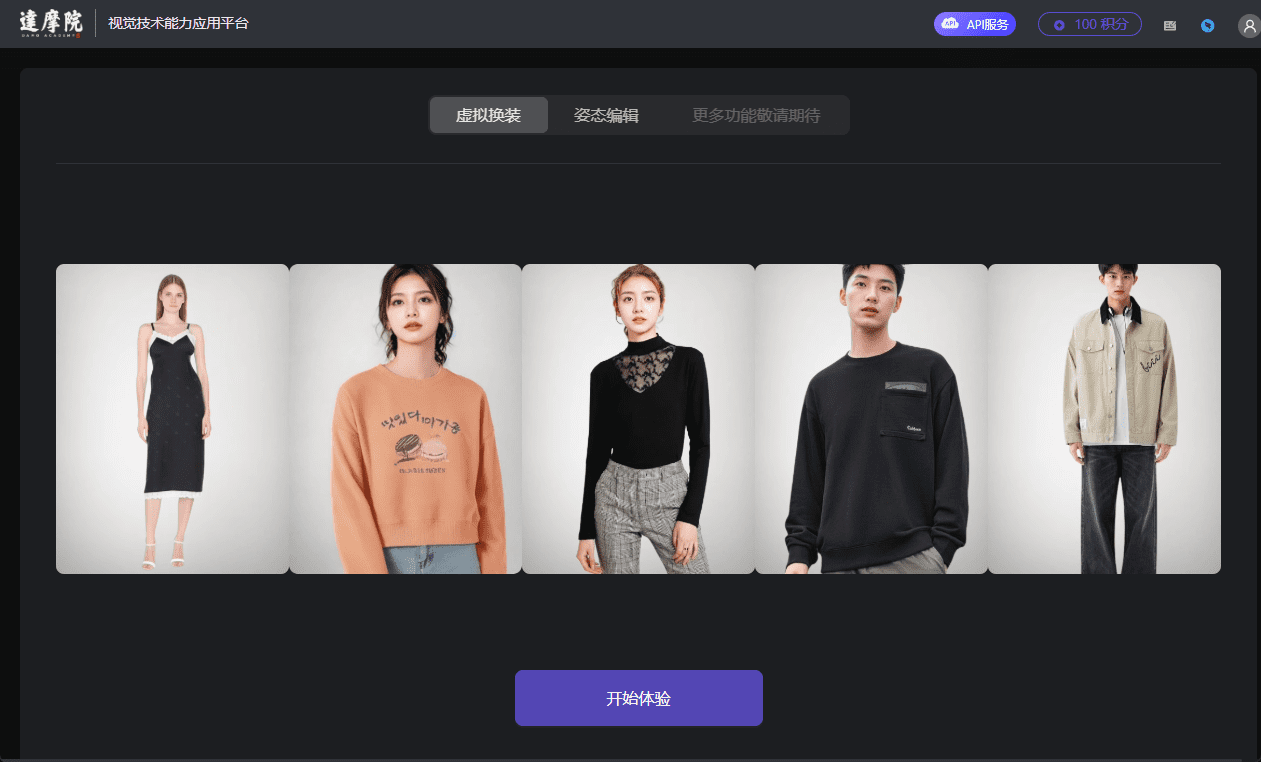

Light-seeking AI mini-function test

After logging in and going to the home page, you only see virtual dressing and posture editing, the function is still relatively basic.

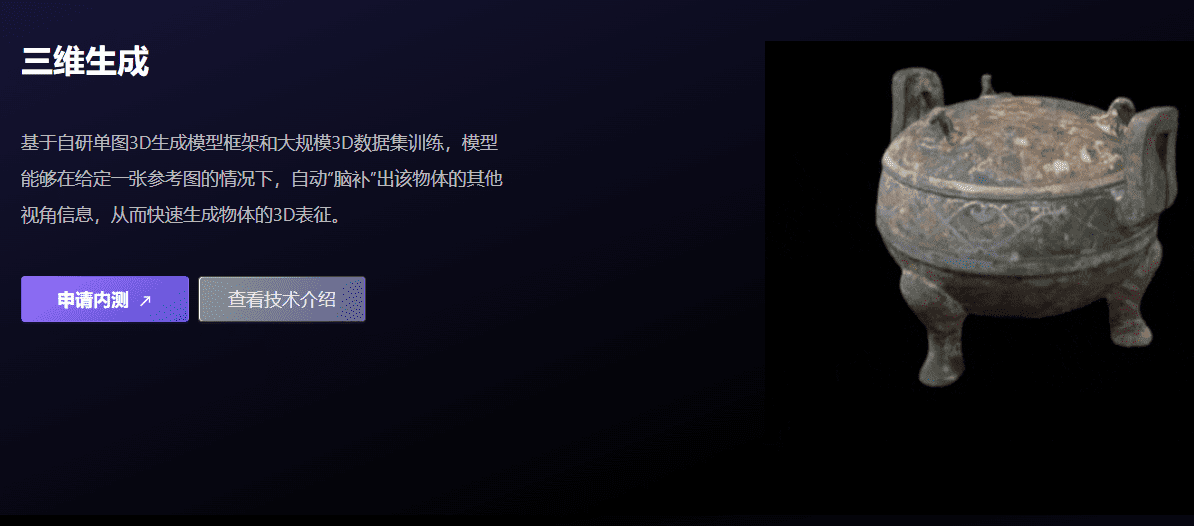

The 3D generation function displayed on the home page requires a separate application.

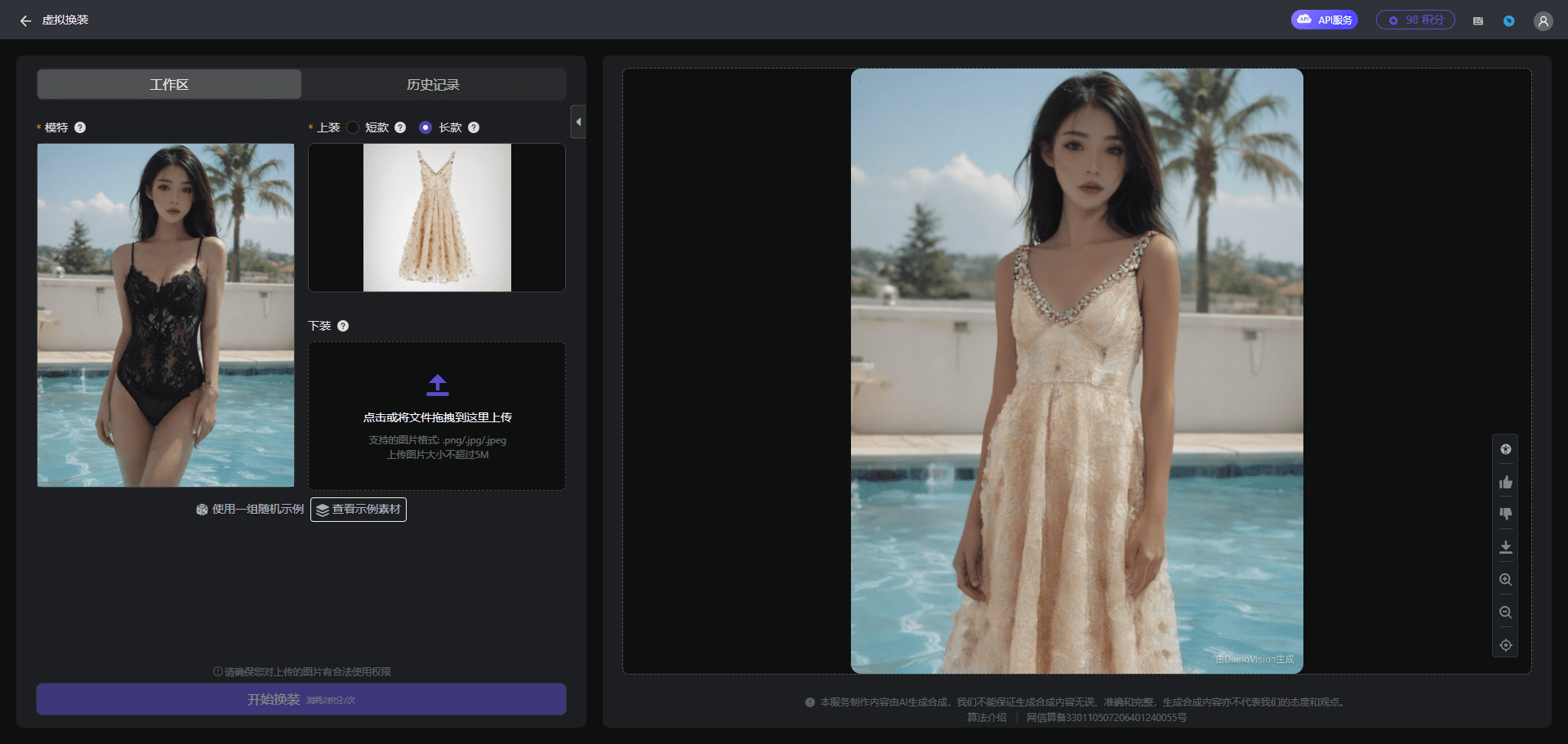

Virtual Dressup Review

Let's start with a quick positive energy from a young lady who is wearing less. Here you can see the hands correctly displayed on the costume. However, that large part may be limited by the model and is generally shrunken and not handled according to the original character's body shape, and the skin color has changed.

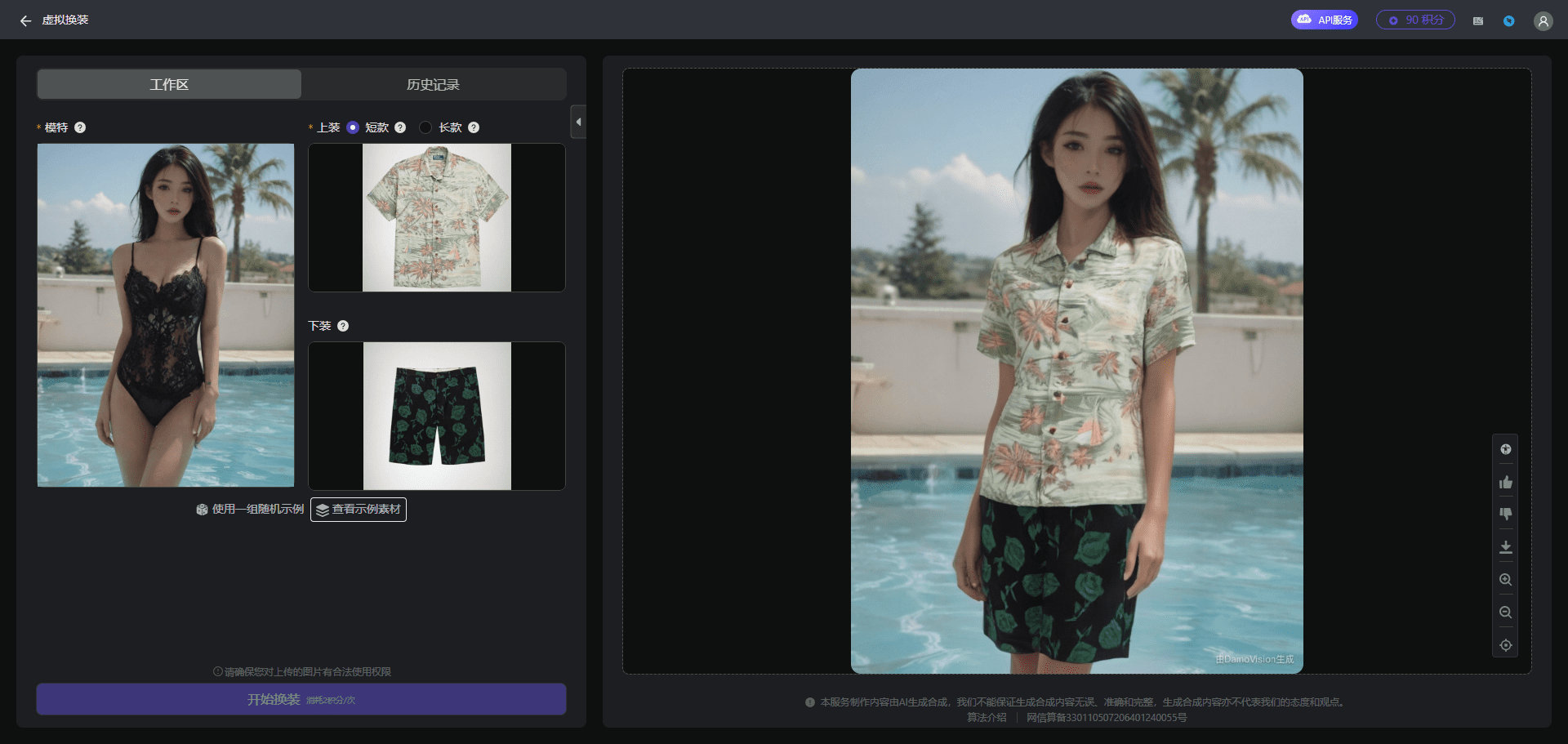

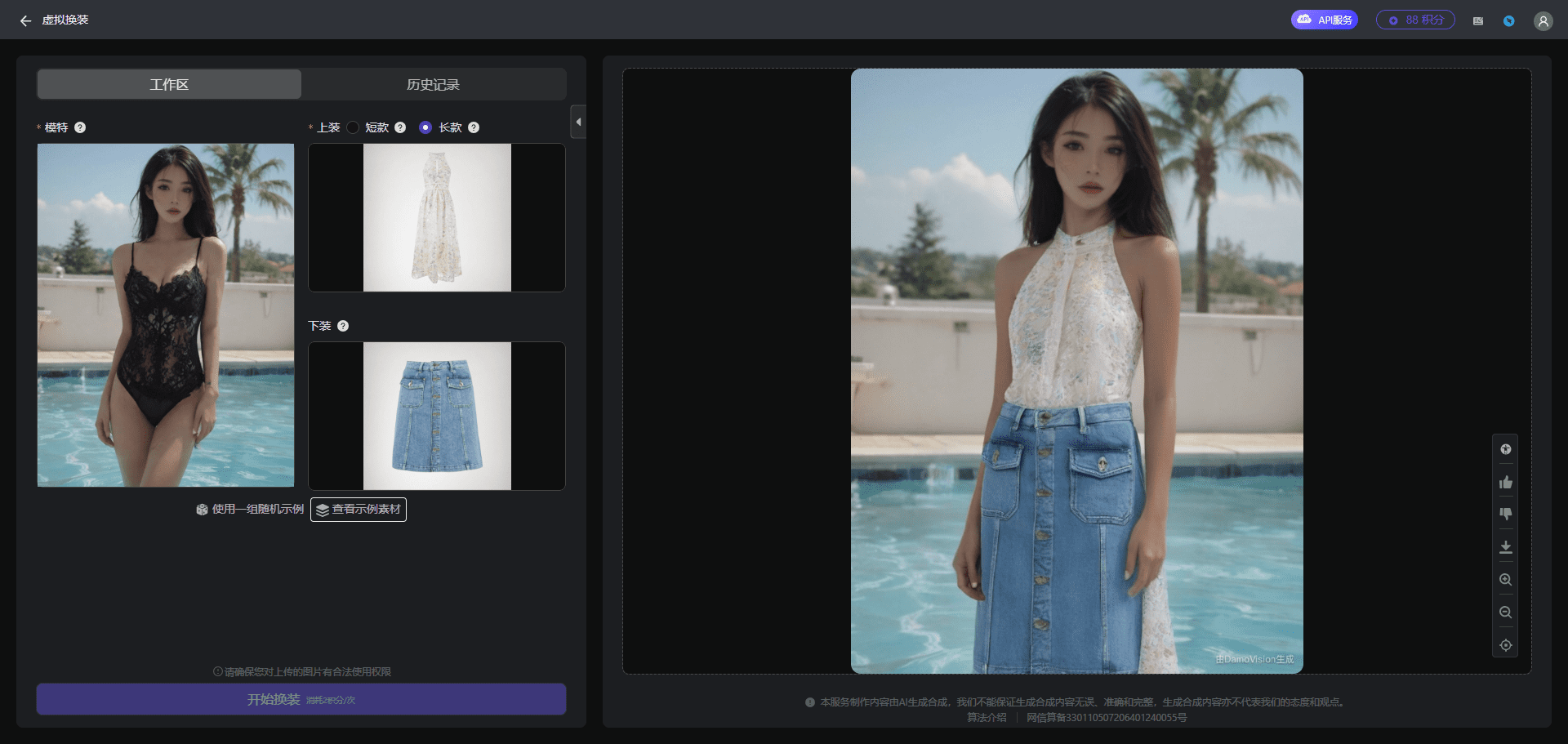

Previously tested single long garment, then real-time short top plus bottom effect:

I know some of you are wondering if the long plus bottoms will tuck the long skirt into your pants? I urge you not to do that...

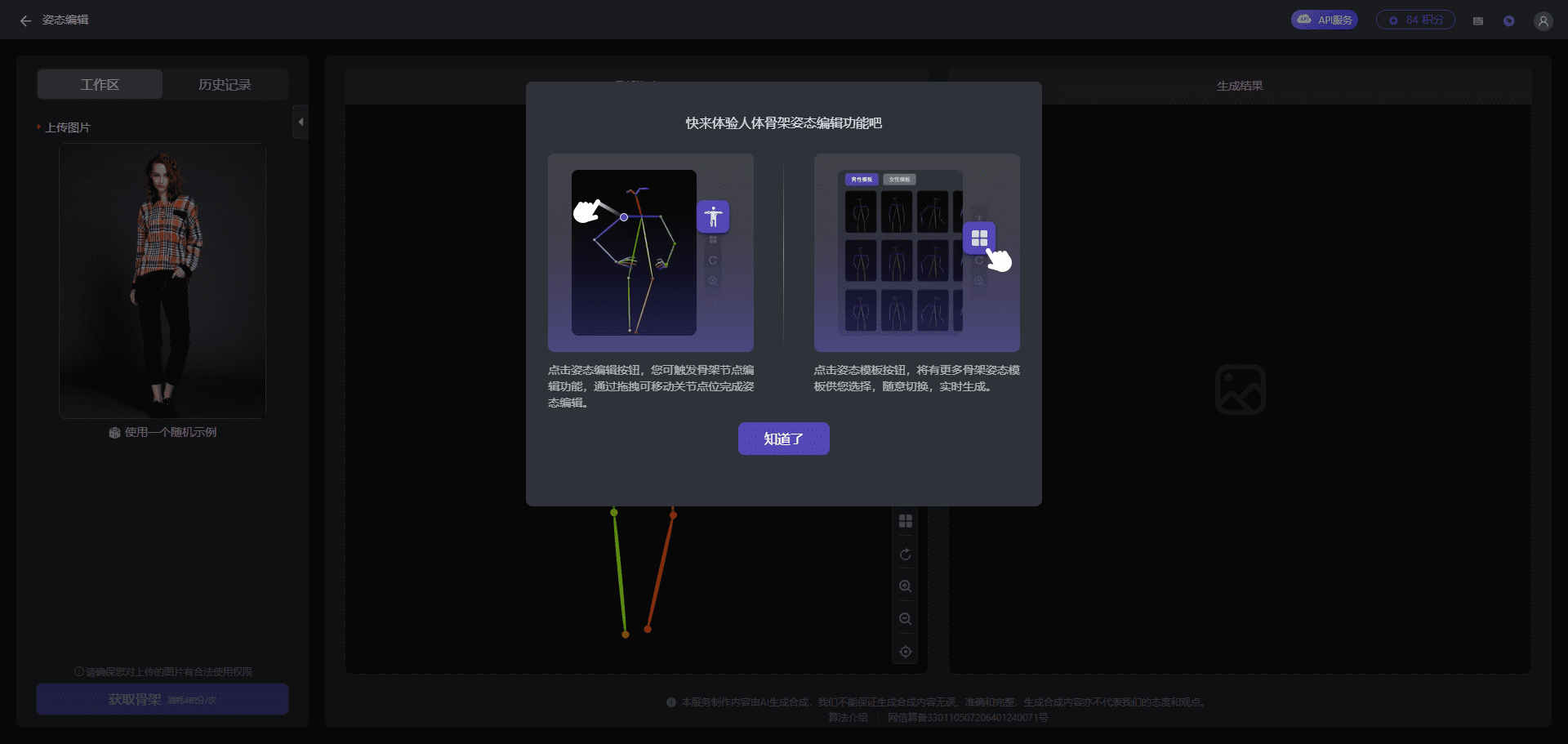

Stance Editor

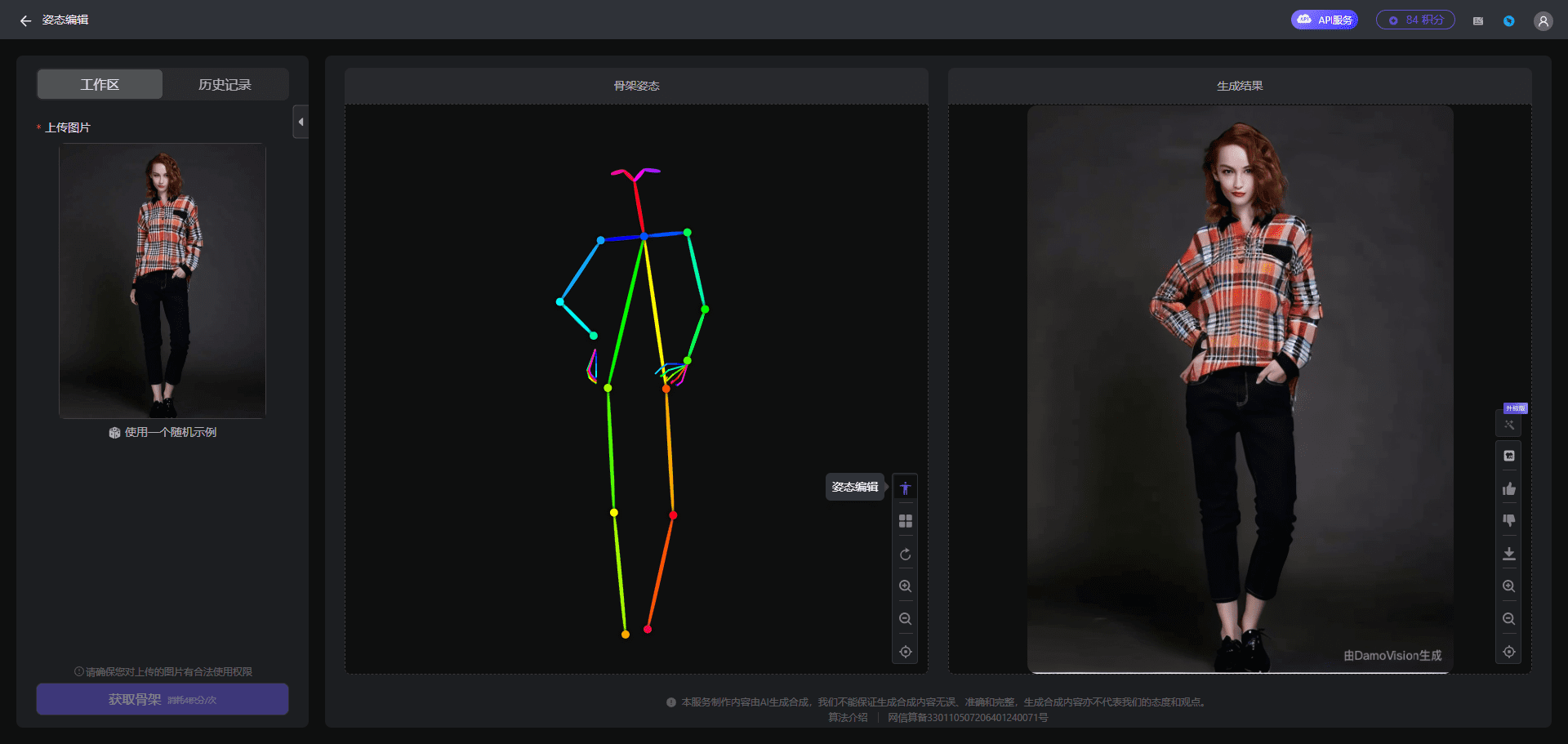

This time, let's change to a professional model to adjust the posture. After uploading the model's picture, the first step is to "get the skeleton".

Dragging the skeleton node and having the model pinch doesn't work as well as it should.

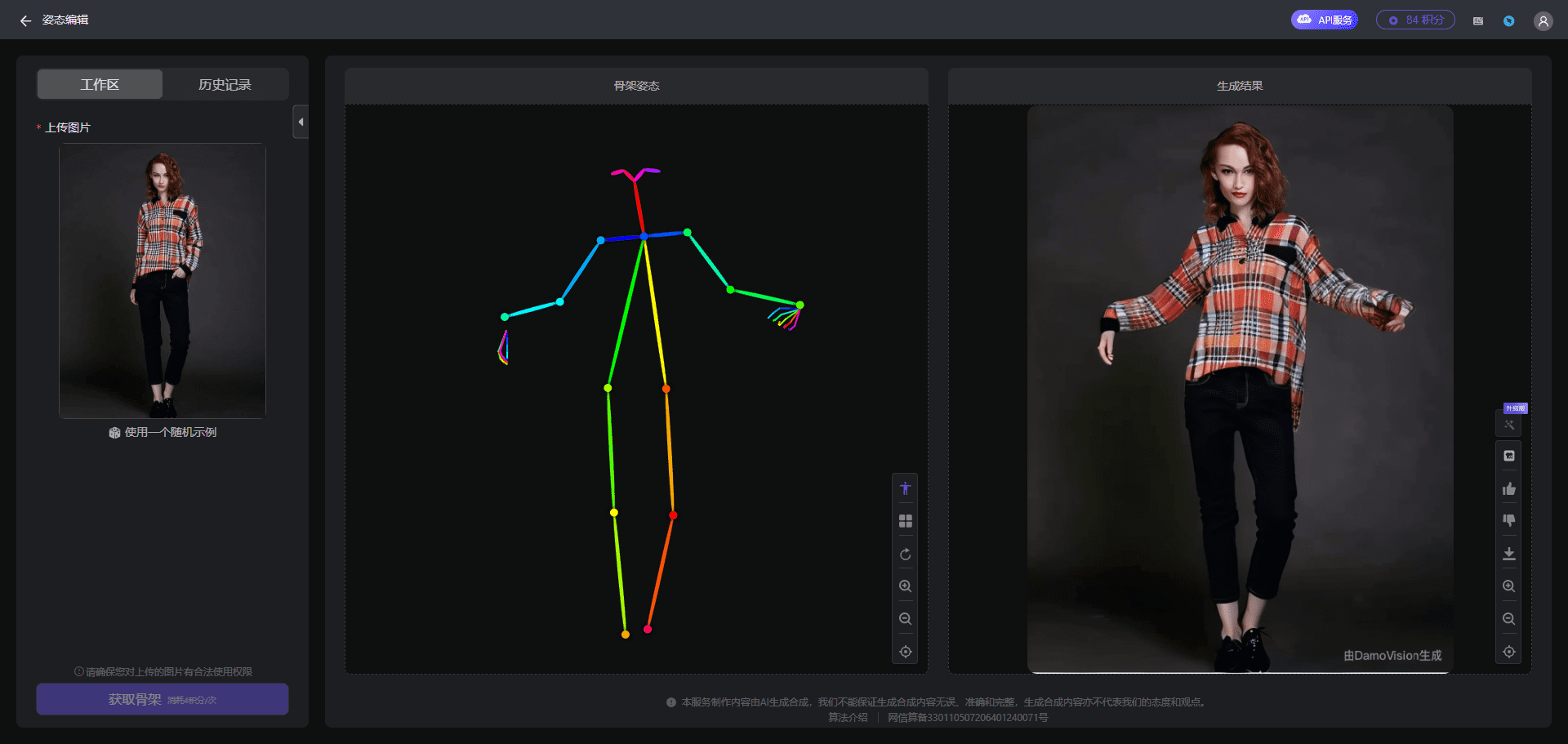

Continuing to drag and drop the bones to try and get the effect of the hands spreading out, but his hand bones are not adjustable by their own pushing and dropping.

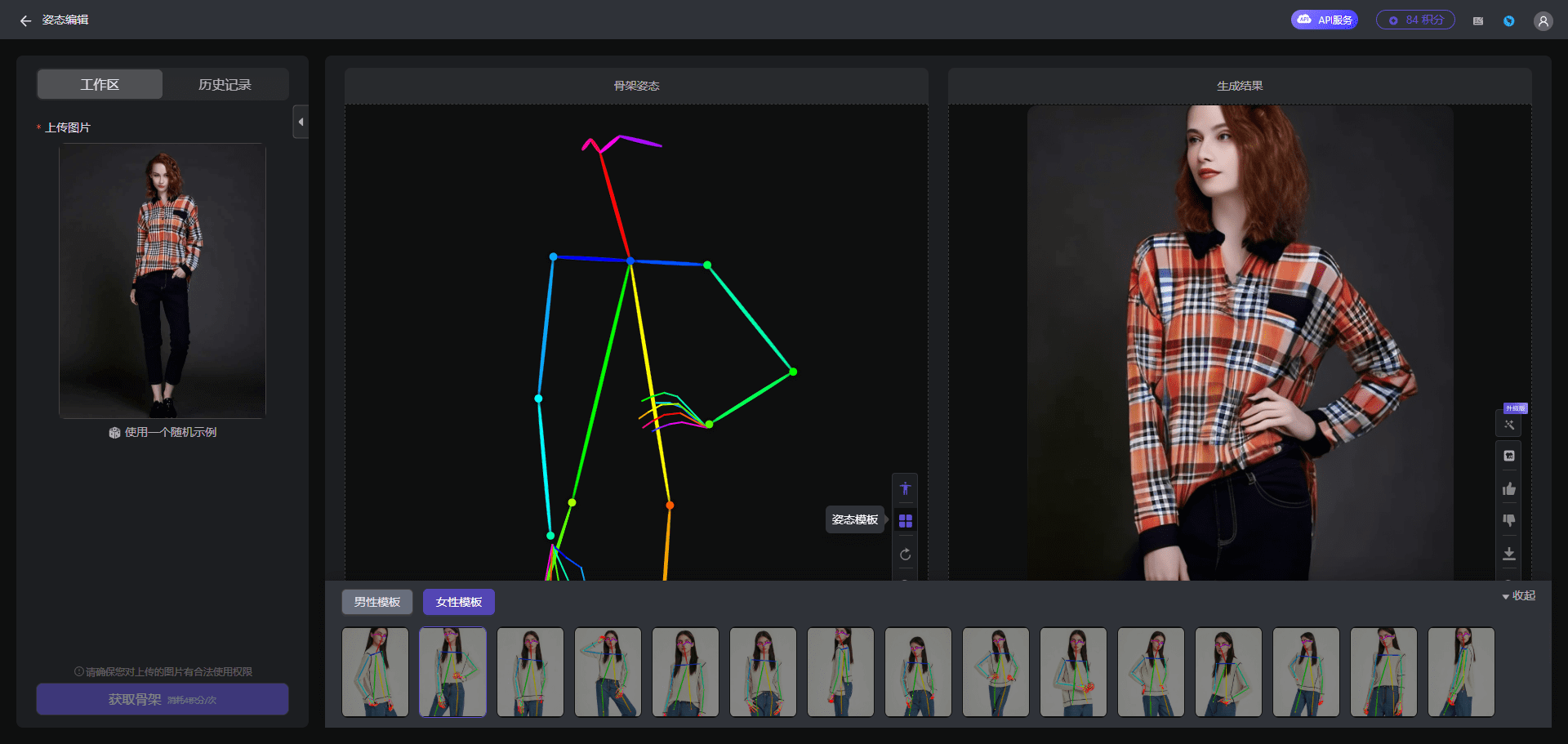

Using the skeleton pose template, you can see here that the "hand skeleton" in the template is changed and the correct hand is generated.

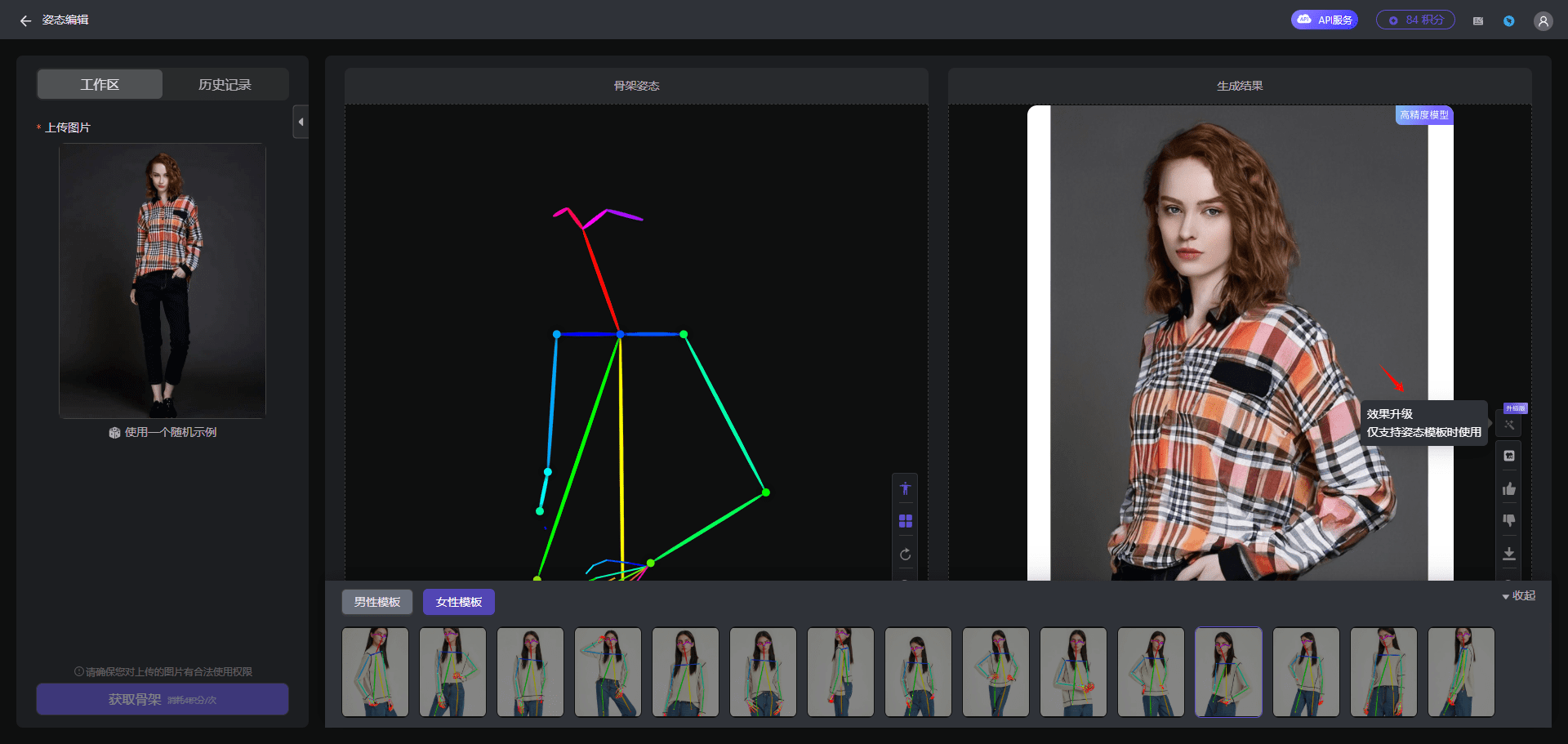

When using a pose template, you can select "Effect Upgrade" to significantly improve the image quality.

Before Effect Upgrade: (10 randomly selected, deliberately picked one of the harder ones for easy comparison)

The effect is upgraded:

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...