Structured Data Output Methods for Large Models: A Selected List of LLM JSON Resources

This curated list focuses on resources for generating JSON or other structured output with Large Language Models (LLMs).

It collects resources for generating JSON with LLMs via function calling, tools, context-free grammars (CFGs), and more, covering libraries, models, notebooks, and other material.

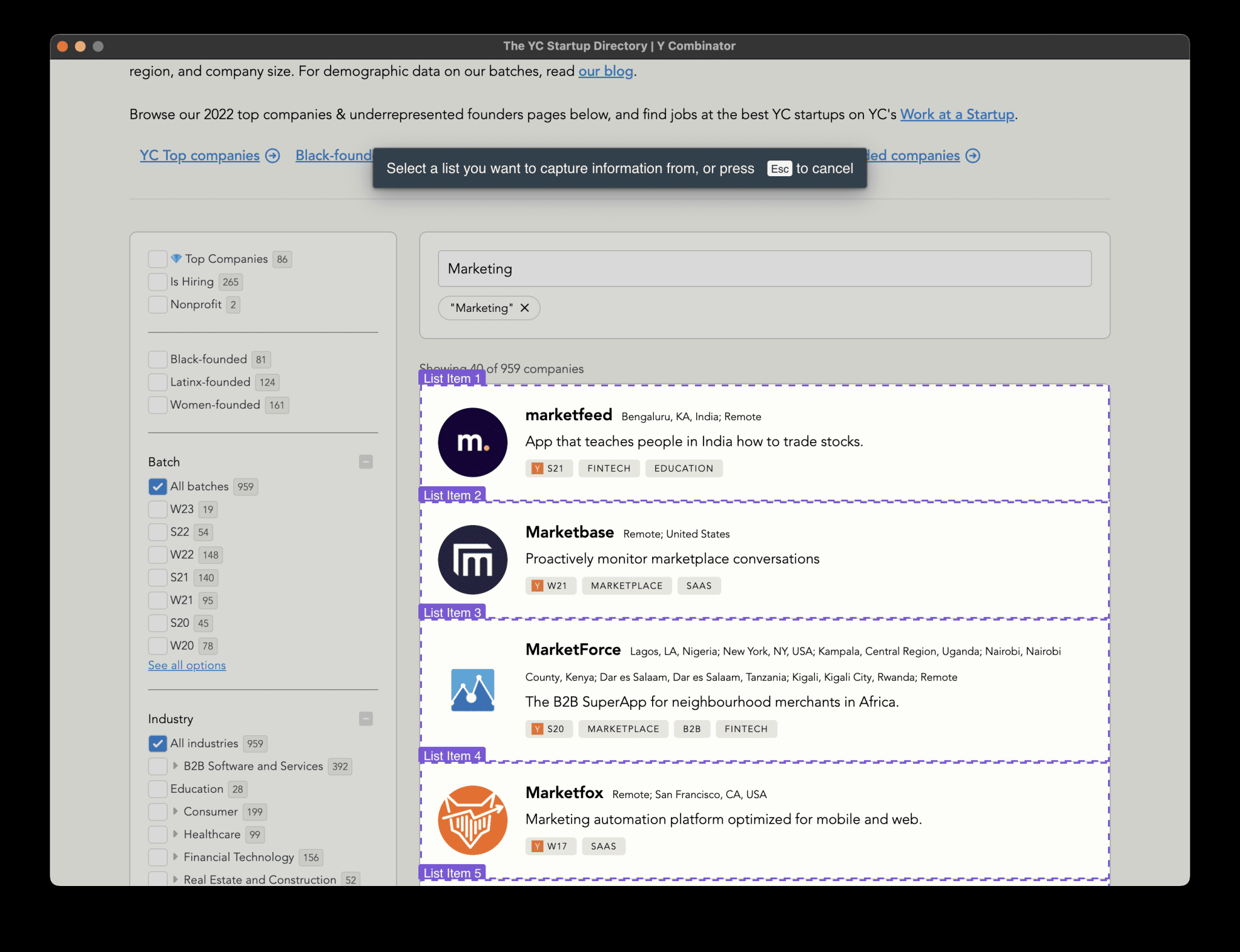

Table of Contents

- Terminology

- Hosted Models

- Local Models

- Python Libraries

- Blog Posts

- Videos

- Jupyter Notebooks

- Leaderboards

Terminology

There are a number of different names for generating JSON, although the meanings are basically the same:

- Structured Output: Using an LLM to generate any structured output, including JSON, XML, or YAML, regardless of the specific technique (e.g., function calling or guided generation).

- Function Calling: Providing the LLM with a hypothetical (or actual) function definition and prompting it to "call" the function in a chat or completion response. The LLM does not actually call the function; it only indicates the intent to call it via a JSON message.

- JSON Mode: Specifying that the LLM must generate valid JSON. Depending on the vendor, a schema may or may not be specified, and the LLM may create unexpected schemas.

- Tool Use: Providing the LLM with a selection of tools such as image generation, web search, and "function calling". The function calling parameter in API requests is now called "tools".

- Guided Generation: Constraining the LLM to generate text that follows a given specification, such as a context-free grammar.

- GPT Actions: ChatGPT invokes operations (i.e., API calls) on an API server based on the endpoints and parameters given in an OpenAPI specification. Unlike the feature called "function calling", this feature does call functions hosted on the API server.

Each of these terms can mean something slightly different depending on context, so I've simply named this list "Selected LLM JSON".
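To make the "function calling" definition concrete, here is a minimal sketch using only the standard library. The tool name `get_weather`, its schema, and the shape of the model's JSON message are illustrative assumptions, not any particular vendor's format. The point is that the model only emits a JSON message, and the application is what actually runs the function.

```python
import json

# Hypothetical tool definition in the JSON Schema style that many APIs accept.
# It would be sent to the model alongside the prompt; it is not executed.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    """Stand-in implementation; a real one would query a weather service."""
    return {"city": city, "forecast": "sunny"}


# Local registry mapping tool names to Python callables.
REGISTRY = {"get_weather": get_weather}


def dispatch(message: str) -> dict:
    """Parse the model's JSON 'call' and run the matching local function."""
    call = json.loads(message)
    func = REGISTRY[call["name"]]
    return func(**call["arguments"])


# What a model might emit instead of prose (assumed shape).
model_message = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch(model_message)
print(result)
```

In practice the result would usually be sent back to the model in a follow-up message so it can compose a final answer.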

Hosted Models

Parallel Function Calling

Below is a list of hosted API models that support multiple parallel function calls. Such calls might include checking the weather in several cities, or first finding a hotel's location and then checking the weather at that location.

- anthropic

  - claude-3-opus-20240229

  - claude-3-sonnet-20240229

  - claude-3-haiku-20240307

- azure/openai

  - gpt-4-turbo-preview

  - gpt-4-1106-preview

  - gpt-4-0125-preview

  - gpt-3.5-turbo-1106

  - gpt-3.5-turbo-0125

- cohere

  - command-r

- together_ai

  - Mixtral-8x7B-Instruct-v0.1

  - Mistral-7B-Instruct-v0.1

  - CodeLlama-34b-Instruct
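When a model returns several function calls in one response, the application decides how to run them. The sketch below is an assumption-laden illustration: the `tool_calls` structure, the `id` field, and `get_weather` are hypothetical and do not reproduce any provider's exact response format. It runs independent calls concurrently and keeps results in the original order.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def get_weather(city: str) -> str:
    """Placeholder tool; a real one would call a weather API."""
    return f"Weather in {city}: sunny"


TOOLS = {"get_weather": get_weather}

# Hypothetical model response containing two independent tool calls.
tool_calls = [
    {"id": "call_1", "name": "get_weather", "arguments": '{"city": "Paris"}'},
    {"id": "call_2", "name": "get_weather", "arguments": '{"city": "Tokyo"}'},
]


def run_call(call: dict) -> dict:
    """Decode the JSON-encoded arguments and execute one tool call."""
    args = json.loads(call["arguments"])
    return {"id": call["id"], "output": TOOLS[call["name"]](**args)}


def run_parallel(calls: list) -> list:
    """Execute independent calls concurrently; map() preserves input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(run_call, calls))


results = run_parallel(tool_calls)
for item in results:
    print(item["id"], item["output"])
```

Only calls that do not depend on each other can run this way. A chain such as "find the hotel, then check the weather there" still needs the first result before the second call can be issued.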

Local Models

Mistral 7B Instruct v0.3 (2024-05-22, Apache 2.0) is an instruction fine-tuned version of Mistral 7B that adds function calling support.

C4AI Command R+ (2024-03-20, CC-BY-NC, Cohere) is a 104B-parameter multilingual model with advanced Retrieval Augmented Generation (RAG) and tool use, optimized for reasoning, summarization, and Q&A in 10 languages. It supports quantization for more efficient use and demonstrates multi-step tool use for executing complex tasks.

Hermes 2 Pro - Mistral 7B (2024-03-13, Nous Research) is a 7B-parameter model that specializes in function calling, JSON structured output, and general tasks. It was trained on an updated OpenHermes 2.5 dataset and a new function calling dataset, using a special system prompt and multi-turn structure. It achieves 91% and 84% accuracy on the function calling and JSON mode evaluations, respectively.

Gorilla OpenFunctions v2 (2024-02-27, Apache 2.0 License, Charlie Cheng-Jie Ji et al.) interprets and executes functions based on JSON Schema objects, supports multiple languages, and can detect function relevance.

NexusRaven-V2 (2023-12-05, Nexusflow) is a 13B model that outperforms GPT-4 by up to 7% on zero-shot function calling, enabling efficient use of software tools. It is further instruction fine-tuned from CodeLlama-13B-instruct.

Functionary (2023-08-04, MeetKai) interprets and executes functions based on JSON Schema objects, supporting a variety of compute requirements and call types. It is compatible with OpenAI-python and llama-cpp-python for efficient execution of function calls in JSON generation tasks.

Hugging Face TGI enables JSON output and function calling support for multiple local models.

Python Libraries

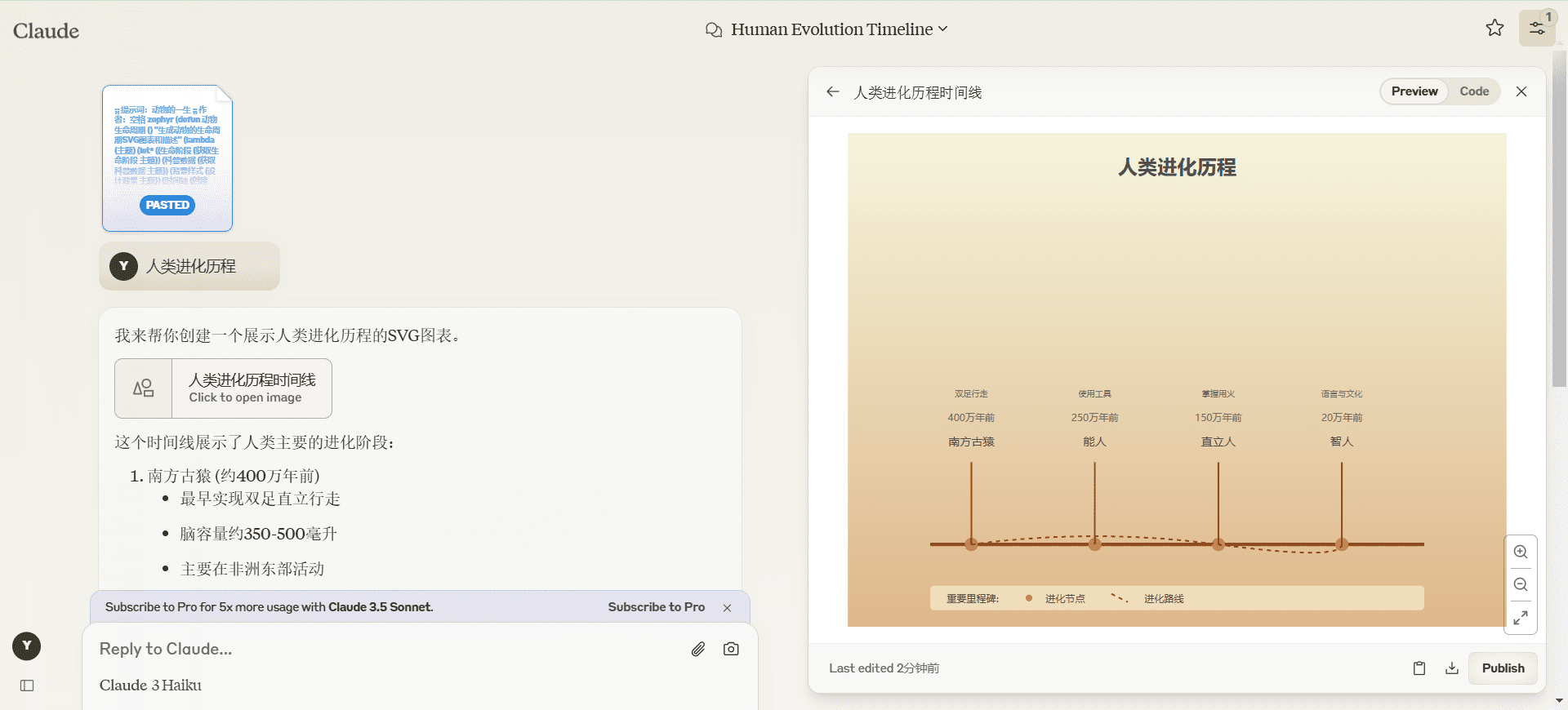

DSPy (MIT) is a framework for algorithmically optimizing language model (LM) prompts and weights. DSPy introduces typed predictors and signatures, which use Pydantic to improve on string-based fields by enforcing type constraints on inputs and outputs.

FuzzTypes (MIT) extends Pydantic with autocorrecting annotation types to improve data normalization and to handle complex types such as emails, dates, and custom entities.

guidance (Apache-2.0) supports constrained generation, combines Python logic with LLM calls, and supports reusing functions and calling external tools. It also optimizes prompts for faster generation.

Instructor (MIT) simplifies structured data generation with LLMs using function calling, tool calling, and constrained sampling modes. Validation is based on Pydantic, and multiple LLMs are supported.

LangChain (MIT) provides an interface for chains, integrations with other tools, and chains for applications. LangChain offers chains for structured output and function calling support across models.

LiteLLM (MIT) simplifies calling more than 100 LLMs in the OpenAI format and supports function calling and JSON mode.

LlamaIndex (MIT) provides structured output modules at different levels of abstraction, including output parsers for text-completion endpoints, Pydantic programs for mapping prompts to structured output, and predefined Pydantic programs for specific types of output.

Marvin (Apache-2.0) is a lightweight toolkit for building reliable natural language interfaces, with self-documenting tools for tasks such as entity extraction and with multimodal support.

Outlines (Apache-2.0) generates structured text using multiple models, Jinja templates, and support for regular expression patterns, JSON schemas, Pydantic models, and context-free grammars.

Pydantic (MIT) simplifies the use of data structures and JSON by defining data models, validation, JSON schema generation, and seamless parsing and serialization.

SGLang (MPL-2.0) allows JSON schemas to be specified using regular expressions or Pydantic models for constrained decoding. Its high-performance runtime accelerates JSON decoding.

SynCode (MIT) is a framework for grammar-guided generation with large language models (LLMs). It supports context-free grammars (CFGs) for Python, Go, Java, JSON, YAML, and more.

Mirascope (MIT) is an LLM toolkit that supports structured extraction and provides an intuitive Python API.

Magentic (MIT) lets you call LLMs from Python in three lines of code. Simply use the @prompt decorator to create LLM functions that return structured output, powered by Pydantic.

Formatron (MIT) is an efficient and extensible constrained decoding library that uses f-string templates to control language model output formats, with support for regular expressions, context-free grammars, JSON schemas, and Pydantic models. Formatron integrates seamlessly with a wide range of model inference libraries.

Transformers-cfg (MIT) extends Hugging Face Transformers with Context-Free Grammar (CFG) support via an EBNF interface. It implements grammar-constrained generation with minimal changes to Transformers code and supports JSON mode and JSON Schema.
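Several of the libraries above rely on constrained (guided) decoding: at each step, tokens that would break the target format are masked out before one is chosen. The toy sketch below illustrates only that idea. The vocabulary, the "grammar" (a fixed set of valid strings), and `fake_scores` are all invented for illustration; real libraries compile regular expressions or grammars into automata and operate on actual model logits.

```python
import json

# Toy vocabulary of "tokens" the fake model can emit.
VOCAB = ['{', '}', '"ok"', ':', 'true', 'false', 'maybe', ' ']

# Toy "grammar": the complete set of strings the output is allowed to be.
VALID_OUTPUTS = {'{"ok":true}', '{"ok":false}'}


def allowed(prefix: str, token: str) -> bool:
    """A token is legal if prefix + token is still a prefix of a valid output."""
    candidate = prefix + token
    return any(valid.startswith(candidate) for valid in VALID_OUTPUTS)


def fake_scores(prefix: str) -> dict:
    """Stand-in for model logits; it deliberately prefers invalid tokens."""
    preference = ['maybe', ' ', 'false', 'true', '"ok"', ':', '{', '}']
    return {tok: float(len(preference) - i) for i, tok in enumerate(preference)}


def constrained_decode() -> str:
    """Greedy decoding restricted to tokens that keep the output valid."""
    out = ""
    while out not in VALID_OUTPUTS:
        scores = fake_scores(out)
        legal = [tok for tok in VOCAB if allowed(out, tok)]
        out += max(legal, key=scores.__getitem__)
    return out


text = constrained_decode()
parsed = json.loads(text)  # always parses, because invalid tokens were masked
print(text, parsed)
```

Even though the fake model scores `maybe` highest at every step, the mask guarantees the final string is one of the valid JSON outputs.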

Blog Posts

How Fast Can Grammar-Structured Generation Be? (2024-04-12, .txt Engineering) demonstrates a near-zero-cost method for generating grammatically correct text. On the C grammar, it outperforms llama.cpp by up to 50x.

Structured Generation Improves LLM Performance: GSM8K Benchmark (2024-03-15, .txt Engineering) demonstrates consistent improvements across 8 models, emphasizing benefits such as "prompt consistency" and "thought control".

LoRAX + Outlines: Better JSON Extraction with Structured Generation and LoRA (2024-03-03, Predibase Blog) combines Outlines with LoRAX v0.8 to improve extraction accuracy and schema fidelity through structured generation, fine-tuning, and LoRA adapters.

FU, Show Me the Prompt (2023-02-14, Hamel Husain) provides a hands-on guide to quickly understanding hard-to-read LLM frameworks by intercepting their API calls with mitmproxy, in order to see what a tool actually does and evaluate whether it is necessary. It emphasizes reducing complexity and staying close to the underlying LLM.

Coalescence: 5x Faster LLM Inference (2024-02-02, .txt Engineering) demonstrates the "coalescence" technique for accelerating structured generation, which makes it faster than unstructured generation but may affect generation quality.

Why Pydantic is Essential for LLMs (2024-01-19, Adam Azzam) explains the emergence of Pydantic as a key tool that enables sharing data models via JSON Schema and reasoning between unstructured and structured data. It emphasizes the importance of quantifying the decision space, as well as the potential problem of LLMs overfitting to older schema versions.

Getting Started with Function Calling (2024-01-11, Elvis Saravia) introduces function calling for connecting LLMs to external tools and APIs, provides examples using the OpenAI API, and highlights potential applications.

Pushing ChatGPT's Structured Data Support to Its Limits (2023-12-21, Max Woolf) explores ways to leverage ChatGPT's capabilities using the paid API, JSON schemas, and Pydantic. It presents techniques for improving output quality and the benefits of structured data support.

Why Use Instructor? (2023-11-18, Jason Liu) explains the advantages of the library: an easy-to-read approach, support for partial extraction and various types, and a self-correction mechanism. It also recommends other resources on the Instructor site.

Using Grammars to Constrain llama.cpp Output (2023-09-06, Ian Maurer) combines context-free grammars with llama.cpp to improve output accuracy, especially for biomedical data.

Data Extraction with OpenAI Functions and its Python Library (2023-07-09, Simon Willison) demonstrates extracting structured data in a single API call via the OpenAI Python library and function calling, with code examples and suggestions for dealing with streaming limitations.
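Several posts above touch on validating model output and correcting it on failure. As a rough illustration of that pattern, the sketch below validates a JSON reply against a small hand-written schema and re-prompts with the error message. Everything here is an assumption for illustration: `REQUIRED_FIELDS`, the prompt text, and the fake `llm` callable are hypothetical, and libraries such as Pydantic and Instructor provide far richer validation than this standard-library stand-in.

```python
import json
from typing import Callable, Optional, Tuple

# Hypothetical target schema: field name -> required Python type.
REQUIRED_FIELDS = {"name": str, "age": int}


def validate(raw: str) -> Tuple[Optional[dict], Optional[str]]:
    """Return (data, None) on success or (None, error message) on failure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return None, "expected a JSON object"
    for field, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected):
            return None, f"field '{field}' must be of type {expected.__name__}"
    return data, None


def extract(prompt: str, llm: Callable[[str], str], max_attempts: int = 3) -> dict:
    """Call the model, validate its output, and re-prompt with the error."""
    for _ in range(max_attempts):
        data, error = validate(llm(prompt))
        if error is None:
            return data
        prompt = f"{prompt}\nYour previous answer was rejected: {error}. Try again."
    raise ValueError("no valid output after retries")


# Fake model for demonstration: wrong type on the first call, correct on the second.
_replies = iter(['{"name": "Ada", "age": "36"}', '{"name": "Ada", "age": 36}'])
person = extract("Extract the person as JSON.", lambda _prompt: next(_replies))
print(person)
```

Feeding the validation error back into the prompt gives the model a concrete reason for the rejection, which is the core of the self-correction idea.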

Videos

(2024-04-09, Simon Willison) shows how the datasette-extract plugin can extract data from unstructured text and images and populate database tables via GPT-4 Turbo's API.

(2024-03-25, Andrej Baranovskij) demonstrates function calling-based data extraction using Ollama, Instructor, and the Sparrow agent.

(2024-03-18, Prompt Engineer) presents Hermes 2 Pro, a 7-billion-parameter model that excels at function calling and generating structured JSON output. It demonstrates an accuracy of 90% for function calling and 84% in JSON mode, outperforming other models.

(2024-02-24, Sophia Yang) demonstrates connecting a large language model to external tools, generating function arguments, and executing functions. This can be further extended to generate or manipulate JSON data.

(2024-02-13, Matt Williams) clarifies that the structured output generated by the model is used for parsing and calling functions. It compares implementations, emphasizing that Ollama's approach is more concise and uses few-shot prompts to maintain consistency.

(2024-02-12, Jason Liu, Weights & Biases course) provides a concise course on using Pydantic to handle structured JSON output, function calling, and validation, covering the essentials of building robust pipelines and efficient production integrations.

(2023-10-10, Jason Liu, AI Engineer Conference) discusses the importance of Pydantic in structured prompting and output validation, introduces the Instructor library, and demonstrates advanced applications for reliable and maintainable LLM applications.

Jupyter Notebooks

Function calling with llama-cpp-python and the OpenAI Python client demonstrates the integration, including setup with the Instructor library, and provides examples of getting weather information and extracting user details.

Function calling with Mistral models shows how to connect a Mistral model to an external tool through a simple example involving a dataframe of payment transactions.

chatgpt-structured-data, by Max Woolf, demonstrates ChatGPT's function calling and structured data support, covering a variety of use cases and data structures.

Leaderboards

Berkeley Function-Calling Leaderboard (BFCL) is an evaluation framework for testing the function calling capabilities of LLMs. It consists of more than 2000 question-function-answer pairs across languages such as Python, Java, JavaScript, SQL, and REST APIs, with a focus on simple, multiple, and parallel function calls, as well as function relevance detection.
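To give a feel for what scoring question-function-answer pairs can involve, here is a deliberately simplified sketch. It is not BFCL's actual evaluation method: it assumes each prediction is a JSON string with `name` and `arguments` keys (a hypothetical format) and counts a prediction as correct only on an exact match with the reference call.

```python
import json


def calls_match(predicted: dict, expected: dict) -> bool:
    """Exact match on function name and on the full argument mapping."""
    return (
        predicted.get("name") == expected["name"]
        and predicted.get("arguments") == expected["arguments"]
    )


def accuracy(predictions: list, references: list) -> float:
    """Fraction of predictions that parse as JSON and match their reference."""
    correct = 0
    for raw, expected in zip(predictions, references):
        try:
            predicted = json.loads(raw)
        except json.JSONDecodeError:
            continue  # unparseable output counts as wrong
        if isinstance(predicted, dict) and calls_match(predicted, expected):
            correct += 1
    return correct / len(references)


# Hypothetical reference calls and model outputs.
references = [
    {"name": "get_weather", "arguments": {"city": "Paris"}},
    {"name": "add", "arguments": {"a": 1, "b": 2}},
]
predictions = [
    '{"name": "get_weather", "arguments": {"city": "Paris"}}',
    '{"name": "add", "arguments": {"a": 1, "b": 3}}',  # wrong argument value
]
score = accuracy(predictions, references)
print(score)
```

Exact matching is strict: it would reject a call that is semantically equivalent but formatted differently, which is one reason real benchmarks use more tolerant comparisons.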

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
