Cursor Model Comparison: DeepSeek V3/R1 vs. Claude 3.5 Sonnet, Tested

DeepSeek's Latest Models Tested: V3 vs. Claude 3.5 Sonnet, Which Is Better?

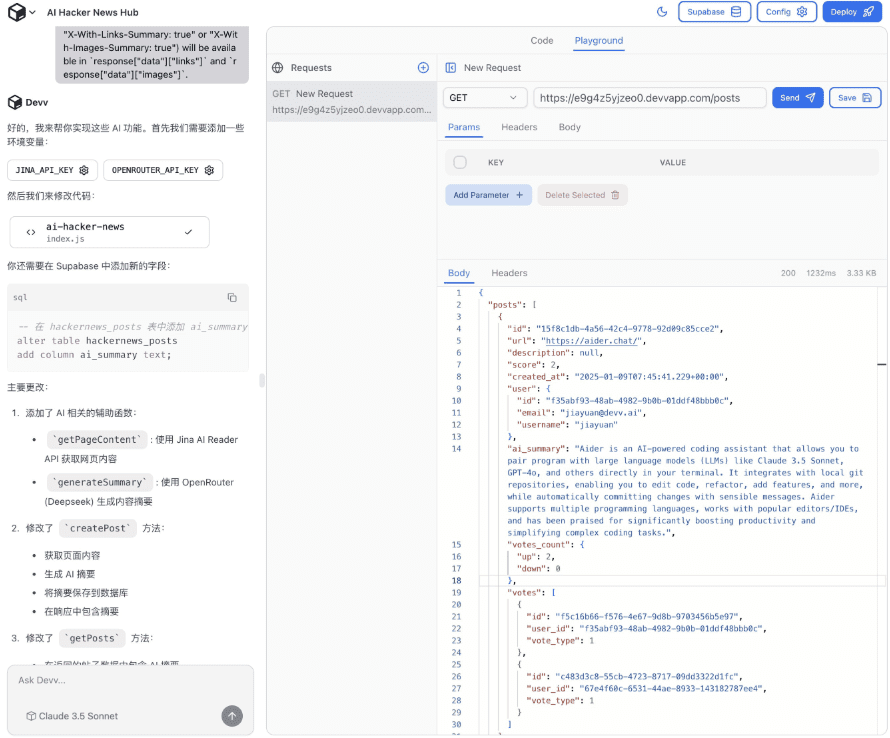

DeepSeek's models recently debuted on the Cursor platform, which has added two new models: DeepSeek V3 and R1. Many developers (including us) currently use Claude 3.5 Sonnet (latest version: claude-3-5-sonnet-20241022) as their main language model. To see how the new models perform in practice, we ran a real-world comparison of the two DeepSeek models against Claude 3.5 Sonnet.

Introduction to the DeepSeek model

DeepSeek has been getting a lot of attention lately for open-sourcing its powerful R1 model, which it claims performs comparably to OpenAI's o1, no easy feat. Cursor has always been quick to adopt new models, and as soon as the DeepSeek models went live, developers couldn't wait to test them in real-world applications.

Performance Comparison Reference

DeepSeek has officially published performance data comparing DeepSeek R1 and V3 with OpenAI's o1 and o1-mini models.

Overview of Testing Tasks

This comparison test contains two main parts:

- Chat mode -- Simulates an everyday development scenario: asking how to add a server action to a dialog component in a Next.js application.

- Code generation mode -- Simulates a code maintenance scenario: modifying a CircleCI configuration file to remove the front-end deployment configuration and the end-to-end (E2E) testing steps that are no longer needed.

Note that Cursor's Agent mode, in which the model can perform operations and invoke tools on its own, is currently available only for Anthropic models and GPT-4o, so Agent mode is not covered in this test.

Chat Mode Comparison

Task Description

We asked how to properly add a server action to a dialog component in a Next.js application. The exact prompt was:

"Please explain how to implement a server-side operation and pass it correctly to this dialog component."

To give the model more specific context, we also attached the file containing the dialog component's code.

Performance of DeepSeek R1

Given its high profile, DeepSeek R1 was naturally the first model we tested. However, we quickly ran into two obvious problems:

- Slow output streaming: R1 generates answers slowly, so it takes a long time to see the full output.

- The answer starts with a `<think>` block: before the actual answer, R1 outputs a long section wrapped in `<think>` tags that presents its reasoning process. This preprocessing would be acceptable if it significantly improved the final answer. Combined with slow streaming, however, it substantially delays the useful information: the model first streams the entire reasoning block and only then slowly streams the actual answer, so the total wait becomes very long. In principle, a Cursor rule could be set to skip the `<think>` section, but that was outside the scope of this test, which used default settings.
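To make the response structure described above concrete, here is a minimal TypeScript sketch that separates an R1-style response into its reasoning block and its final answer. It assumes, for illustration, that the reasoning appears once at the start of the response wrapped in `<think>...</think>`; the helpers `splitReasoning` and `isPastReasoning` are hypothetical and are not part of Cursor or any DeepSeek SDK.

```typescript
// Hypothetical helpers for illustration only. Assumption: the model emits a
// single <think>...</think> reasoning block before its final answer.
export interface SplitResponse {
  reasoning: string
  answer: string
}

const OPEN = '<think>'
const CLOSE = '</think>'

// Split a complete response into the reasoning block and the final answer.
export function splitReasoning(raw: string): SplitResponse {
  const start = raw.indexOf(OPEN)
  const end = raw.indexOf(CLOSE)
  // No complete reasoning block: treat the whole text as the answer.
  if (start === -1 || end === -1 || end < start) {
    return { reasoning: '', answer: raw.trim() }
  }
  return {
    reasoning: raw.slice(start + OPEN.length, end).trim(),
    answer: (raw.slice(0, start) + raw.slice(end + CLOSE.length)).trim(),
  }
}

// For a partially streamed response: true once the reasoning block has been
// closed, or if the stream never opened one.
export function isPastReasoning(partial: string): boolean {
  return !partial.includes(OPEN) || partial.includes(CLOSE)
}
```

The second helper shows why the wait feels long: while streaming, no part of the final answer can be shown until the closing `</think>` tag has arrived.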

In addition, R1 suggested installing the next-safe-action/hooks library to solve the problem, but never went on to explain how to use that library for the server-side operation. For a problem as simple as the one we posed, recommending an extra library seems like overkill.

Performance of DeepSeek V3

DeepSeek V3 also performs quite well; it even recommends useFormStatus, a new hook in React 19, which suggests that V3 has learned about newer front-end technologies and libraries. However, V3's implementation has a critical bug: it calls the server-side operation directly from a client component, which does not work in Next.js. (As a side note: Next.js requires server-side code to run in the server environment for security, performance, and code organization, while code in client components runs in the browser. Calling server-side code directly from a client component can cause errors such as server-side modules not being found or network requests failing; the call either throws at runtime or the server-side code never executes at all.)

Like R1, V3 streams its output slowly. However, since V3 does not produce R1's long `<think>` blocks, the overall experience is slightly better than with R1.

Claude 3.5 Sonnet's Performance

By comparison, Claude 3.5 Sonnet is the fastest, even in Cursor's "slow request" mode (which applies, for example, once the monthly allowance of fast requests has been used up and requests may be throttled). Sonnet doesn't recommend the newer React feature (useFormStatus) as V3 did, and it makes a similar mistake to V3 by calling the server-side operation directly from the client component. Still, its solution is much closer to a working answer: Sonnet suggests adding the 'use server' directive to the server-side action function to satisfy Next.js's requirements. (Background: 'use server' is a key directive used in Next.js 13 and later to explicitly declare a function as a server action. With the directive in place, Next.js recognizes the function as server-side code and allows client components to call it safely.) In fact, adding 'use server' is essentially all that is needed, so Sonnet's solution fundamentally solves the problem and is more practical than the answers from the DeepSeek models.
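For reference, here is a minimal sketch of the pattern Sonnet pointed toward: the server-side logic lives in its own module that begins with the 'use server' directive. The file path, the `saveDialogTitle` action, and its validation logic are hypothetical, chosen only for illustration; a real dialog would persist data where the placeholder comment sits.

```typescript
// app/actions.ts (hypothetical file and names, for illustration only)
'use server'

// Every exported async function in a module that starts with 'use server'
// is treated by Next.js as a server action: its body runs only on the
// server, and client components that import it get a callable reference.

export type SaveResult = { ok: true; title: string } | { ok: false; error: string }

// Deliberately not exported: a 'use server' module may only export async functions.
function validateTitle(title: unknown): SaveResult {
  if (typeof title !== 'string' || title.trim() === '') {
    return { ok: false, error: 'Title is required' }
  }
  return { ok: true, title: title.trim() }
}

export async function saveDialogTitle(title: string): Promise<SaveResult> {
  const result = validateTitle(title)
  if (!result.ok) return result
  // Placeholder: a real action would persist result.title here
  // (database write, API call, ...).
  return result
}
```

A client component (one marked 'use client') can then import `saveDialogTitle` from this module, or receive it as a prop from a server component, and await it in an event handler, e.g. `const result = await saveDialogTitle(value)`. Keeping the directive at module level matters because client components can only import server actions from a file with a top-level 'use server' directive.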

Code Generation Mode Comparison

Task Description

In this test, we provided a CircleCI configuration file for deploying a full-stack application consisting of a pure React front end and a Node.js back end. The original deployment process has multiple steps. Our goal was to modify this configuration file to accomplish both of the following:

- Remove all configuration related to front-end deployment.

- Recognize that, with only the back end remaining, E2E testing (end-to-end testing, usually used to test complete user flows) is no longer necessary, and remove the related configuration steps. (Background: E2E testing mainly simulates user behavior to verify the complete flow of front-end and back-end interaction. If the application has only a back end and no user interface, E2E testing is meaningless. Common E2E testing frameworks include Cypress and Selenium.)

Our prompt explicitly asked the model to "remove all sections related to front-end deployment", and we provided the full CircleCI configuration file as context.
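To make the expected result concrete, the following TypeScript sketch models the workflow as a plain list of jobs and applies the two rules from the task: drop front-end jobs and drop E2E jobs, while keeping deploy_production_api and cleaning up any `requires` entries that point at removed jobs. It is not a CircleCI tool and does not parse real YAML; only deploy-netlify and deploy_production_api come from the article, while the other job names and the name-matching patterns are assumptions.

```typescript
// Simplified, hypothetical model of a CircleCI workflow: each job has a name
// and the names of the jobs it requires.
interface Job {
  name: string
  requires: string[]
}

// Assumed naming conventions for the jobs the task asks us to remove.
const isFrontendJob = (name: string): boolean => /webapp|netlify|frontend/i.test(name)
const isE2EJob = (name: string): boolean => /e2e|cypress/i.test(name)

export function backendOnly(jobs: Job[]): Job[] {
  const removed = new Set(
    jobs.filter((j) => isFrontendJob(j.name) || isE2EJob(j.name)).map((j) => j.name),
  )
  return jobs
    .filter((j) => !removed.has(j.name))
    // Drop dangling `requires` entries so the remaining workflow stays valid.
    .map((j) => ({ ...j, requires: j.requires.filter((r) => !removed.has(r)) }))
}

// Hypothetical workflow: only deploy-netlify and deploy_production_api
// are named in the article.
const workflow: Job[] = [
  { name: 'test_api', requires: [] },
  { name: 'build_webapp', requires: [] },
  { name: 'e2e_cypress', requires: ['build_webapp', 'test_api'] },
  { name: 'deploy-netlify', requires: ['e2e_cypress'] },
  { name: 'deploy_production_api', requires: ['test_api', 'e2e_cypress'] },
]

console.log(backendOnly(workflow).map((j) => j.name))
// logs: [ 'test_api', 'deploy_production_api' ]
```

A real edit also has to remove steps inside the remaining jobs, such as the Cypress binary cache discussed below; this sketch only covers whole jobs and their dependencies.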

Performance of DeepSeek R1

We expected R1, with its `<think>` reasoning, to perform better on tasks that require understanding context and making multiple changes (Composer tasks). That was not the case:

- R1 missed some configuration clearly related to front-end deployment (for example, references to building the webapp were left in the config file). To its credit, it correctly recognized that the deploy-netlify step (deployment to Netlify, a platform commonly used to host static front-end assets) was no longer needed and removed it.

- At the same time, R1 mistakenly removed the back-end deployment step deploy_production_api, which would prevent the back-end service from being deployed. R1 also failed to recognize that the E2E tests were no longer relevant and kept their configuration.

Performance of DeepSeek V3

DeepSeek V3 performed slightly better than R1 on the code modification task. It removed some of the front-end deployment configuration that R1 missed, but it exposed new issues: for example, V3 kept the deploy-netlify step, which suggests it didn't fully understand the task. To its credit, V3 left the back-end deployment step intact and did not mistakenly delete it as R1 did. However, like R1, V3 failed to recognize that the E2E test section could be deleted.

Claude 3.5 Sonnet's Performance

The venerable Claude 3.5 Sonnet performed best in this code modification task:

- Sonnet successfully removed most of the commands related to front-end deployment, although, like V3, it failed to remove the deploy-netlify step.

- Sonnet also kept the back-end deployment steps intact, with no accidental deletions.

- Crucially, Sonnet correctly recognized that E2E testing was no longer necessary because only the back-end service remained. As a result, it removed all E2E test-related configuration, including the Cypress binary cache (the cache used to speed up Cypress tests). (Background: the Cypress binary cache stores the binaries Cypress needs to run, which speeds up the startup of subsequent test runs. Once the E2E tests are removed, this cache configuration should be removed too, to avoid redundant configuration.) This was the best solution in the test, demonstrating Sonnet's deep understanding of the task's intent and its ability to make more comprehensive code changes.

Summary

Cursor keeps introducing new AI models, bringing developers new options and possibilities. Although the tasks in this comparison were relatively simple, they were enough to give a first look at how both DeepSeek models perform in real-world development scenarios. Compared with Claude 3.5 Sonnet, DeepSeek's models have their own strengths and weaknesses.

In this test, Claude 3.5 Sonnet was clearly ahead of both DeepSeek models in response speed and output quality. DeepSeek's response speed may improve in future versions thanks to server optimization, better network distribution, and other factors, but based on the current results, Claude 3.5 Sonnet remains in the top tier for practicality and reliability.

Overall, this test shows that Claude 3.5 Sonnet is still the more mature and reliable choice on Cursor today. That said, DeepSeek's new models show real potential and deserve developers' continued attention and experimentation. As the models continue to be iterated and improved, they may well perform better in the future.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
